Oracle Cloud Infrastructure (OCI) GoldenGate is a fully managed, native cloud service that moves data in real time and at scale. OCI GoldenGate processes data as it moves from one or more source data management systems to target endpoints. You can also design, run, orchestrate, and monitor data replication tasks without allocating or managing compute environments. OCI GoldenGate now supports more than 30 sources and targets, enabling real-time, data-driven solutions across a wide cross-section of enterprise, non-Oracle, big data, and multi-cloud use cases.

For multi-cloud and big data use cases, heartbeat information is particularly useful for determining the end-to-end lag between a source and a target system.

Prerequisites:

- OCI GoldenGate deployments already created for the source and target endpoints.

- A GoldenGate user already created in the source database (see Preparing the Database for Oracle GoldenGate).

- Source and target connections already created and attached to the corresponding OCI GoldenGate deployments.

Section I: OCI GoldenGate (Oracle Deployment): Heartbeat Information

For the scope of this blog post, we will set up a Heartbeat table for OCI GoldenGate (Oracle & Big Data) endpoints.

- Log in to the source OCI GoldenGate (Oracle) deployment.

- Click the hamburger menu on the left side, and then click Configuration.

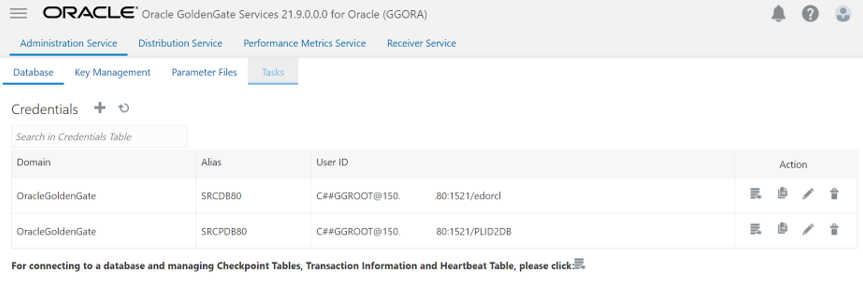

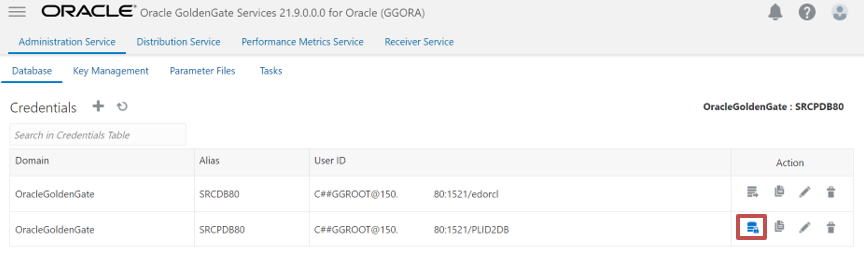

- To connect to a multi-tenant Oracle database, you need to add connections for the container database (CDB) and pluggable databases (PDBs), as shown below:

- Under Database, click the Connect to Database icon for the PDB connection so that you can add the heartbeat table.

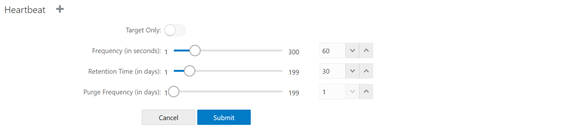

- Scroll down and click the “+” icon beside Heartbeat. Keep the default values or change them as needed, and then click Submit.

FREQUENCY number_in_seconds

Specifies how often the heartbeat seed table and heartbeat table are updated, that is, how frequently heartbeat records are generated. The default is 60 seconds.

RETENTION_TIME number_in_days

Specifies the retention time, in days, after which heartbeat entries in the history table are purged. The default is 30 days.

PURGE_FREQUENCY number_in_days

Specifies how often the purge scheduler runs to delete entries older than the retention time from the heartbeat history table. The default is 1 day.
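To get a feel for how these three options interact, the following minimal Python sketch estimates how many heartbeat records a single replication path generates per day and roughly how many rows could accumulate in the history table. It is back-of-the-envelope arithmetic only. It assumes one history row per heartbeat per path, and that each purge run removes everything older than the retention time. The function names are made up for illustration and are not part of any GoldenGate API.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def heartbeats_per_day(frequency_seconds: int = 60) -> int:
    """Heartbeat records generated per day for one path.

    Assumption: exactly one heartbeat per FREQUENCY interval.
    """
    if frequency_seconds <= 0:
        raise ValueError("frequency_seconds must be positive")
    return SECONDS_PER_DAY // frequency_seconds


def max_history_rows(frequency_seconds: int = 60,
                     retention_days: int = 30,
                     purge_frequency_days: int = 1) -> int:
    """Rough upper bound on history rows kept for one path.

    Assumption: rows older than RETENTION_TIME survive until the next
    purge run, so up to retention_days + purge_frequency_days worth of
    rows can exist at any one time.
    """
    days_kept = retention_days + purge_frequency_days
    return heartbeats_per_day(frequency_seconds) * days_kept


if __name__ == "__main__":
    # Defaults from the option descriptions: 60 seconds, 30 days, 1 day.
    print("heartbeats per day:", heartbeats_per_day())
    print("approx. max history rows:", max_history_rows())
```

Under these assumptions, lowering FREQUENCY gives finer-grained lag readings at the cost of proportionally more history rows, while RETENTION_TIME and PURGE_FREQUENCY together bound how long those rows are kept.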

- Go back to the Overview tab, where you can now add an Extract. The Extract process captures data from the source database and writes it to a trail file.

- Once the Extract is created, you can confirm that the heartbeat table was added successfully by checking the Extract's statistics.

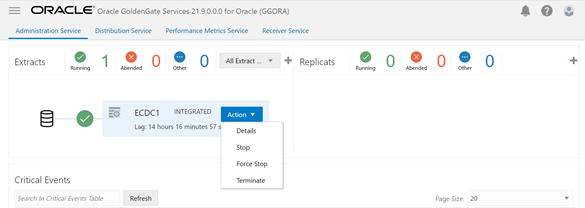

- Click Action -> Details on the Extract.

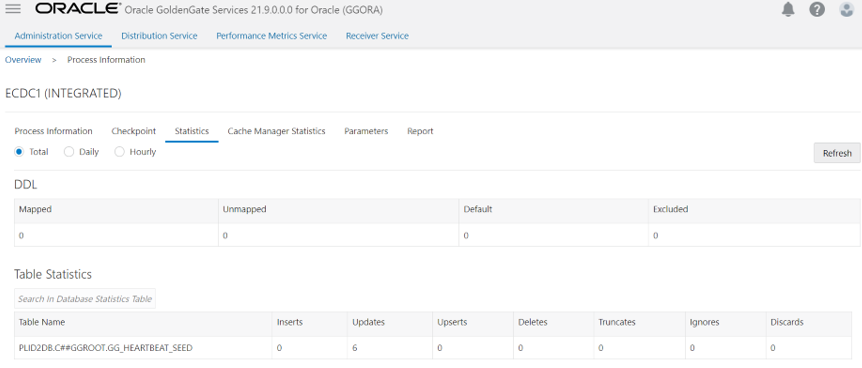

- Go to the Statistics tab, where you should see updates to the GG_HEARTBEAT_SEED table, as shown below.

- Note: If the Extract or Replicat was created before the heartbeat table was added, restart the Extract and Replicat processes so that they pick up the heartbeat information.

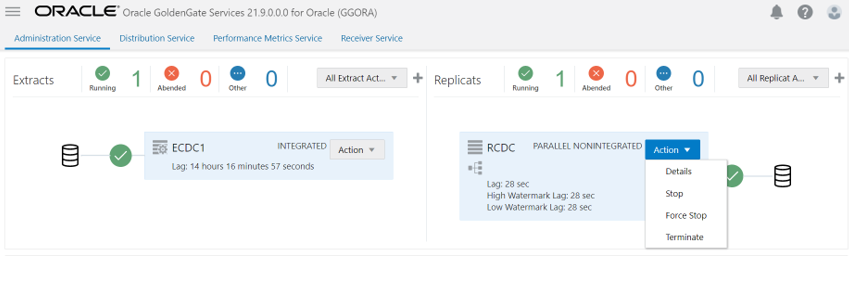

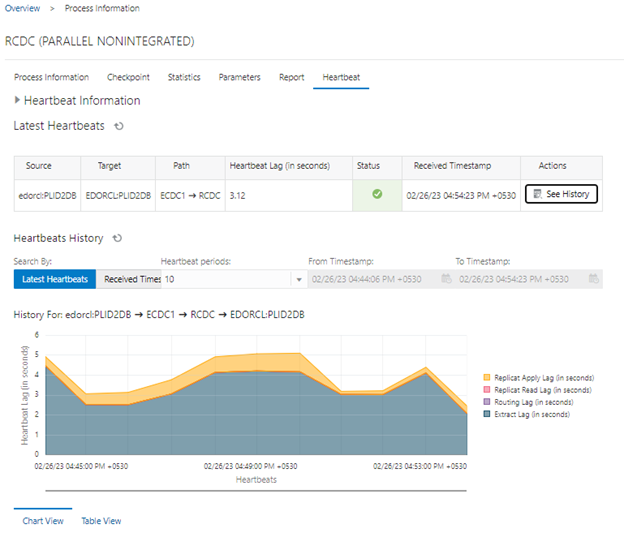

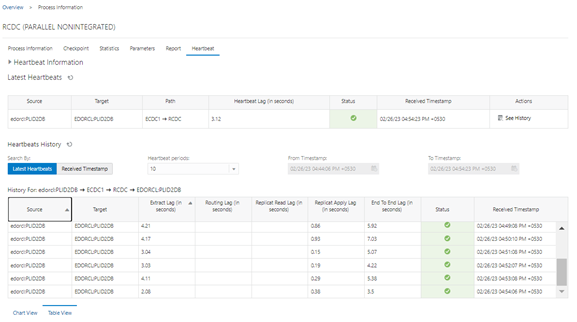

- To check the end-to-end lag and heartbeat information, click Action -> Details on the Replicat.

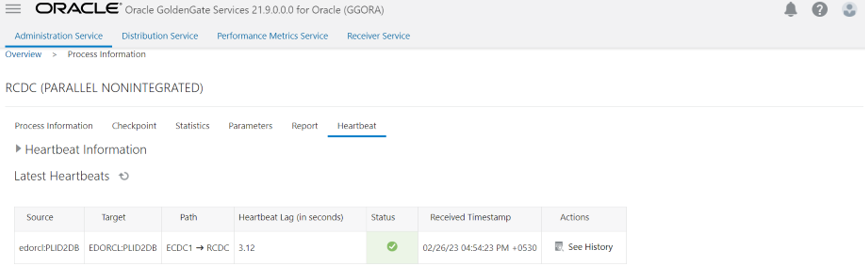

- Go to the Heartbeat tab to check the heartbeat status and lag.

- To check the lag at each hop in the replication path, click See History under Actions.

- For a simplified view of the lag at each hop (Extract lag, Replicat lag, and end-to-end lag), click the “Table View” option at the bottom.

- Note: The routing lag is not displayed here because the Extract and Replicat run in the same OCI GoldenGate deployment without a distribution path configured.

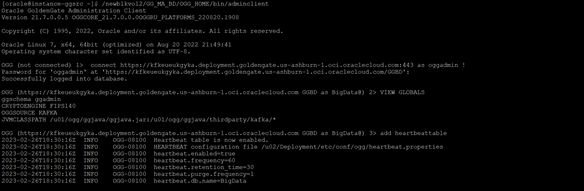
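The per-hop breakdown described above can be pictured with a small Python sketch. It is a simplified model, not GoldenGate's actual calculation. It assumes each heartbeat carries a timestamp for when it was generated at the source and for when each process handled it. The `HeartbeatRecord` class, its field names, and the sample timestamps are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional


@dataclass
class HeartbeatRecord:
    """Hypothetical timestamps collected as one heartbeat travels the path."""
    generated_at: datetime                  # heartbeat updated in the source database
    extract_at: datetime                    # Extract captured the heartbeat
    replicat_at: datetime                   # Replicat applied the heartbeat
    routing_at: Optional[datetime] = None   # distribution path, if one is configured


def hop_lags(hb: HeartbeatRecord) -> Dict[str, timedelta]:
    """Split the end-to-end lag into one entry per hop."""
    lags = {"extract": hb.extract_at - hb.generated_at}
    previous = hb.extract_at
    if hb.routing_at is not None:
        # A routing hop only exists when a distribution path is in use.
        lags["routing"] = hb.routing_at - previous
        previous = hb.routing_at
    lags["replicat"] = hb.replicat_at - previous
    lags["end_to_end"] = hb.replicat_at - hb.generated_at
    return lags


if __name__ == "__main__":
    # Illustrative timestamps only; no distribution path, so no routing hop.
    sample = HeartbeatRecord(
        generated_at=datetime(2024, 1, 1, 12, 0, 0),
        extract_at=datetime(2024, 1, 1, 12, 0, 2),
        replicat_at=datetime(2024, 1, 1, 12, 0, 5),
    )
    for hop, lag in hop_lags(sample).items():
        print(hop, lag.total_seconds(), "seconds")
```

In this model the per-hop lags always sum to the end-to-end lag, and the routing entry is simply absent when the Extract and Replicat share a deployment, which mirrors what the Table View shows in that case.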

Section II: OCI GoldenGate (Big Data) deployment: Heartbeat Information

- The heartbeat records handled by OCI GoldenGate (Big Data) are written internally to HEARTBEATTABLE files.

- To enable HEARTBEATTABLE, log in to the OCI GoldenGate (Big Data) deployment from the Admin Client.

`connect <deployment_URL>:443 as <username> !`

- Execute ADD HEARTBEATTABLE.

- Once the heartbeat table is added, execute the LAG command on the Replicat.

In conclusion, heartbeat information is a helpful tool for determining end-to-end replication latency. It provides a real-time indication of lag at each hop in the replication stream, which helps you quickly identify issues or bottlenecks that may be affecting performance. As organizations continue to rely on data replication for critical business processes, the ability to monitor and optimize replication latency will become increasingly important.