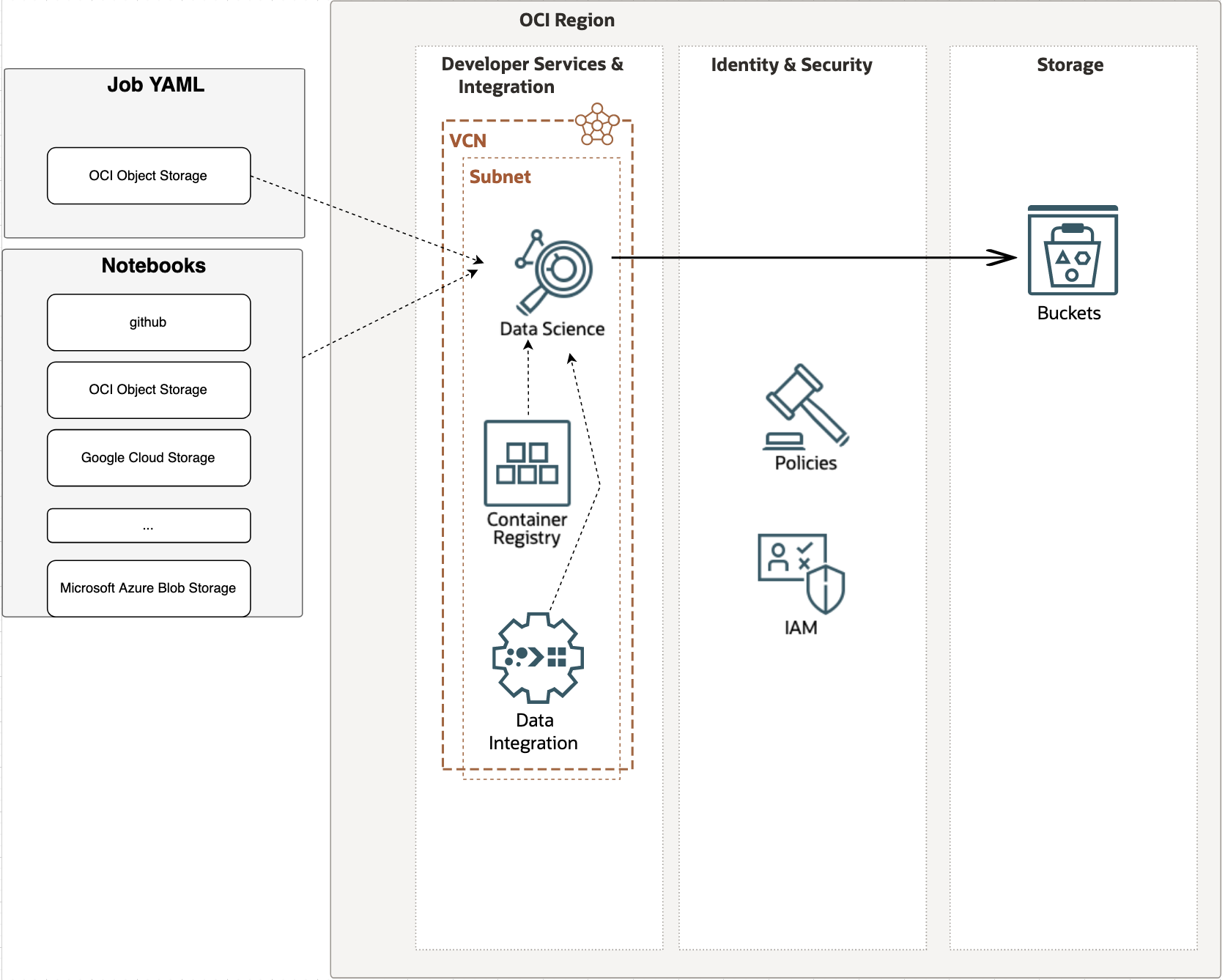

In this post we will look at the architecture for orchestrating and scheduling OCI Data Science notebooks as part of other data pipelines in OCI Data Integration, this is a follow on with an alternative approach to what was discussed here. The following diagram illustrates this architecture, it leverages the Oracle Accelerated Data Science SDK (see here) which is a python SDK for the many Data Science features, this SDK is used from a container image published to OCI container registry that triggers the notebook in OCI Data Science, the REST task in OCI Data Integration executes the data science job, the Data Science job is defined in YAML and pulled from OCI Object Storage, the task then polls on the OCI Data Science job run to complete using the GetJobRun API. The benefit of this approach is you can use the same container image for many notebook jobs, the job definitions are stored in YAML in OCI Object Storage.

Let’s see how this is actually done.

Using the Accelerated Data Science SDK

The contaienr image and supporting scripts are below You will need to download these, build the container image and push the container to OCI.

We use python to invoke the notebook.

|

|

The dsstatic.py file depends on the Data Science ADS it leverages an environment variable NOTEPAD_YAML_URL;

|

|

The sample YAML is below, this has the notebook along with the OCI Data Science projects and other info. For the demonstration I am using a publicly available ipynb and the ME_STANDALONE network option;

- noteBook the Python notebook URL to use, for example you can use the sample provided here to test https://raw.githubusercontent.com/tensorflow/docs/master/site/en/tutorials/customization/basics.ipynb

- jobName provide the job name for the OCI Data Science job that will be launched

- logGroupId the log group Ocid to be used.

- projectId the OCI Data Science project Ocid – create a project to be used

- compartmentId the compartment Ocid where the job will be created

- outputFolder the output folder in OCI to write the results ie. oci://bucketName@namespace/objectFolderName

The example is based on the Python/YAML example in [https://accelerated-data-science.readthedocs.io/en/latest/user_guide/jobs/run_notebook.html#tensorflow-example] (TensorFlow example) the OCI Data Science accelerated data science documentation. Its using a small shape for demonstration, with OCI you can run notebooks and data science jobs on all kinds of shapes including GPUs!

|

|

Permissions

Example permissions to test OCI Data Science and from OCI Data Integration.

Resource principal for testing from OCI Data Science for example (replace with your information);

- allow any-user to manage data-science-family in compartment YOURCOMPARTMENT where ALL {request.principal.type=’datasciencejobrun’}

- allow any-user to manage object-family in compartment YOURCOMPARTMENT where ALL {request.principal.type=’datasciencejobrun’}

- allow any-user to manage log-groups in compartment YOURCOMPARTMENT where ALL {request.principal.type=’datasciencejobrun’}

- allow any-user to manage log-content in compartment YOURCOMPARTMENT where ALL {request.principal.type=’datasciencejobrun’}

Resource principal for testing from Workspaces for example (replace with your information);

- allow any-user to manage data-science-family in compartment YOURCOMPARTMENT where ALL {request.principal.type=’disworkspace’,request.principal.id=’YOURWORKSPACEID’}

- allow any-user to manage object-family in compartment YOURCOMPARTMENT where ALL {request.principal.type=’disworkspace’,request.principal.id=’YOURWORKSPACEID’}

- allow any-user to manage log-groups in compartment YOURCOMPARTMENT where ALL {request.principal.type=’disworkspace’,request.principal.id=’YOURWORKSPACEID’}

- allow any-user to manage log-content in compartment YOURCOMPARTMENT where ALL {request.principal.type=’disworkspace’,request.principal.id=’YOURWORKSPACEID’}

Container Build and Push Deployment

Follow the regular container build and push pattern. I will not go through this here, there are tutorials on this here that are useful to go through;

https://docs.oracle.com/en-us/iaas/Content/Registry/Concepts/registryprerequisites.htm

Orchestrating in OCI Data Integration

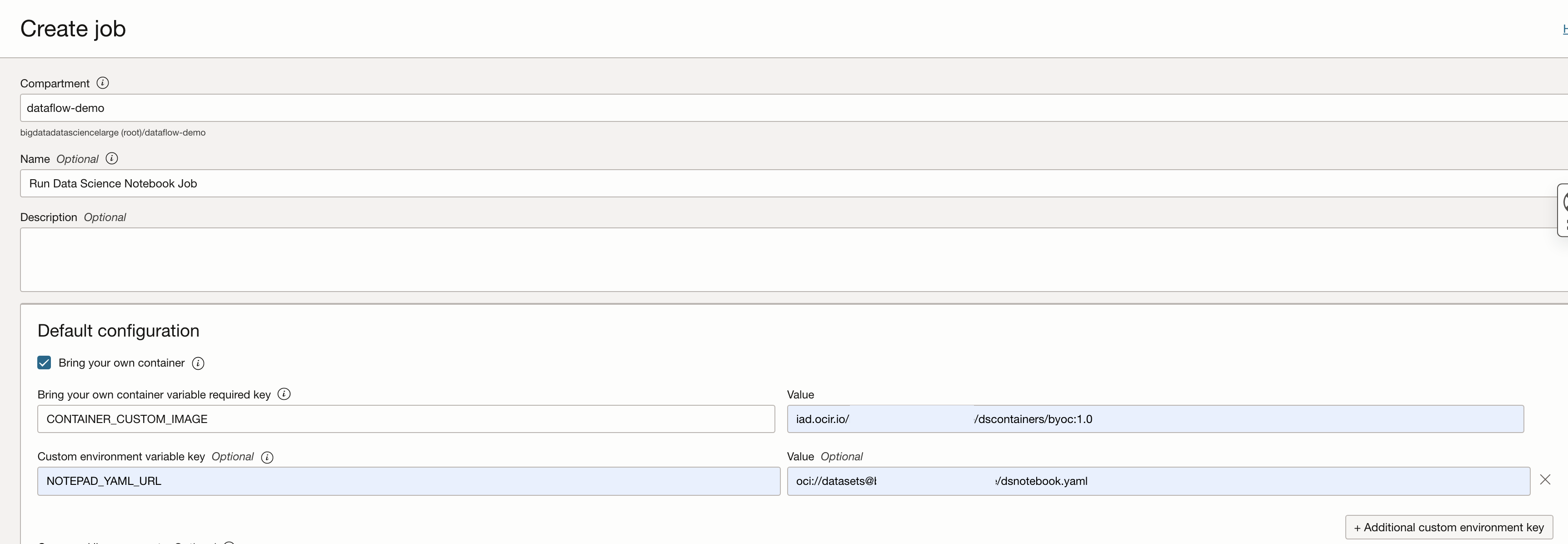

Create the Data Science Job using the Bring Your Own Container option – also pass the environment variable with the YAML (oci://bucket@namespace/object);

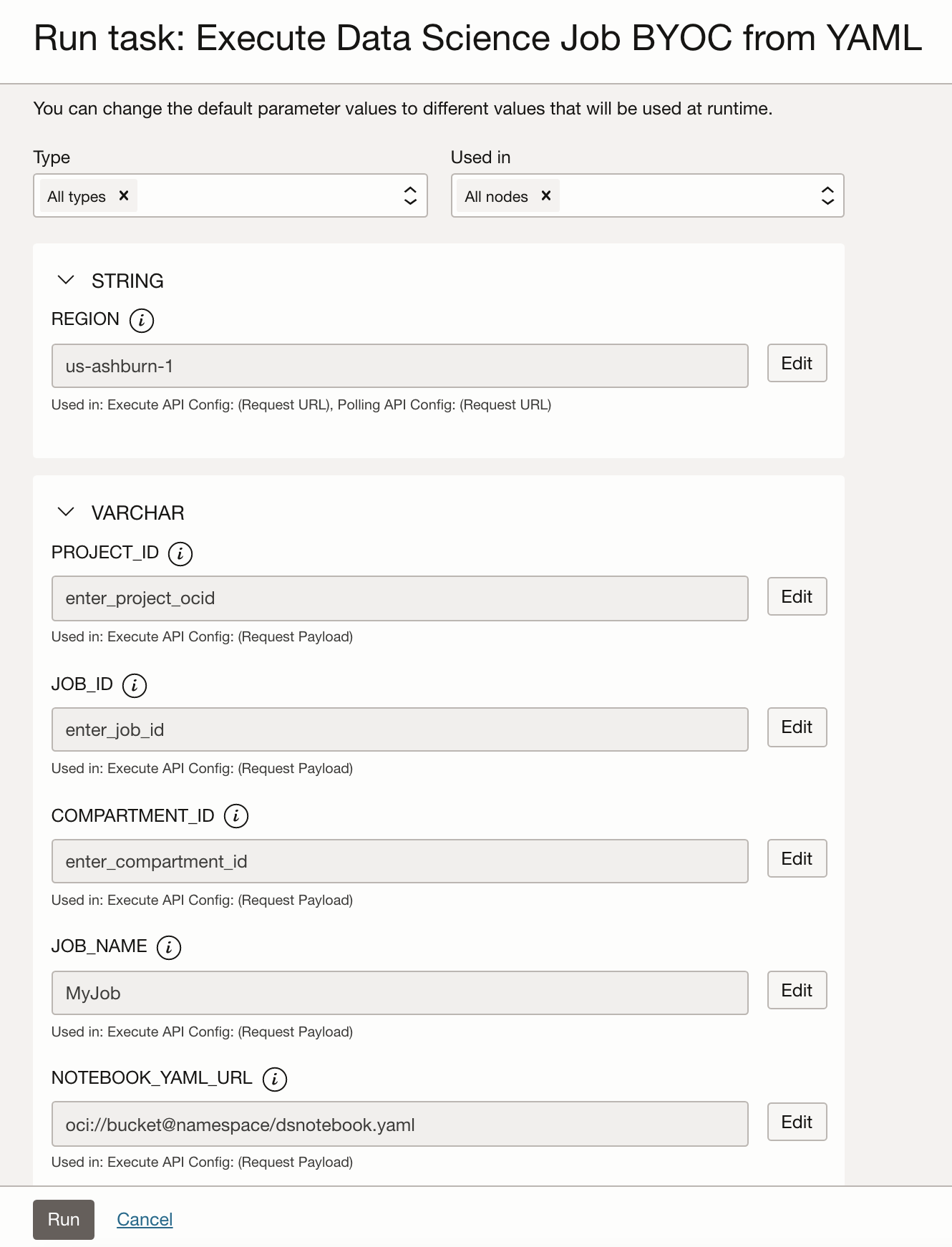

We will now use the OCI Data Science job id when we run the job within OCI Data Integration, it is a parameter in the REST task.

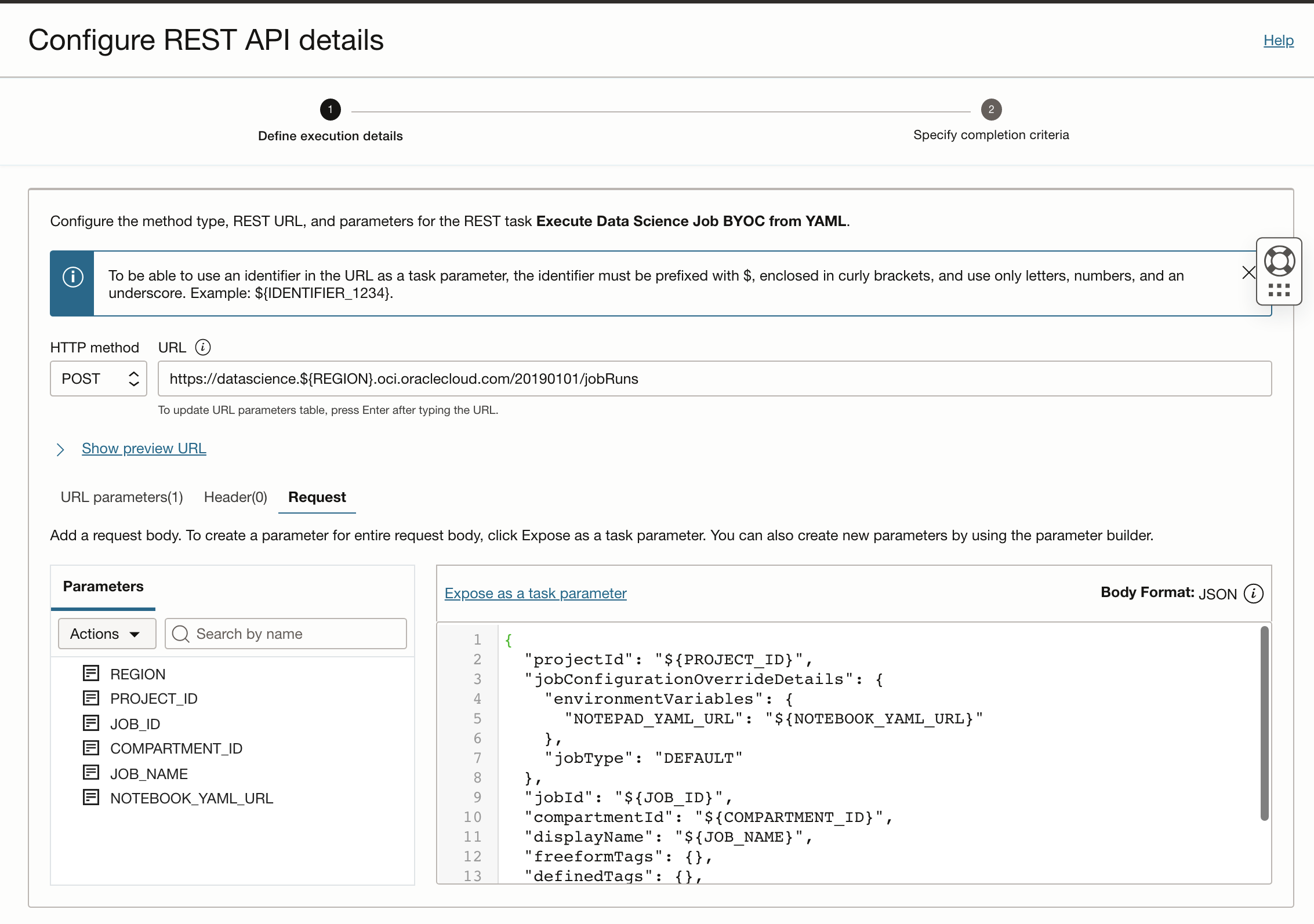

See the post here for creating REST tasks from a sample collection, the REST task calls the OCI Data Science API and then polls on the Data Science GetJobRun API [https://blogs.oracle.com/dataintegration/post/oci-rest-task-collection-for-oci-data-integration] (Invoking Data Science via REST Tasks), there is a REST task for BYOC;

The execution notebook can be orchestrated and scheduled from within OCI Data Integration. Use the Rest Task to execute the notebook.

You can get this task using the postman collection from here.

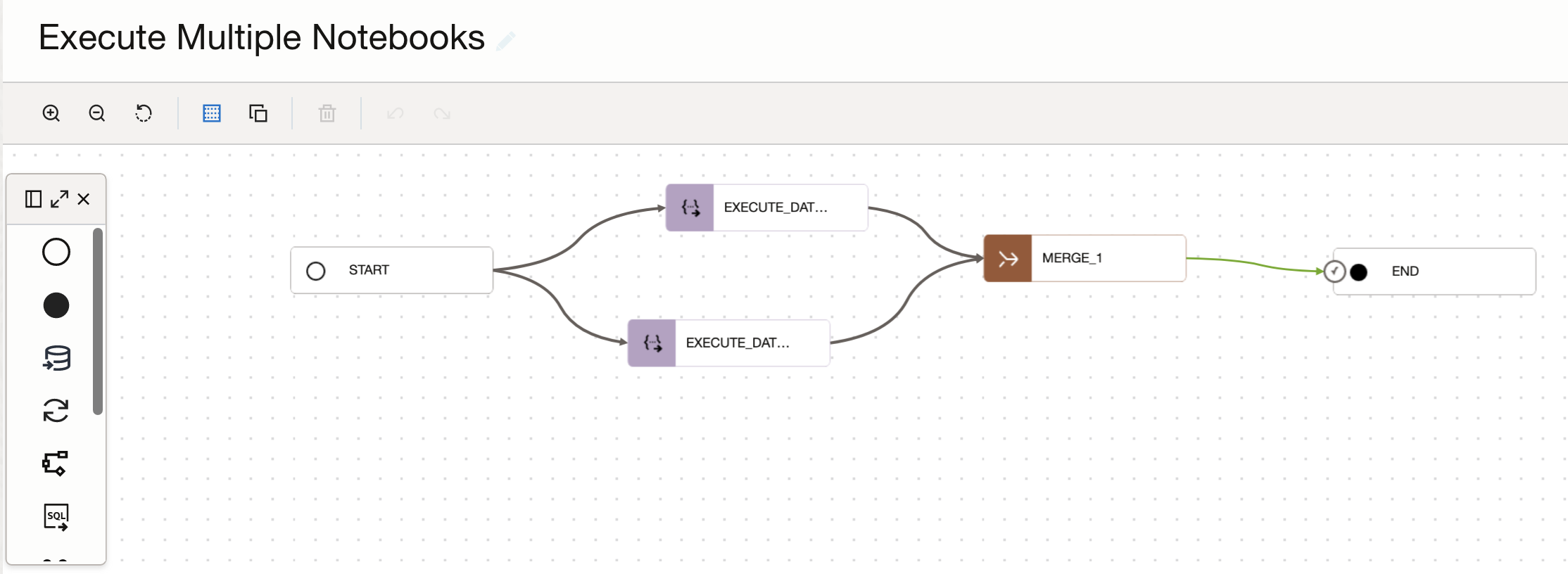

You can use this task in a data pipeline and run multiple notebooks in parallel and add in additional tasks before and after;

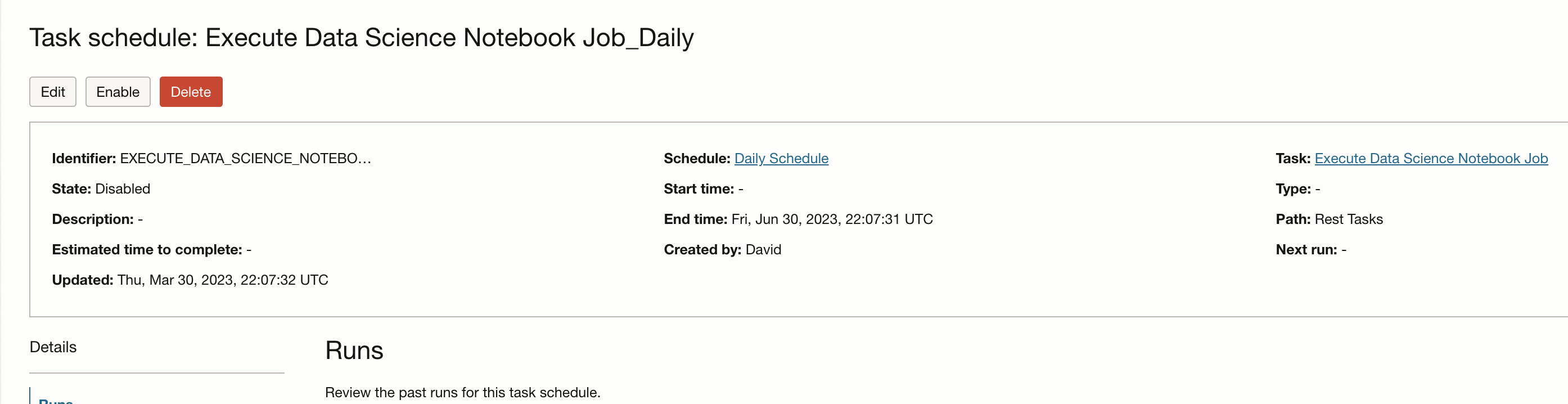

You can schedule this task to run on a recurring basis or execute this via any of the supported SDKs;

That’s a high level summary of the capabilities, see the documentation links in the conclusion for more detailed information. As you can see, we can leverage OCI Data Science to trigger the notebook execution and monitor from within OCI Data Integration.

Want to Know More?

For more information, review the Oracle Cloud Infrastructure Data Integration documentation, associated tutorials, and the Oracle Cloud Infrastructure Data Integration blogs.

Organizations are embarking on their next generation analytics journey with data lakes, autonomous databases, and advanced analytics with artificial intelligence and machine learning in the cloud. For this journey to succeed, they need to quickly and easily ingest, prepare, transform, and load their data into Oracle Cloud Infrastructure and schedule and orchestrate many otger types. oftasks including Data Science jobs. Oracle Cloud Infrastructure Data Integration’s Journey is just beginning! Try it out today!