In a hub configuration, Oracle GoldenGate Extract runs remotely on a separate server—often referred to as the hub—rather than on the source database machine. While this setup improves deployment flexibility and centralizes data movement, it introduces a critical performance dependency: the network connection between the database server and the hub.

Why Network Performance Matters

The capture component within the source database communicates with the Extract process over a SQL*Net connection. This means that network bandwidth and latency directly affect Extract performance.

- Bandwidth must consistently exceed the rate of workload generation. If the network link is under-provisioned, capture will spend excessive time in “network wait,” unable to push changes efficiently. This leads to congestion in the extract pipeline and triggers flow control mechanisms, throttling overall throughput.

- Latency, especially when exceeding ~100 milliseconds, compounds the problem. High-latency environments force the capture process to wait longer for acknowledgments, reducing efficiency and delaying transaction delivery downstream.
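
The bandwidth requirement above can be sanity-checked with simple arithmetic. The Python sketch below is illustrative only: the function name, the 80% usable-capacity factor, and the sample numbers are assumptions, not values from GoldenGate or Oracle documentation. It compares the usable capacity of a link with the rate at which the workload generates change data.

```python
def link_headroom(change_rate_mb_per_s: float, link_mbit_per_s: float,
                  usable_fraction: float = 0.8) -> float:
    """Return usable link capacity divided by the change rate.

    A result below 1.0 means the link cannot keep up with the workload.
    usable_fraction is an assumed allowance for protocol overhead and
    other traffic sharing the link.
    """
    if change_rate_mb_per_s <= 0:
        raise ValueError("change rate must be positive")
    # Convert megabits per second to megabytes per second, then discount.
    usable_mb_per_s = link_mbit_per_s / 8 * usable_fraction
    return usable_mb_per_s / change_rate_mb_per_s


# Hypothetical numbers: 60 MB/s of peak change data over a 1 Gbit/s link.
ratio = link_headroom(change_rate_mb_per_s=60, link_mbit_per_s=1000)
print(f"headroom ratio: {ratio:.2f}")
```

With these assumed inputs the ratio is about 1.67, so the link has some headroom. The check should be made against peak rates rather than averages, since the requirement is that bandwidth exceed the workload consistently.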

How to Detect a Network Bottleneck

If you’re troubleshooting performance issues in a hub-based deployment, here are signs that network limitations are the root cause:

- The Extract process on the remote host appears mostly idle—low CPU and memory usage, minimal trail write activity, and healthy disk I/O.

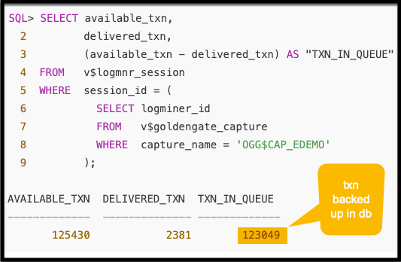

- A large number of LCRs accumulates in memory.

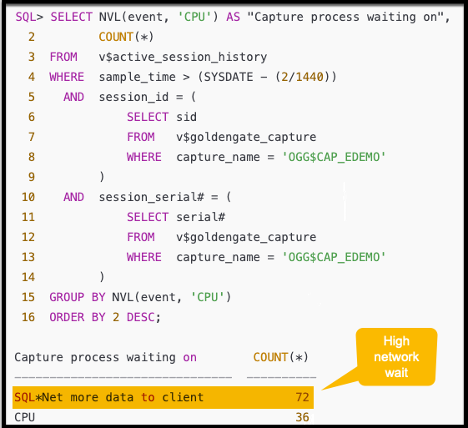

- Meanwhile, the capture component is dominated by “SQL*Net more data to client” waits, indicating that it is struggling to push data to the remote Extract.

Figure: the capture component waiting on the network.
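
One way to quantify the last symptom is to compute how much of the capture session's wait time goes to the network event. The sketch below is a minimal example under stated assumptions: the query text is shown for reference only, the `:capture_sid` bind and the way the capture session is identified are hypothetical, and the sample rows are made up. `V$SESSION_EVENT` also reports idle events, which you may want to filter out before drawing conclusions.

```python
# Reference query only. V$SESSION_EVENT is a standard dynamic view, but the
# bind variable name and how you locate the capture session are assumptions.
WAIT_QUERY = """
SELECT event, time_waited_micro
FROM   v$session_event
WHERE  sid = :capture_sid
"""

NETWORK_EVENT = "SQL*Net more data to client"


def network_wait_share(rows):
    """Return the fraction of total wait time spent on NETWORK_EVENT.

    rows: iterable of (event_name, time_waited_micro) tuples, for example
    the result set of WAIT_QUERY.
    """
    rows = list(rows)  # allow generators; we iterate twice
    total = sum(waited for _, waited in rows)
    if total == 0:
        return 0.0
    network = sum(waited for event, waited in rows if event == NETWORK_EVENT)
    return network / total


# Made-up sample data, not real measurements.
sample = [
    ("SQL*Net more data to client", 900_000),
    ("some other event", 100_000),
]
print(f"network share of wait time: {network_wait_share(sample):.0%}")
```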

Additionally, you might observe cascading effects in the LogMiner pipeline:

- LogMiner Reader waits on “redo allocation slot” or “logminer reader: buffer wait”.

- LogMiner Preparer waits on “logminer preparer: memory”.

- LogMiner Builder stalls on “logminer builder: queue full wait”.

These delays occur when Logical Change Records (LCRs) cannot be sent over the network as fast as they are produced. If the capture process cannot flush them, either because the link is slow or saturated or because the Extract is too busy to receive them, the LCRs pile up in memory. A high number of chunks waiting to be sent causes the LogMining engine to initiate flow control and throttle the upstream components.
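
The effect of flow control can be illustrated with a toy model. The sketch below is not how the LogMining engine is implemented; the function, the tick-based timing, and all numbers are assumptions chosen only to show that once a backlog threshold throttles the producer, end-to-end throughput settles at the rate the network can drain.

```python
def simulate(produce_per_tick, drain_per_tick, threshold, ticks):
    """Toy backpressure model.

    Each tick the producer adds chunks unless the backlog has reached the
    threshold (flow control), then the network drains up to drain_per_tick.
    Returns (chunks_sent, ticks_spent_throttled).
    """
    backlog = 0
    sent = 0
    throttled_ticks = 0
    for _ in range(ticks):
        if backlog >= threshold:
            throttled_ticks += 1      # flow control: producer is paused
        else:
            backlog += produce_per_tick
        drained = min(backlog, drain_per_tick)
        backlog -= drained
        sent += drained
    return sent, throttled_ticks


# Hypothetical rates: the producer could emit 10 chunks per tick,
# but the network drains only 4.
sent, throttled = simulate(produce_per_tick=10, drain_per_tick=4,
                           threshold=20, ticks=100)
print(f"sent={sent}, throttled ticks={throttled}")
```

In this model the producer spends a large share of its time paused, and the number of chunks delivered equals the drain rate multiplied by the number of ticks, regardless of how fast the producer could run.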

Resolution

When troubleshooting performance in hub-based Oracle GoldenGate Extract configurations, it’s crucial to distinguish between the two fundamental aspects of a network connection: bandwidth and latency.

- Bandwidth is the maximum rate at which data can be transferred across the network. If the bandwidth is lower than the workload generation rate, performance will suffer regardless of tuning efforts. In this case, the only solution is to increase the available bandwidth, as the network becomes a hard bottleneck that stalls the entire extract pipeline.

- Latency, by contrast, refers to the delay in communication across the network. If bandwidth is sufficient but latency is high, network waits still occur, largely because of the frequent round trips between the capture and Extract processes.

This is where the session data unit (SDU) comes in.

Tuning for Latency: The Role of SDU Size

In scenarios where bandwidth is sufficient but latency is high, increasing the SDU size can help alleviate performance issues. The SDU is the size of the buffer that Oracle Net uses to package data for SQL*Net communication between the capture and Extract processes. By default, it is set to 8 KB, and Oracle Database 12c Release 1 (12.1) and later allow it to be increased up to 2 MB.
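
As a rough illustration of where the setting lives, the SDU can be requested with the `SDU` parameter inside the `DESCRIPTION` of an Oracle Net connect descriptor. The Python sketch below only builds such a descriptor string; the helper name, host, port, and service name are hypothetical. Keep in mind that the SDU is negotiated at connect time and the smaller of the two sides' values is used, so the database side (for example, `DEFAULT_SDU_SIZE` in `sqlnet.ora`) needs a matching value for the larger size to take effect.

```python
MIN_SDU = 512                # lower bound in bytes per Oracle Net documentation
MAX_SDU = 2 * 1024 * 1024    # 2 MB upper limit in bytes


def connect_descriptor(host, port, service_name, sdu=MAX_SDU):
    """Build a connect descriptor string that requests the given SDU size."""
    if not MIN_SDU <= sdu <= MAX_SDU:
        raise ValueError(f"SDU must be between {MIN_SDU} and {MAX_SDU} bytes")
    return (
        f"(DESCRIPTION=(SDU={sdu})"
        f"(ADDRESS=(PROTOCOL=TCP)(HOST={host})(PORT={port}))"
        f"(CONNECT_DATA=(SERVICE_NAME={service_name})))"
    )


# Hypothetical host and service name, for illustration only.
print(connect_descriptor("srcdb.example.com", 1521, "orclpdb"))
```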

- Larger SDU packets utilize bandwidth more efficiently by reducing protocol overhead and maximizing the payload per transmission.

- They reduce the number of round trips between the capture and Extract processes, which is especially valuable when latency is high but bandwidth is sufficient.

- As a result, the capture process spends more time on CPU processing instead of waiting on the network—improving throughput and reducing extract latency.
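
To get a feel for why fewer round trips matter, consider a deliberately simplified model in which every SDU-sized buffer costs one network round trip. This is an assumption made for illustration: real connections overlap transmissions, so actual numbers will differ. The function name and the inputs below are hypothetical.

```python
import math


def latency_cost_seconds(total_bytes, sdu_bytes, rtt_ms):
    """Time spent purely on round trips under a one-trip-per-buffer model."""
    round_trips = math.ceil(total_bytes / sdu_bytes)
    return round_trips * rtt_ms / 1000.0


one_gib = 1024 ** 3
rtt_ms = 100  # the high-latency threshold mentioned earlier

for label, sdu in (("8 KB", 8 * 1024), ("2 MB", 2 * 1024 * 1024)):
    cost = latency_cost_seconds(one_gib, sdu, rtt_ms)
    print(f"SDU {label}: {cost:.1f} s of round-trip time per GiB")
```

Under these assumptions, moving 1 GiB takes 131,072 round trips with an 8 KB SDU and 512 with a 2 MB SDU, a 256-fold reduction in time spent waiting on latency alone.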

Final Thoughts

In remote Extract deployments, network health isn’t just a background detail—it’s a performance linchpin. Poor bandwidth or high latency can silently undermine replication throughput, even when the database and Extract configurations appear sound.

By proactively monitoring SQL*Net waits, provisioning enough network bandwidth, and increasing the SDU size when latency is high, you can ensure smooth, high-throughput data movement in hub-based GoldenGate environments and avoid the silent drag of network-induced bottlenecks.

Reference

https://docs.oracle.com/database/121/NETAG/performance.htm#NETAG014

Other Blog Posts by the Author

https://blogs.oracle.com/dataintegration/post/oracle-goldengate-parallel-replicat-performance-tuning