Over many years, Oracle Database has proven its versatility not only by supporting many of the latest data types and workloads, including JSON, graph, spatial, in-memory, blockchain, and vector, but also by leveraging state-of-the-art hardware. Today, we leverage cutting-edge CPU technologies in our Engineered Systems and Cloud.

At Oracle CloudWorld 2024 in Las Vegas, we demonstrated two proof-of-concept designs that use NVIDIA GPUs to accelerate AI Vector Search functionality in Oracle Database 23ai. Since then, we have continued to collaborate with NVIDIA on defining and implementing ways to enhance the efficiency and robustness of these proof-of-concept implementations.

The first capability we demonstrated at CloudWorld 2024 was the GPU-accelerated creation of vector embeddings from a variety of input data, such as text, images, and videos. Vector embedding creation is a necessary first step in AI Vector Search, and using NVIDIA GPUs helps improve the performance of bulk embedding creation for large volumes of data.

The second early-stage proof of concept we showed at that event illustrates how NVIDIA GPUs and NVIDIA cuVS can be used to accelerate vector index creation and maintenance within Oracle Database. Vector indexes are what make AI vector search fast, so creating and maintaining them quickly is essential for supporting enterprise AI vector workloads.

Both capabilities show how NVIDIA GPUs can work in synergy with CPUs to accelerate computationally intensive portions of vector search workloads.

“We are always looking for innovative ways to combine our software innovations with modern hardware that can significantly benefit Oracle Database customers,” said Tirthankar Lahiri, Senior Vice President, Mission-Critical Data and AI Engines, Oracle. “Our AI vector search capabilities are already highly optimized to leverage modern CPU technology, and our collaboration with NVIDIA on the use of modern GPUs to accelerate compute-intensive portions of the AI vector workflow is an excellent example of our approach to improve the synergy between software and hardware, in order to better meet the needs of our customers.”

“NVIDIA AI tools and GPUs can accelerate Oracle Database vector embedding generation and index creation, enabling larger amounts of data to be analyzed for semantic search and retrieval augmented generation (RAG),” said Kevin Deierling, Senior Vice President of Networking, NVIDIA. “By making it easier to combine GPU-accelerated AI Vector Search with business data, Oracle Database users will be able to improve customer experiences and employee productivity.”

Accelerating Oracle Database performance for Vector Search capabilities

AI Vector Search in Oracle Database 23ai enables intelligent search for unstructured as well as structured business data by using AI techniques. It includes the ability to run imported ONNX models using CPUs inside Oracle Database, a VECTOR datatype to store vector embeddings, VECTOR indexes for approximate nearest neighbor (ANN) search, and SQL operators for native vector search capabilities that let developers combine searches of traditional business data and vector data within Oracle Database and simplify the apps they’re creating.
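
As a point of reference for what a VECTOR index approximates, the sketch below performs exact (brute-force) nearest-neighbor search with cosine distance, one of the metrics Oracle Database exposes through its `VECTOR_DISTANCE` SQL function. It is a plain-Python illustration, not Oracle's implementation; `cosine_distance` and `exact_knn` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity: 0.0 for same direction, 2.0 for opposite.

    Assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def exact_knn(query: Sequence[float], vectors: List[Sequence[float]], k: int) -> List[Tuple[int, float]]:
    """Compare the query with every stored vector and keep the k closest.

    This costs O(N * d) per query for N vectors of dimension d; an ANN index
    exists to avoid this full scan, at the price of approximate answers."""
    scored = [(i, cosine_distance(query, v)) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


if __name__ == "__main__":
    stored = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
    for doc_id, dist in exact_knn([1.0, 0.1], stored, k=2):
        print(doc_id, round(dist, 4))
```

An approximate index such as HNSW aims to return nearly the same neighbors while visiting only a small fraction of the stored vectors; recall measures how often it agrees with this exact result.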

We have highly optimized vector search workloads by implementing SIMD-optimized vector distance kernels and state-of-the-art approximate search indexes which leverage the full memory bandwidth and multi-core parallelism of modern-day CPU platforms such as Exadata Storage Servers. Even after maximizing the benefits of CPU-based processing, certain aspects of vector workloads, including generating vector embeddings and creating vector indexes, can benefit from GPU acceleration.

GPUs provide exceptional memory bandwidth and computational power for specialized compute-intensive use cases. They do not replace the general-purpose CPUs used for traditional database workloads like OLTP or traditional analytics. However, they may help speed up operations involving “dense computations,” including common AI and machine learning (ML) operations that involve multi-pass computations on the same memory-resident data, or bulk vector operations that are often required by vector databases.
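
A back-of-the-envelope calculation shows why index building fits this description of a dense computation. Building an exhaustive k-nearest-neighbor graph over N vectors compares every pair, so each stored value is read once but reused in roughly N/2 distance computations, whereas a single query scan uses each value only once. The sketch below just does that arithmetic; the sizes are illustrative assumptions, not measurements from the proof of concept, and practical GPU graph builders such as CAGRA avoid comparing every pair, so the exhaustive figure is an upper bound on work.

```python
def knn_graph_build_cost(n_vectors: int, dim: int, bytes_per_value: int = 4):
    """Exhaustive k-NN graph build: every unordered pair of vectors is compared once.

    Returns (multiply_adds, dataset_bytes, multiply_adds_per_byte)."""
    pairs = n_vectors * (n_vectors - 1) // 2
    multiply_adds = pairs * dim  # one multiply-add per dimension per distance
    dataset_bytes = n_vectors * dim * bytes_per_value
    return multiply_adds, dataset_bytes, multiply_adds / dataset_bytes


def single_scan_cost(n_vectors: int, dim: int, bytes_per_value: int = 4):
    """One query scanned against the whole dataset: each stored value is used once."""
    multiply_adds = n_vectors * dim
    dataset_bytes = n_vectors * dim * bytes_per_value
    return multiply_adds, dataset_bytes, multiply_adds / dataset_bytes


if __name__ == "__main__":
    n, d = 1_000_000, 768  # illustrative: one million 768-dimensional FLOAT32 vectors
    build = knn_graph_build_cost(n, d)
    scan = single_scan_cost(n, d)
    print(f"graph build: {build[0]:.2e} multiply-adds over {build[1] / 1e9:.2f} GB, {build[2]:,.0f} per byte")
    print(f"single scan: {scan[0]:.2e} multiply-adds over {scan[1] / 1e9:.2f} GB, {scan[2]} per byte")
```

For these sizes the exhaustive build performs about 3.84 × 10^14 multiply-adds on about 3.07 GB of data, roughly 125,000 operations per byte, versus 0.25 per byte for a single scan. High reuse of memory-resident data is the pattern where GPU compute throughput pays off.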

To use GPUs effectively for vector index creation, the index-building algorithms themselves must be highly optimized for GPU hardware. In our prototyping efforts, we are using NVIDIA cuVS, an open source library of GPU-accelerated algorithms for vector search and clustering. These algorithms are designed to increase throughput, decrease latency, and speed up index builds across all levels of recall.

Generating vector embeddings for Oracle Database 23ai using OCI’s NVIDIA GPU-accelerated instances

Vector embeddings are a mathematical representation of the semantics of complex content, such as images, text, or complex business objects. Vectors are generated by deep learning AI models called “embedding models.” Vectors that are stored in and searched by Oracle Database are created by running embedding models against source data that can be resident either within or external to the database. The embedding models themselves can be database resident or externally invoked. In either case, the resulting vectors are then stored in VECTOR columns within the database.
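
The sketch below is a deliberately simple stand-in for an embedding model, a hashed bag-of-words, so the shape of the process is visible: arbitrary text goes in, and a fixed-length, normalized vector of floats comes out, ready to be stored in a VECTOR column. A real embedding model (for example, an imported ONNX model) learns its mapping so that semantically similar inputs land close together; this toy only captures shared words. `toy_embed`, `similarity`, and `DIM` are hypothetical names chosen for illustration.

```python
import hashlib
import math
import re
from typing import List

DIM = 16  # hypothetical; production embedding models output hundreds to thousands of dimensions


def toy_embed(text: str, dim: int = DIM) -> List[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # sha256 gives a stable hash across runs (Python's hash() is salted per process).
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        sign = 1.0 if digest[4] % 2 == 0 else -1.0  # signed hashing reduces collision bias
        vec[bucket] += sign
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec


def similarity(a: List[float], b: List[float]) -> float:
    """Dot product; equals cosine similarity for non-zero, unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    a = toy_embed("GPU accelerated vector index")
    b = toy_embed("vector index built on a GPU")
    c = toy_embed("quarterly sales report")
    print(round(similarity(a, b), 3), round(similarity(a, c), 3))
```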

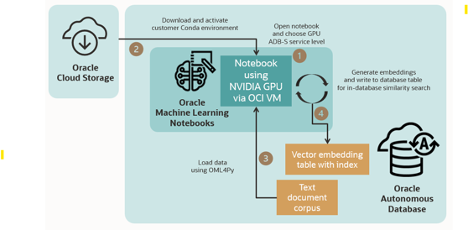

In our first demonstration at Oracle CloudWorld, we showed how integrated access to NVIDIA GPUs through Oracle Machine Learning (OML) Notebooks in Oracle Autonomous Database – Serverless can be used to generate vector embeddings. This capability lets users leverage the Python interpreter in OML Notebooks, an integral feature of Autonomous Database, to load data from a database table into a GPU instance. The GPU instance backs the notebook Python interpreter, so applications can bulk-generate vector embeddings there and store them within Autonomous Database, where they can then be searched using AI Vector Search. The GPU instance is provisioned automatically for users, and data is transferred between the database and the GPU instance using functions from Oracle Machine Learning for Python.
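
The bulk-generation pattern (read rows in batches, embed each batch, write the vectors back next to their source rows) can be sketched with Python's built-in `sqlite3` module standing in for Autonomous Database. This only illustrates the batching loop: the real notebook flow uses Oracle Machine Learning for Python functions for data transfer and a GPU-hosted embedding model, neither of which is shown here. The `docs` table, its columns, and the `embed_batch` placeholder are hypothetical.

```python
import json
import sqlite3
from typing import List, Sequence


def embed_batch(texts: Sequence[str]) -> List[List[float]]:
    """Hypothetical placeholder for a GPU embedding model call.

    A real model would embed the whole batch in one forward pass on the GPU;
    here each text becomes [length, vowel count] so the example runs anywhere."""
    return [[float(len(t)), float(sum(ch in "aeiou" for ch in t.lower()))] for t in texts]


def bulk_embed(conn: sqlite3.Connection, batch_size: int = 2) -> int:
    """Embed every row whose embedding is still NULL, one batch at a time."""
    done = 0
    while True:
        rows = conn.execute(
            "SELECT id, body FROM docs WHERE embedding IS NULL ORDER BY id LIMIT ?",
            (batch_size,),
        ).fetchall()
        if not rows:
            return done
        vectors = embed_batch([body for _, body in rows])
        # Stored as JSON text here; Oracle Database would use a VECTOR column instead.
        conn.executemany(
            "UPDATE docs SET embedding = ? WHERE id = ?",
            [(json.dumps(vec), doc_id) for (doc_id, _), vec in zip(rows, vectors)],
        )
        conn.commit()
        done += len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, body TEXT, embedding TEXT)")
    conn.executemany("INSERT INTO docs (body) VALUES (?)",
                     [("invoice",), ("shipping delay",), ("refund request",)])
    print(bulk_embed(conn))  # 3
```

Batching matters on a GPU because per-call overhead is amortized over many inputs; the batch size would normally be tuned to fit GPU memory.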

Generating Oracle Database 23ai VECTOR indexes on GPUs

Vector indexes play a crucial role in approximate search of vector data. Constructing these indexes is compute-intensive and time-consuming. Furthermore, vector indexes have to be maintained and periodically refreshed (or even repopulated) as data is updated or new data is loaded.
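
Graph-based vector indexes, HNSW and CAGRA included, are organized around a nearest-neighbor graph in which each vector links to a few of its closest neighbors. The naive sketch below builds such a k-NN graph exhaustively and then inserts one new vector, which shows both why construction is expensive (every vector is compared with every other) and why the index needs maintenance as data arrives (existing neighbor lists can change). It is not how CAGRA or Oracle Database build their graphs; `build_knn_graph` and `insert_vector` are hypothetical names.

```python
import heapq
from typing import Dict, List, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_knn_graph(vectors: List[Sequence[float]], k: int) -> Dict[int, List[int]]:
    """Exhaustive O(N^2 * d) build: node i -> ids of its k nearest other nodes."""
    graph = {}
    for i, v in enumerate(vectors):
        others = ((sq_dist(v, w), j) for j, w in enumerate(vectors) if j != i)
        graph[i] = [j for _, j in heapq.nsmallest(k, others)]
    return graph


def insert_vector(vectors: List[Sequence[float]], graph: Dict[int, List[int]],
                  new_vec: Sequence[float], k: int) -> int:
    """Add one vector: link it to its k nearest nodes, and let each existing node
    adopt it as a neighbor if it is closer than that node's current k-th neighbor."""
    new_id = len(vectors)
    vectors.append(new_vec)
    candidates = ((sq_dist(new_vec, w), j) for j, w in enumerate(vectors) if j != new_id)
    graph[new_id] = [j for _, j in heapq.nsmallest(k, candidates)]
    for j in range(new_id):
        merged = graph[j] + [new_id]
        graph[j] = sorted(merged, key=lambda n: sq_dist(vectors[j], vectors[n]))[:k]
    return new_id


if __name__ == "__main__":
    vecs = [[0.0], [1.0], [3.0], [7.0]]
    g = build_knn_graph(vecs, k=1)
    insert_vector(vecs, g, [6.5], k=1)
    print(g)
```

Even a single insert touches every existing node in this naive form, which is why production indexes use smarter local updates or periodic rebuilds.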

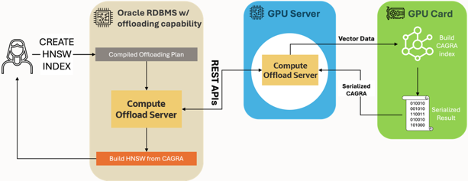

In this proof-of-concept demonstration at Oracle CloudWorld, we showed the integration of Oracle Database 23ai with NVIDIA GPUs for the creation of an in-memory graph index type known as HNSW (Hierarchical Navigable Small World). The demonstration highlighted how a new, Oracle-developed compute-offloading framework enables Oracle Database to transparently delegate complex vector index creation tasks to external servers equipped with powerful GPUs—maintaining simplicity while delivering enhanced performance.
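
To see what the finished HNSW index is used for, the sketch below shows the greedy descent at the heart of an HNSW query: in each layer, start from the current node and keep moving to a neighbor that is closer to the query until none is, then drop to the next, denser layer. It is simplified under stated assumptions: real HNSW keeps a candidate list of size ef in the bottom layer rather than a single best node, and the dict-of-lists layers and function names here are hypothetical.

```python
from typing import Dict, List, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def greedy_search_layer(vectors: List[Sequence[float]], layer: Dict[int, List[int]],
                        entry: int, query: Sequence[float]) -> int:
    """Move to closer neighbors until no neighbor improves on the current node."""
    current = entry
    current_d = sq_dist(vectors[current], query)
    improved = True
    while improved:
        improved = False
        for nbr in layer.get(current, []):
            d = sq_dist(vectors[nbr], query)
            if d < current_d:
                current, current_d = nbr, d
                improved = True
    return current


def hnsw_style_search(vectors: List[Sequence[float]], layers: List[Dict[int, List[int]]],
                      entry: int, query: Sequence[float]) -> int:
    """layers[0] is the sparsest top layer; layers[-1] is the bottom layer with all nodes."""
    node = entry
    for layer in layers:
        node = greedy_search_layer(vectors, layer, node, query)
    return node


if __name__ == "__main__":
    vecs = [[float(i)] for i in range(10)]
    bottom = {i: [j for j in (i - 1, i + 1) if 0 <= j < 10] for i in range(10)}
    top = {0: [4], 4: [0, 8], 8: [4]}  # sparse "express lane" over nodes 0, 4, 8
    print(hnsw_style_search(vecs, [top, bottom], entry=0, query=[7.2]))
```

The sparse top layer lets the search cover long distances in a few hops, so only a handful of bottom-layer nodes are examined.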

When a user asks Oracle Database to create an HNSW vector index, the database compiles the task and redirects it, along with the necessary vector data, to a new Compute Offload Server process. This server process uses the computational power of GPUs and the CAGRA algorithm, part of the NVIDIA cuVS library, to rapidly generate a graph index. Once the CAGRA index is created, it is automatically and efficiently transferred back to the Oracle Database instance, where it is converted into an HNSW graph index. The final HNSW index is then available for subsequent vector search operations within the Oracle Database instance.
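
The round trip described above can be outlined in a few functions: the database side packages the vectors and build parameters, an offload server builds a fixed-degree neighbor graph (the role CAGRA plays on the GPU), and the database side turns that graph into a layered HNSW-style structure by reusing it as the bottom layer and adding sparser upper layers. Everything here is a hypothetical stand-in: the framework's interfaces and wire format are not described in this post, the "server" is a plain function call exchanging JSON, and the graph is built by brute force on the CPU. Upper-layer membership follows the standard HNSW rule of drawing each node's top level as floor(-ln(U) / ln(M)).

```python
import heapq
import json
import math
import random
from typing import Dict, List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def offload_server_build(request_json: str) -> str:
    """Hypothetical offload server: returns a fixed-degree k-NN graph.

    Stands in for a GPU CAGRA build; here it is brute force on the CPU."""
    req = json.loads(request_json)
    vecs, degree = req["vectors"], req["graph_degree"]
    graph = []
    for i, v in enumerate(vecs):
        nearest = heapq.nsmallest(degree, ((sq_dist(v, w), j) for j, w in enumerate(vecs) if j != i))
        graph.append([j for _, j in nearest])
    return json.dumps({"graph": graph})


def to_hnsw_layers(vectors: List[Sequence[float]], base_graph: List[List[int]],
                   m: int, seed: int = 0) -> Tuple[List[Dict[int, List[int]]], int]:
    """Reuse the offloaded graph as layer 0 and build sparser upper layers on the host.

    Returns (layers, entry_point); layers[0] is the bottom layer here."""
    rng = random.Random(seed)
    m_l = 1.0 / math.log(m)
    levels = [int(-math.log(1.0 - rng.random()) * m_l) for _ in vectors]
    layers = [{i: list(nbrs) for i, nbrs in enumerate(base_graph)}]
    for level in range(1, max(levels) + 1):
        members = [i for i, lv in enumerate(levels) if lv >= level]
        layer = {}
        for i in members:
            nearest = heapq.nsmallest(m, ((sq_dist(vectors[i], vectors[j]), j) for j in members if j != i))
            layer[i] = [j for _, j in nearest]
        layers.append(layer)
    entry_point = max(range(len(vectors)), key=lambda i: levels[i])  # a node on the top layer
    return layers, entry_point


def create_hnsw_index(vectors: List[List[float]], graph_degree: int = 4, m: int = 4):
    """Database side: send the data out, receive the graph, convert it locally."""
    request = json.dumps({"vectors": vectors, "graph_degree": graph_degree})
    response = offload_server_build(request)  # a network round trip in a real deployment
    return to_hnsw_layers(vectors, json.loads(response)["graph"], m)


if __name__ == "__main__":
    rng = random.Random(42)
    pts = [[rng.random(), rng.random()] for _ in range(20)]
    layers, entry = create_hnsw_index(pts)
    print(len(layers), "layers; entry point", entry)
```

Splitting the work this way keeps the most expensive step, building the dense bottom-layer graph, on the GPU, while the much smaller upper layers are cheap to build on the host.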

This approach combines the versatile converged data management capabilities of Oracle Database with the raw computational power of GPUs. By integrating GPU acceleration into the database and offloading the computationally intensive task of index creation to specialized hardware, we can achieve remarkable improvements in processing speed and efficiency while maintaining the ease of use and reliability that Oracle Database users expect.

Proof-of-concept enhancements since Oracle CloudWorld 2024

Since Oracle CloudWorld, we have continued to work with NVIDIA to enhance our proofs of concept. In particular, we have focused our collaborative efforts on two areas: a) developing and implementing streaming APIs for efficient data processing, and b) enhancing memory management for large, real-world applications.

Streaming APIs for efficient data processing

We are collaborating with NVIDIA to introduce a new set of streaming APIs in cuVS that change how data is delivered to GPUs for CAGRA graph index creation. Rather than requiring the entire dataset to be prepared and cached on the GPU node before processing, the data is split into chunks and streamed to the GPU in real time.

This streaming approach helps reduce memory overhead on the GPU nodes during index creation, since data no longer needs to be stored contiguously in memory before index creation can begin. Furthermore, streaming does not add latency: performance is similar to that of the original APIs.
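
The chunked-streaming idea can be illustrated with a Python generator: rows are pulled from a source iterator and handed to the consumer in fixed-size chunks, so only one chunk is materialized at a time and the full dataset never has to sit in one contiguous buffer. The consumer is a hypothetical stand-in that accumulates per-dimension sums; it is not the cuVS streaming API, whose functions this post does not name.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Sequence


def stream_chunks(rows: Iterable[Sequence[float]], chunk_size: int) -> Iterator[List[Sequence[float]]]:
    """Yield successive chunks of at most chunk_size rows from any iterable."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, chunk_size))
        if not chunk:
            return
        yield chunk


class RunningCentroid:
    """Hypothetical consumer: processes each chunk as it arrives, keeping O(d) state."""

    def __init__(self, dim: int):
        self.sums = [0.0] * dim
        self.count = 0
        self.peak_rows = 0  # most rows held at once, i.e. the largest chunk seen

    def consume(self, chunk: List[Sequence[float]]) -> None:
        for row in chunk:
            for j, x in enumerate(row):
                self.sums[j] += x
        self.count += len(chunk)
        self.peak_rows = max(self.peak_rows, len(chunk))

    def centroid(self) -> List[float]:
        return [s / self.count for s in self.sums]


if __name__ == "__main__":
    # A generator source: rows are produced on demand, never stored as one big list.
    source = ([float(i), float(2 * i)] for i in range(10))
    consumer = RunningCentroid(dim=2)
    for chunk in stream_chunks(source, chunk_size=4):
        consumer.consume(chunk)
    print(consumer.count, consumer.peak_rows, consumer.centroid())  # 10 4 [4.5, 9.0]
```

Peak memory is bounded by the chunk size rather than the dataset size, which is the property the streaming APIs are described as providing on the GPU node.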

Advanced memory management

While GPUs have increasingly large amounts of local memory, many real-world applications have datasets that greatly exceed that capacity. Oracle is working with NVIDIA on sophisticated memory management strategies that we expect will have benefits that include:

- Efficient processing of datasets that are larger than GPU memory.

- Optimized data movement between host memory and GPUs.

- High performance while operating within memory constraints.
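
One common way to process a dataset larger than device memory is tiling: split the dataset into blocks that fit a memory budget, compute partial results for each block, and merge them. The sketch below applies that idea to exact k-NN search, keeping a bounded heap of the best k matches per query so that the final answer matches a single pass over the whole dataset. The budget is expressed in rows for simplicity, `tiled_knn` is a hypothetical name, and nothing here reflects the specific strategies Oracle and NVIDIA are developing.

```python
import heapq
import random
from typing import List, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def tiled_knn(queries: List[Sequence[float]], dataset: List[Sequence[float]],
              k: int, tile_rows: int) -> List[List[int]]:
    """Exact k-NN that touches the dataset one tile of at most tile_rows rows at a time."""
    # Per query, a max-heap of the best k so far, stored as (-distance, id)
    # so that heap[0] is always the worst match currently kept.
    heaps: List[List[tuple]] = [[] for _ in queries]
    for start in range(0, len(dataset), tile_rows):
        # In a GPU setting, this slice is what would be copied from host to device memory.
        tile = dataset[start:start + tile_rows]
        for q, heap in zip(queries, heaps):
            for offset, row in enumerate(tile):
                neg_d = -sq_dist(q, row)
                if len(heap) < k:
                    heapq.heappush(heap, (neg_d, start + offset))
                elif neg_d > heap[0][0]:  # strictly closer than the current worst
                    heapq.heapreplace(heap, (neg_d, start + offset))
    # Final merge: ids ordered from nearest to farthest.
    return [[idx for _, idx in sorted(heap, key=lambda t: (-t[0], t[1]))] for heap in heaps]


if __name__ == "__main__":
    rng = random.Random(0)
    data = [[rng.random() for _ in range(4)] for _ in range(200)]
    qs = [[rng.random() for _ in range(4)] for _ in range(3)]
    small_tiles = tiled_knn(qs, data, k=5, tile_rows=16)
    one_tile = tiled_knn(qs, data, k=5, tile_rows=len(data))
    print(small_tiles == one_tile)
```

The per-query state stays at k entries regardless of dataset size; in a real system the tile transfers would also be overlapped with computation to hide host-to-device copy time.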

Conclusion

The ability to offload vector embedding generation to GPUs, and the proof of concept for offloading computationally intensive portions of HNSW index creation, show how NVIDIA GPUs can, and potentially could, be used in the AI Vector Search pipeline. Oracle’s ongoing work and collaboration with NVIDIA is leading to increasingly robust implementations designed to address the needs of our enterprise customers. Stay tuned for more exciting developments from this collaboration.

For more information on how to get started,