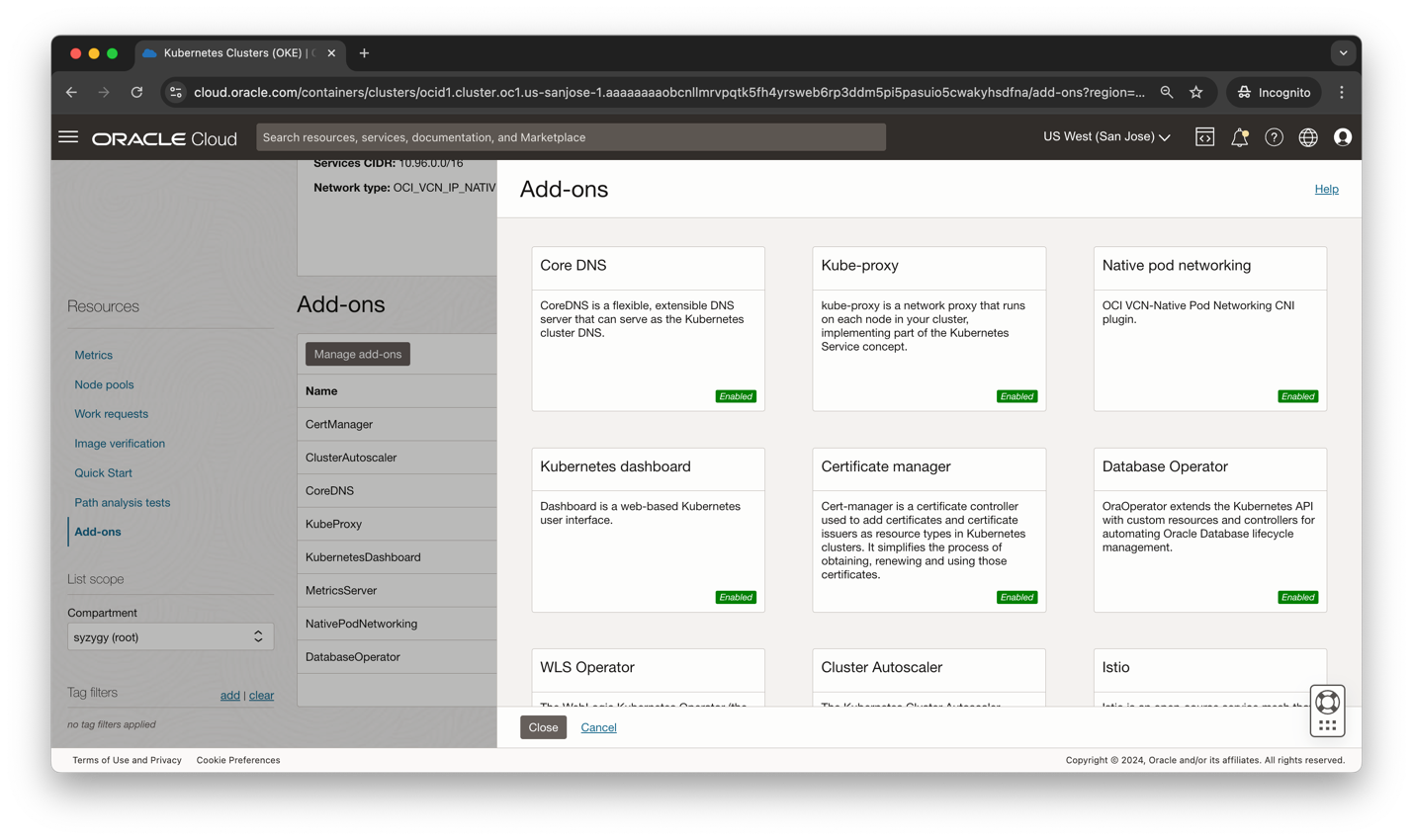

Early last year, Oracle Cloud Infrastructure Kubernetes Engine (OKE) introduced cluster add-ons to simplify the deployment and ongoing lifecycle management of commonly used cluster software. OKE add-ons introduced an opportunity to offload the management of cluster operational software to Oracle. They also give customers with advanced use cases the flexibility to customize these add-ons or to fully opt out of the default Kubernetes operational software and bring their own equivalent software.

We are excited to share the release of four additional add-ons: the Kubernetes Cluster Autoscaler, the Istio service mesh, the OCI native ingress controller, and the Kubernetes Metrics Server, as well as support for new configuration arguments to provide you greater control over the add-ons deployed to your clusters.

What are Kubernetes add-ons?

Kubernetes cluster add-ons are software tools that implement cluster features and extend the core functionality of Kubernetes clusters. Add-on software is built and maintained by one of three sources: the Kubernetes community, third-party vendors, or cloud providers like OKE. Some add-ons are essential for a cluster to operate correctly and are installed by default into every OKE cluster. Examples include CoreDNS, kube-proxy, and your choice of container network interface (CNI) plugin: either Flannel or OCI virtual cloud network (VCN)-native pod networking. You can see these add-ons on all clusters created through OKE.

Other add-ons are optional components that extend core Kubernetes functionality to improve cluster manageability and performance, such as how the Kubernetes Cluster Autoscaler enables your cluster to dynamically add and remove nodes based on changing workload demands. You see these add-ons only if you choose to deploy them to your cluster.

Why should I use add-ons?

Simplicity

Kubernetes clusters and the workloads that run on them consist of numerous components, many of which are the user’s responsibility to maintain and contribute to operational overhead. To keep this overhead to a minimum, many of our users prefer to offload the responsibility of as many cluster components as possible to a trusted provider like OKE. Given that preference, we identified common operational software used by our customers for which we could assume responsibility. This ownership starts from building the container images and manifests and extends to configuring the add-ons and keeping them updated over time. All OKE add-ons include the latest security patches and bug fixes and are validated to work with OKE. Users can opt into automatic add-on updates to help ensure that any time a new add-on version is released or a cluster is upgraded, the update is automatically applied to the add-ons running in their cluster.

Using add-ons helps you keep your OKE clusters secure and stable and reduces the amount of work that you need to do to install, configure, and update operational software. Add-ons don’t encroach on the flexibility of users to deploy their choice of third-party software to their clusters, including alternatives to the add-ons we provide.

Control

OKE add-ons give you more options for configuring essential cluster software. You can choose to enable optional add-ons you want to deploy to your cluster or disable or opt out of using essential add-ons altogether. Users with specific compliance or audit requirements can choose to pin to an add-on version, rather than opt into automatic updates, to gain greater control over when their add-ons are updated.

Each add-on comes with a set of supported customizable arguments. You can change the default configuration of the add-ons through the OKE API and update them when needed. For example, CoreDNS, a general purpose authoritative domain name system (DNS) server commonly found in Kubernetes environments, comes with the option to bring your own Kubernetes ConfigMap, with a Corefile section that defines CoreDNS behavior. This Corefile configuration includes several CoreDNS plugins with different DNS functions to extend the basic functionality. These supported customizations allow you to tailor your add-ons to your specific use cases while still benefitting from lifecycle management by Oracle. Other arguments are common to all add-ons and include options like nodeSelectors and tolerations, which are used to control the worker nodes on which add-on pods run. For more information about add-on arguments, see Cluster Add-on Configuration Arguments.
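To make the idea of add-on arguments concrete, here is a minimal sketch of how a set of arguments might be flattened into key-value pairs before being sent to the API. The payload shape (the `addonName` and `configurations` field names), the helper `build_addon_configurations`, and the label and taint values are assumptions for illustration, not a confirmed request format. Check Cluster Add-on Configuration Arguments for the exact keys and value formats that each add-on accepts.

```python
import json


def build_addon_configurations(arguments):
    """Convert a dict of add-on arguments into a list of key/value string pairs.

    Values that are not already strings (dicts, lists, numbers) are
    JSON-encoded so that every value ends up as a string.
    """
    configurations = []
    for key, value in arguments.items():
        encoded = value if isinstance(value, str) else json.dumps(value, sort_keys=True)
        configurations.append({"key": key, "value": encoded})
    return configurations


# Hypothetical values: schedule add-on pods only onto nodes labeled
# pool=system, and let them tolerate a "dedicated=addons" taint.
arguments = {
    "nodeSelectors": {"pool": "system"},
    "tolerations": [
        {"key": "dedicated", "operator": "Equal", "value": "addons", "effect": "NoSchedule"}
    ],
}

payload = {
    "addonName": "CoreDNS",  # assumed identifier for the CoreDNS add-on
    "configurations": build_addon_configurations(arguments),
}

print(json.dumps(payload, indent=2))
```

Keeping every value as a string keeps the pairs uniform, with structured settings such as tolerations carried as encoded JSON.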

What has changed since launch?

OKE add-ons launched with support for a number of essential add-ons, including CoreDNS, kube-proxy, the OCI VCN-native pod networking CNI plugin, and the flannel CNI plugin, and optional add-ons, including the Kubernetes Dashboard, the Oracle Database Operator for Kubernetes, the WebLogic Kubernetes Operator, and cert-manager. Since launch, we have continued to invest in the add-on model by working with customers to identify more commonly used software that we could support through OKE add-ons and more configuration options that customers wanted to control. This collaboration resulted in the release of four additional add-ons (the Kubernetes Cluster Autoscaler, the Kubernetes Metrics Server, the Istio service mesh, and the OCI native ingress controller) and in new configuration arguments applicable to all add-ons. Now, let’s look at the changes in detail.

Cluster Autoscaler

The Kubernetes Cluster Autoscaler automatically resizes a cluster’s managed node pools based on application workload demands. When pods can’t be scheduled in the cluster because of insufficient resources, Cluster Autoscaler adds worker nodes to a node pool. When nodes have been underutilized for an extended time and when pods on those nodes can be placed on other existing nodes, Cluster Autoscaler removes worker nodes from a node pool. For more information, see Deploying the Kubernetes Cluster Autoscaler as a cluster add-on.
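The scale-up and scale-down conditions described above can be sketched as a small decision function. This is a simplified model, not the Cluster Autoscaler's actual algorithm: the `Node` fields, the `decide` function, and the threshold values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    utilization: float          # fraction of allocatable resources requested, 0.0 to 1.0
    minutes_underutilized: int  # how long the node has stayed below the threshold
    pods_reschedulable: bool    # True if its pods fit on other existing nodes


def decide(pending_unschedulable_pods, nodes, min_nodes, max_nodes,
           utilization_threshold=0.5, unneeded_minutes=10):
    """Return ("scale_up", None), ("scale_down", node_name), or ("no_change", None)."""
    # Scale up: pods cannot be scheduled and the node pool still has room to grow.
    if pending_unschedulable_pods > 0 and len(nodes) < max_nodes:
        return ("scale_up", None)

    # Scale down: nothing is waiting, the pool is above its minimum size, and
    # some node has been underutilized long enough with pods that can move.
    if pending_unschedulable_pods == 0 and len(nodes) > min_nodes:
        for node in nodes:
            if (node.utilization < utilization_threshold
                    and node.minutes_underutilized >= unneeded_minutes
                    and node.pods_reschedulable):
                return ("scale_down", node.name)

    return ("no_change", None)


nodes = [Node("node-a", 0.8, 0, False), Node("node-b", 0.2, 15, True)]
print(decide(3, nodes, min_nodes=1, max_nodes=5))
print(decide(0, nodes, min_nodes=1, max_nodes=5))
```

In this model, the node pool's minimum and maximum sizes bound every decision, so the function never proposes a change that would take the pool outside those limits.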

Native Ingress Controller

In the world of Kubernetes, ingress is an object that comprises a collection of routing rules and configuration options to handle inbound HTTP and HTTPS traffic. Without an ingress, each service that needs traffic from the internet or from a private network requires its own Kubernetes service of type LoadBalancer and an associated OCI load balancer. Instead, you can use a single ingress resource to consolidate routing rules for multiple services.

The OCI native ingress controller implements the rules and configuration options defined in a Kubernetes ingress resource to load balance and route incoming traffic to service pods running on worker nodes in a cluster. The OCI native ingress controller creates an OCI flexible load balancer to handle requests and configures the OCI load balancer to route requests according to the rules defined in the ingress resource. If you change the routing rules or other supporting resources, the OCI native ingress controller updates the load balancer configuration accordingly. For more information, see Using the OCI native ingress controller as a cluster add-on rather than as a standalone program.
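To illustrate how a single set of ingress rules can serve several services, here is a minimal sketch of host and path-prefix routing. It loosely models prefix-style path matching and is not the OCI native ingress controller's implementation. The host name, paths, service names, and the `route` function are all hypothetical.

```python
def route(rules, host, path):
    """Return the backend service for a request, or None if no rule matches.

    When several rules match, the one with the longest path prefix wins.
    """
    best = None
    for rule in rules:
        if rule["host"] != host:
            continue
        prefix = rule["path"]
        # Match on path-element boundaries, so "/api" matches "/api/v1"
        # but does not match "/apix".
        matches = (
            prefix == "/"
            or path == prefix
            or path.startswith(prefix.rstrip("/") + "/")
        )
        if matches and (best is None or len(prefix) > len(best["path"])):
            best = rule
    return best["service"] if best else None


# One set of rules fronting three services behind a single entry point.
rules = [
    {"host": "shop.example.com", "path": "/", "service": "storefront"},
    {"host": "shop.example.com", "path": "/api", "service": "orders-api"},
    {"host": "shop.example.com", "path": "/api/payments", "service": "payments"},
]

for request_path in ["/", "/api/orders", "/api/payments/42"]:
    print(request_path, "->", route(rules, "shop.example.com", request_path))
```

All three services share one entry point here, which is the consolidation that avoids creating one load balancer per service.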

Istio Service Mesh

Istio is an open source service mesh used to manage inbound traffic and service-to-service communication, collect telemetry, and enforce policies across the cluster. Istio uses Envoy proxies, deployed as sidecars, to manage all inbound and outbound traffic for all services in the mesh, without requiring changes to the underlying services. For more information about Istio, see the Istio documentation.

When you deploy Istio as a cluster add-on using OKE, you can optionally create an Istio ingress gateway to route incoming HTTP and HTTPS requests. Alternatively, you can use other supported ingresses to route traffic to the appropriate service running on the cluster. An ingress gateway is a single point of entry into the service mesh through which all incoming HTTP and HTTPS request traffic flows. Istio uses ingress and egress gateways to configure load balancers running at the edge of a service mesh. The ingress gateway routes traffic to the appropriate service based on the request. Similarly, an egress gateway defines exit points from the service mesh. For more information, see Using the OCI native ingress controller as a cluster add-on rather than as a standalone program.

Metrics Server

The Kubernetes Metrics Server is a cluster-wide aggregator of resource usage data. The Kubernetes Metrics Server collects resource metrics from the kubelet running on each worker node and exposes them in the Kubernetes API server through the Kubernetes Metrics API. Other Kubernetes add-ons require the Kubernetes Metrics Server, including the Horizontal Pod Autoscaler and the Vertical Pod Autoscaler. For more information, see Using the Kubernetes Metrics Server as a cluster add-on rather than as a standalone program.
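To show why autoscalers depend on these metrics, here is a short sketch of the replica calculation documented for the Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to the target, rounded up. The function name and the sample numbers are illustrative, and the 10 percent tolerance is an assumed setting that you should check against your cluster configuration.

```python
import math


def desired_replicas(current_replicas, current_cpu_millicores,
                     target_cpu_millicores, tolerance=0.1):
    """Return the replica count suggested by the observed-to-target metric ratio."""
    ratio = current_cpu_millicores / target_cpu_millicores
    # Skip scaling when the observed value is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Average per-pod CPU usage is the kind of value the Metrics API reports.
print(desired_replicas(4, current_cpu_millicores=200, target_cpu_millicores=100))
print(desired_replicas(4, current_cpu_millicores=105, target_cpu_millicores=100))
print(desired_replicas(4, current_cpu_millicores=50, target_cpu_millicores=100))
```

Without a source for the current metric value, this calculation has no input, which is why the Metrics Server must be running before these autoscalers can act.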

Additional configuration arguments

Along with the add-ons released since launch, OKE has added support for the following cluster add-on configuration arguments, which are applicable to all add-ons. Configuration arguments are key-value pairs that you pass in when you enable a cluster add-on.

- affinity: Kubernetes node affinity is a Kubernetes scheduling concept that allows you to assign a Kubernetes pod, in this case the pods associated with your chosen cluster add-on, to a particular node in a Kubernetes cluster. It helps ensure that your add-on is scheduled onto a node with a matching label.

- containerResources: Container resources allow you to specify the resource quantities that the containers in each cluster add-on request and set resource usage limits that those containers can’t exceed. This control is useful when your use case requires different resource requirements than those configured by default.

- rollingUpdate: Rolling update deployments support running multiple versions of an application at the same time. When you or an autoscaler scales a RollingUpdate deployment in the middle of a rollout (either in progress or paused), the deployment controller balances the extra replicas across the existing active ReplicaSets (ReplicaSets with pods) to mitigate risk. This process is called proportional scaling. This argument allows you to control the behavior of a rolling update by setting values for maxSurge and maxUnavailable.

- topologySpreadConstraints: Topology spread constraints allow you to control how the pods associated with your chosen cluster add-on are spread across your cluster. This feature can help you achieve high availability and efficient resource utilization.
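As a worked example of the rollingUpdate argument, the sketch below computes the pod-count bounds that maxSurge and maxUnavailable impose during a rollout. It follows the rounding rules for Kubernetes Deployments, where a percentage maxSurge rounds up and a percentage maxUnavailable rounds down. The function names and sample values are illustrative.

```python
import math


def resolve(value, replicas, round_up):
    """Resolve an absolute number or a percentage string like "25%" against replicas."""
    if isinstance(value, str) and value.endswith("%"):
        raw = replicas * int(value[:-1]) / 100
        return math.ceil(raw) if round_up else math.floor(raw)
    return int(value)


def rollout_bounds(replicas, max_surge, max_unavailable):
    """Return the most pods that may exist and the fewest that must stay available."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


# 10 replicas at 25% each: up to 13 pods may exist, at least 8 stay available.
print(rollout_bounds(10, "25%", "25%"))
# Absolute values: add one pod at a time and never drop below 4 available.
print(rollout_bounds(4, 1, 0))
```

A higher maxSurge speeds up a rollout at the cost of temporary extra capacity, while a lower maxUnavailable protects availability at the cost of a slower rollout.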

We plan to continue adding support for more add-on arguments, so feel free to reach out if you’d find a particular argument useful for your workloads.

Conclusion

The new updates to our add-on feature give customers more add-ons to choose from and more supported arguments for greater flexibility. We hope that these changes enable you to offload the continued labor of managing more of your cluster software and that they give our advanced users more configuration options to support their complex use cases.

For more information, see the following resources:

- Configuring Cluster Add-ons (documentation)

- OCI Kubernetes Engine (documentation)

- Kubernetes at scale just got easier

- Start your free trial of Oracle Cloud Infrastructure