In today’s rapidly evolving AI landscape, it is important to separate the hype around an AI deployment from its actual ROI. Throughout that process, you must also maintain full control over your data, with the right safeguards in place.

From running two GPUs on the edge through a single device, to running a full hyperscale cloud with a GPU cluster in a customer’s data center, to running the largest and most complex large language models (LLMs) on the world’s largest cloud supercomputer, Oracle’s Distributed Cloud portfolio with AI infrastructure, powered by NVIDIA accelerated computing platforms, enables AI anywhere. A consistent stack from edge to core to cloud offers the right infrastructure for you to develop and deploy innovative sovereign AI solutions and address key data requirements like residency, privacy, and security.

AI Opportunities, Sovereignty Challenges

AI presents enormous opportunities across multiple industries by enabling automation, data-driven decision-making, and enhanced personalization. However, these advancements come with significant data sovereignty challenges as nations and organizations alike seek to control data generated within their borders. Most AI systems rely on vast datasets, raising concerns about where data is stored and who has access to it. Balancing innovation with data control requires clear legal frameworks, secure data-sharing agreements, and the development of AI models that can operate within jurisdictional constraints.

AI also strongly depends on the datasets it draws from, which are often distributed across heterogeneous environments, such as enterprise data centers, factory floors, retail locations, medical facilities, colocation data centers, and public cloud regions, introducing new data integration challenges across systems.

Oracle Cloud Infrastructure (OCI) enables you to run AI-enabled applications on a consistent stack across environments with the same security, the same pricing and service level agreements (SLAs), the same support structure, and access to the same set of hyperscaler cloud services. This cross-environment consistency helps simplify the increasingly complicated deployment possibilities for AI, a complexity that could otherwise undermine the data control that underpins sovereign AI.

Enhance Existing Applications or Build Next-Gen AI-Powered Solutions Anywhere

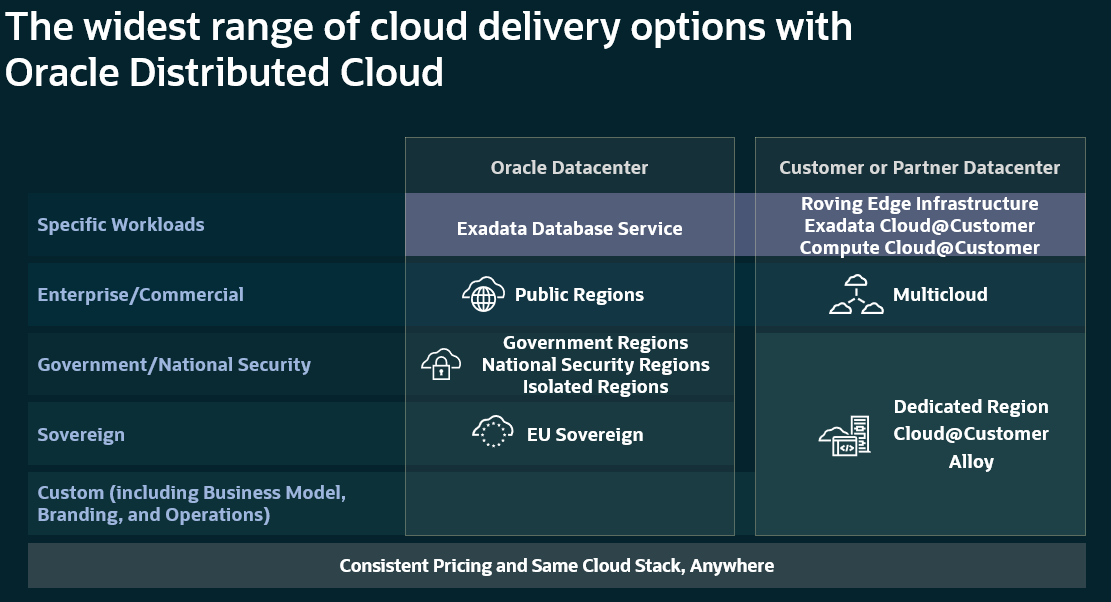

To help you meet your requirements for AI workloads in the public cloud, in your data center, or at edge locations, OCI offers an industry-leading Distributed Cloud portfolio that lets you deploy AI anywhere, enabling you to unlock deeper insights from all of your data and improve controls across environments.

For Sovereign AI, Oracle offers a broad range of customizable controls that can help you address regulatory and sovereignty requirements around data control, residency, and processing locations. Oracle also offers secure and physically isolated cloud regions that can support governments and defense missions.

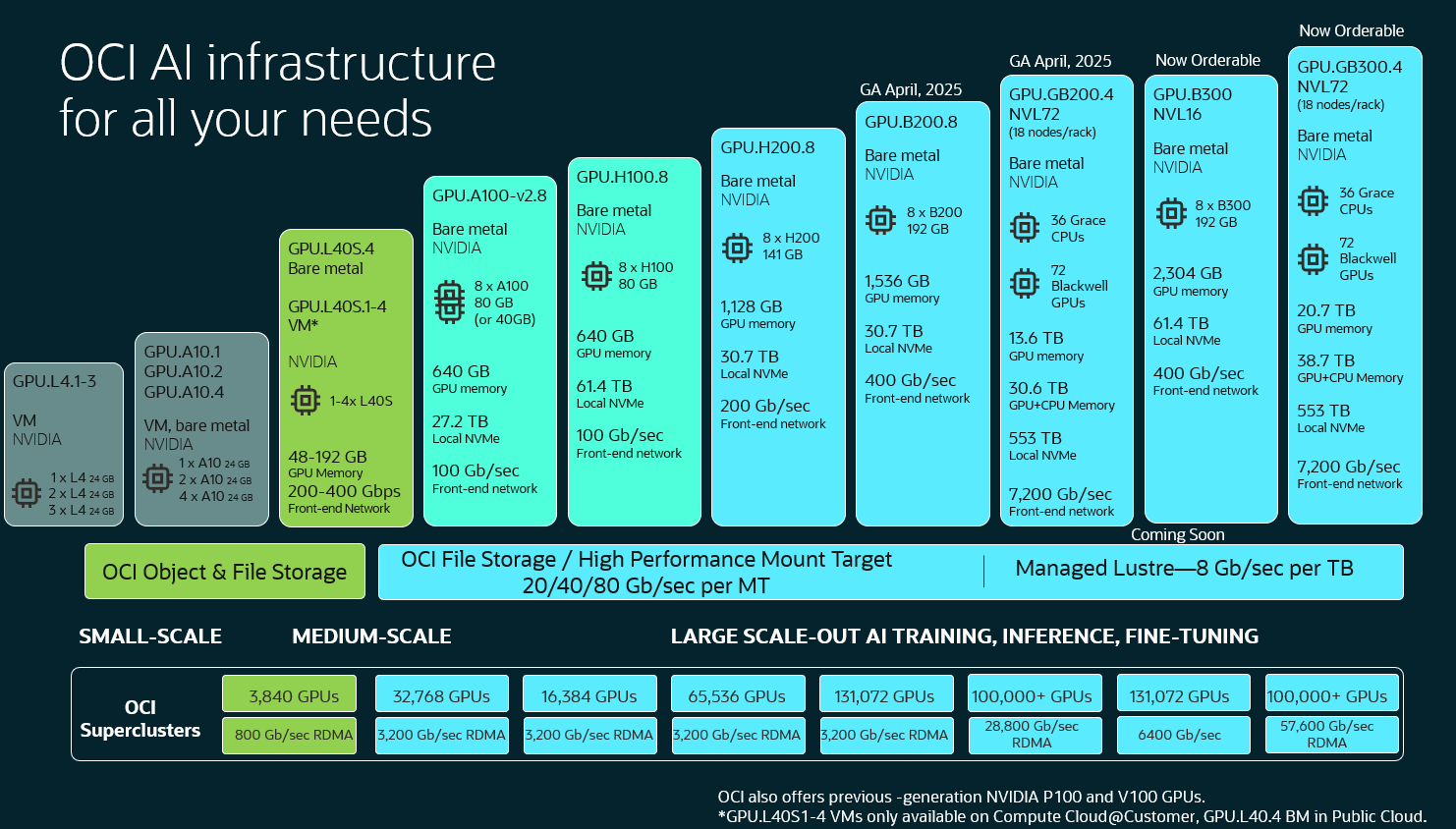

OCI offers a broad lineup of NVIDIA GPU-enabled solutions, designed to handle AI workloads of any scale, from small projects to massive enterprise applications. With open source models and frameworks, customers can use NVIDIA AI Enterprise in a Bring Your Own License (BYOL) model today and can soon use it natively from the Oracle Cloud Console. OCI also offers industry-leading scalability and performance with the world’s first and largest zettascale supercomputer in the cloud, featuring more than 131,000 NVIDIA Blackwell GPUs in a single OCI supercluster.

Oracle is uniquely positioned to provide sovereign AI to both governments and enterprises from edge to core to cloud. Large entities looking to unlock even greater value across their AI deployments can optimize GPU configurations across cloud deployment models in OCI’s Distributed Cloud, enabling the same security, pricing, and SLAs for a seamless experience across environments.

Edge

Because of performance, latency, sovereignty, or security requirements, customers sometimes need their compute, networking, and storage as close to the point of data generation as possible. Oracle’s portfolio of Distributed Cloud edge offerings provides the ability to deploy NVIDIA accelerated compute wherever you need it: in your own data center or even in remote environments. Customers that require ultra-low latency and a ruggedized enclosure can choose their preferred device from Oracle’s Roving Edge Infrastructure. These ruggedized devices deliver cloud computing and storage services at the edge of networks and in disconnected locations, and they can now be configured with up to three NVIDIA L4 GPUs in a single device. These options are ideal for data collection, basic machine learning (ML), edge inferencing, and support of small-scale LLMs of up to 15–20 billion parameters.

An excellent example of the need for low-latency, high-performance edge computing comes from the hospital operating room. True Digital Surgery (TDS) designs, customizes, and develops image processing software for image-guided microsurgery and also designs and develops medical device hardware. TDS chose Oracle’s Roving Edge Infrastructure for fast and secure bidirectional data transfer from its 3D digital surgical microscope in the operating room to the cloud. “True Digital Surgery is committed to improving surgical outcomes through meaningful innovation and data sharing,” said Aidan Foley, chairman and CEO of TDS. “Our collaboration with Oracle marks a significant milestone in our mission to redefine surgical practices in neurosurgery, advance data sharing, and enhance patient care.”

When more edge-deployed GPU processing power is critical, customers can deploy rack-scale AI platforms behind their firewall, in their data center, or in remote locations with Oracle Compute Cloud@Customer. Oracle Compute Cloud@Customer is a fully managed hybrid cloud solution that lets you use OCI services, including compute, storage, networking, and OCI Kubernetes Engine (OKE), and it can be powered with 4–48 NVIDIA L40S GPUs. The solution supports medium-scale AI training, inference, and fine-tuning of LLMs of up to 70 billion parameters. Customers looking to do retrieval-augmented generation (RAG) or semantic search on their business data can pair Compute Cloud@Customer with Exadata Cloud@Customer for an end-to-end solution.
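As a rough sanity check on the model sizes quoted for the two edge tiers above, the following Python sketch estimates the memory needed just to hold a model’s weights and compares it with the combined GPU memory of a configuration. The per-GPU capacities (24 GB for the NVIDIA L4, 48 GB for the NVIDIA L40S) are NVIDIA’s published figures. Everything else is an assumption made for illustration: the bytes-per-parameter values, the function names, and the choice to ignore KV cache, activations, and optimizer state. It is not Oracle sizing guidance, and real deployments need headroom beyond the weights.

```python
# Published per-GPU memory capacities in GB for the GPUs named above.
GPU_MEMORY_GB = {"L4": 24, "L40S": 48}

# Assumed storage cost per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str, gpu: str, count: int) -> bool:
    """True if the weights alone fit in the combined memory of `count` GPUs."""
    return weights_gb(params_billions, precision) <= GPU_MEMORY_GB[gpu] * count


if __name__ == "__main__":
    # Hypothetical configurations taken from the upper bounds quoted in the text.
    scenarios = [
        ("Roving Edge, 3x L4", 20, "L4", 3),
        ("Compute Cloud@Customer, 4x L40S", 70, "L40S", 4),
    ]
    for label, params, gpu, count in scenarios:
        have = GPU_MEMORY_GB[gpu] * count
        for precision in BYTES_PER_PARAM:
            need = weights_gb(params, precision)
            print(f"{label}: {params}B @ {precision} needs ~{need:.0f} GB of {have} GB")
```

Under these assumptions, the weights of a 20-billion-parameter model in 16-bit precision fit within three L4 GPUs, while a 70-billion-parameter model in 16-bit precision needs more than two L40S GPUs for the weights alone, before any working memory is counted.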

RSS Hydro, a geospatial and modeling intelligence platform which is redefining flood and wildfire risk management to positively impact the world, partnered with NVIDIA and OCI to develop capabilities to predict and respond to extreme weather conditions that can help to prevent the loss of life, property, and infrastructure. Guy Schumann, founder and CEO, states: “RSS Hydro is used by national governments, local municipalities, utility and infrastructure companies, and enterprises with high-value assets in disaster mitigation and protection of critical infrastructure. OCI’s AI Infrastructure accelerated by NVIDIA L40S allows us to train our FloodSENSE ML model for accurate global flood detection in the most cost-effective and highly performant manner.”

Core: Enterprise Data Centers

Like customers with specific edge requirements, some customers want to reap the benefits of the cloud’s agility, scalability, and economics without going to the public cloud. Oracle uniquely enables customers to deploy their own complete OCI cloud on-premises for maximum data residency and sovereign AI. Through OCI Dedicated Region, Oracle is the only hyperscaler that enables a customer to deploy an entire cloud region, with all 150+ core services available in the public cloud, in their own data center. This offering includes the same services, security, SLAs, and support structure, including the thousands of annual patches, updates, and upgrades Oracle applies to its own public cloud regions, at the same price as in the public cloud.

e& UAE announced its deployment of NVIDIA Hopper GPU clusters within its OCI Dedicated Region, hosted at e& UAE data centers to facilitate the localization and development of cutting-edge AI services, elevating the standard of offerings across its product portfolio and business operations. Hazem Gebili, senior vice president, enterprise service solution, e& UAE, remarked, “At e& UAE, we are committed to pioneering AI-driven innovation while ensuring data sovereignty, security, and operational control. The strategic collaboration with Oracle and NVIDIA enables us to accelerate our sovereign AI initiatives, empowering us to develop and deploy cutting-edge AI services tailored for government, enterprise, and smart city applications. By leveraging OCI Dedicated Region across our two UAE datacenters, we gain seamless access to over 150 on-premises cloud services, allowing us to modernize mission-critical operations and business support systems with AI-powered efficiencies. The integration of NVIDIA Hopper GPUs and NVIDIA AI Enterprise further strengthens our capabilities, enabling us to rapidly scale, innovate, and deliver next-generation generative AI solutions that redefine customer experiences and business transformation in the UAE.”

Oracle Alloy enables partners to transform their business model and become cloud service providers (CSPs) themselves, selling OCI’s cloud offerings in their own customized, branded service model offerings, with the guidance, experience, and support of OCI behind them.

After the successful migration of its financial software-as-a-service (SaaS) solutions to OCI Dedicated Region, Nomura Research Institute (NRI) experienced growing demand from its customers for managed cloud solutions that could support financial governance and digital sovereignty requirements, and launched its own Oracle Alloy cloud. “We rely on Oracle Alloy in our Tokyo and Osaka datacenters to advance our multicloud strategy and help our customers drive innovation,” said Shigekazu Ohmoto, senior corporate managing director, NRI. “To provide our customers with high-performance infrastructure within a dedicated cloud environment, we’ve deployed NVIDIA Hopper GPUs and plan to deploy NVIDIA AI Enterprise. This will help support our customer’s enterprise AI use cases, including generative AI and large language model development, while adhering to stringent governance, security, and data sovereignty requirements.”

Cloud: Public, Government, Sovereign

OCI offers a wide variety of deployment models and options for hyperscale cloud workloads. Each offering includes over 150 OCI services at the same price, with the same SLAs and support as in the public cloud. OCI provides these offerings based on customers’ sovereignty needs. Whether for specific government requirements, EU compliance, or even top-secret security and data isolation requirements, Oracle provides multiple deployment options to enable sovereign AI anywhere.

OCI was the first cloud provider to introduce bare metal instances, which dedicate 100% of resources to customer workloads while improving isolation and security. Only your workloads run on these dedicated instances, reducing overhead and improving your privacy posture. To help address data residency requirements, OCI offers a unique region-centric realm design, for effective policies and residency description, with Zero Trust Security to enable data transfer to other regions. To learn more about the key tenets that Oracle believes cloud providers should offer to help address sovereign AI requirements, read Oracle’s “Five Key Pillars to Sovereign AI” brief.

OCI offers the choice of using its public cloud infrastructure for its AI capabilities. Zoom Communications, Inc., an AI-first work platform for human connection, is using OCI to support Zoom AI Companion in Saudi Arabia. To support compliance with Saudi Arabian regulations while maintaining top-tier performance, Zoom optimized its AI models to run on efficient OCI GPU shapes. “Saudi Arabia is a key market for Zoom, and we continue to invest in Zoom Workplace and AI Companion to provide solutions that meet the unique needs of businesses here,” said Velchamy Sankarlingam, president of product and engineering at Zoom. “By optimizing AI Companion to operate efficiently with GPU shapes accelerated by NVIDIA in a local OCI region, we’re enabling Saudi companies to take full advantage of AI without facing constraints.”

For government workloads, Oracle offers Government Cloud regions that are designed for the sensitive information governments handle. Oracle also operates US National Security regions and Isolated Regions, which are disconnected from the internet to support classified processing needs of various government departments. Both government regions and National Security regions are operated by personnel who have achieved government security clearance. OCI also offers an EU Sovereign Cloud in Frankfurt and Madrid, with full cloud regions physically and logically separated from other public cloud regions, operated by dedicated EU Sovereign legal entities with EU-resident personnel only. Using this kind of regionally specific, sovereignty-first infrastructure and operational capability enables companies not only to operate with digital sovereignty but also to train their models on local languages, with the knowledge that their data and any associated personally identifiable information (PII) stay within predetermined geographic boundaries.

An applied AI startup based in Brazil, WideLabs chose OCI to train the country’s first sovereign AI, Amazônia IA. WideLabs’ bespoke models include speech-to-text that accurately reflects regional dialects, multimodal text and image integration, and translation including sentiment analysis. Additionally, WideLabs introduced Amazônia360, a pioneering AI platform as a service (PaaS) tailored for the corporate market. Built on all of these AI models, this platform is Brazil’s first sovereign AI PaaS, enabling public and private organizations to maintain full control over their data, customize AI solutions to their specific needs, and use it as the central AI brain of the organization. “OCI’s AI infrastructure provides us with the highest efficiency for training and deploying our LLMs,” said Nelson Leoni, CEO of WideLabs. “Its scale, flexibility, and robust security measures are essential for driving innovation in. The sovereignty and data security offered by Oracle ensure our clients’ information is always protected in our country.”

Customers in need of the most AI processing power (for models with over a trillion parameters) can choose from multiple options across OCI’s AI Superclusters, available as part of Oracle’s Distributed Cloud. OCI Superclusters provide support for over 100,000 of the latest NVIDIA superchips in a single cluster, continuing to go beyond the scalability of other cloud providers. OCI Superclusters are offered for a variety of GPU instances.

Next Steps

OCI’s Distributed Cloud portfolio integrates with Oracle Database, including Oracle AI Vector Search, to bring AI-powered similarity search to your business data without managing and integrating multiple databases or compromising functionality, security, and consistency. Native AI vector search capabilities can also help LLMs deliver more accurate and contextually relevant results for enterprise use cases using retrieval-augmented generation (RAG) on your business data. Oracle also offers a full suite of AI services built for business, helping you make better decisions faster and empowering your workforce to work more effectively. With classic and generative AI embedded in Oracle Fusion Cloud Applications and Oracle generative AI capabilities, customers can instantly access AI outcomes wherever they’re needed. Innovate with your choice of open source or proprietary LLMs, and use embedded AI as you need it across the full stack: apps, infrastructure, and more.
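To make the idea of similarity search behind RAG concrete, here is a minimal, generic Python sketch of ranking documents by cosine similarity between embedding vectors. It does not use or represent the Oracle AI Vector Search API. The three-dimensional vectors, the document titles, and the function names are all hypothetical, chosen only so the example runs on its own; real embeddings come from an embedding model and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, documents, k=2):
    """Return the k (score, text) pairs whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), text) for text, vec in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; a real system would compute these with an embedding model.
DOCUMENTS = [
    ("Invoice payment terms", [0.9, 0.1, 0.0]),
    ("GPU cluster maintenance schedule", [0.0, 0.2, 0.9]),
    ("Supplier payment policy", [0.8, 0.3, 0.1]),
]

if __name__ == "__main__":
    query = [1.0, 0.2, 0.0]  # stands in for an embedded question about payments
    for score, text in top_k(query, DOCUMENTS):
        print(f"{score:.3f}  {text}")
```

In a RAG flow, the top-ranked passages would be passed to the LLM as context alongside the user’s question. Running the search inside the database that already holds the business data is what avoids the separate vector store described above.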

To get started on your own journey with AI, read more about the 5 Pillars of AI Sovereignty, and consider where you are and where you want to go. Focus on the ROI associated with your planned AI deployments and learn more about Oracle’s Distributed Cloud to determine a logical deployment approach. When you want more guidance or support, reach out to an expert on OCI and NVIDIA’s joint offerings to learn how you can train ML and AI models with up to 40% cost savings.

Learn more about Oracle’s other news and announcements from NVIDIA GTC 2025:

Read the Press Releases

- Oracle and NVIDIA Collaborate to Help Enterprises Accelerate Agentic AI Inference

- Oracle Expands Distributed Cloud Capabilities with NVIDIA AI Enterprise

Check out additional blogs:

- Accelerating AI Vector Search in Oracle Database 23ai with NVIDIA GPUs

- Advancing AI Innovation: NVIDIA AI Enterprise and NVIDIA NIM on OCI

- Announcing General Availability of NVIDIA GPU Device Plugin Add-On for OKE

- Beyond Structured Data: The AI Revolution in Market Data Analytics

- DeweyVision Transforms Media Discovery with Oracle AI Vector Search

- First Principles: Inside Zettascale OCI Superclusters for next-gen AI

- Go from Zero to AI Hero – Deploy Your AI Workloads quickly on OCI