Today, we’re announcing the general availability of version 2.11 in Oracle Cloud Infrastructure (OCI) Search with OpenSearch. This update introduces AI capabilities through retrieval augmented generation (RAG) pipelines, vector database, conversational and semantic search enhancements, security analytics, and observability features.

The OpenSearch project launched in April 2021 as a fork of the Apache 2.0-licensed Elasticsearch 7.10.2 and Kibana 7.10.2. OpenSearch has been downloaded more than 500 million times and is recognized as a leading search engine among developers. Thanks to a strong community that wanted a powerful search engine without having to pay a license fee, OpenSearch has evolved beyond pure search, adding AI, application observability, and security analytics to complement its search capabilities.

OCI introduced OCI Search with OpenSearch in May 2022 as a fully managed service that provides developers with all the advantages of OpenSearch combined with OCI’s ultra high-performance architecture. This strategy has proven attractive to our customers because they also gain OCI’s superior price-performance.

Let’s explore some of the highlights of features included in version 2.11 of OCI Search with OpenSearch.

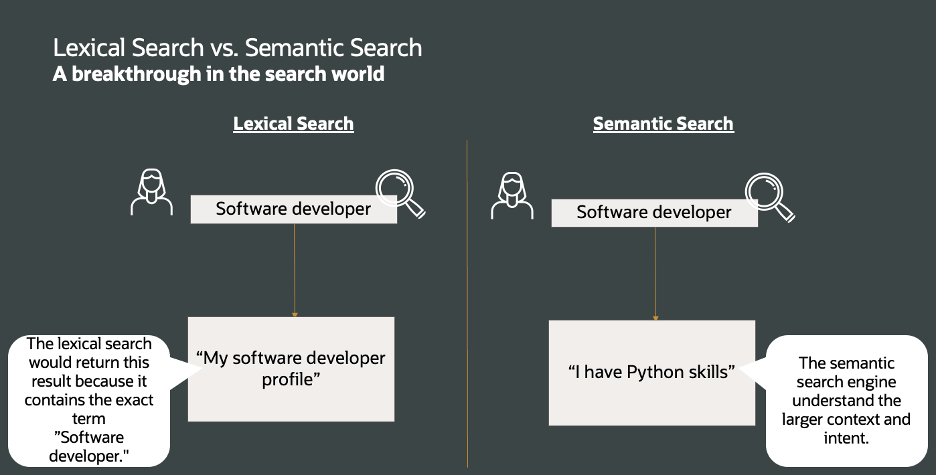

Semantic search

OCI Search with OpenSearch significantly enhances the accuracy of search results by combining vector database technology, the k-NN (k-nearest neighbors) plugin, and a RAG pipeline, effectively bridging the gap between large language models (LLMs) and private corporate data.

Thanks to semantic search, you no longer have to manually create lists of synonyms or heavy indexes of all your content. Semantic search now attempts to parse the intent, context, and other nuances of written material to deliver more accurate results. You can perform this search on your structured (text) and unstructured (images) content.
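To make the vector search idea more concrete, here is a minimal sketch of the two request bodies a k-NN search typically involves in OpenSearch: an index definition with a `knn_vector` field, and a `knn` query against it. The field name `embedding`, the toy dimension of 4, and the helper function names are assumptions chosen for illustration; a real deployment would use the dimension of its embedding model, and the bodies would be sent to the cluster with an OpenSearch client.

```python
import json


def knn_index_body(field: str, dimension: int) -> dict:
    """Build an index definition with k-NN enabled and one vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                # knn_vector fields store fixed-length embeddings
                field: {"type": "knn_vector", "dimension": dimension}
            }
        },
    }


def knn_query_body(field: str, query_vector: list, k: int) -> dict:
    """Build a query that asks for the k vectors nearest to query_vector."""
    return {
        "size": k,
        "query": {"knn": {field: {"vector": list(query_vector), "k": k}}},
    }


# Hypothetical field name and a toy 4-dimensional embedding.
index_body = knn_index_body("embedding", 4)
query_body = knn_query_body("embedding", [0.1, 0.2, 0.3, 0.4], k=3)

print(json.dumps(index_body, indent=2))
print(json.dumps(query_body, indent=2))
```

The query vector would normally come from the same embedding model that was used to index the documents, so that the query and the content share one vector space.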

The benefits of semantic search in OCI Search with OpenSearch include the following examples:

- Enhanced accuracy: Analyzes context and intent

- Personalized results: Accounts for your search history

- Time efficiency: You find what you’re looking for faster

- Improved user experience: Provides a more intuitive search

Generative AI for conversational search

LLMs have transformed the face of technology. With version 2.11, you can now use natural language search through seamless integration of OCI Search and OCI Generative AI and Generative AI Agent services that use Cohere or the Meta Llama 2 LLM.
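As a rough illustration of what a RAG pipeline does before the LLM is called, the sketch below ranks retrieved passages by score and assembles them into a grounded prompt. It is a conceptual example only, not the service's implementation: the `Passage` type, the `build_rag_prompt` function, the prompt wording, and the sample passages are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    text: str
    score: float  # relevance score returned by the search step


def build_rag_prompt(question: str, passages: list, max_passages: int = 3) -> str:
    """Keep the highest-scoring passages and place them ahead of the question."""
    top = sorted(passages, key=lambda p: p.score, reverse=True)[:max_passages]
    context = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(top, start=1))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical search hits for illustration.
passages = [
    Passage("Expense reports are due by the 5th of each month.", 0.91),
    Passage("The cafeteria opens at 8 a.m.", 0.12),
    Passage("Late expense reports need manager approval.", 0.77),
]

prompt = build_rag_prompt("When are expense reports due?", passages, max_passages=2)
print(prompt)
```

Limiting the prompt to the top-scoring passages keeps the LLM focused on the private data most relevant to the question.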

Conversational Search is a built-in conversational service that uses OCI Generative AI with Cohere to simplify the user experience. The following graphic shows a short illustration. For a brief demonstration, see Chatbot with RAG Using OCI Generative AI Agents.

Observability

A new Observability dashboard simplifies correlation and analysis of logs, metrics, and trace telemetry and supports fast time-to-resolution and a better experience for your end users. Log analysis has been the main use of OpenSearch by sysadmins, so that they can properly monitor their environment. With this new release, sysadmins can now go further and automate troubleshooting in real time, thanks to features such as anomaly detection, performance alerts, and data correlation.

OpenSearch Observability tools help you detect, diagnose, and remedy issues that affect the performance, scalability, or availability of your software or infrastructure. Observability in OpenSearch 2.11 unifies metrics, logs, traces, and profiling, delivering AI- and machine learning (ML)-powered insights for enhanced performance optimization.

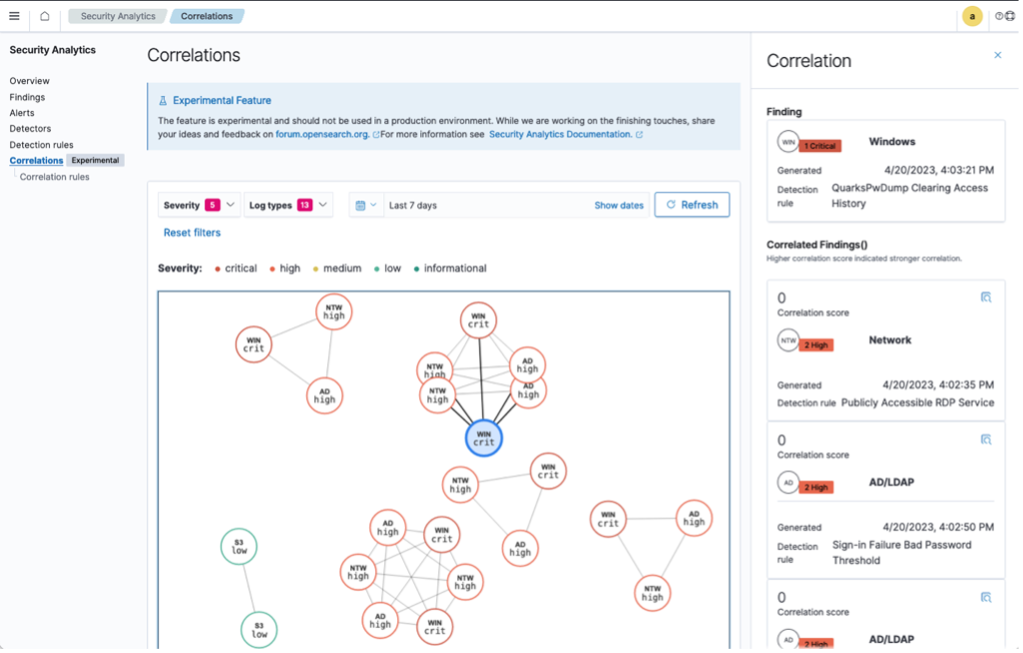

Security

A new out-of-the-box Security Analytics feature helps you detect, investigate, and respond to threats in near-real time. You can gather data from a wide variety of sources, correlate it, and detect potential threats early with prepackaged or customizable detection rules that follow a generic, open source format. You can create your own notification process so that your security team is alerted in near-real time of potential issues.

OpenSearch Security Analytics facilitates investigation and response to security threats and automates threat detection and analysis using rule-based and ML-assisted controls.

Testimonials

Cohere

“Cohere’s partnership with Oracle enables enterprises to supercharge their businesses with AI,” said Abhishek Sinha, VP of Product at Cohere. “We’re excited about the latest upgrade to OCI Search with OpenSearch, making it easy to integrate Cohere’s Command and Embed LLMs via OCI’s Generative AI service. This update helps businesses stay competitive with accurate and scalable data retrieval systems.”

Prophecy

“OCI Search with OpenSearch is a key component of our EMITE solution,” says Steve Challans, CISO of Prophecy International. “It has proven to be not only scalable but also cost-effective, saving us over 50% compared to alternatives. Moreover, it is up to 20% faster than other solutions. Our collaboration with OCI OpenSearch team has been outstanding. We’re excited for the upcoming OpenSearch 2.11 features, especially the semantic search and OCI Generative AI service integration.”

Conclusion

These highlights provide an overview of the most important updates. Additional improvements included in version 2.11 of OCI Search with OpenSearch can be found here.

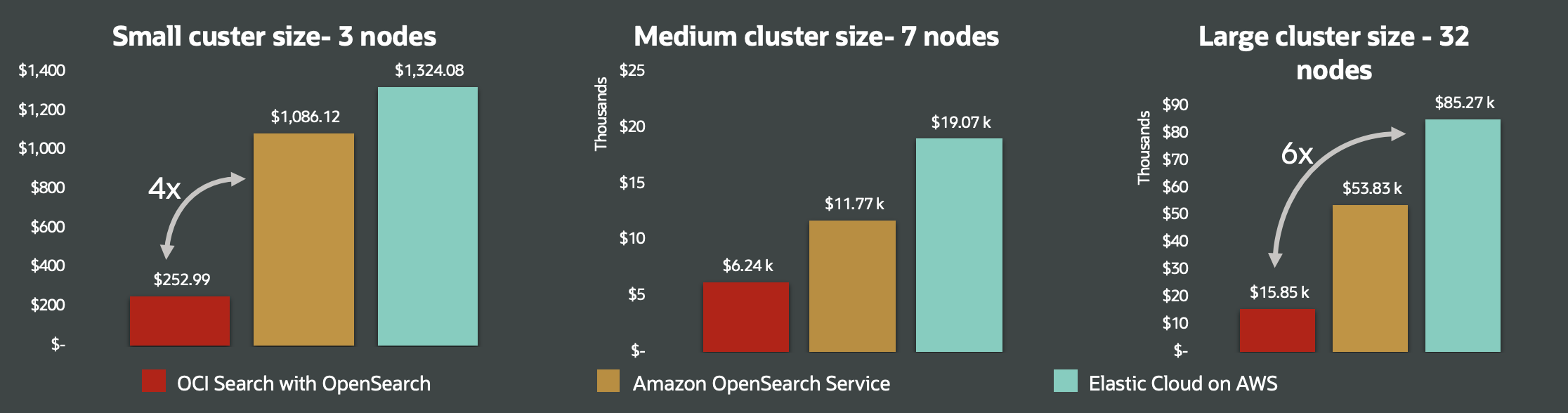

This upgrade comes at no extra charge. OCI continues its commitment to straightforward, simple global pricing: the OCI Search price remains based on the number of nodes rather than the number of cores, which is how other hyperscalers base their pricing. Below are some typical price comparisons(*) for your reference:

Starting today, OCI Search with OpenSearch v2.11 will roll out across all OCI regions with semantic search integration, expanded security and observability options, and enhanced plugin support. We encourage you to upgrade or give it a try, so that you can deliver more value faster from your data for your organization. Sign up to OCI for free here.

Want to learn more on OpenSearch 2.11 on OCI? Join our webinar on Cloud Customer Connect on March 14 at 8 a.m. PT.

(*): Average price comparison for small, medium, and large clusters with 16, 224, and 1,024 vCPUs, using public pricing in AWS US-East on March 1, 2024, and assuming that 2 vCPUs have performance comparable to 1 OCPU.
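The sizing assumption in the footnote can be worked through as simple arithmetic. The sketch below converts the three vCPU cluster sizes to their OCPU equivalents using the stated 2:1 ratio; the variable and function names are illustrative, and no prices are computed because the footnote does not give any.

```python
# Ratio stated in the footnote: 2 vCPUs are treated as comparable to 1 OCPU.
VCPUS_PER_OCPU = 2


def vcpus_to_ocpus(vcpus: int) -> float:
    """Convert a vCPU count to the OCPU count assumed comparable."""
    return vcpus / VCPUS_PER_OCPU


# Cluster sizes from the footnote.
cluster_vcpus = {"small": 16, "medium": 224, "large": 1024}
cluster_ocpus = {name: vcpus_to_ocpus(v) for name, v in cluster_vcpus.items()}

for name, ocpus in cluster_ocpus.items():
    print(f"{name}: {cluster_vcpus[name]} vCPUs ~ {ocpus:g} OCPUs")
```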