In this follow-up to our post about Deploying Lustre on Oracle Cloud Infrastructure, we cover the results of running the industry-recognized IOR benchmark against a Lustre file system on Oracle Cloud Infrastructure, along with the cost of deploying it.

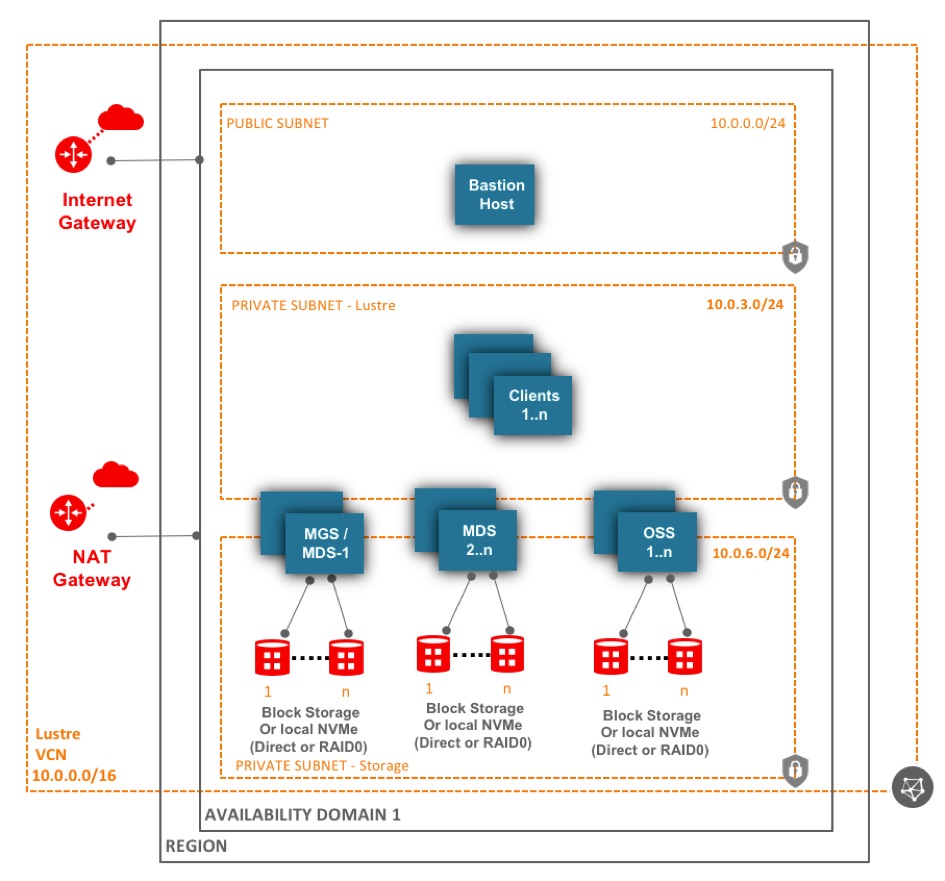

Architecture

The Oracle Cloud HPC team tuned the Lustre file system and cloud infrastructure to provide high-performance file servers at scale by using Oracle Cloud Infrastructure’s bare metal Compute, a 25-Gbps network, and network-attached Block Storage or NVMe SSDs locally attached to compute nodes.

This solution meets the needs of high-performance computing (HPC) workloads like machine learning/artificial intelligence (ML/AI), electronic design automation (EDA), and oil and gas applications, all of which see throughput as a common bottleneck. This solution architecture deploys in minutes, scales easily, costs a few cents per gigabyte per month (compute and storage combined), and provides performance that’s consistent with on-premises HPC file servers.

Figure 1: Lustre File System Architecture on Oracle Cloud Infrastructure

Performance

As mentioned in our previous blog post, you can deploy Lustre on Oracle Cloud Infrastructure by using an automated Terraform template. In addition to provisioning the infrastructure and deploying Lustre, the template tunes the infrastructure and Lustre file system for the best performance.

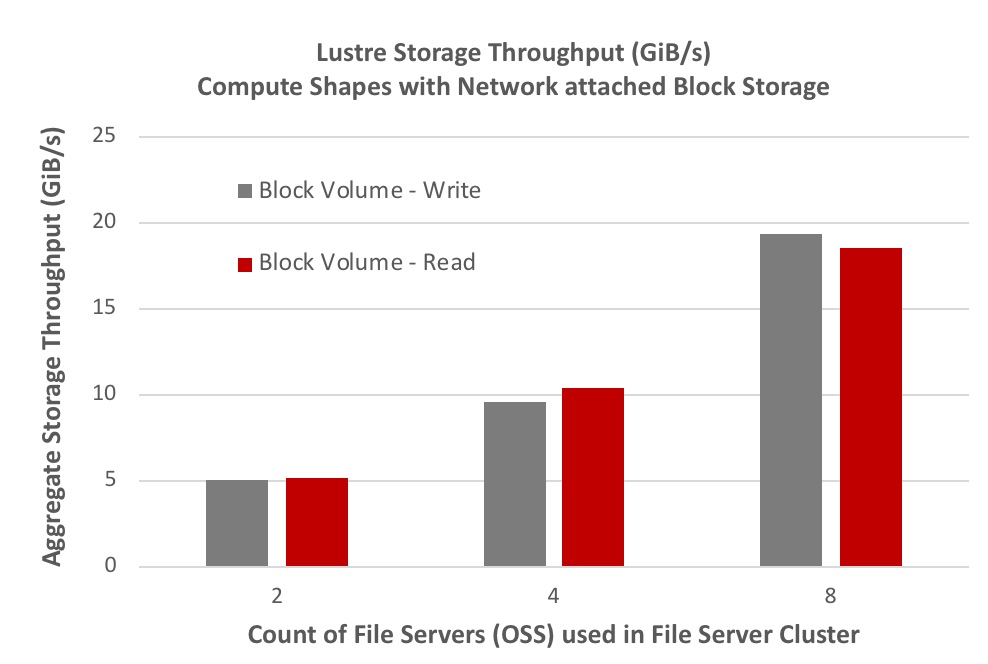

Working with Open Source Lustre, our solution achieves performance that hasn’t been seen in other public clouds. The following graph shows the throughput achieved by different-sized file servers while running the IOR performance benchmark. For example, on a configuration as small as two Object Storage Server (OSS) nodes, the Lustre file system on Oracle Cloud Infrastructure provides over 5 GiB/s throughput.

Figure 2: Aggregated Storage Throughput (Block Volume) Versus Number of File Servers

Performance with Network-Attached Block Volumes

Figure 2 shows the aggregate storage throughput of a Lustre file system built using block volumes network-attached to a bare metal compute node with two physical NICs (25 Gbps each). One NIC was used for storage traffic to block volumes, and the other NIC was used for LNET. Object Storage Servers were built using a BM.DenseIO2.52 compute shape, but you can also use the BM.Standard2.52 compute shape. A total of 16 block volumes (balanced elastic performance tier) of 800 GB each were configured as RAID0 to create one Object Storage Target (OST) on each OSS.
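As a rough sanity check on these numbers, the following sketch computes the theoretical line-rate ceiling of one 25-Gbps NIC per OSS. It assumes that a single NIC bounds each server's throughput and ignores TCP/IP and Lustre protocol overhead, so it is an upper bound rather than a measured result. The function name is ours, for illustration only.

```python
# Theoretical network ceiling per OSS, assuming one 25-Gbps NIC is the
# bottleneck and ignoring all protocol overhead (an upper bound only).
BYTES_PER_SEC_PER_GBPS = 1e9 / 8   # 1 Gbps = 125,000,000 bytes/s
GIB = 2**30                        # bytes in one GiB


def nic_ceiling_gib_per_sec(nic_gbps: float, oss_count: int) -> float:
    """Aggregate line rate in GiB/s for `oss_count` servers, one NIC each."""
    return nic_gbps * BYTES_PER_SEC_PER_GBPS * oss_count / GIB


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(f"{n} OSS: {nic_ceiling_gib_per_sec(25, n):.2f} GiB/s")
```

Under these assumptions, two OSS nodes top out at roughly 5.8 GiB/s of line rate.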

We can provide this level of performance because our block storage is backed by NVMe SSD media, and our data centers have a fast, flat network architecture. We believe so strongly in the capabilities of our storage offering that our block storage performance is backed by a unique performance SLA.

In the preceding performance test, block volumes with the balanced elastic performance tier were used. This option provides linear performance scaling at 60 IOPS/GB up to a maximum of 25,000 IOPS per volume. Throughput scales at 480 KB/s per GB up to a maximum of 480 MB/s per volume. All volumes 1 TB and greater provide the maximum 480 MB/s on the balanced elastic performance tier.

You can also use block volumes with the higher performance elastic tier. This option provides the steepest linear performance scaling, at 75 IOPS/GB up to a maximum of 35,000 IOPS per volume. Throughput also scales at the highest rate, 600 KB/s per GB, up to a maximum of 480 MB/s per volume. All volumes 800 GB and greater provide the maximum 480 MB/s throughput.
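The two tiers follow the same rule: performance grows linearly with volume size until it reaches a per-volume cap. The sketch below applies that rule using the figures quoted above. The `Tier` class and `volume_limits` function are hypothetical names for illustration, not part of any Oracle SDK, and the conversion assumes 1 MB = 1,000 KB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """Per-volume scaling figures for one elastic performance tier."""
    iops_per_gb: float
    max_iops: float
    kb_per_sec_per_gb: float
    max_mb_per_sec: float


# Figures taken from the text; names are illustrative only.
BALANCED = Tier(iops_per_gb=60, max_iops=25_000,
                kb_per_sec_per_gb=480, max_mb_per_sec=480)
HIGHER_PERFORMANCE = Tier(iops_per_gb=75, max_iops=35_000,
                          kb_per_sec_per_gb=600, max_mb_per_sec=480)


def volume_limits(size_gb: float, tier: Tier) -> tuple[float, float]:
    """Return (IOPS, MB/s) for one volume: linear scaling, then a cap."""
    iops = min(size_gb * tier.iops_per_gb, tier.max_iops)
    # Assumes 1 MB = 1,000 KB when converting KB/s to MB/s.
    mb_per_sec = min(size_gb * tier.kb_per_sec_per_gb / 1000,
                     tier.max_mb_per_sec)
    return iops, mb_per_sec


if __name__ == "__main__":
    for name, tier in (("balanced", BALANCED),
                       ("higher performance", HIGHER_PERFORMANCE)):
        iops, mbps = volume_limits(800, tier)
        print(f"800 GB, {name}: {iops:,.0f} IOPS, {mbps:.0f} MB/s")
```

For the 800 GB balanced-tier volumes used in the test, this gives 384 MB/s per volume. Sixteen such volumes add up to 6,144 MB/s on paper, which is more than a 25-Gbps NIC can carry (about 3,125 MB/s), so under these assumptions the network rather than the volumes would likely be the limiting factor on each OSS.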

A Lustre Management Server (MGS) and Metadata Server (MDS) were deployed on the same compute node (BM.DenseIO2.52) with one Metadata Target (MDT) using a network-attached block volume of 800 GB. Lustre clients were deployed on VM.Standard2.24 compute shapes. LNET was configured to use 25 Gbps TCP/IP (ksocklnd), and Lustre was configured to use Bulk RPC (16 MB), a stripe count of 1, and a stripe size of 1 MB. For more information about configuration and tuning, see our automated Terraform deployment template.
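To illustrate what the stripe settings mean, here is a minimal sketch of Lustre's default round-robin (RAID0-style) striping: a file is cut into chunks of `stripe_size` bytes, and the chunks are distributed in turn across `stripe_count` objects. The function is our own simplification, and the index it returns is relative to the file's layout, not an absolute OST number.

```python
MIB = 2**20  # bytes in one MiB


def stripe_index(offset: int, stripe_size: int, stripe_count: int) -> int:
    """Which of the file's stripes (0-based) holds the byte at `offset`,
    assuming simple round-robin striping."""
    return (offset // stripe_size) % stripe_count


if __name__ == "__main__":
    offsets = [0, 1 * MIB, 2 * MIB, 3 * MIB]
    # Stripe count 1 (the setting used in this test): every chunk of a
    # file lands on the same object, so one file uses a single OST.
    print([stripe_index(o, MIB, 1) for o in offsets])
    # Stripe count 4, shown only for contrast: chunks rotate across objects.
    print([stripe_index(o, MIB, 4) for o in offsets])
```

With a stripe count of 1, each file lives on a single OST, so aggregate throughput depends on many files being spread across the available OSTs rather than on any one file being split.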

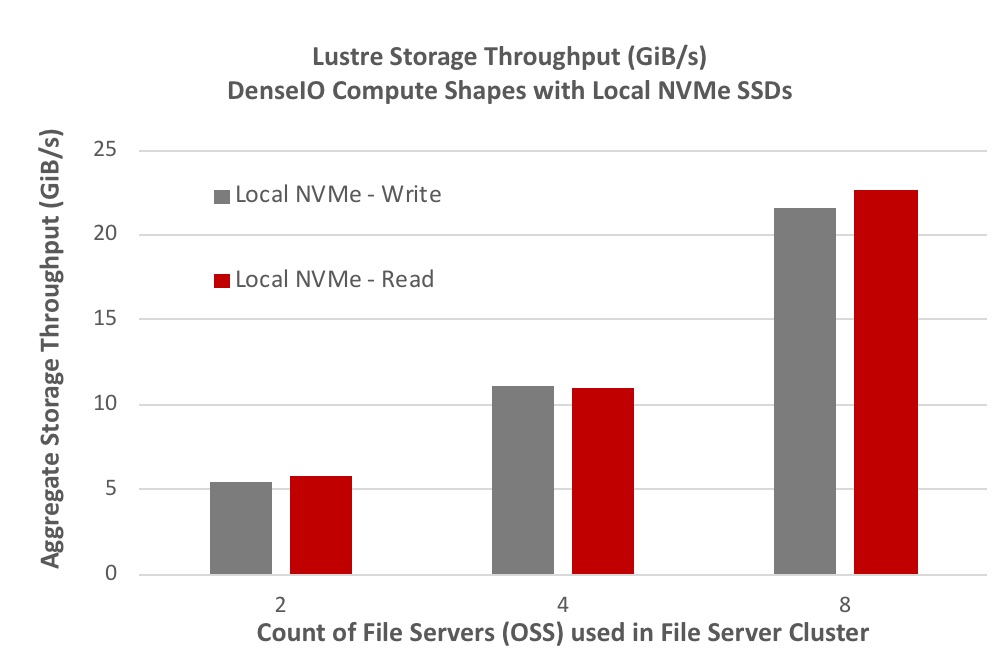

Performance with Local NVMe SSDs

Figure 3 shows the aggregate storage throughput of a Lustre file system built using NVMe SSDs locally attached to a bare metal DenseIO compute node with two physical NICs (25 Gbps each). One NIC was used for storage traffic to block volumes, and the other NIC was used for LNET. Object Storage Servers were built using a BM.DenseIO2.52 compute shape, which has 8 local NVMe SSDs of 6.4 TB each. SSDs were combined to create a RAID0 OST of 51.2 TB on each OSS.

Figure 3: Aggregated Storage Throughput (Local NVMe SSD) Versus Number of File Servers

A Lustre MGS and MDS were deployed on the same compute node (BM.DenseIO2.52) with one MDT of 51.2 TB using 8 local NVMe SSDs of 6.4 TB each (RAID0). Lustre clients were deployed on VM.Standard2.24 compute shapes. LNET was configured to use 25 Gbps TCP/IP (ksocklnd), and Lustre was configured to use Bulk RPC (16 MB), a stripe count of 1, and a stripe size of 1 MB. For more information about configuration and tuning, see our automated Terraform deployment template.

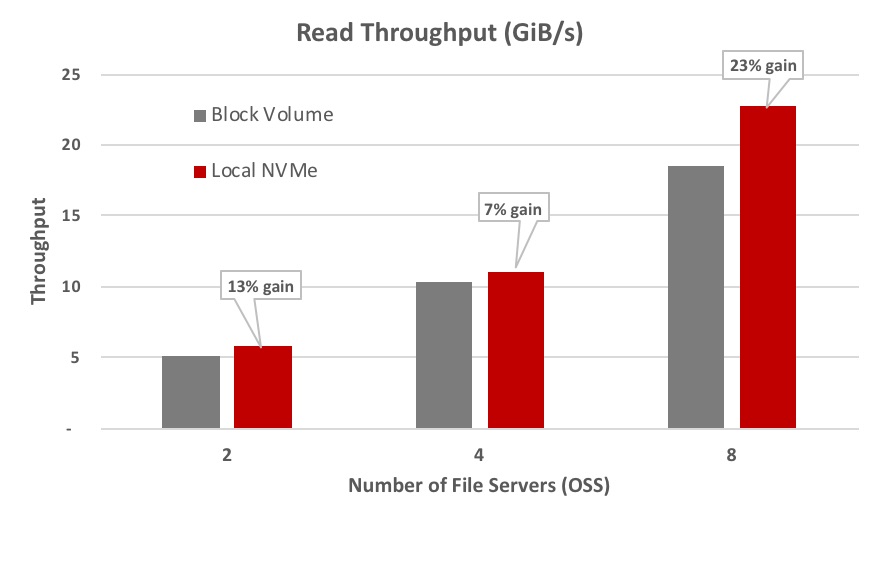

Higher Throughput Using Local NVMe Versus Block Storage

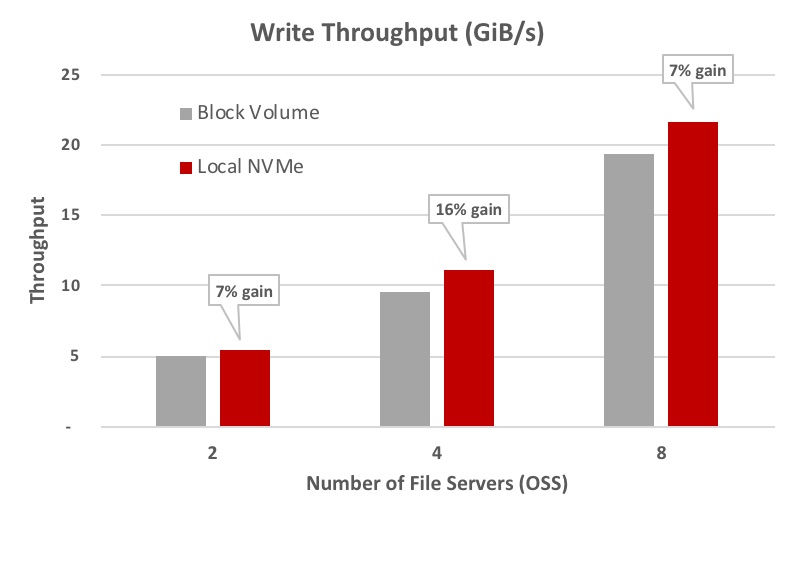

Our IOR performance benchmark showed that using local NVMe SSDs, directly attached to file server nodes, provided higher storage throughput (7% to 23% gain) for read/write compared to network-attached block volume storage. The following graphs show the additional gain in read/write throughput when local NVMe SSDs are used.

Figure 4: Read Throughput Using Local NVMe SSDs Versus Network-Attached Block Volumes

Figure 5: Write Throughput Using Local NVMe SSD Versus Network-Attached Block Volumes

Amazing Performance at One-Tenth the Price

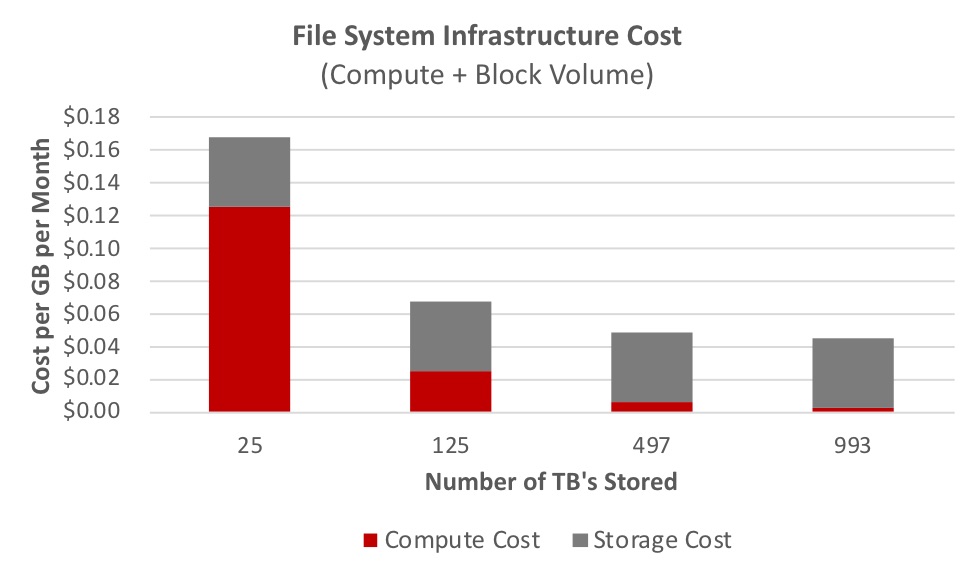

Oracle Cloud Infrastructure’s performance and scalability directly affect the bottom line for file servers. As you increase storage capacity and throughput, the cost per GB per month decreases. This cost includes both compute and storage. The following graph shows the cost per GB per month for a file system using Intel and AMD bare metal compute shapes.

Figure 6: Cost per GB per Month for a Bare Metal Compute Shape with Block Volume Storage

If you plan to use a bare metal DenseIO shape with 51.2 TB of local NVMe SSDs, then the cost per GB per month is $0.095 for compute and storage combined.
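To put that rate in monthly terms, the following back-of-the-envelope sketch multiplies it out. It assumes that the $0.095 rate applies to the full 51.2 TB of raw capacity on one node and that 1 TB = 1,000 GB; neither assumption is stated above, so treat the result as an estimate rather than a quoted price. The function names are ours.

```python
def implied_monthly_cost(cost_per_gb_month: float, capacity_gb: float) -> float:
    """Total monthly cost implied by a per-GB rate and a capacity."""
    return cost_per_gb_month * capacity_gb


def cost_per_gb_month(total_monthly_cost: float, capacity_gb: float) -> float:
    """Inverse calculation: combined compute and storage cost per GB."""
    return total_monthly_cost / capacity_gb


if __name__ == "__main__":
    capacity_gb = 51_200  # 51.2 TB, assuming 1 TB = 1,000 GB
    total = implied_monthly_cost(0.095, capacity_gb)
    print(f"Implied cost per DenseIO node: ${total:,.2f} per month")
```

Under these assumptions, the rate works out to about $4,864 per month for one DenseIO file server node.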

Next Step

In the coming weeks, we’ll follow up with another post on how to run Lustre on a 100-Gbps RDMA network on Oracle Cloud Infrastructure.

Try It Yourself

Every use case is different. The best way to know if Oracle Cloud Infrastructure is right for you is to try it. To get started with a range of services, including compute, storage, and network, you can select either the Oracle Cloud Free Tier or a 30-day free trial that includes US$300 in credit.