Oracle E-Business Suite (EBS) is one of Oracle’s leading product lines. EBS achieves higher performance by distributing and load balancing the application services across multiple application tier nodes and servers. These servers need to read from and write to the same file system concurrently, which requires a shared file system mounted on all the application tier nodes. A shared file system also improves availability and resilience against node failures.

Many customers run EBS applications on Oracle Cloud Infrastructure (OCI), and many more are in the process of migrating them from on-premises or other cloud providers. OCI File Storage service is a fully managed elastic file system that scales automatically up to 8 exabytes. You access it through an NFSv3 mount point. OCI File Storage has the following benefits:

- Easy to deploy: You can create and mount a shared file system on OCI Compute instances in a few clicks. For detailed steps, refer to Creating File Systems and Mounting File Systems.

- Elastic: You can start writing to the file system without provisioning storage upfront. The file system starts from the first byte and scales automatically, up to 8 exabytes, as data is written. You pay only for the data stored in File Storage, so there’s no upfront cost and no storage capacity planning hassle.

- Fully managed: File Storage eliminates maintenance work for operators, such as patching servers.

- Highly available: Data in the file system is always accessible. The content of the file system is fully owned and controlled by the users.

- Durable: Data in the file system is highly consistent and persistent.

This blog is for Oracle Applications database administrators and architects who install and maintain EBS systems. It describes deployment scenarios for EBS on OCI that need a shared file system and provides best practices for getting optimal performance from File Storage.

Deployment scenarios

Whether it’s a lift-and-shift from on-premises or another cloud provider to OCI or a new implementation in OCI, File Storage provides a shared file system that works the same way as a traditional network attached storage (NAS) appliance or a custom-built network file system (NFS) server, making it seamless from an operations perspective.

You can incorporate OCI File Storage into an EBS system topology in many ways, depending on your EBS operational needs. The EBS database tier can also use File Storage for RMAN backups and as an export and import destination.

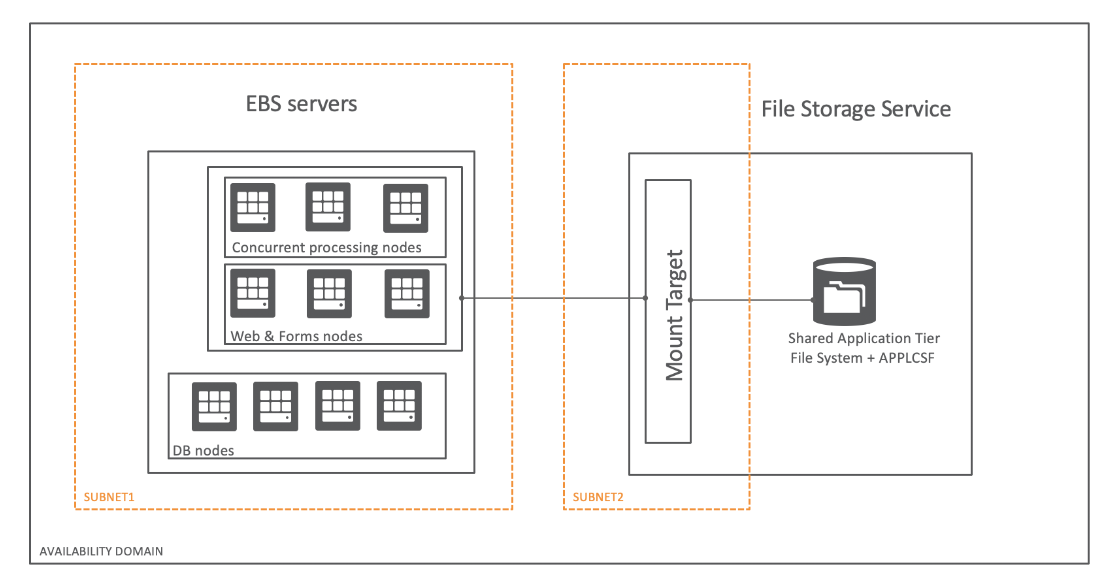

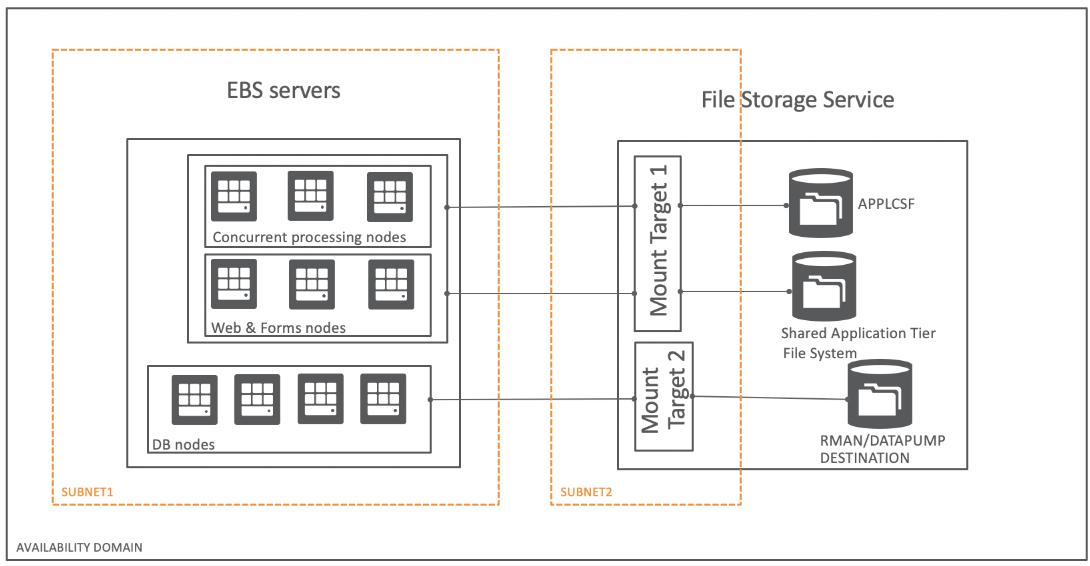

The following images gives high-level sample topologies with File Storage in an EBS deployment.

Figure 1: Single File Storage mount target and file system used with multiple EBS application, web, and database servers

Figure 2: Multiple file systems and mount target for high IOPS requirement

You can find more network topologies for implementing EBS with OCI File Storage in E-Business Suite on OCI scenarios.

Best practices

Based on our experience with many EBS deployments on OCI File Storage, covering a range of EBS user base sizes and concurrent processing volumes, we have formulated some best practices for optimal EBS performance on File Storage.

Planning stage

- Region and availability domains: Choose the OCI region closest to the on-premises data center or other cloud provider where you’re currently running the EBS environments that need to be migrated. We also recommend housing all the instances and mount targets in the same availability domain. You can have instances and mount targets in different availability domains or regions by using local peering or remote peering connections, but this configuration can increase latency and impact performance.

- Compute instance image: Every Oracle Linux image ships with a specific Linux kernel version, and kernel versions vary slightly in how they perform operating system calls. Oracle Linux 6 and 7 with kernel versions earlier than 4.14.35.1902.301.1 have an NFS client bug that causes directory traversal to go into a loop, causing delays. As a result, OS operations such as ls, du, find, rsync, chmod, and chown might take a long time, especially when the directory has many files. Always ensure that you’re using the latest Oracle Linux image for EBS servers.

- Compute instance shape: Larger shapes with higher network bandwidth and CPU power can run more concurrent operations, which improves performance. File Storage performs better with concurrent operations and large read and write sizes, which are controlled by the rsize and wsize NFS mount options. Table 1 shows the time taken for EBS admin tasks on two instances of different shapes.

- Migration options: File Storage doesn’t currently have a file transfer service. Rsync is commonly used to sync file systems, and depending on the size of the shared file system of the EBS environment being migrated, its performance is acceptable for many customers. For a faster transfer rate, we recommend fpsync, an open source utility that runs multiple rsync processes in parallel. Fpsync needs more compute power to achieve the parallel transfers. The RPM is available in the yum repository of Oracle Linux on OCI. For the steps to copy file system data from on-premises to OCI File Storage using fpsync, see On-premises to File Storage Service sync.

- Number of EBS environments in a single file system: When a file is created, the NFS server generates a file identifier for it. File Storage does the same but uses a 64-bit design in which the file identifier always grows, even when files are deleted. 32-bit programs can’t create files after the file identifier passes 2**32 - 1, or 4,294,967,295; from that point, file creation fails.

  In EBS, you might periodically purge older files, such as concurrent manager and concurrent request log and out files. Because the file identifier keeps growing regardless, 32-bit programs in EBS, such as the executables in the Developer’s Oracle Home, eventually can’t create new files. For example, tnsping fails and the application services startup script fails to complete successfully.

  This situation happens when too many EBS environments are stored in a single file system. When migrating to OCI, don’t migrate all your EBS environments to a single file system; use multiple file systems instead. Also, as an EBS shared file system grows in File Storage, use a new file system whenever you refresh an EBS environment.
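The 32-bit limit on file identifiers can be checked with a little arithmetic. The following is a minimal sketch, assuming that the inode number Linux reports for a file on an NFS mount reflects the file identifier issued by the server. The helper names `exceeds_32bit` and `probe_new_file_id` are hypothetical and not part of any Oracle tool.

```python
import os
import tempfile

# Largest value that fits in an unsigned 32-bit field: 4,294,967,295.
MAX_32BIT_FILE_ID = 2**32 - 1


def exceeds_32bit(file_id: int) -> bool:
    """Return True when a file identifier no longer fits in 32 bits."""
    return file_id > MAX_32BIT_FILE_ID


def probe_new_file_id(directory: str) -> int:
    """Create a throwaway file in `directory` and return its inode number.

    Assumption: on an NFS mount, the inode number mirrors the identifier
    the server assigned to the newly created file.
    """
    with tempfile.NamedTemporaryFile(dir=directory) as probe:
        return os.fstat(probe.fileno()).st_ino


if __name__ == "__main__":
    # Probes the local temp directory; point this at the shared mount instead.
    file_id = probe_new_file_id(tempfile.gettempdir())
    print(file_id, "exceeds 32 bits" if exceeds_32bit(file_id) else "fits in 32 bits")
```

Running the probe against the shared mount point from time to time gives an early indication of how close new files are to the range that 32-bit programs can’t handle.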

Run stage

The following best practices for running EBS on OCI are grouped by infrastructure layer.

Configuring ingress and egress rules in the network layer: The mount target of OCI File Storage is a network endpoint that gives access to the file system through one or more export paths. The mount target can be in the same subnet and virtual cloud network (VCN) as the Compute instances or in a different one. For information on setting up ingress and egress rules depending on which subnet and VCN host the mount target and the Compute instances, refer to Configuring VCN security rules. Check the connectivity to the mount target and export by running the command, showmount -e <MountTarget_IPaddress>, in the instance. If the command returns an empty list with no export paths, the instance has no access to the file system.
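The connectivity check can also be scripted. The following is a minimal sketch, assuming that `showmount -e` prints an "Export list for ..." header followed by one export path per line. The function names and the sample IP address are hypothetical.

```python
import subprocess


def parse_showmount(output: str) -> list[str]:
    """Extract export paths from the text printed by `showmount -e`."""
    exports = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines and the "Export list for <host>:" header.
        if not line.startswith("/"):
            continue
        # The export path is the first whitespace-separated field.
        exports.append(line.split()[0])
    return exports


def list_exports(mount_target_ip: str, timeout: float = 10.0) -> list[str]:
    """Run `showmount -e` against a mount target and return its export paths."""
    result = subprocess.run(
        ["showmount", "-e", mount_target_ip],
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    return parse_showmount(result.stdout)
```

An empty list from `list_exports("10.0.0.5")`, where the address is a placeholder for your mount target, corresponds to the no-access case described above. A timeout or a non-zero exit raises an exception, which usually points to a security rule problem.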

The NFS layer has the following best practices:

- Setting the reported size of the mount target: When you create the file system, it comes with a default logical maximum size of 8 exabytes. 32-bit programs in E-Business Suite, such as Oracle Universal Installer (OUI), can’t handle 8-exabyte values during free space checks and fail. The reported size field on the Mount Target page accepts user-defined values. You can change the value at any time, and you don’t need to remount the file system or reboot the instances where it’s mounted. This setting is at the export set level, so the change applies to all the exports under the mount target.

- Using export options: While the network security rules or network security groups (NSGs) determine which Compute instances can access the mount target, the export options, with their source CIDR and access mode, further restrict the instances and their access to the file system. For example, the security rules can allow instances in the 10.0.0.0/24 CIDR to access the mount target, and the default export option with the 0.0.0.0/0 source CIDR then lets all the instances in 10.0.0.0/24 access the file system. With additional export options, you can specify which addresses within 10.0.0.0/24 have read-write access and which have read-only access to the file system. To better understand how to control client access to your file system, see Working with NFS export options.

- Mounting: We recommend not specifying explicit NFS mount options, so that the NFS client (instance) and server (File Storage) negotiate them automatically. After mounting without mount options, you can see the default values picked up by running the command, grep <MountTarget_IPaddress> /proc/mounts. We recommend the following best practices:

  - For optimal read and write performance, don’t set the rsize and wsize NFS mount options to less than 1,048,576. When unspecified, both default to 1,048,576.

  - For EBS 12.1.3, you need the nolock mount option because of OACore component design requirements. Because EBS 12.2 runs on Oracle WebLogic Server, nolock isn’t required.

  - E-Business Suite’s concurrent processing log and out directories tend to store huge numbers of files, which can degrade directory traversal performance. Commands such as ls, du, find, and rsync traverse directories and need more time. Use the nordirplus mount option (-o nordirplus) in the mount command. This option changes the directory traversal method internally, which can lead to better performance.

- Avoid nested mounting: While subdirectory mounting is allowed, avoid nested mounting because it can create ambiguity and cause file and folder lookup issues. An example of nested mounting is File System A mounted at the /mnt/File Storage-A/ mount point and File System B mounted at /mnt/File Storage-A/folder-B.
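The mount option recommendations above can be verified against what the NFS client actually negotiated. The following is a minimal sketch that reads text in the /proc/mounts format and flags rsize or wsize values below 1,048,576 and a missing nordirplus option. The function names, the sample IP address, and the warning wording are hypothetical.

```python
MIN_IO_SIZE = 1_048_576  # recommended lower bound for rsize and wsize


def parse_nfs_options(mounts_text: str, mount_target_ip: str) -> dict:
    """Return {mount_point: {option: value}} for mounts served by the given IP."""
    found = {}
    for line in mounts_text.splitlines():
        # /proc/mounts fields: device, mount point, fs type, options, dump, pass
        fields = line.split()
        if len(fields) < 4 or not fields[0].startswith(mount_target_ip + ":"):
            continue
        options = {}
        for item in fields[3].split(","):
            key, _, value = item.partition("=")
            options[key] = value  # flag-style options get an empty value
        found[fields[1]] = options
    return found


def review_options(options: dict) -> list:
    """Return warnings for settings that differ from the recommendations."""
    warnings = []
    for name in ("rsize", "wsize"):
        value = options.get(name)
        if value and int(value) < MIN_IO_SIZE:
            warnings.append(f"{name}={value} is below {MIN_IO_SIZE}")
    if "nordirplus" not in options:
        warnings.append("nordirplus is not set")
    return warnings


if __name__ == "__main__":
    with open("/proc/mounts") as handle:
        # 10.0.0.5 is a placeholder for the mount target IP address.
        mounts = parse_nfs_options(handle.read(), "10.0.0.5")
    for mount_point, options in mounts.items():
        print(mount_point, review_options(options) or "OK")
```

The check is read-only, so it can run on every application tier node after mounting to confirm that all nodes ended up with the same effective options.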

The application layer has the following best practice:

- You can use the EBS storage scheme option to restrict the number of files in a single log or out folder. The storage scheme creates a subdirectory structure for concurrent processing requests based on date, product, count of files, and so on. This way, the number of files in a single subdirectory can stay relatively small without compromising the retention policy for concurrent processing log and out files. Refer to the “Configuration for Concurrent Processing Log and Output File Locations” section in My Oracle Support Doc ID 2794300.1.
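To see whether the log and out folders would benefit from a storage scheme, it helps to know which directories hold the most files. The following is a minimal sketch that walks a directory tree and reports directories above a chosen file count. The function name and the threshold are hypothetical, and the scan itself traverses directories, so it can be slow on very large trees.

```python
import os


def crowded_directories(root: str, threshold: int) -> list:
    """Return (path, file_count) pairs for directories holding more than
    `threshold` files, largest first."""
    results = []
    for dirpath, _dirnames, filenames in os.walk(root):
        if len(filenames) > threshold:
            results.append((dirpath, len(filenames)))
    return sorted(results, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Scans the current directory; point this at the concurrent processing
    # log and out location of your environment instead.
    for path, count in crowded_directories(".", 10_000):
        print(f"{count:>10}  {path}")
```

Only files directly inside each directory are counted, because that per-directory count is what affects traversal time.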

Table 1: Performance comparison of EBS 12.2 administrator tasks running on two different shapes

| No. | Administrative task | Command | VM.Standard2.2 | VM.Standard2.24 |
|---|---|---|---|---|
| 1 | Copy EBS app tier from local disk to File Storage | `parcp -P <threads> /u01/install/APPS/fs1 /fss/install/APPS`, where `<threads>` is the number of threads, which depends on the number of CPU cores. parcp is part of the File Storage parallel tools developed by the File Storage team and copies folders in parallel. | 11 min (32 threads) | 5 min (96 threads) |
| 2 | Configure the EBS environment | `perl adcfgclone.pl appsTier dualfs` | 116 min | 99 min |
| 3 | Generate forms | `workers=$(( $(nproc) * 2 )); adadmin workers=$workers interactive=n restart=y stdin=n menu_option=GEN_FORMS` | 50 min | 7 min |
| 4 | Generate reports | `workers=$(( $(nproc) * 2 )); adadmin workers=$workers interactive=n restart=y stdin=n menu_option=GEN_REPORTS` | 12 min | 3 min |
| 5 | Generate product jar files | `workers=$(( $(nproc) * 2 )); adadmin workers=$workers interactive=n restart=y stdin=n menu_option=GEN_JARS` | 22 min | 13 min |
| 6 | Relink application binaries | `workers=$(( $(nproc) * 2 )); adadmin workers=$workers interactive=n restart=y stdin=n menu_option=RELINK` | 7 min | 6 min |
| 7 | JSP compilation | `perl ojspCompile.pl --compile --flush` | 33 min | 24 min |
| 8 | Online patching prepare phase | `adop phase=prepare` | 134 min | 57 min |
| 9 | Online patching fs_clone phase | `adop phase=fs_clone force=yes` | 157 min | 132 min |
| 10 | Prepare app tier for cloning | `perl adpreclone.pl appsTier` | 18 min | 11 min |
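As a worked reading of Table 1, the following sketch computes how much faster each task ran on VM.Standard2.24 than on VM.Standard2.2. The timings are copied from the table; the shortened task labels and the function name are only for illustration.

```python
# (minutes on VM.Standard2.2, minutes on VM.Standard2.24), from Table 1
TIMINGS_MIN = {
    "Copy app tier to File Storage": (11, 5),
    "Configure the EBS environment": (116, 99),
    "Generate forms": (50, 7),
    "Generate reports": (12, 3),
    "Generate product jar files": (22, 13),
    "Relink application binaries": (7, 6),
    "JSP compilation": (33, 24),
    "Online patching prepare phase": (134, 57),
    "Online patching fs_clone phase": (157, 132),
    "Prepare app tier for cloning": (18, 11),
}


def speedup(small_shape_min: float, large_shape_min: float) -> float:
    """Return how many times faster the larger shape completed the task."""
    return small_shape_min / large_shape_min


if __name__ == "__main__":
    ranked = sorted(TIMINGS_MIN.items(), key=lambda kv: speedup(*kv[1]), reverse=True)
    for task, (small, large) in ranked:
        print(f"{task:<32} {speedup(small, large):4.1f}x")
```

The highly parallel adadmin generation tasks gain the most from the larger shape, while steps such as adcfgclone, relinking, and fs_clone improve by less than 1.2 times.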

Try it yourself

With the following steps, you can build an EBS system with a database tier on local disk and application tier on File Storage in OCI.

- Oracle Cloud Marketplace has demo install images for Oracle E-Business Suite releases from 12.2.8 to 12.2.11, and the steps to deploy a single-node EBS environment are provided in My Oracle Support Document 2764690.1.

- Create File Storage resources by following the Creating File Systems document and mount the file system in the EBS instance using the Mounting File Systems document.

- When the File Storage mount point is available in the instance, copy the run edition directory fs1 to the File Storage mount point. You can use cp, rsync, or tar to copy. For a faster copy, explore fss-parallel-tools.

- When the copy is done, run adcfgclone to reconfigure the EBS application file system.

- To add a new application node for a multinode experience, refer to Cloning Oracle E-Business Suite Release 12.2 with Rapid Clone (Doc ID 1383621.1), section 5.3 Adding a New Application Tier Node to an Existing System.
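The copy step benefits from parallelism because File Storage performs better with concurrent operations. The following is a minimal sketch of that idea only: it copies each top-level entry of a directory in its own worker thread. It is not parcp, fpsync, or fss-parallel-tools, it doesn’t preserve file ownership, and the function name and paths are hypothetical. For a real EBS copy, use the tools named above.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def parallel_copy(source: str, destination: str, workers: int = 8) -> list:
    """Copy each top-level entry of `source` into `destination` concurrently."""
    src = Path(source)
    dst = Path(destination)
    dst.mkdir(parents=True, exist_ok=True)

    def copy_entry(entry: Path) -> Path:
        target = dst / entry.name
        if entry.is_dir():
            # Keep symlinks as symlinks and allow reruns into an existing tree.
            shutil.copytree(entry, target, symlinks=True, dirs_exist_ok=True)
        else:
            shutil.copy2(entry, target)
        return target

    # Threads are enough here because the work is I/O bound.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(copy_entry, sorted(src.iterdir())))
```

Splitting by top-level entry is the simplest partitioning and works best when the subdirectories are of similar size; dedicated tools balance the work more evenly.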

An E-Business Suite environment with a database on boot volume and multinode application tier on Oracle Cloud Infrastructure File Storage service is now ready for your exploration!

To learn more, see the following resources:

- Learn more about File Storage and its features, such as snapshots, clones, and replication, in the OCI File Storage service documentation.

- If you come across an issue with File Storage, whether it’s a mounting, file access, or performance issue, check Troubleshooting your File System before creating a service request.