As part of our continuing commitment to open standards and supporting a broad and varied ecosystem, we’re pleased to announce that Portworx has extended support for its Storage Platform for Kubernetes to Oracle Cloud Infrastructure Container Engine for Kubernetes.

Container Engine for Kubernetes helps you deploy, manage, and scale Kubernetes clusters in the cloud. With it, organizations can build dynamic containerized applications by incorporating Kubernetes with services running on Oracle Cloud Infrastructure.

This post was written by a guest contributor, Gou Rao, CTO, Portworx.

As increasingly complex applications move to Kubernetes, it’s essential that IT teams have solutions for data protection—disaster recovery (DR), backup, high availability (HA)—in place for business continuity in the face of widespread outages. Without these solutions, most enterprise applications simply can’t run in containers. Even so-called greenfield applications, which are designed from the beginning to run as microservices, can’t escape the business requirements that mandate periodic backups or the ability to recover from complete data center failure.

But data protection in a Kubernetes world is not as simple as taking what worked well for VMware environments and porting it to Kubernetes. Data protection for containerized apps running on Kubernetes requires a Kubernetes-native solution. This post outlines why. A future post will show how you can implement a full data protection solution on Kubernetes by using Container Engine for Kubernetes and the Portworx Kubernetes storage solution.

The Limits of Traditional DR for Kubernetes Applications

Traditional data protection solutions like DR and backup and restore are often implemented at the VM level. This works well when a single application runs on a single VM, because backing up the VM and backing up the application are synonymous. Containerized applications like those that run on Kubernetes, however, are very different.

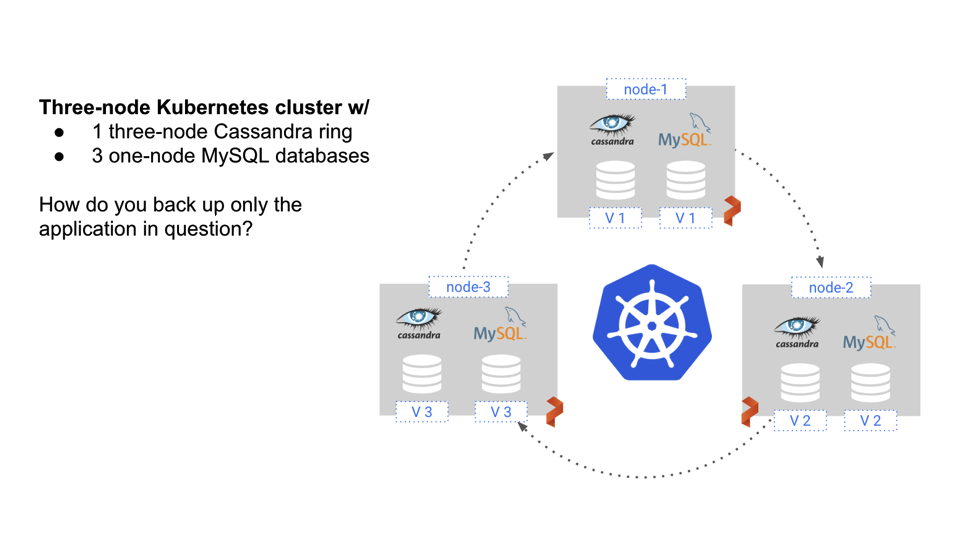

A single VM often runs many pods, and not all of those pods are part of the same application. Likewise, a single application is often spread over many VMs. This distribution of application components over a cluster of servers is a basic architectural pattern of containerized applications. So, backing up a VM is no longer sufficient—it’s both too much and too little. Too much, because if you want to back up only App 1, your VM backup might also contain data for App 2 and App 3. Too little, because even if you back up the entire server, parts of App 1 are running on different VMs that are not captured by your VM-based backups.
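The "too much and too little" problem can be made concrete with a small Python model. This is a sketch under stated assumptions: the apps, pod names, and VM placement below are hypothetical and only mirror the App 1/App 2/App 3 scenario described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    name: str
    app: str
    vm: str


# Hypothetical placement: App 1's pods span three VMs, and vm1 also hosts
# pods from App 2 and App 3. All names are illustrative.
PODS = [
    Pod("app1-a", "app1", "vm1"),
    Pod("app1-b", "app1", "vm2"),
    Pod("app1-c", "app1", "vm3"),
    Pod("app2-a", "app2", "vm1"),
    Pod("app3-a", "app3", "vm1"),
    Pod("app3-b", "app3", "vm2"),
]


def vm_backup(vm: str) -> set[str]:
    """Pods captured by a VM-level backup: everything on that VM."""
    return {p.name for p in PODS if p.vm == vm}


def app_pods(app: str) -> set[str]:
    """Pods that make up an application, wherever they are scheduled."""
    return {p.name for p in PODS if p.app == app}


def vm_backup_gap(vm: str, app: str) -> tuple[set[str], set[str]]:
    """Return (too_much, too_little) when a VM backup stands in for an app backup."""
    captured, wanted = vm_backup(vm), app_pods(app)
    return captured - wanted, wanted - captured


too_much, too_little = vm_backup_gap("vm1", "app1")
print(sorted(too_much))    # ['app2-a', 'app3-a']: other apps' data swept in
print(sorted(too_little))  # ['app1-b', 'app1-c']: App 1 pods the backup misses
```

Both sets are non-empty at once, which is why no choice of VMs yields a clean backup of App 1 alone.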

To solve this problem, DR for Kubernetes requires a solution that is:

- Container-granular

- Kubernetes namespace-aware

- Application consistent

- Capable of backing up data and application configuration

- Optimized for your data center architecture with synchronous and asynchronous options

The Portworx Enterprise Storage Platform is designed to provide this with PX-DR and PX-Backup. Both provide a Kubernetes-native experience for data protection. Let’s see how it works by using PX-DR as an example.

Container-Granular DR for Kubernetes

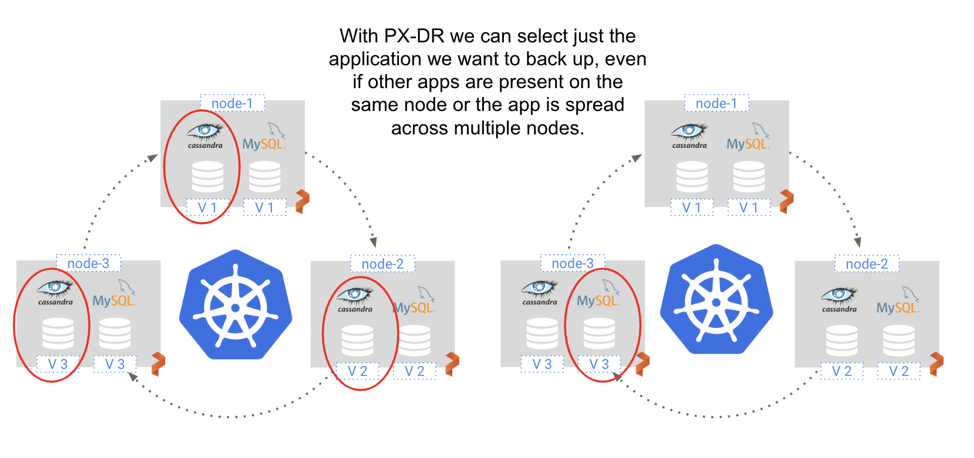

PX-DR is a container-granular approach to DR. Instead of backing up everything that runs on a VM or bare metal server, you can use it to back up specific pods or groups of pods running on specific hosts.

The preceding diagram shows a three-node Kubernetes cluster, with a three-node Cassandra ring and three individual MySQL databases.

With PX-DR, you can focus on just the pods that you want to back up. For instance, you can back up just the three-node Cassandra ring or just one of the MySQL databases. Container granularity lets you avoid the complications of extract, transform, and load (ETL) procedures that would be required if you backed up all three VMs in their entirety. And by backing up only specific applications, you can minimize storage costs and keep recovery time objectives (RTO) low.
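Container granularity means the backup scope is defined by pod identity (in Kubernetes, typically labels) rather than by the node a pod lands on. The following Python sketch illustrates that idea on the cluster described above. The pod names and labels are hypothetical, and the `select` helper only imitates the equality semantics of a Kubernetes `matchLabels` selector; it is not a PX-DR API.

```python
# Hypothetical inventory for the three-node cluster above: one Cassandra pod
# and one MySQL pod per node. Pod names and labels are illustrative only.
PODS = [
    {"name": "cassandra-0", "node": "node1", "labels": {"app": "cassandra"}},
    {"name": "cassandra-1", "node": "node2", "labels": {"app": "cassandra"}},
    {"name": "cassandra-2", "node": "node3", "labels": {"app": "cassandra"}},
    {"name": "mysql-1", "node": "node1", "labels": {"app": "mysql", "db": "orders"}},
    {"name": "mysql-2", "node": "node2", "labels": {"app": "mysql", "db": "billing"}},
    {"name": "mysql-3", "node": "node3", "labels": {"app": "mysql", "db": "crm"}},
]


def select(match_labels: dict[str, str]) -> list[str]:
    """Equality-based selection, similar to a Kubernetes matchLabels selector:
    a pod matches only if it carries every requested key/value pair."""
    return [
        p["name"]
        for p in PODS
        if all(p["labels"].get(k) == v for k, v in match_labels.items())
    ]


ring = select({"app": "cassandra"})
orders_db = select({"app": "mysql", "db": "orders"})
print(ring)       # ['cassandra-0', 'cassandra-1', 'cassandra-2']
print(orders_db)  # ['mysql-1']
```

The Cassandra ring is selected whole even though each member runs on a different node, and none of the MySQL pods that share those nodes are pulled in.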

DR for an Entire Kubernetes Namespace

The concept of container granularity can be extended to entire namespaces. Namespaces within Kubernetes typically run multiple applications that are related in some way. For instance, an enterprise might have a namespace related to a division of the company. Often, you want to back up the entire namespace, not just a single application running in that namespace. Traditional backup solutions have the same problems outlined earlier—namespaces bridge VM boundaries. PX-DR, however, lets you back up entire namespaces, no matter where the pods that compose a namespace run.
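The same reasoning applies at the namespace level. In this minimal Python sketch, the namespaces, pods, and VM placements are hypothetical. It shows that a namespace's pods can span every VM, so covering the namespace with VM backups also sweeps in pods from other namespaces.

```python
# Hypothetical (namespace, pod, vm) placements; every name is illustrative.
PLACEMENTS = [
    ("finance", "ledger-db-0", "vm1"),
    ("finance", "ledger-api-0", "vm2"),
    ("finance", "reports-0", "vm3"),
    ("marketing", "cms-0", "vm1"),
    ("marketing", "cms-db-0", "vm3"),
]


def namespace_pods(namespace: str) -> list[str]:
    """Pods a namespace-level backup must include, on whatever VM they run."""
    return [pod for ns, pod, _ in PLACEMENTS if ns == namespace]


def vms_spanned(namespace: str) -> set[str]:
    """VMs a VM-level backup would have to copy to cover the namespace."""
    return {vm for ns, _, vm in PLACEMENTS if ns == namespace}


def collateral_pods(namespace: str) -> list[str]:
    """Pods from other namespaces that those VM backups would also capture."""
    vms = vms_spanned(namespace)
    return [pod for ns, pod, vm in PLACEMENTS if ns != namespace and vm in vms]


print(namespace_pods("finance"))        # ['ledger-db-0', 'ledger-api-0', 'reports-0']
print(sorted(vms_spanned("finance")))   # ['vm1', 'vm2', 'vm3']
print(collateral_pods("finance"))       # ['cms-0', 'cms-db-0']
```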

Application-Consistent Data Protection for Kubernetes

PX-DR is also application consistent. Consider the preceding example. The three Cassandra pods are a distributed system. Taking a snapshot of them in a way that allows for application recovery without the risk of data corruption requires that all pods be locked during the snapshot operation. VM-based snapshots can’t achieve this, nor can serially executed individual snapshots.
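The ordering that makes a group snapshot application consistent can be sketched in Python. The `quiesce`, `resume`, and `snapshot` functions are hypothetical stand-ins that only record calls; they are not Portworx or Kubernetes APIs. What the sketch shows is the ordering: no volume is snapshotted until every pod is locked, and every locked pod is released even if a later step fails.

```python
from contextlib import contextmanager
from typing import Iterator

# Hypothetical hooks: a real system would exec a command in each pod or call
# the storage layer. Here they only record what happened, in order.
log: list[str] = []


def quiesce(pod: str) -> None:
    log.append(f"quiesce {pod}")


def resume(pod: str) -> None:
    log.append(f"resume {pod}")


def snapshot(volume: str) -> None:
    log.append(f"snapshot {volume}")


@contextmanager
def all_quiesced(pods: list[str]) -> Iterator[None]:
    """Lock every pod before yielding; release each pod that was locked."""
    locked: list[str] = []
    try:
        for pod in pods:
            quiesce(pod)
            locked.append(pod)
        yield
    finally:
        for pod in reversed(locked):
            resume(pod)


def group_snapshot(pods: list[str], volumes: list[str]) -> None:
    # Snapshots start only after all pods are quiesced, so together they
    # describe one point in time for the whole distributed system.
    with all_quiesced(pods):
        for vol in volumes:
            snapshot(vol)


group_snapshot(["cassandra-0", "cassandra-1", "cassandra-2"],
               ["pvc-0", "pvc-1", "pvc-2"])
```

A serial approach (lock, snapshot, and release one pod at a time) would let the other pods keep accepting writes between snapshots, which is exactly the inconsistency described above.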

Portworx provides a Kubernetes group snapshot rules engine that lets operators automatically run the pre- and post-snapshot commands required for each particular data service. For Cassandra, for example, you run the `nodetool flush` command to take an application-consistent snapshot of multiple Cassandra containers. A pre-snapshot rule for this looks like the following:

```yaml
apiVersion: stork.libopenstorage.org/v1alpha1
kind: Rule
metadata:
  name: cassandra-presnap-rule
spec:
  - podSelector:
      app: cassandra
    actions:
    - type: command
      value: nodetool flush
```

Backing Up Data and Application Configuration for Kubernetes Applications

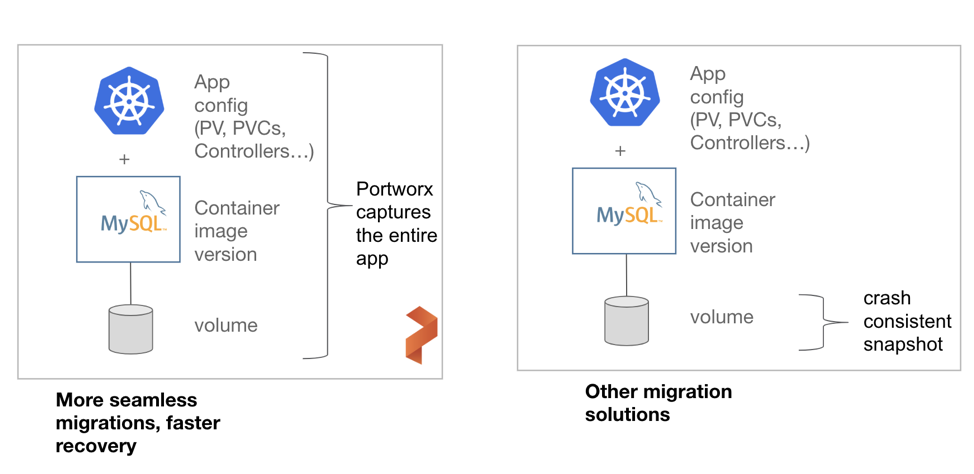

We’ve established the importance of container granularity, namespace awareness, and application-consistent backups. Now, let’s look at why DR for Kubernetes requires a solution that protects both data and application configuration.

Backing up and recovering an application on Kubernetes requires two things: data and application configuration. If you back up only the data, recovering the application takes a long time because you have to rebuild the application configuration in place, which increases RTO. If you back up only the app configuration—all those YAML files that define your deployments, your service accounts, your PVCs—you can spin up your application, but you won’t have your application data. Neither is sufficient; you need both.
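The argument can be summarized as a simple check. In this Python sketch, the `Backup` bundle, its fields, and the PVC and snapshot names are all hypothetical; the sketch only models which parts of recovery a backup can cover, not how PX-DR stores anything.

```python
from dataclasses import dataclass, field


@dataclass
class Backup:
    """Hypothetical backup bundle; field names are illustrative only."""
    # Application configuration, e.g. "Deployment/mysql" -> its YAML manifest.
    manifests: dict[str, str] = field(default_factory=dict)
    # Application data, e.g. PVC name -> snapshot identifier.
    snapshots: dict[str, str] = field(default_factory=dict)


def recovery_gaps(backup: Backup, required_pvcs: list[str]) -> list[str]:
    """Describe what recovery cannot get from the backup; empty means nothing."""
    gaps = []
    if not backup.manifests:
        gaps.append("config: rebuild by hand (raises RTO)")
    missing = [pvc for pvc in required_pvcs if pvc not in backup.snapshots]
    if missing:
        gaps.append("data: no snapshot for " + ", ".join(missing))
    return gaps


pvcs = ["data-mysql-0"]
manifest = {"Deployment/mysql": "apiVersion: apps/v1\nkind: Deployment"}
data_only = Backup(snapshots={"data-mysql-0": "snap-1"})
config_only = Backup(manifests=manifest)
both = Backup(manifests=manifest, snapshots={"data-mysql-0": "snap-1"})

print(recovery_gaps(data_only, pvcs))    # ['config: rebuild by hand (raises RTO)']
print(recovery_gaps(config_only, pvcs))  # ['data: no snapshot for data-mysql-0']
print(recovery_gaps(both, pvcs))         # []
```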

PX-DR captures both application configuration and data in a single Kubernetes command. To recover your Kubernetes application after a failure, you run `kubectl apply -f myapp.yml`, because recovering the application is the same process as deploying it initially.

Synchronous or Asynchronous DR for Kubernetes?

Picking the right DR strategy for Kubernetes requires an understanding of your goals and your data center architecture. Based on these goals, you pick a synchronous or an asynchronous disaster recovery model. In some cases, you might even pick both because each provides a different layer of resiliency.

For example, a bank with an on-premises data center connected directly to a cloud region might require DR with a zero recovery point objective (RPO) and an RTO of less than 1 minute for an important customer engagement application. In this case, the bank will want synchronous PX-DR, because the low latency between its two environments makes zero data loss possible.

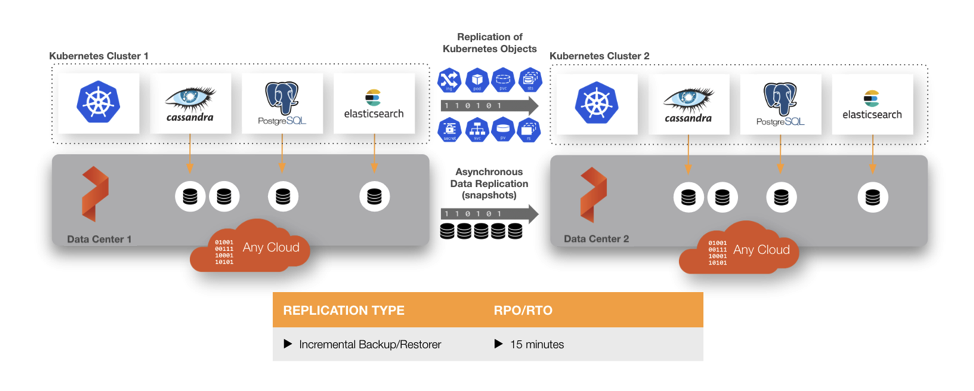

Alternatively, a manufacturing company with data centers on the east and west coasts of the US might have an application that requires a low RTO, but hourly backups are sufficient to meet their RPO goals. In this case, an asynchronous PX-DR model using continuous, incremental backups will work.

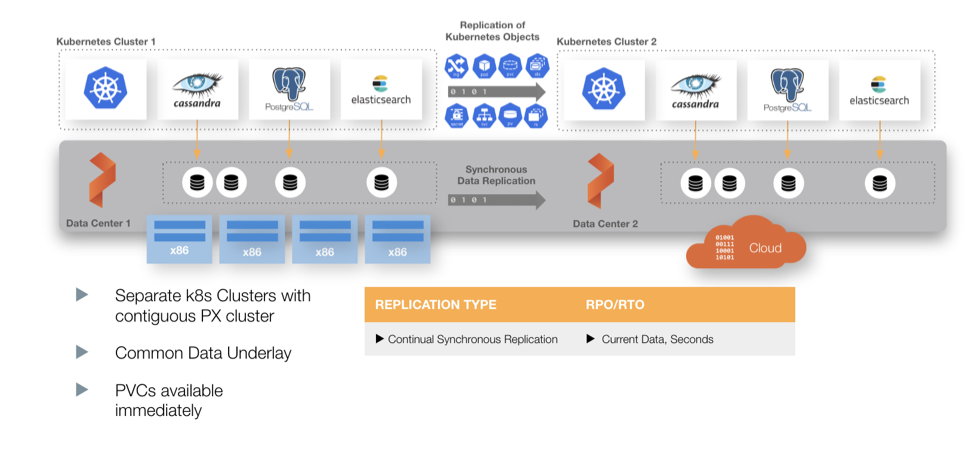

The following table lists the requirements for synchronous and asynchronous PX-DR.

| Application and Infrastructure Requirements | Synchronous PX-DR | Asynchronous PX-DR |
|---|---|---|
| Number of Portworx clusters | 1 | 2 |
| Needs a compatible object store to move data? | No | Yes |
| Roundtrip latency between data centers | < 10 ms | > 10 ms |
| Data guaranteed to be available at both sites (zero RPO) | Yes | No |
| Low RTO | Yes | Yes |
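The decision rule in the requirements table can be sketched in Python. This is an illustration under stated assumptions, not a Portworx tool. The 10 ms threshold and the cluster and object-store counts come from the table. Approximating the worst-case async RPO as the backup interval, ignoring transfer time, is an assumption of the sketch. The 2 ms and 70 ms latencies for the bank and manufacturer examples are assumed values.

```python
from dataclasses import dataclass

# Threshold from the table. Exactly 10 ms is not covered by the table;
# this sketch conservatively treats it as asynchronous.
SYNC_MAX_RTT_MS = 10.0


@dataclass(frozen=True)
class DrPlan:
    mode: str
    portworx_clusters: int
    needs_object_store: bool
    worst_case_rpo_s: float  # rough bound on data lost in a site failure


def plan_dr(rtt_ms: float, backup_interval_s: float) -> DrPlan:
    """Pick a mode from the roundtrip latency between sites."""
    if rtt_ms < SYNC_MAX_RTT_MS:
        # Per the table, data is available at both sites: zero RPO.
        return DrPlan("synchronous", 1, False, 0.0)
    # Assumption: writes since the last completed backup can be lost, so the
    # worst-case RPO is roughly the backup interval.
    return DrPlan("asynchronous", 2, True, backup_interval_s)


def meets_rpo(plan: DrPlan, rpo_target_s: float) -> bool:
    return plan.worst_case_rpo_s <= rpo_target_s


bank = plan_dr(rtt_ms=2.0, backup_interval_s=3600)
factory = plan_dr(rtt_ms=70.0, backup_interval_s=3600)
print(bank.mode, meets_rpo(bank, 0))           # synchronous True
print(factory.mode, meets_rpo(factory, 3600))  # asynchronous True
print(meets_rpo(factory, 0))                   # False: zero RPO needs sync
```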

Here’s what these architectures might look like in both cases.

Wide Area Network Architecture, Different Regions (> 10 ms Roundtrip Latency Between Sites)

Metro-Area Architecture, Across Different Availability Domains (< 10 ms Roundtrip Latency Between Sites)

Conclusion

Data protection for Kubernetes requires a Kubernetes-native approach. By using the Portworx Enterprise Storage Platform for Kubernetes, enterprises can confidently run even their most mission-critical applications in containers, with the same robust data protection that they’re used to having for their traditional applications.

To learn more about using the Portworx Storage Platform for Kubernetes with Container Engine for Kubernetes to protect your data and apps, sign up for an Oracle Cloud trial account, and then request a Portworx demo.