We’re pleased to announce that OnSpecta has extended its Deep Learning Inference Engine (DLS) support to the new offering of Oracle Cloud Infrastructure (OCI) Compute shapes powered by Arm.

Developers can now use OnSpecta’s DLS to accelerate the performance of their trained artificial intelligence (AI) models on Arm-based servers. With no loss of accuracy and no need for retraining, OnSpecta’s software optimizes AI models to their deployment environment. It seamlessly works with major frameworks like TensorFlow, ONNX, and PyTorch.

“OnSpecta significantly accelerates the performance of AI inference workloads on your OCI instance when deployed on the Ampere A1 compute platform,” says Indra Mohan, CEO of OnSpecta. “Our Deep Learning Inference Engine (DLS) optimizes the performance of trained neural networks, resulting in up to 10-times lower latency, 10-times higher throughput, and appreciable cost savings. OnSpecta’s customers can use DLS for all types of AI workloads, including object detection, video processing, medical image applications, and recommendation engines. They can experience as much as a three-times cost performance gain over alternative solutions when using OCI Arm-based Ampere A1 compute shapes.”

Why OnSpecta DLS?

AI workloads are compute-intensive. Customers want to optimize performance to achieve the lowest possible cost for their inference instances without writing complex optimization code. OnSpecta’s DLS accelerates trained AI models deployed on Arm-based servers on Oracle Cloud.

The Ampere A1 compute platform is a great fit for AI inference workloads because its Arm-based architecture delivers superior performance per watt, resulting in a lower total cost of ownership (TCO) compared to alternatives. OnSpecta’s DLS improves performance by up to 10 times and provides ready-to-use optimization and acceleration on Arm-based servers deployed on OCI.

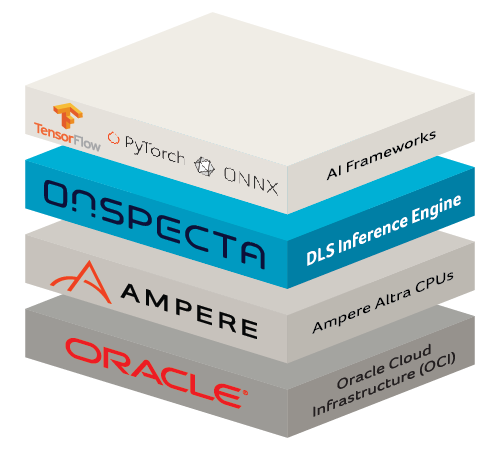

DLS resides between the framework and the hardware and works in your deployment environment with no API changes required.

Figure 1: DLS resides between the framework and the hardware
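Because DLS is described as requiring no API changes, the same inference script can in principle be timed before and after enabling it. The following is a minimal sketch of such a measurement, not part of DLS or any framework: the names `benchmark`, `speedup`, and `dummy_predict` are hypothetical, and `dummy_predict` is only a placeholder for your own framework's inference call.

```python
import statistics
import time
from typing import Callable, Sequence


def benchmark(predict: Callable[[object], object],
              inputs: Sequence[object],
              warmup: int = 5) -> dict:
    """Time predict() on each input and summarize latency and throughput."""
    if not inputs:
        raise ValueError("inputs must not be empty")

    # Warm-up calls are excluded from timing (caches, lazy initialization).
    for item in inputs[:warmup]:
        predict(item)

    latencies = []
    for item in inputs:
        start = time.perf_counter()
        predict(item)
        latencies.append(time.perf_counter() - start)

    total = sum(latencies)
    return {
        "mean_latency_ms": 1000 * statistics.mean(latencies),
        "median_latency_ms": 1000 * statistics.median(latencies),
        "throughput_per_s": len(latencies) / total if total > 0 else float("inf"),
    }


def speedup(baseline: dict, optimized: dict) -> float:
    """Ratio of baseline mean latency to optimized mean latency."""
    return baseline["mean_latency_ms"] / optimized["mean_latency_ms"]


if __name__ == "__main__":
    # Placeholder model (assumption): replace with your real inference call.
    def dummy_predict(x):
        return sum(v * v for v in x)

    stats = benchmark(dummy_predict, [list(range(1000))] * 50)
    print(stats)
```

Running the script once on a baseline setup and once on the accelerated setup, then passing both result dictionaries to `speedup`, gives a latency ratio you can compare against the figures quoted in this post for your own model and instance shape.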

AI inference applications powered by OCI Arm-based Ampere A1 instances with OnSpecta’s inference engine deliver up to three times the cost performance of currently available alternative solutions.

We’re excited about this combined offering because we can now offer our customers the following benefits:

- Up to 10 times improved AI performance
- Faster time to market by reducing model deployment time from weeks to hours
- Reduced costs by using more performant and less expensive hardware

Want to learn more?

Combining Oracle Cloud Infrastructure Arm-based Compute shapes with OnSpecta’s DLS gives developers the best inference performance on an Arm platform for popular deep learning frameworks like TensorFlow and ONNX. To learn more about OnSpecta DLS or to get hands-on experience, see the following resources:

- Access the Oracle Arm Resources Portal.
- Get started immediately with OnSpecta on Arm-based Ampere A1 instances by using OCI Free Tier services. These services provide access to the highest-capacity free Arm compute and storage resources available in the market.