Oracle and AMD have collaborated to deliver some of the most price-performant CPU and GPU solutions available on the market today—bringing powerful compute options to customers worldwide. Following the launch of AMD Instinct™ MI300X GPU-based bare metal instances in 2024, Oracle is now excited to introduce OCI Compute Bare Metal instances powered by AMD Instinct™ MI355X GPUs.

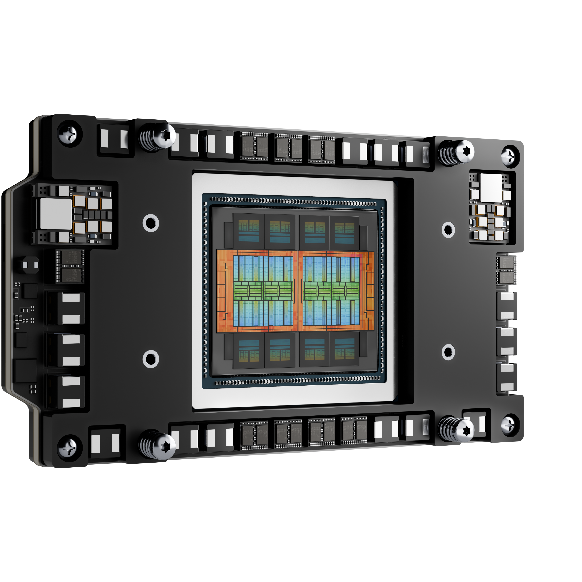

The Instinct MI355X is a significant step up from the previous generation, with substantial gains in memory capacity, GPU performance, system resources, and overall scalability, including:

- GPU Memory and Bandwidth – With 288 GB of GPU memory (1.5X the previous generation) and 8 TB/s of bandwidth (a 51% increase), the Instinct MI355X handles larger models and reduces memory bottlenecks for faster AI training and inference.

- Floating Point Precision Formats – Built on the CDNA 4 architecture, the Instinct MI355X supports FP4, FP6, and FP8 precision formats, improving efficiency and delivering 2.5X the FP8/FP16 performance of the previous generation.

- System Resources – The Instinct MI355X system has 14% more CPU cores (128 vs. 112) and 50% more GPU memory capacity (2.3 TB HBM3e vs. 1.5 TB HBM3), making it better equipped for demanding workloads and large in-memory datasets. Local storage also doubles to 61.44 TB, providing faster access to larger datasets and models and reducing reliance on slower external storage.

- Networking and Scalability – With a 400 Gbps front-end network (4X the bandwidth of the previous generation) and liquid-cooled racks supporting up to 64 GPUs, the AMD Instinct MI355X offers higher throughput, lower latency, and greater compute density for large-scale AI deployments.
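
The generation-over-generation figures in the list above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the "previous" values marked as derived are back-computed from the ratios quoted in this post, not taken from a spec sheet, and the helper names are hypothetical.

```python
# Figures quoted in the list above. "Previous" values marked (derived) are
# back-computed from the stated ratios and are assumptions, not quoted specs.
SPECS = {
    # name: (previous generation, MI355X generation, unit)
    "GPU memory per GPU": (192, 288, "GB"),           # (derived) 288 / 1.5
    "GPU memory bandwidth": (5.3, 8.0, "TB/s"),       # (derived) 8 / 1.51
    "CPU cores": (112, 128, "cores"),
    "GPU memory per node": (8 * 192, 8 * 288, "GB"),  # 8 GPUs per node
    "Local storage": (30.72, 61.44, "TB"),            # (derived) 61.44 / 2
    "Front-end network": (100, 400, "Gbps"),          # (derived) 400 / 4
}


def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


def summarize(specs: dict) -> dict:
    """Map each spec name to its percent increase, rounded to an integer."""
    return {
        name: round(percent_increase(old, new))
        for name, (old, new, _unit) in specs.items()
    }


if __name__ == "__main__":
    for name, pct in summarize(SPECS).items():
        old, new, unit = SPECS[name]
        print(f"{name}: {old} -> {new} {unit} (+{pct}%)")
```

Two details are worth noting. A "4X" bandwidth figure corresponds to a 300% increase. Computing the per-node GPU memory gain from the rounded 2.3 TB and 1.5 TB figures gives about 53%, while the unrounded 2,304 GB and 1,536 GB give exactly 50%.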

With this launch, Oracle becomes the first hyperscaler to publicly offer AMD MI355X GPUs and the only hyperscaler to offer both MI355X and MI300X, reinforcing our commitment to providing cutting-edge infrastructure and a wide range of compute options for AI, HPC, and other demanding workloads.

OCI Compute’s Instinct MI355X GPU bare metal instances:

- Instance Name: BM.GPU.MI355X.8

- GPUs: 8 x AMD Instinct™ MI355X Accelerators (288 GB Matrix Core)

- GPU Memory: 2.3 TB HBM3e

- CPU and System Memory: 128 cores 5th Gen AMD EPYC™ Processors with 3 TB DDR5

- Local Storage: 61.44 TB

- Network: 400 Gbps Front-End and 3,200 Gbps Cluster Network

- Industry-leading GPU cloud compute pricing, at $8.60 per hour
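
For capacity planning, the shape above can be captured as a small data structure. This is a minimal sketch, not an OCI API: the `BareMetalShape` class and its fields are hypothetical, and it assumes the $8.60 hourly price is a per-GPU rate, which this post does not state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BareMetalShape:
    """Hypothetical container for the shape figures listed above."""

    name: str
    gpus: int
    hbm_per_gpu_gb: int
    cluster_network_gbps: int
    price_per_hour_usd: float  # ASSUMPTION: interpreted as a per-GPU rate

    @property
    def total_hbm_tb(self) -> float:
        """Aggregate GPU memory per node in decimal terabytes."""
        return self.gpus * self.hbm_per_gpu_gb / 1000

    @property
    def cluster_gbps_per_gpu(self) -> float:
        """Cluster network bandwidth divided evenly across the GPUs."""
        return self.cluster_network_gbps / self.gpus

    def node_cost_usd(self, hours: float) -> float:
        """Cost of one node for the given hours under the per-GPU assumption."""
        return self.gpus * self.price_per_hour_usd * hours


MI355X_8 = BareMetalShape(
    name="BM.GPU.MI355X.8",
    gpus=8,
    hbm_per_gpu_gb=288,
    cluster_network_gbps=3200,
    price_per_hour_usd=8.60,
)

if __name__ == "__main__":
    print(f"{MI355X_8.name}: {MI355X_8.total_hbm_tb} TB HBM3e per node")
    print(f"Cluster network per GPU: {MI355X_8.cluster_gbps_per_gpu} Gbps")
    print(f"One node for 24 hours: ${MI355X_8.node_cost_usd(24):,.2f}")
```

The derived 2.304 TB matches the 2.3 TB GPU memory figure in the list, and the 3,200 Gbps cluster network works out to 400 Gbps per GPU. If the listed price turns out to be a per-node rate, drop the `gpus` factor in `node_cost_usd`.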

Customers are choosing AMD Instinct™ MI355X GPUs on Oracle Cloud Infrastructure to power large-scale generative AI, real-time inference, and complex model training—especially for multimodal AI models that require significant compute power. As AI applications handle larger and more complex datasets, teams require solutions designed specifically for large-scale training and deployment.

That’s where the zettascale OCI Supercluster comes in. With a high-throughput, ultra-low-latency RDMA cluster network, it scales up to 131,072 GPUs, making it the largest hyperscale AI supercomputer in the cloud. Instinct MI355X GPUs deliver nearly three times the compute power and 50% more high-bandwidth memory than the previous generation, giving customers the performance and scalability they need to accelerate their AI breakthroughs.
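
To put the 131,072-GPU figure in perspective, the following sketch converts it into node count and aggregate GPU memory. It assumes, purely for illustration, that a maximum-size cluster consists entirely of 8-GPU MI355X nodes with 288 GB per GPU; the function name is hypothetical.

```python
GPUS_PER_NODE = 8      # GPUs in one BM.GPU.MI355X.8 instance
HBM_PER_GPU_GB = 288   # GPU memory per MI355X
MAX_GPUS = 131_072     # maximum cluster size quoted above


def cluster_totals(total_gpus: int,
                   gpus_per_node: int = GPUS_PER_NODE,
                   hbm_per_gpu_gb: int = HBM_PER_GPU_GB) -> tuple:
    """Return (node count, aggregate GPU memory in decimal petabytes)."""
    if total_gpus % gpus_per_node != 0:
        raise ValueError("total_gpus must be a multiple of gpus_per_node")
    nodes = total_gpus // gpus_per_node
    hbm_pb = total_gpus * hbm_per_gpu_gb / 1_000_000  # GB -> PB
    return nodes, hbm_pb


if __name__ == "__main__":
    nodes, hbm_pb = cluster_totals(MAX_GPUS)
    print(f"{MAX_GPUS:,} GPUs = {nodes:,} nodes, about {hbm_pb:.1f} PB of GPU memory")
```

Under these assumptions, a full-size cluster is 16,384 bare metal nodes with roughly 37.7 PB of aggregate GPU memory.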

Benefits and Ideal Use Cases

Architectural Advantages: The Instinct MI355X chiplet architecture with HBM3E enables efficient AI and HPC performance. With 288 GB of memory and 8 TB/s bandwidth, it handles larger models with less reliance on slower system resources. The new CDNA 4 architecture doubles dense AI throughput over CDNA 3 through optimized matrix cores and dataflow scheduling.
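
A rough way to see what 288 GB and 8 TB/s mean for model size is to count weight bytes at each precision. The sketch below is a back-of-the-envelope estimate under simplifying assumptions: it counts weights only (no KV cache, activations, or per-block scale factors), uses decimal gigabytes, and all function names are hypothetical.

```python
# Bits per parameter for the precision formats mentioned in this post.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}

HBM_BYTES = 288 * 10**9          # 288 GB per GPU (decimal)
HBM_BANDWIDTH_BPS = 8 * 10**12   # 8 TB/s per GPU (decimal)


def weight_bytes(num_params: int, fmt: str) -> float:
    """Bytes needed to store the weights alone in the given format."""
    return num_params * BITS_PER_PARAM[fmt] / 8


def max_params(fmt: str, capacity_bytes: int = HBM_BYTES) -> int:
    """Largest parameter count whose weights alone fit in capacity_bytes."""
    return capacity_bytes * 8 // BITS_PER_PARAM[fmt]


def fits(num_params: int, fmt: str, capacity_bytes: int = HBM_BYTES) -> bool:
    """True if the weights alone fit in a single GPU's memory."""
    return weight_bytes(num_params, fmt) <= capacity_bytes


def seconds_to_stream(num_bytes: float,
                      bandwidth_bps: int = HBM_BANDWIDTH_BPS) -> float:
    """Lower bound on the time to read num_bytes once from GPU memory."""
    return num_bytes / bandwidth_bps


if __name__ == "__main__":
    for fmt in BITS_PER_PARAM:
        print(f"{fmt}: up to {max_params(fmt) / 1e9:.0f}B parameters (weights only)")
    model = 400 * 10**9  # hypothetical 400-billion-parameter model
    print("400B fits at FP8:", fits(model, "FP8"))
    print("400B fits at FP4:", fits(model, "FP4"))
```

By this estimate a hypothetical 400-billion-parameter model does not fit on one GPU at FP8 but does at FP4, and reading a full 288 GB of weights once takes at least 36 ms. Real deployments need headroom beyond the weights, so treat these numbers as upper bounds.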

ML/AI Model Inference, Training, and Development: Ideal for the largest LLMs and out-of-the-box GPU acceleration frameworks (e.g., TensorFlow, PyTorch, ONNX Runtime, and Triton). It delivers high throughput for agentic AI, multi-model inference, Mixture of Experts (MoE) models, and long-context tasks like RAG and summarization, thanks to its large memory and high bandwidth.

HPC Workloads: Graph Neural Networks (GNNs), Computer-Aided Engineering (CAE), Simulation-Based Product Development, Digital Twins, Genomic Sequencing and Analysis, Climate Modeling, Fluid Dynamics Simulations, Financial Modeling and Simulation, Massive-Scale Data Analysis and Modeling.

Open Ecosystem: Instinct MI355X runs on ROCm™, AMD’s open-source GPU computing platform, making it a developer-friendly option for those looking to leverage existing community-based AI and HPC tools. ROCm also provides several ways to port CUDA code and applications without extensive rewrites or code conversions.
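
One of those porting paths is source translation: AMD's HIPIFY tools (`hipify-perl` and `hipify-clang`) rewrite CUDA API names to their HIP equivalents. The toy below illustrates the idea with a handful of well-known name mappings. It is a simplified sketch, not the real tool: `hipify_toy` is a hypothetical function, the table is a tiny subset, and real code should go through the actual HIPIFY tools.

```python
import re

# A small subset of CUDA-to-HIP name mappings, for illustration only.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaError_t": "hipError_t",
    "cudaSuccess": "hipSuccess",
}

# Match whole identifiers only, trying longer names first, so that names
# missing from the table (for example cudaFreeAsync) are left untouched.
_PATTERN = re.compile(
    r"\b(?:"
    + "|".join(re.escape(k) for k in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def hipify_toy(source: str) -> str:
    """Replace known CUDA names in source text with their HIP equivalents."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(0)], source)


if __name__ == "__main__":
    cuda_src = (
        "#include <cuda_runtime.h>\n"
        "cudaMalloc(&d_buf, n);\n"
        "cudaMemcpy(d_buf, h_buf, n, cudaMemcpyHostToDevice);\n"
        "cudaFree(d_buf);\n"
    )
    print(hipify_toy(cuda_src))
```

Because HIP mirrors much of the CUDA runtime API, many ports reduce largely to this kind of mechanical renaming, with manual work reserved for vendor-specific libraries and inline assembly.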

Customers Benefiting from OCI & AMD Price-Performance

Absci accelerates AI-driven drug discovery with Oracle and AMD

Absci, a clinical-stage biotech company, is partnering with Oracle Cloud Infrastructure (OCI) and AMD to accelerate generative AI-driven drug discovery, including large-scale molecular dynamics and antibody design. By leveraging OCI’s AI infrastructure and AMD Instinct™ GPUs, Absci has streamlined its compute stack, reduced inter-GPU latency to 2.5 µs, and achieved terabytes-per-second throughput for data handling—all without hypervisor overhead.

“Our mission is to push the boundaries of how we design new therapeutics,” said Sean McClain, founder and CEO, Absci. “With OCI and AMD, we are pairing our cutting-edge AI models with best‑in‑class infrastructure. This collaboration accelerates our ability to bring novel therapeutics to patients while laying the technical foundation for the next generation of AI‑powered drug‑discovery workflows.”

Seekr develops accurate, explainable AI securely with Oracle and AMD

Seekr, an AI company focused on trusted AI, has signed a multi-year deal with Oracle Cloud Infrastructure (OCI) to accelerate enterprise AI and develop next-gen models and agents. The collaboration combines SeekrFlow™, OCI’s high-performance infrastructure, and AMD GPUs to enable faster, more efficient model training at global scale.

“OCI was the obvious choice as our international infrastructure partner,” said Rob Clark, president, Seekr. “Developing next-generation vision-language foundation models for top satellite providers and nation-states analyzing decades of imagery and sensor data requires massive raw GPU compute capacity. Oracle and AMD both came to the table with the infrastructure, top performance multi-node training compute, international presence, and the mindset that makes this possible.”

Getting Started

With the launch of OCI Compute featuring AMD MI355X GPUs, Oracle advances AI infrastructure performance, scalability, and cost efficiency. Join leading customers leveraging AMD GPUs on OCI for top-tier performance and industry-leading pricing. Get started today.

Read the Oracle AI World 2025 Press Release to learn more about the full OCI and AMD AI Infrastructure lineup.