For the steps to fine-tune an LLM using dstack, see Fine-Tune and Serve Large Language Models on Oracle Cloud Infrastructure with dstack.

The success of OpenAI's GPT models has demonstrated the potential of generative AI and motivated enterprises across industries to explore integrating large language models (LLMs) into their products and services. However, enterprises that actually adopt LLMs face several significant challenges.

Why open source LLMs

Control and privacy

While services such as OpenAI radically simplify the use of LLMs and deliver consistently high quality, they pose risks for enterprises. If an enterprise doesn't own the weights of the LLM, the model's behavior can change unexpectedly whenever the provider updates it, putting the enterprise's core products and services at risk. Even slight changes in model behavior can cause unpredictable quality degradation. Additionally, using services like OpenAI involves sharing proprietary and customer data with third parties.

Customization and costs

GPT and similar models are designed to be versatile, achieving high quality across diverse use cases. This generalization, combined with a token-based pricing model, can lead to exorbitant costs when enterprises use LLMs at a large scale. Moreover, such services offer only limited customization for highly specialized domains.

Until recently, few alternatives to OpenAI and similar services existed. However, the release of Meta’s Llama 2, and more recently Llama 3, has provided enterprises with viable options to overcome these challenges.

Llama is changing the game

Because Llama and similar models combine high quality with extensive customization potential, enterprises can fine-tune them for specific tasks. This approach allows enterprises to use smaller models cost-effectively without compromising quality. More importantly, owning the weights of the model grants enterprises full control over its behavior, eliminating the risk of unexpected changes.

Furthermore, owning the weights enables enterprises to deploy LLMs in their own secure environments, ensuring that neither their data nor their customers' data is shared with third parties.

While open source LLMs offer a significant advantage in building custom models, training and deploying LLMs in the cloud can be complex.

Simplifying training and deploying LLMs with dstack

dstack is an open source container orchestration platform that simplifies the training and deployment of LLMs in the cloud. It’s tightly integrated with Oracle Cloud Infrastructure (OCI) and streamlines the process of setting up cloud infrastructure for distributed training and scalable deployment of LLMs.

For example, you can specify a configuration for a training task or a model deployment, and dstack sets up the required infrastructure and orchestrates the containers. This approach lets you use your own scripts and any open source framework.
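The OCI integration mentioned above is configured once on the dstack server rather than in each run configuration. As a minimal sketch, assuming the OCI CLI is already set up with a default profile in `~/.oci/config`, the server's `~/.dstack/server/config.yml` could declare an OCI backend as follows. The project name `main` is dstack's default project; the field names follow dstack's backend configuration format as we understand it, so check the dstack OCI backend documentation for the fields your version supports.

```yaml
# ~/.dstack/server/config.yml (sketch; verify field names against your dstack version)
projects:
  - name: main
    backends:
      # Provision OCI instances using the default profile in ~/.oci/config
      - type: oci
        creds:
          type: default
```

With a backend like this in place, run configurations such as the fine-tuning task in this post don't need to contain any cloud credentials themselves.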

The following example shows a fine-tuning task using three nodes, each with two NVIDIA A10 Tensor Core GPUs, to fine-tune the Gemma 7B model:

type: task

# dstack's base image with Python 3.11 (the plain python:3.11 Docker image has no conda)
python: "3.11"

env:
  - ACCEL_CONFIG_PATH
  - FT_MODEL_CONFIG_PATH
  - HUGGING_FACE_HUB_TOKEN
  - WANDB_API_KEY

commands:
  - conda install cuda
  - pip install -r requirements.txt
  # dstack sets the DSTACK_* variables on every node of the cluster.
  # The multi-line plain scalar is folded into a single command line.
  - accelerate launch
    --config_file recipes/custom/accel_config.yaml
    --main_process_ip=$DSTACK_MASTER_NODE_IP
    --machine_rank=$DSTACK_NODE_RANK
    --num_processes=$DSTACK_GPUS_NUM
    --num_machines=$DSTACK_NODES_NUM
    --main_process_port=8008
    scripts/run_sft.py recipes/custom/config.yaml

ports:
  - 6006

nodes: 3

resources:
  gpu: a10:2
  shm_size: 24GB

Key features of dstack

dstack offers the following benefits and capabilities:

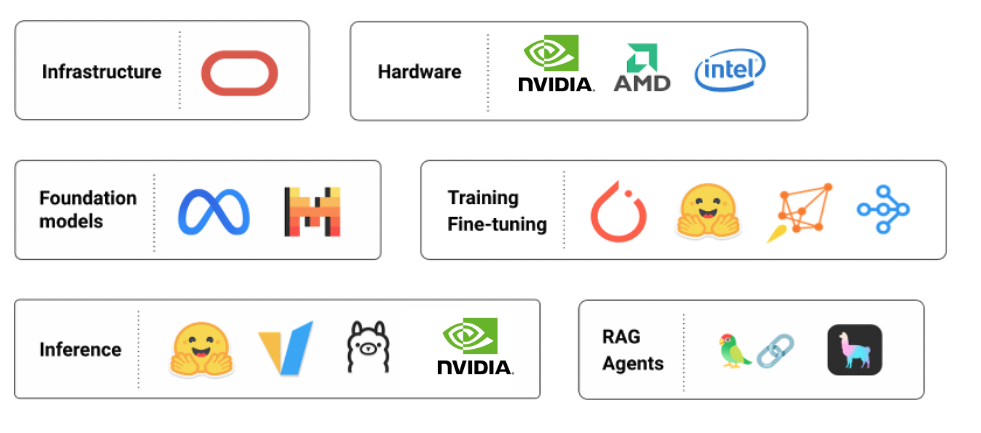

• Library integration: dstack works with any open source framework, such as those in the Hugging Face ecosystem (Accelerate, PEFT, TRL, TGI, and others).

• Infrastructure setup: dstack manages the infrastructure, whether for distributed training or scalable deployment.

• Resource allocation: dstack makes it easy to specify the number of GPUs and nodes for fine-tuning jobs or the number of replicas and autoscaling rules for service deployment.
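To illustrate the replicas and autoscaling rules for service deployment, here is a minimal sketch of a dstack service configuration that serves a model with TGI. It assumes the fine-tuned weights were pushed to a Hugging Face repository; `your-org/gemma-7b-finetuned` is a hypothetical name, and the replica range and requests-per-second target are illustrative values rather than recommendations. Depending on your dstack version, autoscaling may also require a gateway to be configured.

```yaml
type: service
name: gemma-tgi

# TGI's official container image
image: ghcr.io/huggingface/text-generation-inference:latest

env:
  - HUGGING_FACE_HUB_TOKEN
  # Hypothetical repository holding the fine-tuned weights
  - MODEL_ID=your-org/gemma-7b-finetuned

commands:
  # text-generation-launcher reads the model to serve from MODEL_ID
  - text-generation-launcher --port 8000

# Port the model server listens on inside the container
port: 8000

# Run between 1 and 4 replicas, scaling on requests per second
replicas: 1..4
scaling:
  metric: rps
  target: 10

resources:
  gpu: a10:2
```

With this configuration, dstack adjusts the number of replicas within the `1..4` range based on the observed request rate, so the same file covers both a single-replica deployment and a scaled-out one.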

Conclusion

Open source LLMs and frameworks for training and deployment are pivotal in enabling enterprises to adopt generative AI in a cost-effective and straightforward manner. By combining dstack, OCI, and the open source ecosystem of frameworks and LLMs, your team can accelerate the adoption of AI and harness its full potential.

Try an Oracle Cloud Free Trial! A 30-day trial with US$300 in free credits gives you access to Oracle Cloud Infrastructure. You can also reproduce a fine-tuning run with dstack on Oracle Cloud Infrastructure by following the tutorial, Fine-Tune and Serve Large Language Models on Oracle Cloud Infrastructure with dstack.