As AI workloads mature from training-focused experiments to real-time, production-scale systems, enterprises are starting to look for infrastructure that meets the performance demands of each stage — from model training to retrieval-augmented generation (RAG) and real-time inference.

S3-compatible storage has emerged as a standard interface for modern AI platforms, thanks to its flexibility and cloud-native integration. However, workloads like RAG, interactive inferencing, and agentic AI often require high-concurrency, low-latency access to object data — pushing the performance envelope further.

At Oracle, we collaborate closely with technology partners to provide best-in-class storage solutions tailored to the evolving needs of our customers. By drawing on our partners’ specialized expertise, we help our customers benefit from efficient, scalable, high-performance storage options. In this blog, we share the results of a joint Proof of Concept (POC) between Oracle and Oracle Partner Network member DDN, highlighting the architecture and performance outcomes of using the DDN Infinia solution on Oracle Cloud Infrastructure (OCI) IaaS Compute.

Infinia is a software-defined, S3-compatible KV store optimized for GPU-driven AI, delivering high IOPS, low latency, and scalable throughput. DDN Infinia offers:

- Extreme S3 Performance: Ultra-low latency, high throughput, and millions of IOPS (Obj/sec)

- Unified Data Access: Connects multi-modal data across environments without silos

- Massive Scale: Supports 200,000+ GPUs and exabyte-scale datasets

- Native Multi-Tenancy: Isolate and manage workloads with QoS and dynamic scaling

1. Deployment Architecture

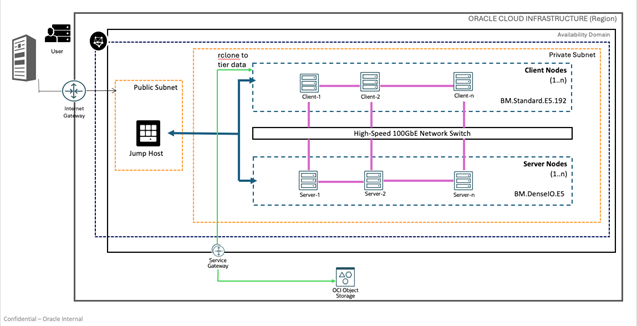

This POC demonstrates the scalability and performance of DDN Infinia software-defined storage running on OCI bare metal servers to support high-throughput and low latency S3 workloads for AI/ML, media, and HPC. We recommend using BM.DenseIO.E5 and BM.DenseIO.E4 as server nodes.

Figure 1: OCI Deployment Architecture for DDN Infinia Scale-Out Setup

2. Benchmark Setup

2.1 Server Nodes: 6 x OCI Compute BM.DenseIO.E5.128

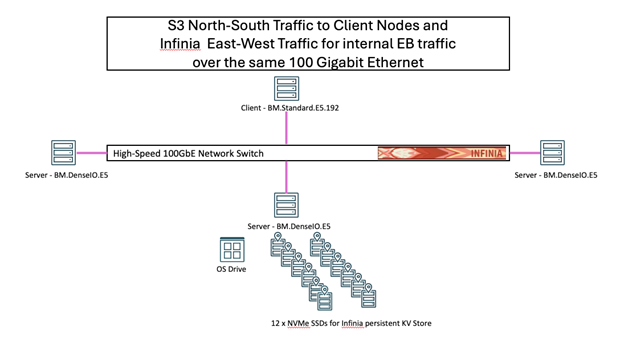

Figure 2: DDN Infinia POC Setup at OCI – Server Config

DDN Infinia was deployed across six BM.DenseIO.E5 nodes (2 × AMD EPYC 9J14 CPUs, 128 OCPUs total, 1.5 TB RAM, 12 × 6.8 TB NVMe SSDs, and a single 100 GbE high-speed networking interface), forming a single logical storage cluster that exposed a unified S3-compatible namespace. We recommend a minimum of six server nodes for the initial Infinia storage configuration, both for high availability and so that data access continues uninterrupted if one or more server nodes are taken offline (for example, for software or hardware maintenance). The setup provided ~450 TB of pre-protection usable capacity with full support for erasure coding, metadata indexing, and distributed high-performance S3 object access.

2.2 Client Nodes: 6 x BM.Standard.E5.192

The S3 client nodes were provisioned on the OCI BM.Standard.E5.192 shape to simulate AI/ML applications accessing DDN Infinia storage via the S3 protocol. Each node included 2 x 96-core AMD EPYC CPUs (192 OCPUs), 2.2 TB RAM, and a single 100 GbE high-speed networking interface.

The client nodes can be GPU nodes or CPU-only nodes. You can also tier storage by combining Infinia with low-cost Object Storage and moving data in and out based on your workload requirements.

Client nodes generated high-concurrency GET/PUT workloads using industry tools such as the AWS CLI, s5cmd, and the warp benchmark, emulating AI applications performing inference, RAG, and real-time streaming I/O.

2.3 Data Protection

In the above setup, out of the total 490 TB NVMe storage, 450 TB is the pre-protection usable storage pool. The ~40 TB difference is normal system reserve for: consolidated audit trail, cluster metadata, rebuild/journal space, virtual hot spare, fragmentation overhead.

The table below demonstrates raw and usable capacity for some typical scenarios using OCI BM.DenseIO.E5 nodes.

| Nodes | Raw Capacity (TB) | Usable, Pre-Protection (TB) | Usable, EC 8+3P (TB) | Usable, EC 16+3P (TB) | Usable, 4-Way Replication (TB) |
|---|---|---|---|---|---|
| 6 | 489.6 | 450 | 327 | – | 112.5 |
| 10 | 816 | 761 | 553 | 642 | 190 |
| 12 | 979.2 | 917 | 666 | 774 | 229 |
| 16 | 1306 | 1223 | 889 | 1030 | 306 |
| 32 | 2611.2 | 2444 | 1776 | 2052 | 611 |

We recommend EC 8+3P for clusters with fewer than 10 nodes and EC 16+3P for clusters with 10 or more nodes; the latter is the recommended configuration for production. 4-way replication is used for tiny/small I/O and metadata. If you need help calculating capacity, please reach out to the OCI HPC GPU Storage team.
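The capacity figures above can be approximated with simple arithmetic. The sketch below is only an illustration, not DDN's sizing method: it assumes usable capacity under erasure coding is the pre-protection pool multiplied by data/(data + parity), and that N-way replication divides the pool by N. The function names are hypothetical. The published table values differ slightly from this estimate in some rows, so treat the output as a rough guide.

```python
# Illustrative capacity arithmetic. The overhead model is an assumption,
# not DDN's official sizing tool.
NVME_PER_NODE = 12   # NVMe drives per BM.DenseIO.E5 node (from the text)
TB_PER_DRIVE = 6.8   # TB per NVMe drive (from the text)


def raw_capacity_tb(nodes: int) -> float:
    """Raw NVMe capacity of the cluster in TB."""
    return nodes * NVME_PER_NODE * TB_PER_DRIVE


def ec_usable_tb(pre_protection_tb: float, data: int, parity: int) -> float:
    """Approximate usable capacity with erasure coding data+parity."""
    return pre_protection_tb * data / (data + parity)


def replicated_usable_tb(pre_protection_tb: float, copies: int) -> float:
    """Approximate usable capacity with N-way replication."""
    return pre_protection_tb / copies


if __name__ == "__main__":
    pre = 450.0  # pre-protection usable TB for six nodes (from the table)
    print(f"raw capacity:      {raw_capacity_tb(6):.1f} TB")
    print(f"EC 8+3P usable:    {ec_usable_tb(pre, 8, 3):.0f} TB")
    print(f"4-way replication: {replicated_usable_tb(pre, 4):.1f} TB")
```

For the six-node cluster this estimate lines up with the 489.6 TB raw, 327 TB (EC 8+3P), and 112.5 TB (4-way replication) entries in the table.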

3. Performance Results

3.1 S3 Metadata and Data Performance

The S3 performance testing focused on Obj/sec for small objects and metadata operations, throughput for large objects, and latency, including Time-to-First-Byte (TTFB), for S3 object access.

| S3 Operation | Obj/Sec | Throughput | Latency |
|---|---|---|---|
| PUT | 52 K/s | 27.6 GiB/s | 4 milliseconds |
| GET | 225 K/s | 34.6 GiB/s | 1.7 milliseconds |

| S3 Metadata Operation | Obj/Sec |
|---|---|
| LIST | 194 K/s |
| STAT | 345 K/s |

| Metric | Value |
|---|---|
| Time-to-First-Byte | 5 milliseconds |

Table 1: Aggregate DDN Infinia S3 performance on OCI across the six BM.DenseIO.E5 nodes
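To make the metrics in Table 1 concrete, here is a minimal sketch of how Obj/sec, throughput, and mean latency can be derived from per-request timings under a fixed level of concurrency. It is not how warp or s5cmd are implemented, and it was not used to produce the results above. `fake_get` is a hypothetical stand-in for a real S3 GET call, and `run_benchmark` is an illustrative name.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fake_get(key: str) -> bytes:
    """Hypothetical stand-in for an S3 GET; swap in a real client call."""
    time.sleep(0.001)          # pretend the request takes about 1 ms
    return b"x" * 4096         # pretend the object is 4 KiB


def run_benchmark(op, keys, concurrency):
    """Run op(key) for every key using a fixed number of worker threads."""

    def timed(key):
        start = time.perf_counter()
        payload = op(key)
        return time.perf_counter() - start, len(payload)

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed, keys))
    wall = time.perf_counter() - wall_start

    latencies = [elapsed for elapsed, _ in results]
    total_bytes = sum(size for _, size in results)
    return {
        "objects": len(results),
        "obj_per_sec": len(results) / wall,            # completed requests per second
        "mib_per_sec": total_bytes / wall / 2**20,     # payload throughput
        "mean_latency_ms": mean(latencies) * 1000,     # per-request latency
    }


if __name__ == "__main__":
    stats = run_benchmark(fake_get, [f"obj-{i}" for i in range(256)], concurrency=16)
    for name, value in stats.items():
        print(f"{name}: {value:.2f}")
```

Raising `concurrency` increases Obj/sec until the client, network, or storage becomes the bottleneck, which is why the benchmark tools in this POC were run with high parallelism.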

Next, we compare the single-client throughput of DDN Infinia on OCI using the following S3 tools and benchmarks:

- Warp: This is built for speed—it runs tons of parallel requests to max out the network and hits close to the theoretical limit of the 100 Gbps NIC of the BM.DenseIO.E5. Thus, we see 10.6 GiB/s throughput with Warp.

- AWS CLI cp: This is written in Python and isn’t really optimized for high-speed transfers or heavy parallelism. It just can’t keep up with the hardware, so the best it managed was 2.2 GiB/s.

- s5cmd cp: This is written in Go, which handles concurrency much better than Python, so it’s able to push more data in parallel and get better performance than AWS CLI cp, but it still doesn’t quite reach what Warp can do. This lands in the middle at 4.7 GiB/s.

The more optimized the tool is for parallelism and efficient data transfer, the closer you get to saturating your network link. That’s why we see such a big gap between these results.

| Benchmark Tool | Single S3 Client to Single S3 Server Throughput |
|---|---|
| warp benchmark | 10.6 GiB/s |
| AWS CLI cp | 2.2 GiB/s |
| s5cmd cp | 4.7 GiB/s |

Table 2: Single S3 Client to Single S3 Server DDN Infinia throughput on OCI
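To put the Table 2 numbers in context, the sketch below converts the 100 Gbps link speed to GiB/s and expresses each tool's throughput as a fraction of that line rate. It ignores protocol overhead, so the true achievable ceiling is somewhat lower; the helper names are illustrative.

```python
NIC_GBPS = 100  # single 100 GbE interface per node (from the text)

# Single-client throughput in GiB/s, taken from Table 2.
MEASURED_GIB_S = {
    "warp benchmark": 10.6,
    "AWS CLI cp": 2.2,
    "s5cmd cp": 4.7,
}


def line_rate_gib_per_s(gbps: float) -> float:
    """Convert a link speed in Gbit/s to GiB/s, ignoring protocol overhead."""
    return gbps * 1e9 / 8 / 2**30


def utilization(measured_gib_s: float, gbps: float = NIC_GBPS) -> float:
    """Fraction of the raw line rate that a measured throughput represents."""
    return measured_gib_s / line_rate_gib_per_s(gbps)


if __name__ == "__main__":
    print(f"line rate: {line_rate_gib_per_s(NIC_GBPS):.2f} GiB/s")
    for tool, gib_s in MEASURED_GIB_S.items():
        print(f"{tool:15s} {gib_s:5.1f} GiB/s  {utilization(gib_s):.0%} of line rate")
```

A 100 Gbps link works out to roughly 11.6 GiB/s, so warp's 10.6 GiB/s is about 91% of the raw line rate, while s5cmd and the AWS CLI reach about 40% and 19%.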

On OCI, DDN Infinia consistently delivered:

- Sustained High Obj/sec (IOPS),

- Sustained High Throughput,

- Low latency and TTFB in the low single-digit milliseconds, and

- Storage performance that scales with the number of scale-out OCI server nodes.

3.2 Performance Scalability

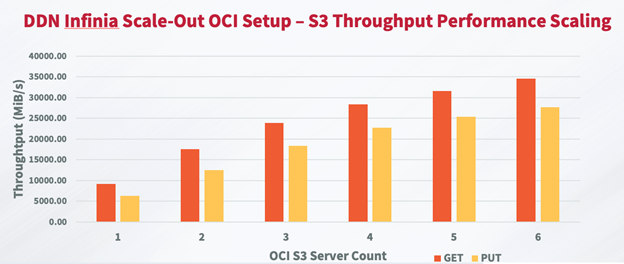

Infinia S3 performance and scalability were assessed using the popular warp benchmark. DDN Infinia’s S3 throughput scales with the number of scale-out OCI server nodes (Figure 3) until the network bandwidth between the nodes (a single 100 GbE interface per node) is saturated. The aggregate S3 throughput is balanced across the participating OCI server nodes, with each node sustaining ~4.6 GiB/s for PUT and ~5.7 GiB/s for GET operations.

Figure 3: DDN Infinia Scale-Out OCI Setup – S3 Throughput Performance Scaling
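As a rough illustration of this scaling behavior, the sketch below multiplies the approximate per-node figures by the node count. It assumes idealized linear scaling with perfectly balanced load, which is a simplification; the node counts other than six are illustrative and are not measured data points from this POC.

```python
# Approximate per-node sustained throughput in GiB/s (from the text).
PER_NODE_GIB_S = {"PUT": 4.6, "GET": 5.7}


def aggregate_gib_s(nodes: int, op: str) -> float:
    """Idealized aggregate throughput, assuming every node contributes equally."""
    return nodes * PER_NODE_GIB_S[op]


if __name__ == "__main__":
    for nodes in (2, 4, 6):  # illustrative cluster sizes
        put = aggregate_gib_s(nodes, "PUT")
        get = aggregate_gib_s(nodes, "GET")
        print(f"{nodes} nodes: PUT ~{put:.1f} GiB/s, GET ~{get:.1f} GiB/s")
```

For six nodes this gives about 27.6 GiB/s for PUT and 34.2 GiB/s for GET; the GET estimate is slightly below the 34.6 GiB/s in Table 1 because the per-node figure is rounded.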

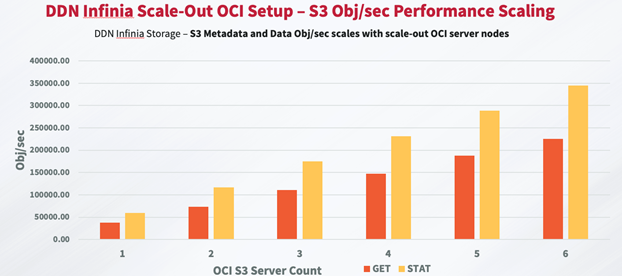

DDN Infinia’s S3 Metadata and Data Obj/sec performance scales with scale-out OCI server nodes (Figure 4). The aggregate S3 metadata and data Obj/sec performance is balanced across the participating scale-out OCI server nodes.

Figure 4: DDN Infinia Scale-Out OCI Setup – S3 Obj/sec Performance Scaling

3.3 Cost Comparison

Let’s compare the cost of building 490 TB of NVMe storage capacity on different cloud providers.[1]

| Cloud Provider | Compute Shape | Cost/Hr to build 490 TB NVMe Infinia Storage | Region | Relative Cost (vs. OCI) |
|---|---|---|---|---|
| OCI | BM.DenseIO.E5.128 | $71.46 | All Regions | 1x |
| AWS | i3en.24xlarge | $97.63 | us-east-1 (Northern Virginia) | 1.4x |
| AWS | i3en.24xlarge | $108 | eu-west-1 (Ireland) or us-west-1 (Northern California) | 1.5x |
| AWS | i3en.24xlarge | $116.64 | eu-central-1 (Frankfurt) | 1.6x |
| AWS | i3en.24xlarge | $114.91 | ap-northeast-1 (Tokyo) or ap-northeast-3 (Osaka) | 1.6x |
| AWS | i3en.24xlarge | $118.80 | me-central-1 (UAE) | 1.7x |
| GCP | z3-highmem-88-highlssd | $187.60 | us-east4 (Northern Virginia) | 2.6x |
| GCP | z3-highmem-88-highlssd | $193.90 | europe-west4 (Netherlands) | 2.7x |
| GCP | z3-highmem-88-highlssd | $229.60 | europe-central2 (Warsaw) | 3.2x |
| GCP | z3-highmem-88-highlssd | $234.64 | asia-northeast1 (Tokyo) or asia-northeast2 (Osaka) | 3.3x |
| GCP | z3-highmem-88-highlssd | $221.20 | me-central1 (Doha) | 3.1x |

Table 3: Cost Comparison across Cloud Providers

[1] – Price calculations were done using retail pricing as of June 30th, 2025.

Unlike other cloud providers, OCI compute pricing is the same across all regions. The table above shows that OCI BM.DenseIO.E5 offers a lower-cost option ($71.46/hr) for meeting a total 490 TB NVMe storage requirement (usable unprotected capacity) for the DDN Infinia solution.

The solution remains cost-efficient on OCI as the required capacity scales up because of:

- High storage per node (81.6 TB)

- Competitive per-node hourly retail pricing ($11.91)

- Fewer nodes needed to meet capacity
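The relative-cost column in Table 3 and the per-node figures above follow from simple arithmetic, sketched below. The prices are the retail figures quoted in this post; the helper names are illustrative, and only a few rows of the table are included.

```python
OCI_NODE_PRICE_USD_HR = 11.91  # per-node hourly retail price (from the text)
OCI_NODES = 6                  # nodes needed for ~490 TB raw NVMe (from the text)
TB_PER_NODE = 81.6             # raw NVMe per BM.DenseIO.E5 node (from the text)

# Hourly cluster cost for a few rows of Table 3 (USD).
OTHER_CLUSTERS_USD_HR = {
    "AWS us-east-1": 97.63,
    "AWS eu-central-1": 116.64,
    "GCP us-east4": 187.60,
}


def cluster_cost_usd_hr(node_price: float, nodes: int) -> float:
    """Hourly cost of the whole storage cluster."""
    return node_price * nodes


def relative_cost(other_usd_hr: float, baseline_usd_hr: float) -> float:
    """How many times more expensive a cluster is than the baseline."""
    return other_usd_hr / baseline_usd_hr


def cost_per_raw_tb_hr(cluster_usd_hr: float, nodes: int) -> float:
    """Hourly cost per raw TB of NVMe."""
    return cluster_usd_hr / (nodes * TB_PER_NODE)


if __name__ == "__main__":
    oci = cluster_cost_usd_hr(OCI_NODE_PRICE_USD_HR, OCI_NODES)
    print(f"OCI cluster: ${oci:.2f}/hr, ${cost_per_raw_tb_hr(oci, OCI_NODES):.3f} per raw TB-hr")
    for name, price in OTHER_CLUSTERS_USD_HR.items():
        print(f"{name}: ${price:.2f}/hr, {relative_cost(price, oci):.1f}x OCI")
```

Six nodes at $11.91 per hour gives the $71.46 per hour shown for OCI in Table 3.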

4. Conclusion

The joint POC between Oracle and DDN confirmed that DDN Infinia on OCI delivers linear scalability, high S3 throughput, ultra-low latency, and massive concurrency. Together, OCI and DDN solutions can provide a cloud-native stack built for:

- Low Infrastructure Cost on OCI – The OCI BM.DenseIO.E5 shape delivers cost-effective NVMe storage and sustains that cost-efficiency as capacity requirements grow.

- High Performance Scalable Data Storage – DDN Infinia’s low latency, low TTFB, high metadata Obj/sec (IOPS), and high data throughput, all of which scale with node count, make it well suited as storage for high-performance applications and can accelerate AI LLM inference as well as RAG workloads.

- Faster Time-to-Insight, Higher GPU Efficiency – By eliminating I/O bottlenecks and delivering sustained high-performance object access, Infinia helps the GPUs and CPUs on OCI remain fully utilized. This translates into shorter training times, faster AI inference, and lower cost per inference.

- Optimized for OCI’s High Performance Architecture – Running on OCI’s dense bare metal compute and 100 GbE network fabric, Infinia takes full advantage of Oracle’s infrastructure, delivering consistent, stable, reliable performance at scale.

To learn more, contact Pinkesh Valdria at Oracle or ask your Oracle Sales Account team to engage the OCI HPC GPU Storage team. You can also visit the DDN website to learn more about the Infinia on OCI solution, or contact oracle@ddn.com.