We’re excited to announce the general availability of the NVIDIA GPU device plugin add-on in Oracle Cloud Infrastructure (OCI) Kubernetes Engine (OKE). This add-on provides greater control, flexibility, and visibility for customers running GPU-intensive workloads in their OKE clusters.

OKE Add-ons

Many OKE customers run AI training and inference workloads on NVIDIA GPUs. For Kubernetes to use GPUs, a GPU device plugin must advertise GPU resources to workloads, exposing details such as the number of GPUs on a node and their health. With this release, you can enable and manage the NVIDIA GPU device plugin as a dedicated OKE add-on. This change simplifies GPU resource management, improves transparency, and lets you tailor the configuration to your needs. The NVIDIA GPU device plugin is automatically installed on all new OKE clusters, but the add-on that manages it is available only for OKE enhanced clusters, not OKE basic clusters.
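To make "advertising GPU resources to workloads" concrete, here is a minimal sketch of how a workload might ask for a GPU once the device plugin is running. It builds a Pod manifest that requests the `nvidia.com/gpu` extended resource, which is the resource name the NVIDIA device plugin registers. The helper name `gpu_pod_manifest`, the Pod name, and the container image are hypothetical placeholders, not part of OKE.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that asks the scheduler for `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,  # hypothetical image; use your own
                    # GPUs are an extended resource, so they are requested
                    # under `limits` with the name the plugin advertises.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("gpu-smoke-test", "example.com/cuda-sample:latest")
    # kubectl accepts JSON manifests, so this output can be saved and applied.
    print(json.dumps(manifest, indent=2))
```

A Pod like this stays in the Pending state until a node reports enough allocatable `nvidia.com/gpu` capacity, which gives you a quick way to confirm that the plugin is advertising GPUs.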

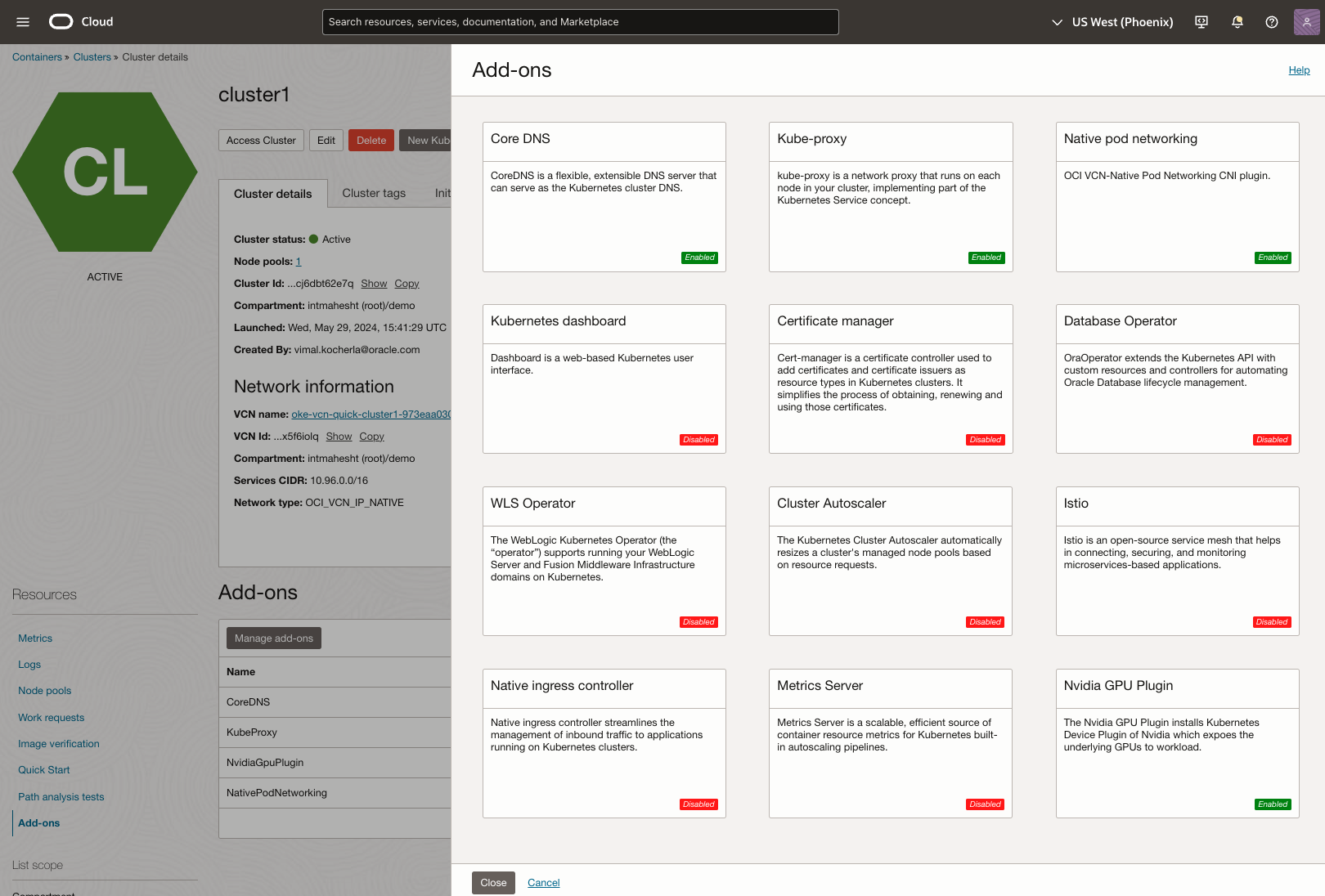

OKE add-ons are modular features that extend Kubernetes cluster functionality. They offload the management of cluster operational software to Oracle, which simplifies lifecycle management and lets you install, configure, and update critical components without additional operational overhead. Migrating the NVIDIA GPU device plugin to the add-ons framework gives you additional configuration attributes for more granular control.

Enabling Add-ons

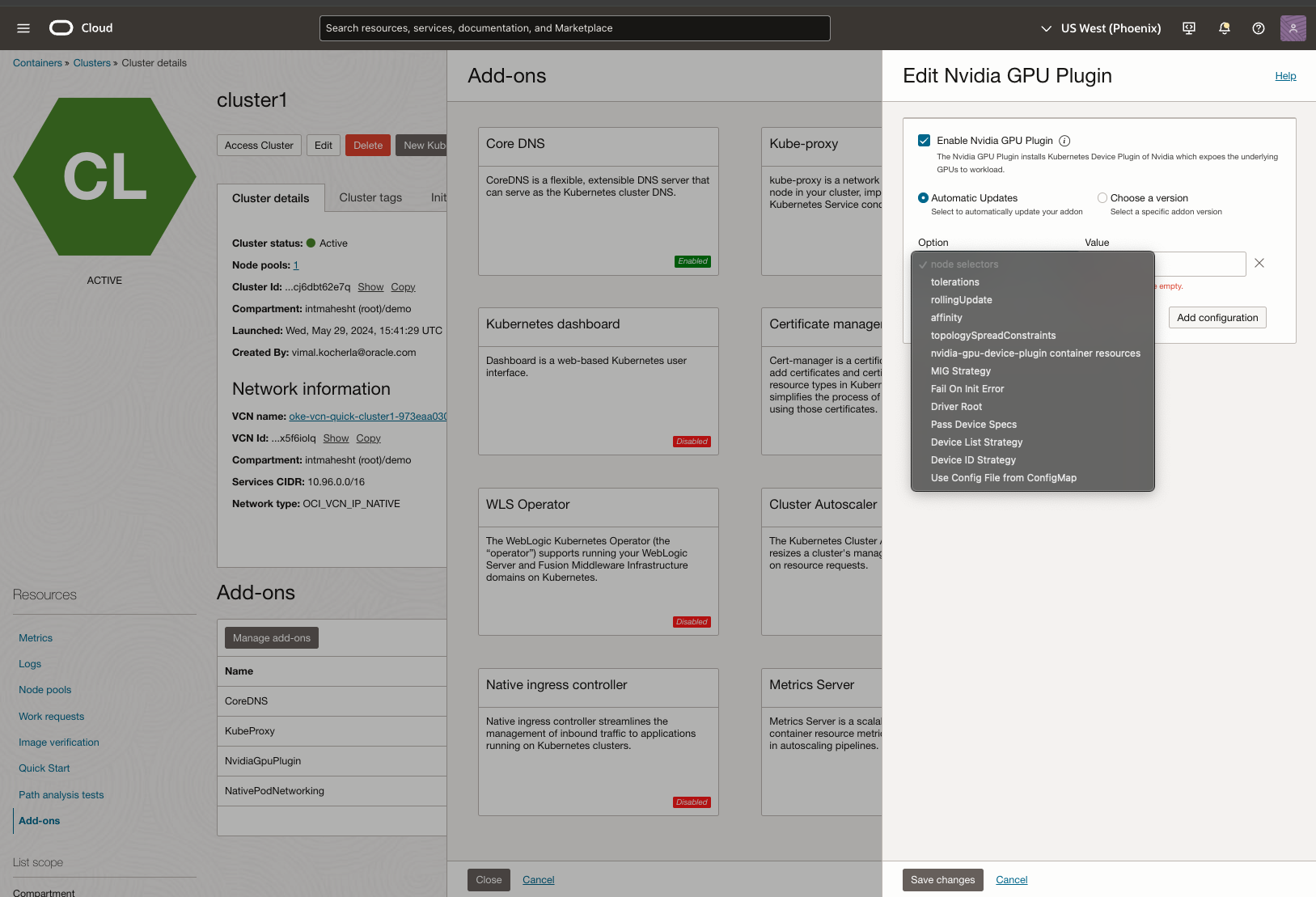

You can enable the NVIDIA GPU device plugin from the Oracle Cloud Console, CLI, software development kit (SDK), and REST API. In the Console, you can view and configure your add-ons from the Add-ons tab of your OKE cluster resource.

From there, you can enable or disable the NVIDIA GPU device plugin for your cluster and select the configurations you want. You can opt into or out of automatic updates by Oracle. If you opt in, Oracle automatically updates the device plugin add-on and keeps it up to date as new versions are released. If you opt out, you select an add-on version from the list of supported versions. In either case, you can apply common Kubernetes arguments for pods. For available configuration arguments and documentation, see NVIDIA GPU Plugin add-on configuration arguments.
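If you prefer the CLI or API to the Console, the same choices (add-on name, optional pinned version, configuration arguments) are typically expressed as a small JSON document. The sketch below builds such a payload using only the Python standard library. The field names (`addonName`, `version`, `configurations` with `key`/`value` pairs), the add-on name `NvidiaGpuPlugin`, the version string, and the `tolerations` key are assumptions for illustration. The sketch also assumes that omitting `version` corresponds to opting into automatic updates. Check the add-on configuration arguments documentation for the exact names that your cluster supports.

```python
import json
from typing import Optional


def addon_install_payload(
    addon_name: str,
    version: Optional[str] = None,
    configurations: Optional[dict] = None,
) -> dict:
    """Build a JSON-serializable add-on install request (assumed shape)."""
    payload = {"addonName": addon_name}
    # Assumption: leaving `version` out means Oracle manages updates;
    # setting it pins the add-on to one supported version.
    if version is not None:
        payload["version"] = version
    if configurations:
        # Each argument becomes a key/value pair; non-string values are
        # serialized to JSON strings.
        payload["configurations"] = [
            {"key": k, "value": v if isinstance(v, str) else json.dumps(v)}
            for k, v in configurations.items()
        ]
    return payload


if __name__ == "__main__":
    payload = addon_install_payload(
        "NvidiaGpuPlugin",            # assumed add-on name
        version="v0.0.0-example",     # hypothetical version string
        configurations={"tolerations": [{"operator": "Exists"}]},  # illustrative key
    )
    print(json.dumps(payload, indent=2))
```

You could save the output to a file and pass it to the OCI CLI, for example with `oci ce cluster install-addon` and its `--from-json` option, assuming your CLI version provides that command.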

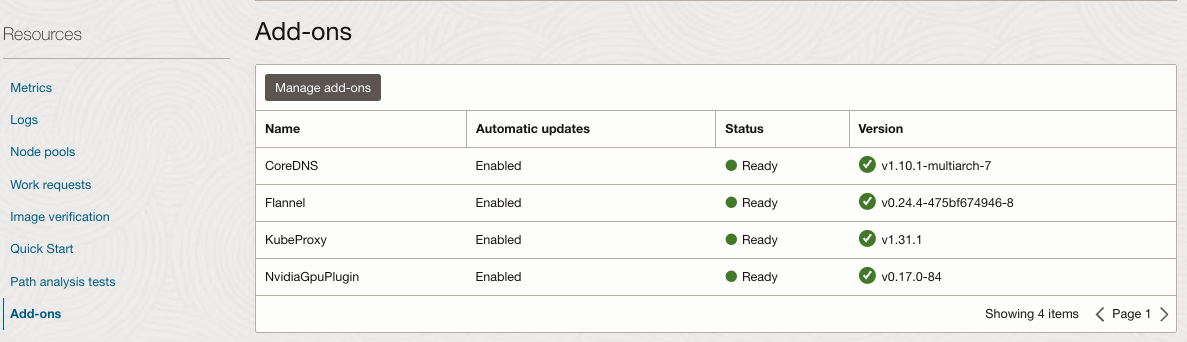

After you enable the NVIDIA GPU device plugin add-on, you can check its status.

You can also use this add-on as part of OCI AI Blueprints.

Conclusion

We hope that this update helps users configure and manage their GPU-intensive workloads more effectively. As always, we’re actively exploring more integrations and solutions to better serve our customers. For more information, see the following resources: