Oracle Container Engine for Kubernetes (OKE) provides a robust managed environment for deploying, operating, and scaling your containerized applications on Oracle Cloud Infrastructure (OCI). While OKE makes container orchestration easier, developers still need to make sure that Kubernetes pods are scheduled on the appropriate nodes so that the cluster and its underlying resources are used effectively. You can manage this process with two balancing forces in Kubernetes: taints and tolerations. Let’s delve into the options for automatically tainting Kubernetes nodes in OKE.

Setting the stage: Node affinity, taints, and tolerations

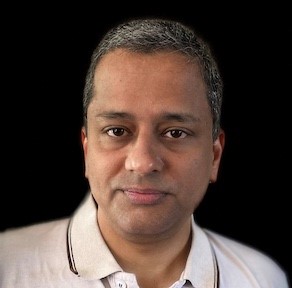

Node affinity is a property of pods that attracts them to a specific set of nodes, while taints are a property set on nodes that allows a node to repel a set of pods. Tolerations are applied to pods and, as the name implies, allow the scheduler to place those pods on nodes with matching taints. Taints and tolerations work together to ensure that pods aren’t scheduled on inappropriate nodes.

The Kubernetes documentation discusses the following common use cases in more detail:

- GPUs are at a premium, and with taints and tolerations, you can ensure that only the pods that need GPUs are scheduled on that Compute shape, and not other generic workloads.

- While each node pool has a homogeneous shape, you can have multiple node pools of different shape types for different use cases, such as ARM for single-threaded workloads, AMD or Intel for general workloads, and GPU for GPU workloads.

- Enable segregation based on group, purpose, and so on.

- Enable per-pod contextualized eviction behavior when node problems arise.

Taints typically have effects of either NoSchedule or NoExecute. You can also place a less restrictive effect of PreferNoSchedule.

Taint and tolerations flow diagram

The challenge at hand

To ensure that pods are scheduled on the appropriate nodes or evicted appropriately, the worker nodes need taints that work with the pods’ tolerations. Having the taints in place at startup avoids the hassle of cleaning up or evicting pods that landed on the wrong worker node. This blog explains how you can add the taints at node creation.

Custom cloud-init to the rescue

Apply the known taint properties to the worker nodes when you create them, either as part of scale-up or during node pool creation, to ensure the appropriate pod placement.

OKE uses the industry-standard cloud-init for initializing and configuring cloud instances. Typically, OKE installs a default cloud-init startup script on each OCI Compute instance in a node pool. The cloud-init script configures the Compute instance as a Kubernetes worker node.

The default cloud-init might not suit all use cases, so OKE offers the ability to run a custom cloud-init script that includes the provided default cloud-init. To understand the details of custom cloud-init, refer to the blog, Container Engine for Kubernetes custom worker node startup script support. One typical use case for custom cloud-init is applying taints automatically when a node is added. Other popular examples include resizing the root volume and setting the worker node to a specific time zone.

Enabling automatic tainting with cloud-init

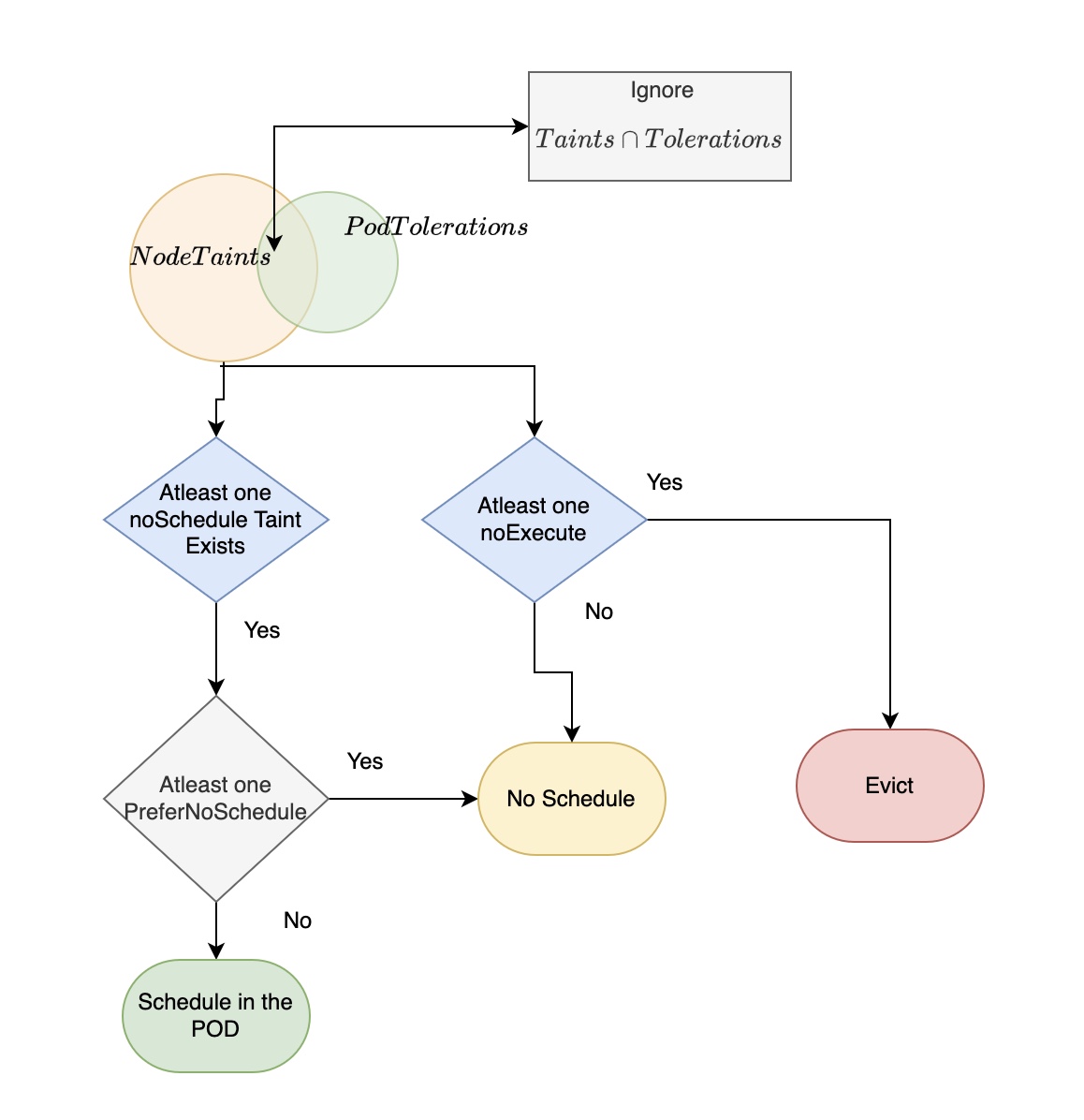

The default cloud-init script joins the worker node to the OKE cluster. The script is available as metadata on the worker node. You can apply taints by passing them to the script as kubelet extra arguments. You can add custom cloud-init and automatic tainting in multiple ways, including the Oracle Cloud Console or Terraform.

Each node pool can have a specific cloud-init, and every node in a node pool has the same cloud-init settings.

The following code block shows the cloud-init content for adding a worker node to the cluster and adding the taints.

#!/bin/bash

curl --fail -H "Authorization: Bearer Oracle" -L0 http://169.254.169.254/opc/v2/instance/metadata/oke_init_script | base64 --decode >/var/run/oke-init.sh

bash -x /var/run/oke-init.sh --kubelet-extra-args "--node-labels=key=value --register-with-taints=key=value:effect"

For example, to label nodes as production and allow only pods that tolerate the production taint to be scheduled on them, use the following line in the cloud-init script:

bash -x /var/run/oke-init.sh --kubelet-extra-args "--node-labels=env=prod --register-with-taints=env=prod:NoSchedule"
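A malformed taint string is easy to miss until a node fails to register as expected, so it can help to check the value before it goes into the cloud-init script. The following is a minimal sketch, not part of OKE: the `validate_taint` and `build_kubelet_args` helpers are hypothetical names, and the pattern is a simplified check of the `key=value:effect` form against the three effects mentioned earlier (Kubernetes itself applies stricter rules to keys and values). It assumes that both kubelet flags accept comma-separated lists.

```shell
#!/bin/bash
# Hypothetical helpers (not part of OKE): check taints and build the
# string that is passed to --kubelet-extra-args.

# Return 0 if $1 looks like key[=value]:effect with a known effect.
# Simplified check; Kubernetes applies stricter rules to keys and values.
validate_taint() {
  local re='^[A-Za-z0-9._/-]+(=[A-Za-z0-9._-]*)?:(NoSchedule|PreferNoSchedule|NoExecute)$'
  [[ "$1" =~ $re ]]
}

# Usage: build_kubelet_args LABELS TAINT...
# LABELS is a comma-separated key=value list. Each TAINT is validated,
# and the taints are joined with commas.
build_kubelet_args() {
  local labels="$1"; shift
  local taints="" t
  for t in "$@"; do
    validate_taint "$t" || { echo "invalid taint: $t" >&2; return 1; }
    taints="${taints:+$taints,}$t"
  done
  echo "--node-labels=${labels} --register-with-taints=${taints}"
}

build_kubelet_args "env=prod" "env=prod:NoSchedule"
```

The last line prints the same argument string used in the production example, and a placeholder such as `key=value:action` is rejected because `action` is not a valid effect.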

You can also use the preceding code block as the cloud-init script when creating a node pool from Terraform, by supplying it as user data. The steps are as follows.

cat > modules/oke/userdata/cloud-init.sh << !

#!/bin/bash

# DO NOT MODIFY

curl --fail -H "Authorization: Bearer Oracle" -L0 http://169.254.169.254/opc/v2/instance/metadata/oke_init_script | base64 --decode >/var/run/oke-init.sh

## run oke provisioning script

bash -x /var/run/oke-init.sh --kubelet-extra-args "--node-labels=env=test --register-with-taints=env=prod:NoSchedule"

touch /var/log/oke.done

!

Add the Terraform code block as shown below:

# in Terraform Block, source the above cloud-init

# Terraform code declaration

user_data = base64encode(file("modules/oke/userdata/cloud-init.sh"))

# in the resource "oci_containerengine_node_pool" add the below code

node_metadata = { user_data = var.user_data }

The following image shows an example of adding cloud-init through the Oracle Cloud Console:

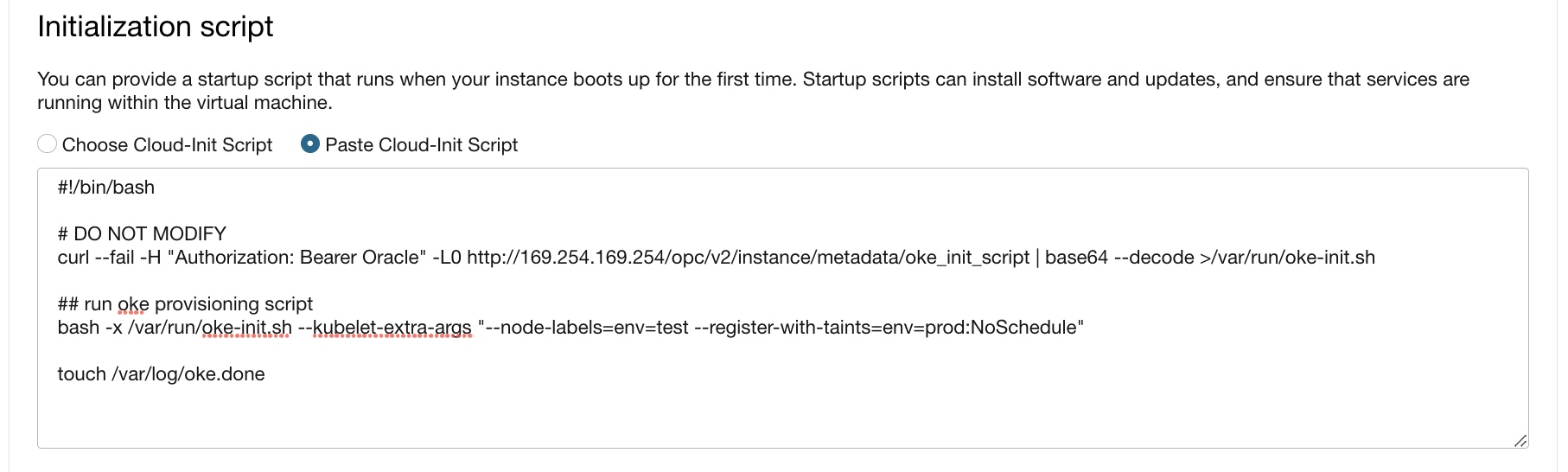

To validate the result, run the following command, which lists each node's hostname label together with its taints:

kubectl get nodes -o json | jq '[.items[]|.metadata.labels.hostname,.spec.taints]'

A pod created with a toleration for env=prod:NoSchedule can be scheduled in Node Pool 1, while a pod that has a toleration for env=test:NoSchedule can be scheduled in Node Pool 2.
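To make the pod side concrete, the following sketch writes a sample Pod manifest that tolerates the env=prod:NoSchedule taint. The pod name, container name, image, and file name are placeholders chosen for illustration. Because a toleration only permits scheduling on a tainted node and does not attract the pod to it, the sketch also adds a nodeSelector on the env=prod label, assuming the nodes were registered with `--node-labels=env=prod` as in the earlier example.

```shell
#!/bin/bash
# Write a sample Pod manifest (hypothetical names) that tolerates the
# env=prod:NoSchedule taint and selects nodes labeled env=prod.
cat > prod-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: prod-app            # hypothetical pod name
spec:
  nodeSelector:
    env: prod               # matches --node-labels=env=prod
  tolerations:
  - key: "env"
    operator: "Equal"
    value: "prod"
    effect: "NoSchedule"    # matches --register-with-taints=env=prod:NoSchedule
  containers:
  - name: app
    image: nginx            # placeholder image
EOF
echo "wrote prod-pod.yaml"
```

You can then apply the manifest with `kubectl apply -f prod-pod.yaml` and check which node the pod landed on with `kubectl get pod prod-app -o wide`.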

Conclusion

OKE with custom cloud-init and automatic tainting helps you use the Kubernetes cluster's resources effectively, and gives developers and site reliability engineers direct control over where workloads run. For more information on OKE, see the documentation.

Get started with Oracle Cloud Infrastructure today with our Oracle Cloud Free Tier and get trained and certified on OCI.