The original post can be found on the Cloud Native blog.

Even though cloud-native solutions dominate new development, legacy systems commonly still need to be integrated with newly developed systems. Legacy systems also bring a need for more traditional integration mechanisms, and FTP and SFTP have long been popular ways to exchange files (batch files) between systems.

As part of Oracle Integration Cloud Service, you can leverage the built-in FTP and SFTP capabilities. However, if you only need a simple SFTP server where clients can upload files, you can also rely on a more basic setup.

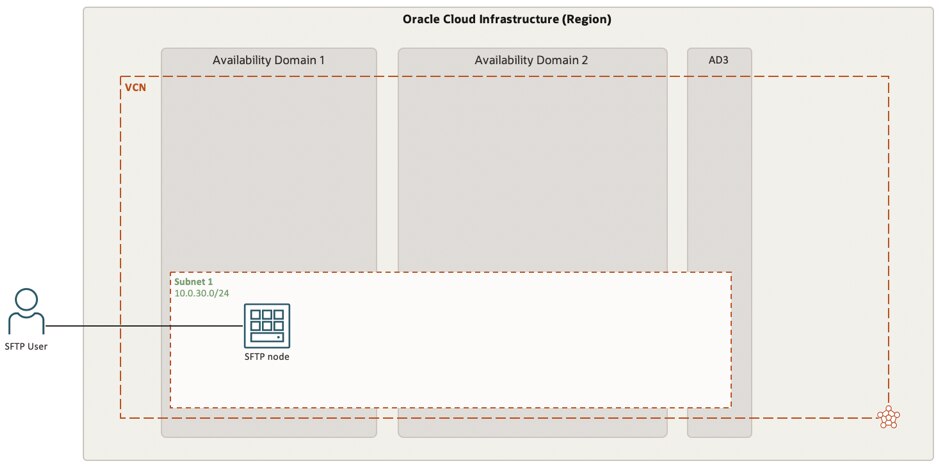

Basic SFTP

Basic SFTP services can be deployed on a standard virtual machine running as a compute instance on Oracle Cloud. In general, SFTP services are not compute-intensive, and a small x86-based or Arm-based compute shape will most likely suffice.

As outlined in the diagram above, a simple, basic deployment of an SFTP service will satisfy the functional need. However, this solution is not highly available: there are only limited guarantees for the availability of both the service and the data stored on the local file system. For that reason, a highly available deployment is strongly advised.

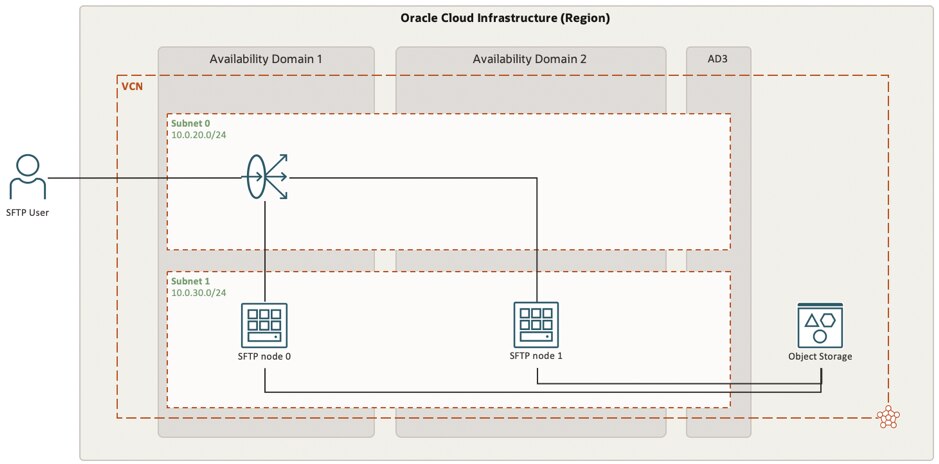

Basic SFTP made highly available

As stated above, a single compute node running an SFTP service for end users might satisfy the functional need, but it is unlikely to meet availability standards. The prime areas that need improvement are the availability of the service and the availability of the persistent storage. The accompanying diagram shows an SFTP service deployed on two compute nodes, with object storage providing shared persistent storage for the uploaded files and a load balancer distributing user sessions over the two compute nodes.
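Shared persistent storage in this design means that each node mounts the same object storage bucket, and the configuration points that follow suggest s3fs-fuse for that mount. As a minimal sketch, the hypothetical helper below renders an /etc/fstab entry that mounts a bucket through OCI's Amazon S3 Compatibility API endpoint. The option set (_netdev, allow_other, use_path_request_style, passwd_file, url) is an assumption based on common s3fs-fuse usage; the bucket, namespace, region, and mount point are placeholders, and the Oracle blog post mentioned in the configuration points should be treated as authoritative.

```python
"""Hypothetical helper that renders an /etc/fstab entry for mounting an OCI
Object Storage bucket with s3fs-fuse on each SFTP node (illustrative only)."""


def oci_s3_compat_url(namespace: str, region: str) -> str:
    # OCI exposes an Amazon S3 compatible endpoint per tenancy namespace and region.
    return f"https://{namespace}.compat.objectstorage.{region}.oraclecloud.com"


def s3fs_fstab_entry(bucket: str, mount_point: str, namespace: str, region: str,
                     passwd_file: str = "/etc/passwd-s3fs") -> str:
    options = [
        "_netdev",                # mark as a network file system so boot waits for networking
        "allow_other",            # let non-root users (the SFTP users) access the mount
        "use_path_request_style", # path-style requests: https://host/bucket/key
        # File holding ACCESS_KEY:SECRET_KEY (an OCI Customer Secret Key), mode 600.
        f"passwd_file={passwd_file}",
        f"url={oci_s3_compat_url(namespace, region)}",
    ]
    # fstab fields: device, mount point, fs type, options, dump, fsck pass.
    return f"{bucket} {mount_point} fuse.s3fs {','.join(options)} 0 0"


if __name__ == "__main__":
    # Placeholder values; the same line would go into /etc/fstab on both nodes.
    print(s3fs_fstab_entry("sftp-uploads", "/sftp/data", "mynamespace", "eu-frankfurt-1"))
```

Rendering the identical entry on both nodes is what keeps the SFTP root consistent regardless of which node the load balancer selects.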

This deployment requires a number of specific configurations. As guidance, take the following points into account:

- Both SFTP nodes require the same local user and public key for authentication. Because the load balancer can route a user session to either of the two nodes, authentication must behave identically on both.

- Both SFTP nodes require the same object storage bucket to be mounted as part of the SFTP root, so that files are available regardless of which node is used. The bucket can be mounted with s3fs-fuse, as outlined in this Oracle blog post.

- Both SFTP nodes must be registered as backends in the load balancer backend set using their local (private) IP addresses, so that they can be exposed to the outside world behind a single IP address.

- The load balancer needs to be configured as a “network load balancer” (do not select the type “load balancer”).

- The load balancer needs to be configured with a 5-Tuple Hash policy. This policy distributes incoming traffic based on a hash of the 5-tuple (source IP and port, destination IP and port, protocol), so all packets of one SFTP session reach the same node.

- The load balancer needs to be configured with “Preserve IP Source” enabled, so that the SFTP nodes see the original client IP address.
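To illustrate why the 5-Tuple Hash policy matters, here is a rough sketch of hash-based backend selection. Every packet of one SFTP (TCP) session carries the same 5-tuple, so a deterministic hash keeps the session on one node, while a new session from the same client (new source port) may land on the other node. That second case is exactly why both nodes need the same users, keys, and mounted bucket. The SHA-256-plus-modulo mapping and the backend IP addresses are assumptions for illustration only; this is not the hash OCI's network load balancer actually uses.

```python
"""Illustrative 5-tuple hash load distribution (not OCI's actual algorithm)."""
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # e.g. "TCP"


def pick_backend(flow: FiveTuple, backends: Sequence[str]) -> str:
    # Serialize the 5-tuple deterministically and hash it.
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    # Map the hash onto one backend with a plain modulo (no consistent hashing).
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    backends = ["10.0.1.10", "10.0.1.11"]  # hypothetical private IPs of the two SFTP nodes
    flow = FiveTuple("203.0.113.7", 51022, "198.51.100.20", 22, "TCP")
    # The same session (same 5-tuple) is always routed to the same node.
    assert pick_backend(flow, backends) == pick_backend(flow, backends)
    # A new session uses a new source port and may be routed to the other node.
    second = flow._replace(src_port=51023)
    print(pick_backend(flow, backends), pick_backend(second, backends))
```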

In addition to the points above, there is a possible annoyance: unless both nodes present the same ECDSA host key (and therefore the same fingerprint), a client may see a different host key depending on which node the load balancer selects, and SFTP clients must then be prevented from enforcing strict host key checking. On Linux clients, you can force this with -o StrictHostKeyChecking=no as an option to the sftp command. To avoid this, ensure the ECDSA host keys are identical on both nodes. You can configure this on the SFTP node side as part of the local sshd configuration, for example by installing the same host key files on both nodes and referencing them with the HostKey directive in sshd_config. In general, disabling strict host key checking when initiating a session to a remote host should not be encouraged from a security point of view.
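A quick way to confirm that both nodes present the same host key is to compare fingerprints. The sketch below computes an OpenSSH-style SHA256 fingerprint (unpadded base64 of the SHA-256 digest of the decoded key blob, prefixed with "SHA256:") from the contents of a public host key file such as /etc/ssh/ssh_host_ecdsa_key.pub. It is a minimal sketch assuming the standard "<key-type> <base64-blob> [comment]" line format; ssh-keygen -lf on the same file is the authoritative check.

```python
"""Compare OpenSSH-style SHA256 host key fingerprints from two SFTP nodes."""
import base64
import hashlib


def fingerprint(pub_key_line: str) -> str:
    # A .pub line looks like: "<key-type> <base64-blob> [comment]".
    blob = base64.b64decode(pub_key_line.split()[1])
    digest = hashlib.sha256(blob).digest()
    # OpenSSH shows the digest as base64 without "=" padding, prefixed with "SHA256:".
    return "SHA256:" + base64.b64encode(digest).decode().rstrip("=")


def same_host_key(node_a_pub: str, node_b_pub: str) -> bool:
    # Only the key material matters; differing comments (e.g. root@node1) are ignored.
    return fingerprint(node_a_pub) == fingerprint(node_b_pub)
```

For example, read the ECDSA public host key file from each node and pass both contents to same_host_key; a False result means clients will see a changed host key whenever they are routed to the other node.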

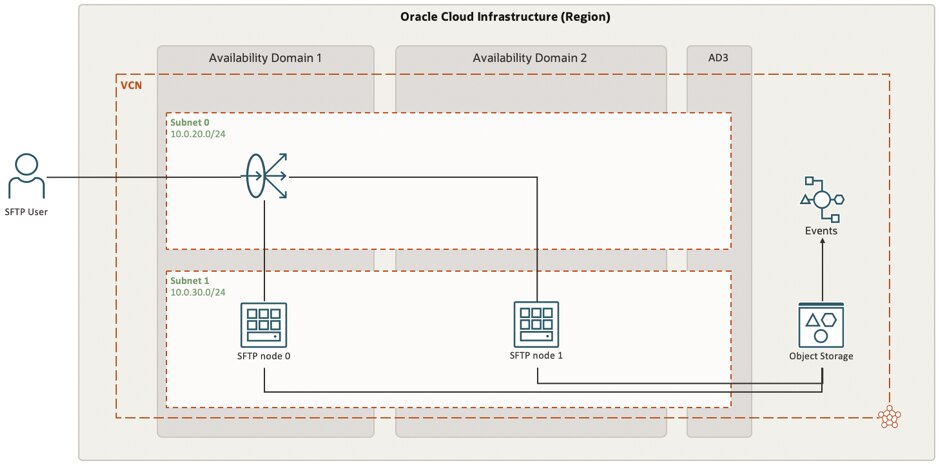

Basic SFTP and event-driven architectures

As part of a growing number of cloud-native architectures, a drive toward event-driven architecture can be observed. The SFTP setup described above fits this model without requiring SFTP users to change how they work: users upload files to the SFTP service, the files are stored in an object storage bucket, and, when the bucket is configured to emit object events, each upload triggers a cloud-native event.

The event raised when a file is stored in an object storage bucket can be used to inform application logic that a new file is available. The application logic can then take the needed action on the newly uploaded file. This makes the solution more direct and more event-driven than a model in which application logic polls the storage bucket to check whether a new file has been uploaded.
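To make the contrast with polling concrete, the sketch below shows what a polling consumer has to do: list the whole bucket on every cycle, remember what it has already seen, and diff the two. The list_objects callable is a hypothetical stand-in for a real bucket listing (for example through an OCI SDK); in the event-driven model, the event itself names the new object, so neither the repeated listing nor the "seen" state is needed.

```python
"""Polling model sketch: repeatedly list a bucket and diff against known objects."""
from typing import Callable, Iterable, List, Set


def poll_once(list_objects: Callable[[], Iterable[str]], seen: Set[str]) -> List[str]:
    # Every poll lists the full bucket, even when nothing has changed.
    current = set(list_objects())
    new_files = sorted(current - seen)
    # The poller must keep this state between runs to detect new uploads.
    seen.update(current)
    return new_files


if __name__ == "__main__":
    bucket = ["a.csv"]  # in-memory stand-in for the bucket contents
    seen: Set[str] = set()
    print(poll_once(lambda: bucket, seen))  # ['a.csv']
    bucket.append("b.csv")
    print(poll_once(lambda: bucket, seen))  # ['b.csv']
    print(poll_once(lambda: bucket, seen))  # [] (nothing new, yet the bucket was listed again)
```

New files are also only noticed on the next poll cycle, whereas an event can trigger the application logic as soon as the upload is stored.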

The events emitted when a new file is uploaded to object storage are CloudEvents, as defined by the CNCF CloudEvents open specification (cloudevents.io). When a new object is created in a storage bucket, OCI Object Storage emits a “create object” CloudEvent, which is part of the “Object Event Type” set. A full and detailed overview of the events and event types can be found in the Oracle documentation.
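To make the “create object” event more concrete, the sketch below extracts the namespace, bucket, and object name from an Object Storage event. It assumes the payload shape used in Oracle's documented examples (CloudEvents 0.1 attribute names such as eventType, with object details under data and data.additionalDetails) and the event type string com.oraclecloud.objectstorage.createobject; treat these field names and the sample values as assumptions to verify against the Oracle documentation. As a hedge, the parser also accepts the CloudEvents 1.0 attribute name type.

```python
"""Extract the uploaded object from an OCI Object Storage "create object" event."""
import json
from typing import Optional, Tuple

# Assumed event type string for Object Storage "create object" events.
CREATE_OBJECT = "com.oraclecloud.objectstorage.createobject"

ObjectRef = Tuple[Optional[str], Optional[str], Optional[str]]


def uploaded_object(event_json: str) -> Optional[ObjectRef]:
    """Return (namespace, bucket, object name) for a create-object event, else None."""
    event = json.loads(event_json)
    # CloudEvents 0.1 uses "eventType"; CloudEvents 1.0 renamed it to "type".
    event_type = event.get("eventType") or event.get("type")
    if event_type != CREATE_OBJECT:
        return None
    data = event.get("data", {})
    details = data.get("additionalDetails", {})
    return details.get("namespace"), details.get("bucketName"), data.get("resourceName")


if __name__ == "__main__":
    # Abbreviated, made-up sample payload (not captured from a real tenancy).
    sample = json.dumps({
        "eventType": CREATE_OBJECT,
        "cloudEventsVersion": "0.1",
        "source": "ObjectStorage",
        "data": {
            "resourceName": "invoices/2023-01.csv",
            "additionalDetails": {"namespace": "mynamespace", "bucketName": "sftp-uploads"},
        },
    })
    print(uploaded_object(sample))
```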

Following the concept of event-driven architecture as part of cloud-native design, the event related to the upload of a new file via SFTP can be forwarded to a downstream component where you define the logic that acts on the file. One potential target for the event is OCI Cloud Functions (also known as Oracle Functions), which provides a serverless deployment that is only invoked when needed.
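As a minimal sketch of the function side, the entry point below follows the (ctx, data) handler shape used by the Python FDK for Oracle Functions and routes events by type to processing logic. To stay runnable without the fdk package, it returns a JSON string; a deployed function would typically wrap the result in fdk.response.Response. The process_new_file function and the routing table are hypothetical placeholders for your own logic, and the event type string is the same assumption as in the Object Storage event example.

```python
"""Sketch of an Oracle Functions-style entry point routing Object Storage events."""
import io
import json
from typing import Callable, Dict


def process_new_file(data: dict) -> dict:
    # Hypothetical business logic: here it only reports which object arrived.
    return {"action": "processed", "object": data.get("resourceName")}


# Route by event type so that other object events can be handled later.
HANDLERS: Dict[str, Callable[[dict], dict]] = {
    "com.oraclecloud.objectstorage.createobject": process_new_file,
}


def handler(ctx, data: io.BytesIO = None) -> str:
    # Same (ctx, data) shape as a Python FDK handler; ctx is unused in this sketch.
    event = json.loads(data.getvalue()) if data is not None else {}
    event_type = event.get("eventType") or event.get("type")
    route = HANDLERS.get(event_type)
    if route is None:
        return json.dumps({"action": "ignored", "eventType": event_type})
    return json.dumps(route(event.get("data", {})))
```

Keeping the routing table separate from the entry point means the function is only concerned with dispatching; the rule that delivers bucket events to the function is configured in the OCI Events service rather than in code.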

By taking action through a serverless function triggered by a CloudEvent, we have moved relatively traditional SFTP functionality into the cloud-native era, while still honoring the more traditional way of interfacing that some legacy systems in the wider landscape may still require.