Oracle Database 23ai introduces AI Vector Search, a breakthrough that integrates artificial intelligence directly into the database, with developers to perform advanced data processing and create vector embeddings without the need to leave the database environment. This feature enables advanced semantic searches in Oracle APEX applications by transforming data into high-dimensional vectors for context-aware similarity searches. Unlike keyword searches, AI vector search understands the meaning behind the data to fetch relevant results based on semantics. Oracle Database 23ai, with vector support, enhances Oracle’s converged database technology, providing native support for modern data types and transactions, including JSON, spatial, graph, and now vectors.

With Oracle Database 23ai, there is no need for external LLMs or any additional resources outside the database. Everything from vector embeddings to storing and calculating vector distances is handled natively within the database. This makes it easy to integrate advanced semantic search features into your APEX applications.

Oracle APEX and Oracle Database 23ai – The Next-Gen Data Platform

Oracle APEX, the world’s most popular low-code enterprise development platform, and Oracle Database 23ai represent the ultimate platform for the creation of next-generation data-driven applications. The integrated AI capabilities of Oracle 23ai, along with the APEX’s ease of use and rapid development features, enable developers to create sophisticated, next-generation data-driven applications with AI capabilities quickly and efficiently. This synergy allows developers to leverage advanced technologies like semantic search and RAGs using basic SQL and APEX skills.

We’ll demonstrate this powerful combination of Oracle APEX and Oracle Database 23ai by implementing semantic search in a social media app.

Semantic search in a social media app

In this demo, we will be using an APEX application that lets you find images based on their descriptions. For example, if you search for “Mumbai,” you’ll see all the images with “Mumbai” in their descriptions right away. This is just a normal keyword search. However, if you search for Bombay, even though the word “Bombay” isn’t explicitly mentioned in any of the descriptions, this APEX app powered by AI Vector Search will still display images from Mumbai. This is because it understands that “Mumbai” and “Bombay” refer to the same place and hence are semantically similar. AI Vector Search goes beyond just matching the keywords; it grasps the context and meaning behind the words.

In this blog, we’ll show you how you can build this semantic search with Oracle APEX.

The image descriptions mentioned in this demo are generated using “GPT-4o” vision models. The process for creating these descriptions is outside the scope of this blog post.

NOTE: You can download the export of the social media demo app here.

Background

Before we look into the building blocks of this demo app, let’s take a step back and understand the basics of Vectors and Vector Search.

What are Vectors?

In the fields of data science and AI, vectors are used to represent data points in a high-dimensional space. Every data point, such as a word, image or user profile, can be considered as a vector in that space. Vectors also permit comparison among these data points by using measures of similarity such as cosine function and Euclidean distance. This is useful for building recommendation systems, clustering, search, etc.

In AI Vector Search, data is transformed into high-dimensional vectors, allowing advanced semantic search capabilities. This implies that the search engine comprehends the context and meaning of the query and locates semantically similar outcomes.

What is ONNX?

ONNX (Open Neural Network Exchange) is an open-source format for machine learning model representation. It enables interoperability between various machine learning frameworks by allowing you to train models in one framework and then deploy or perform further processing with another framework. It is a standard way to represent deep learning and machine learning models that are both framework-agnostic and highly interoperable. Basically, ONNX’s main aim is to allow developers to switch among different machine learning tools without going through model re-implementation.

Oracle Database 23ai ships with an in-house ONNX runtime engine. You can import externally trained ONNX files into the Oracle Database and perform in-database vector embedding, where the database applies deep learning embedding models to convert data into vectors. This allows for native integration and utilization within the database, simplifying the deployment process and enhancing AI capabilities directly within the database environment.

Implementation

Now, let’s look at the building blocks of this demo app and learn how to implement semantic search in your APEX apps.

Prerequisites

To take advantage of AI Vector Search in Oracle Database 23ai and build Semantic Search in your APEX apps, you need:

- Oracle Database 23ai – You can provision an always free ADB instance with Database 23ai. Learn more about this availability here.

- Oracle APEX 24.1 – This latest Oracle APEX version 24.1, is generally available for download and is being rolled out to OCI APEX Application Development and Autonomous Database Cloud Service regions across the globe. Learn more here.

Load ONNX Model into the Database

To load the ONNX model into the database, we first upload the file to object storage and create the necessary credentials to access this file from the Autonomous Database. Let’s get to it:

- Upload the ONNX file to object storage. You can convert pre-trained models (like the Hugging Face transformers model) to ONNX format. Read more about this here and here.

You can download the pre-built ONNX model using this link. Read this blog post for more details.

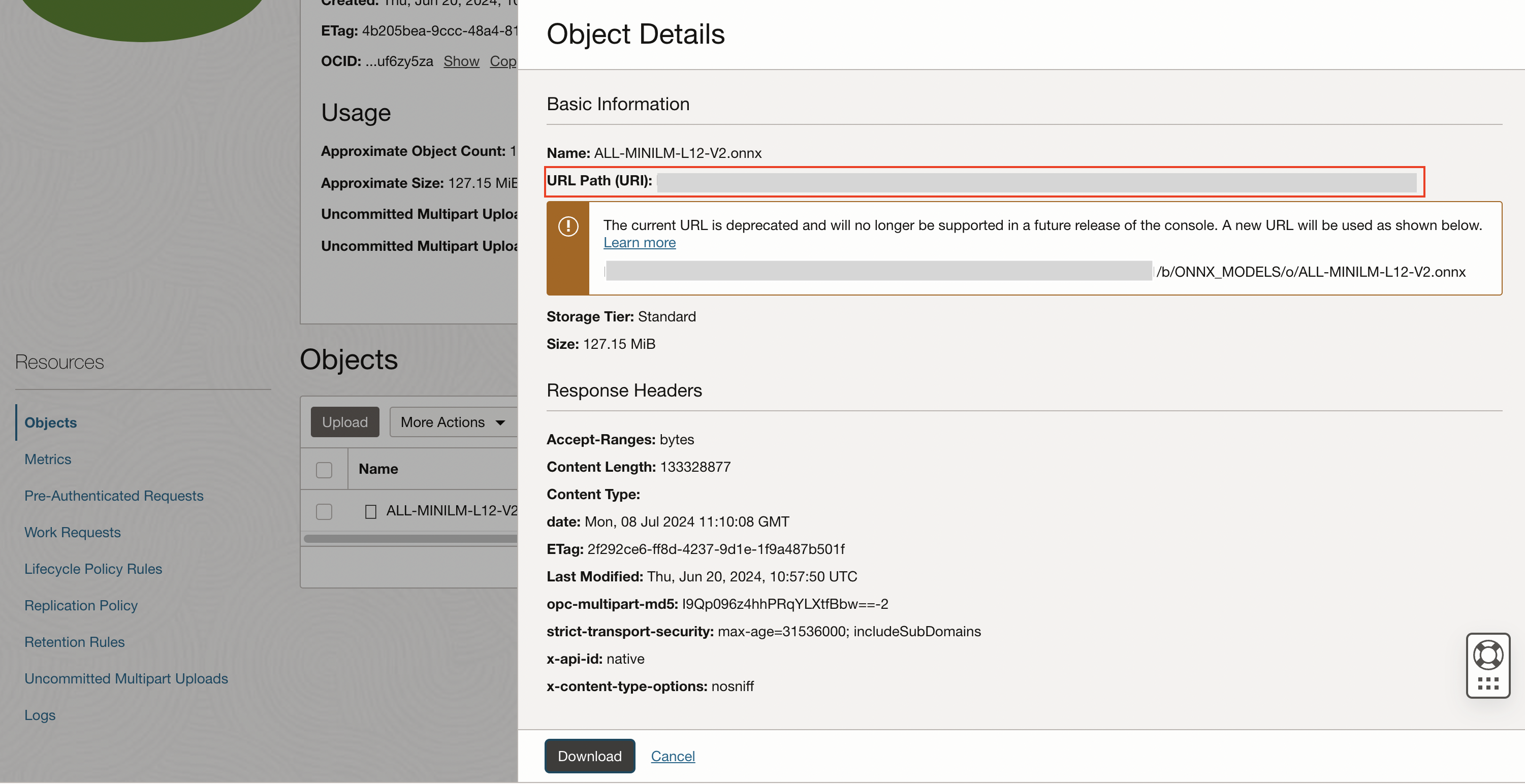

Note down the Object URI of the file uploaded to object storage.

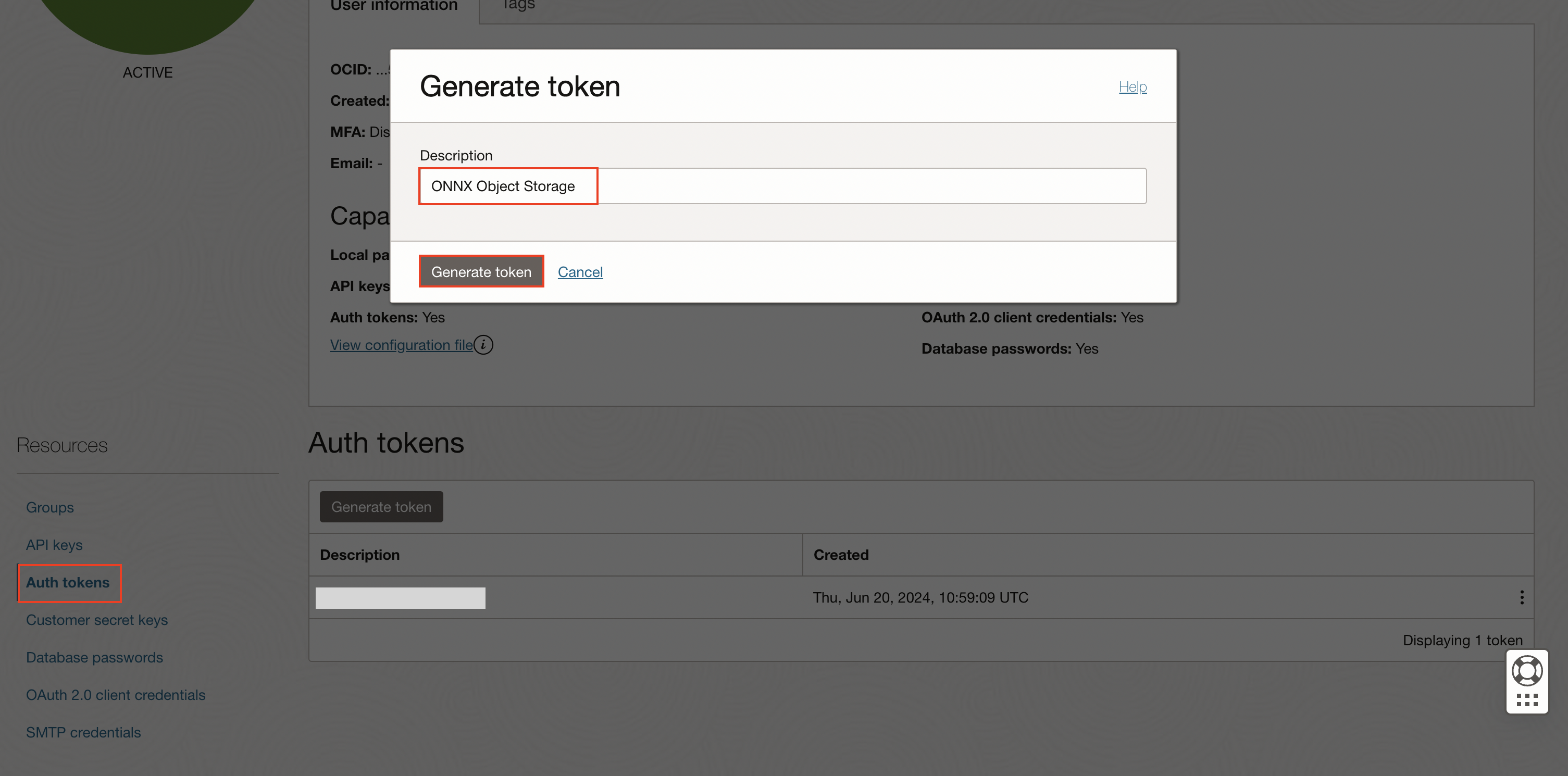

Fig 2. Copy the URL Path(URI) of the file uploaded - After uploading the ONNX file to object storage, we create an Auth Token to access the file uploaded to object storage above.

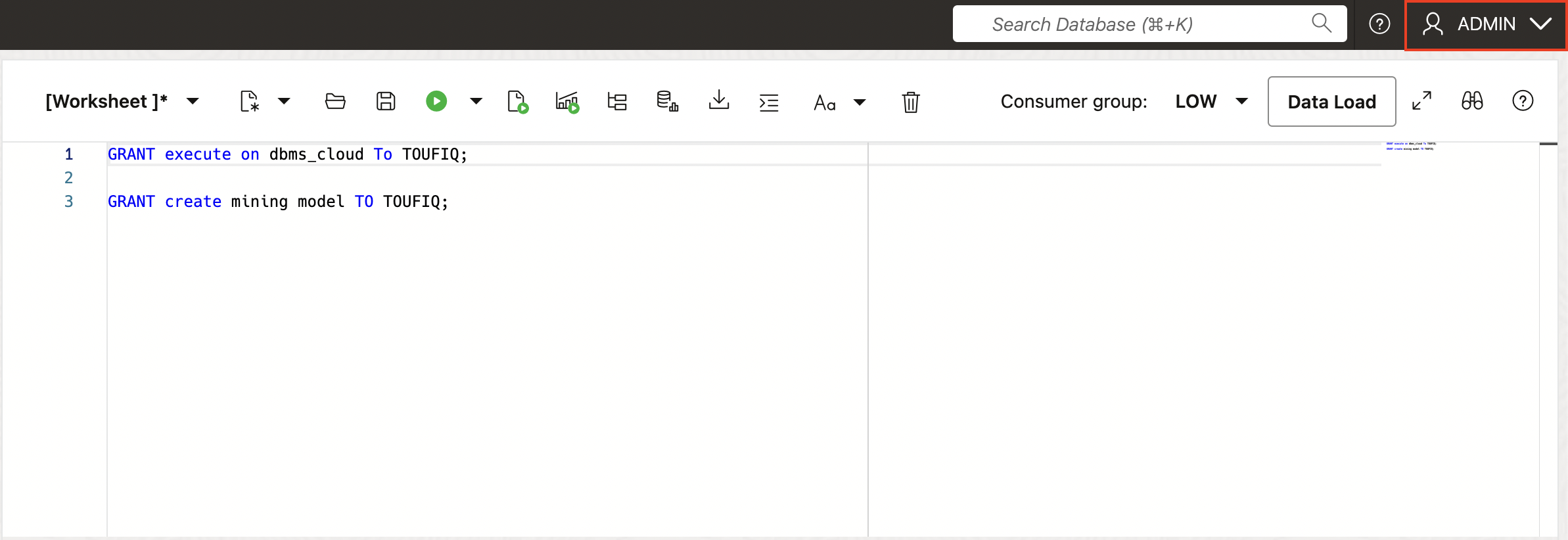

Fig 3. Creating Auth Token to access the file on object storage - Next, we grant necessary privileges to the parsing schema as user ADMIN. The user needs to connect to the database as ADMIN using Database Actions.

We grant the privilege to execute dbms_cloud to the workspace parsing schema logged in as ADMIN. This allows the parsing schema to create credentials to access the ONNX file stored in the Object Storage while loading it into the database.

GRANT execute on dbms_cloud To <Your Schema Name>;

- And, we grant the privilege to create mining models to the schema logged in as ADMIN.

GRANT create mining model TO <Your Schema Name>;

This grant allows the user to create and manage mining models within the database which is necessary to run deep learning embedding models natively inside the database.

Fig 4. Granting privileges to parsing schema

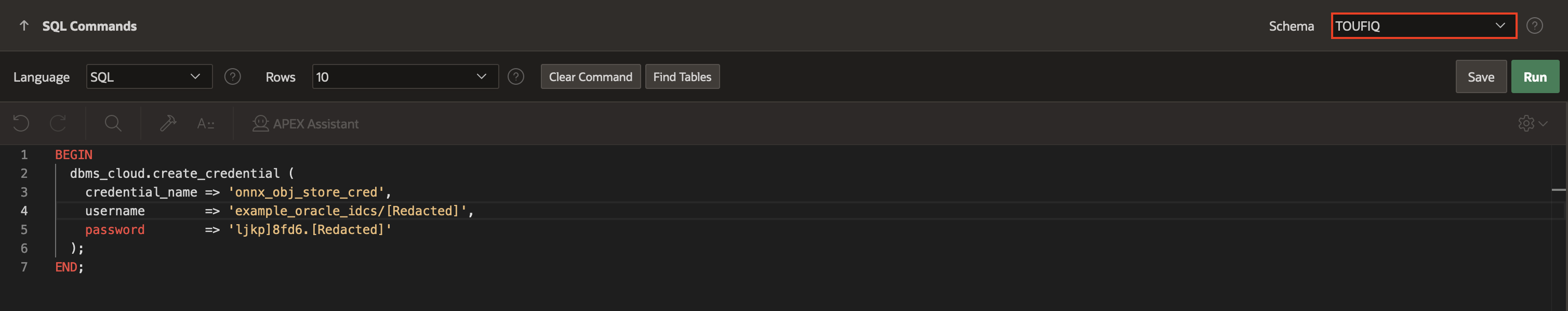

With the necessary privileges granted to the parsing schema, we now move to SQL Commands within SQL Workshop to load the ONNX file into the database as an ONNX model.

We start by creating a credential based on the Auth Token created above to access the file in object storage from the Autonomous Database. This ensures that the Oracle Database can securely access and interact with the file uploaded to object storage.

BEGIN dbms_cloud.create_credential ( credential_name => 'onnx_obj_store_cred', username => '<Your username>', password => '<AUTH Token>' ); END;

This PL/SQL snippet creates a credential named onnx_obj_store_cred using the dbms_cloud.create_credential procedure. The credentials (username and AUTH Token) are used for authentication with OCI Object Storage.

Fig 5. Creating a credential using dbms_cloud.create_credential procedure

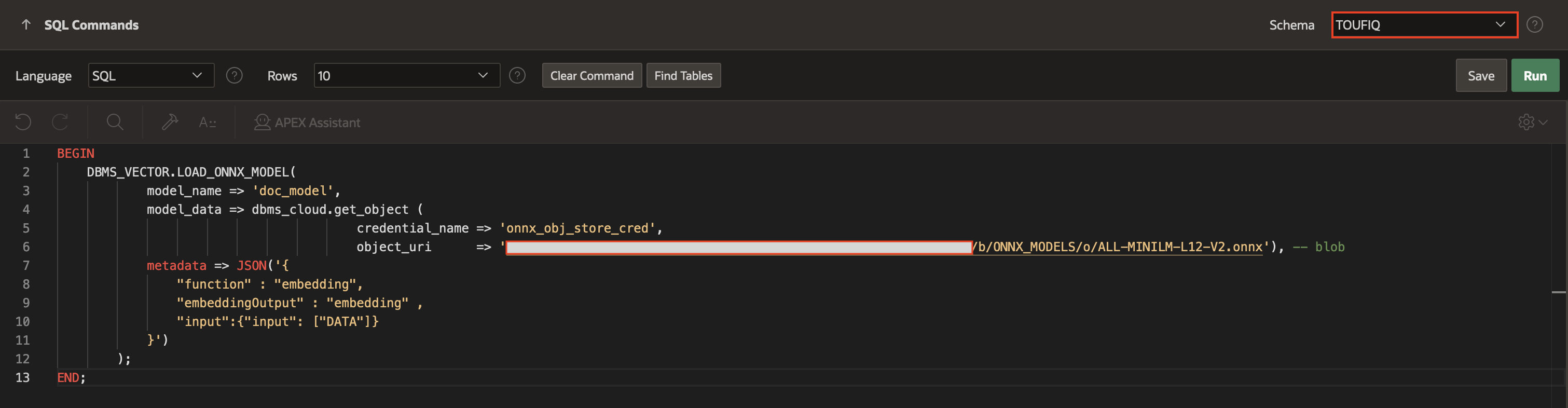

And finally, we load ONNX embedding model into the database using DBMS_VECTOR.LOAD_ONNX_MODEL:

BEGIN DBMS_VECTOR.LOAD_ONNX_MODEL( model_name => 'doc_model', model_data => dbms_cloud.get_object ( credential_name => 'obj_store_cred', object_uri => '<Enter Your Object Storage URI>'), -- blob metadata => JSON('{ "function" : "embedding", "embeddingOutput" : "embedding" , "input":{"input": ["DATA"]} }') ); END;

Fig 6. Loading the ONNX Embedding model into the database

Here, we assign the name “doc_model” to the ONNX model being imported. We fetch the ONNX file as a blob from the object storage using dbms_cloud.get_object by passing the object URI of the ONNX file and passing it on to the model_data parameter.

There are also other ways to load/import these ONNX models into the database. For more details, refer to the documentation.

With this, we now have an ONNX model loaded into the database ready to convert text to vectors.

Convert Image Descriptions to Vectors Using the ONNX Model

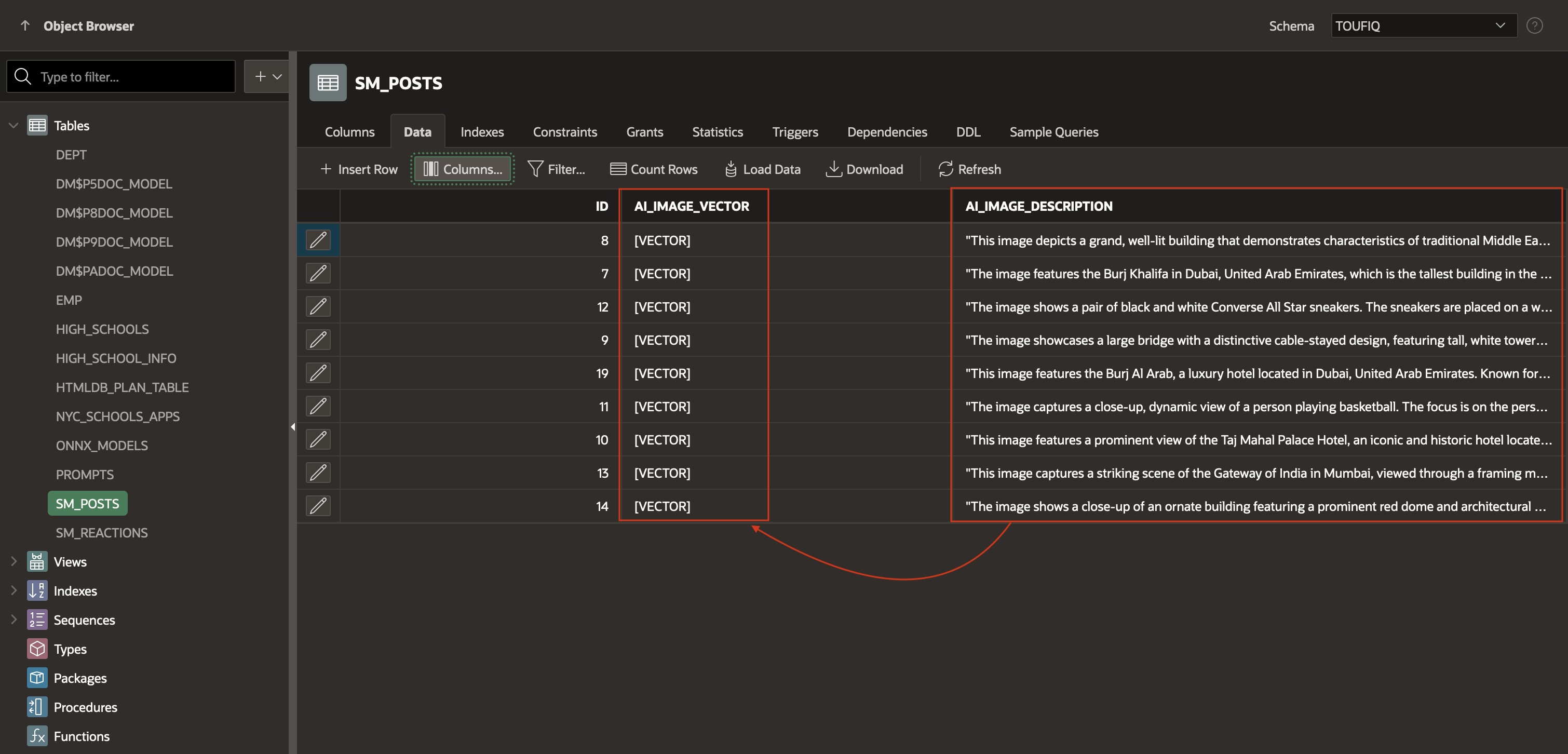

- We can now use the “doc_model” ONNX model to convert image descriptions into vectors and store them in the database. We use the native SQL function VECTOR_EMBEDDING to convert the values in AI_IMAGE_DESCRIPTION into Vectors using the doc_model ONNX model.

UPDATE SM_POSTS SET AI_IMAGE_VECTOR = TO_VECTOR(VECTOR_EMBEDDING ( DOC_MODEL USING AI_IMAGE_DESCRIPTION AS DATA ));

Fig 7. SM_POSTS table showing AI Image Description and corresponding vectors - Now that we have vectors, we can use them to perform semantic searches. In this demo, we can do that in the source query of the Cards Region :

SELECT A.*, TO_CHAR(ROUND(VECTOR_DISTANCE,2), '0.99')AS VECTOR_DISTANCE_DISPLAY FROM (SELECT p.id, p.user_name, p.comment_text, p.file_blob, p.file_mime, p.post_date, p.REACTIONS, p.USER_REACTION_CSS, p.CREATED, ( CASE WHEN :P1_SEARCH IS NOT NULL AND :P1_VECTOR_SEARCH = 'Y' THEN VECTOR_DISTANCE ( TO_VECTOR(VECTOR_EMBEDDING (doc_model USING :P1_SEARCH AS data)), ai_image_vector ) ELSE NULL END ) AS vector_distance, ai_image_description FROM mv_SM_POSTS p WHERE (:P1_VECTOR_SEARCH <> 'Y' AND :P1_SEARCH IS NOT NULL AND UPPER(ai_image_description) LIKE UPPER('%'||:P1_SEARCH||'%')) OR :P1_VECTOR_SEARCH = 'Y' OR :P1_SEARCH IS NULL ORDER BY vector_distance ASC, p.CREATED asc) A

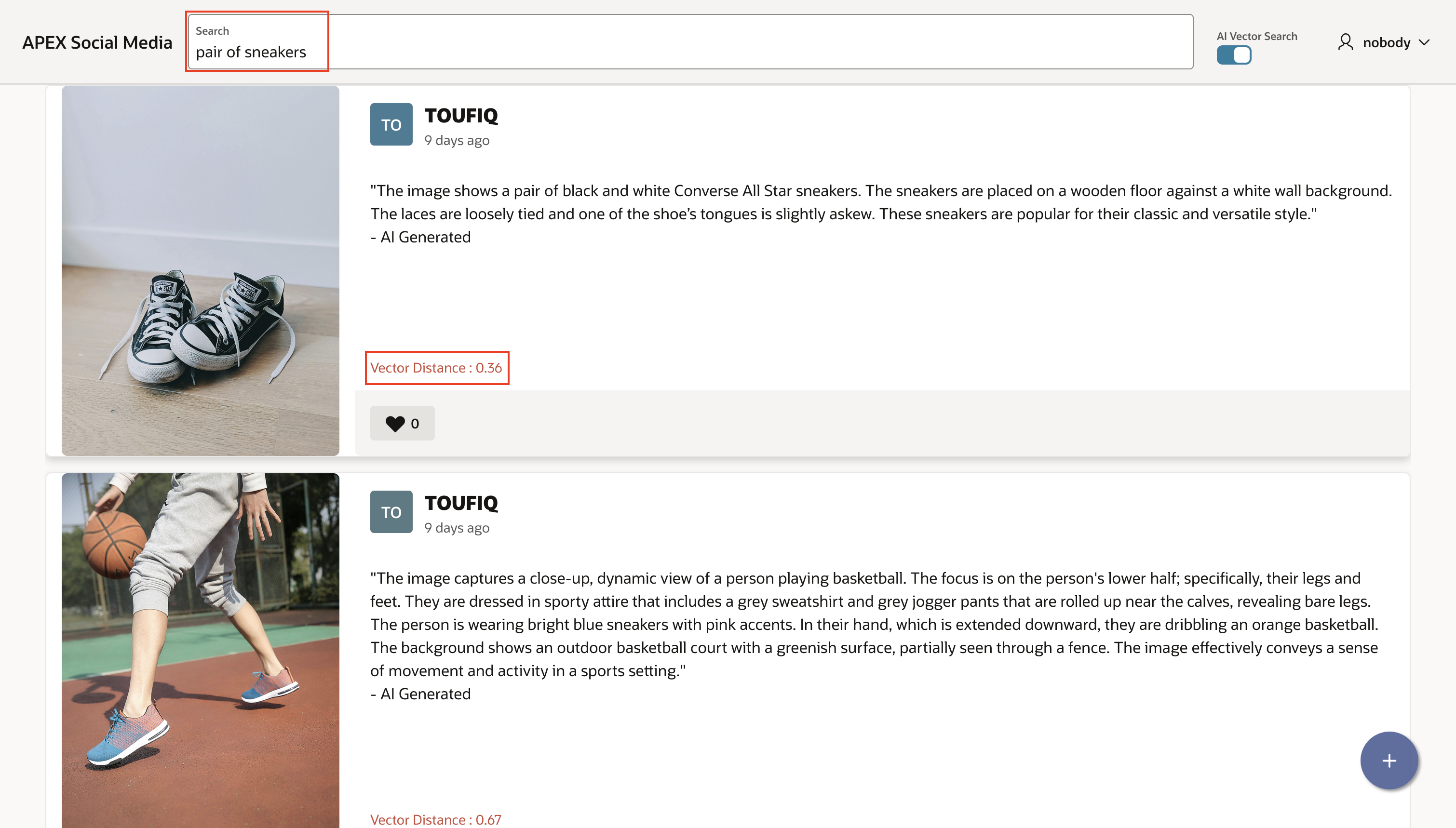

When the user types in a search term into the search field (P1_SEARCH), we first convert the user’s search term into a vector, also known as a query vector, and then calculate the vector distance to compare it with the stored vectors. Based on this vector distance, we sort the results to ensure the most relevant images appear at the top.

When users type keywords into the search bar, they see pictures and descriptions that are semantically linked to the keywords appearing on top. For instance, if they are searching for a “pair of sneakers”, even if this exact phrase isn’t in the description, the semantic search will return relevant results.

Summary

AI Vector Search Integration with Oracle APEX and Oracle Database 23ai enables developers to build smart, context-aware apps with improved search capabilities. This seamless combination simplifies the development and delivers highly relevant results, which makes it an invaluable tool for modern data-driven applications. Thank you for reading and happy coding!