Oracle Database 23ai, version 23.7, introduces built-in support for ONNX pipeline models, including CLIP (Contrastive Language-Image Pre-training) multi-modal embedding models, enabling seamless processing of both image and text inputs within a unified vector space. This advancement moves beyond traditional text-based semantic search, allowing developers to create systems that search through uploaded images using either text descriptions or image queries.

In this blog post, we explore how to use the CLIP multi-modal embedding model, deployed as ONNX models in Oracle Database 23ai, to build a hybrid search application with Oracle APEX. This application enables users to search uploaded images using either text or image inputs. By embedding images and text into a shared vector space, the system efficiently retrieves the most relevant results using vector similarity search, delivering a seamless and intuitive visual search experience.

Hybrid Search with ONNX Multi-Modal Embedding Models

To implement a hybrid search system, CLIP multi-modal embedding model is leveraged within Oracle Database 23ai and Oracle APEX. The CLIP model accepts text or image inputs and generates embeddings. This approach enables us to search from the uploaded images effectively, offering the following key capabilities:

- Text Search: When a user enters a textual query, the CLIP text model converts the text into an embedding and compares this text embedding with the embeddings of stored images to find the most semantically relevant content.

- Image Search: When a user uploads an image, the CLIP image model is used to generate an embedding for the image. This embedding is compared to the stored image embeddings in the database to retrieve visually similar images.

- Unified Search: Whether a user enters text or uploads an image, the system intelligently compares the corresponding embeddings to the stored content, providing relevant results based on the similarity between the input and the data stored.

Use Case: Hybrid Semantic Search in an Automotive Parts Application

We’ll demonstrate this with an example — APEX Social Media Search app.

Users can post images to the app and later search through these uploaded images using either:

-

Text Queries (e.g., “tire”).

-

Image Queries (e.g., A picture of a wheel).

[Download the Image Semantic Search App here] – Note: This application requires the CLIP ONNX model to be loaded and functional in Oracle Database 23ai.

Implementation:

Prerequisites

To implement AI Vector Search in Oracle Database 23ai and build Semantic Search capabilities in your Oracle APEX applications, you need to ensure the following prerequisites are met:

- Oracle Database 23ai (version 23.7 and above)

AI Vector Search is a feature introduced in Oracle Database 23ai, with initial support starting in version 23.4. However, multi-modal support, essential for this semantic search implementation, was introduced in version 23.7. You must use version 23.7 or higher to enable the vector search capabilities required.- Option: You can provision an Always Free Autonomous Database (ADB) instance running Oracle Database 23ai to meet this requirement at no additional cost. Learn more about availability here.

- Oracle APEX 24.1 and above

Oracle APEX version 24.1 or later is required to integrate seamlessly with the AI Vector Search features of Oracle Database 23ai.

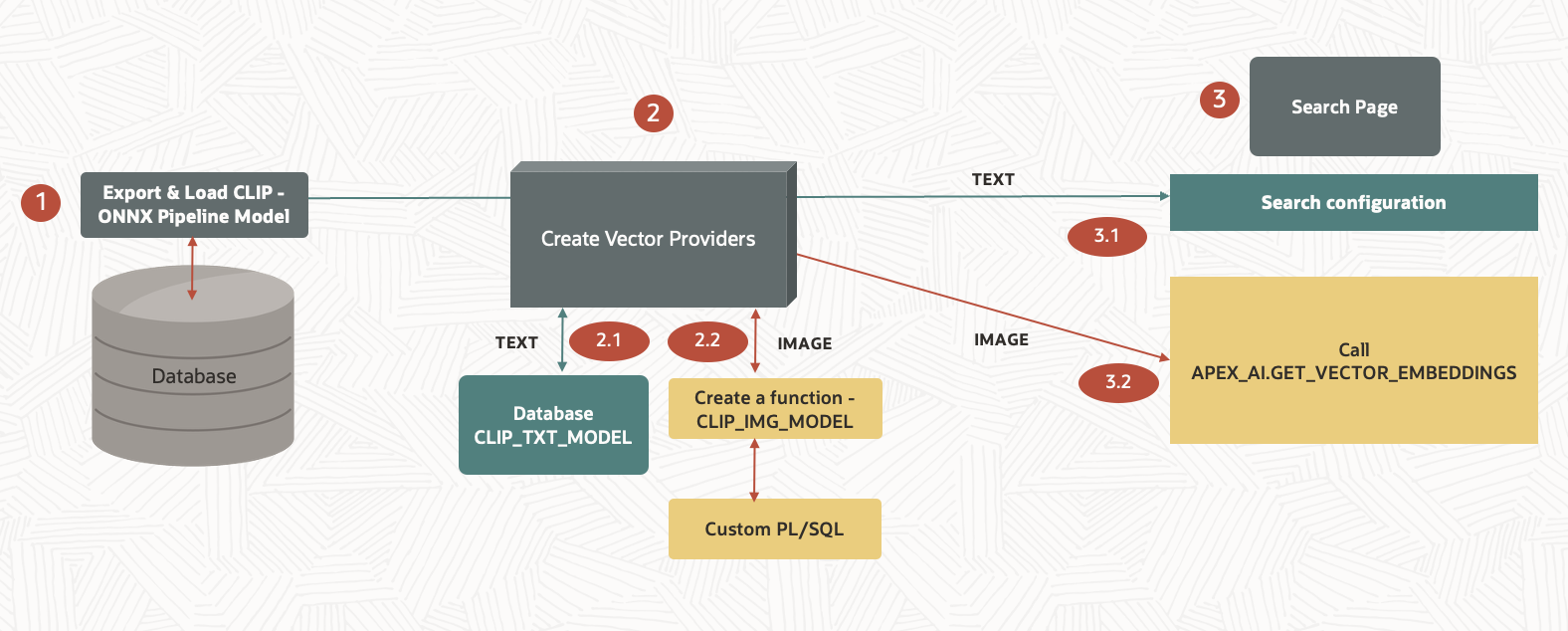

Order of Operations

Step 1: Load CLIP Models

Let’s begin by loading the CLIP ONNX models onto the database:

-

clip_txt_model: Processes text inputs to generate embeddings. -

clip_img_model: Processes image inputs to generate embeddings

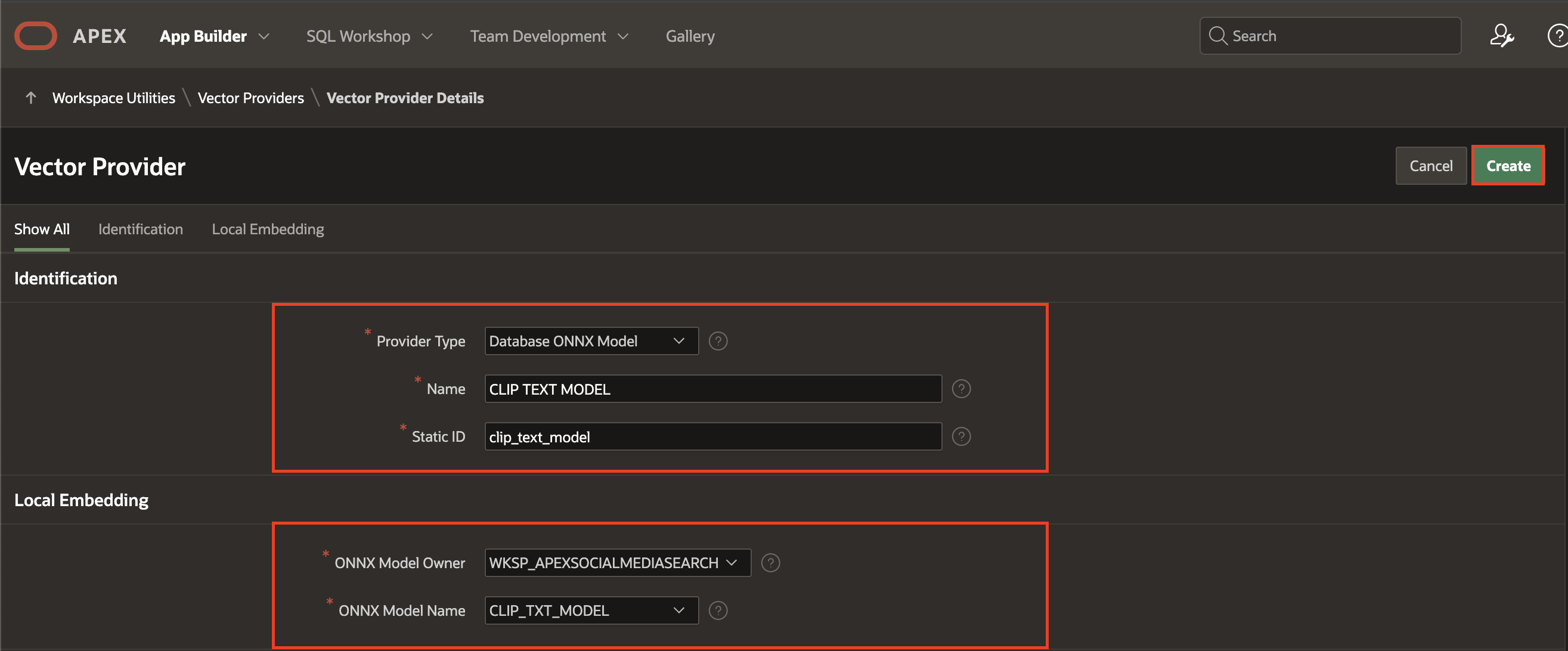

Step 2: Create Vector Providers

-

Text Vector Provider:

Let’s create a vector provider in APEX with the following configurations:-

Type: Database

-

Model:

clip_txt_model

-

This provider handles text inputs for semantic search.

-

Image Vector Provider:

SinceAPEX_AI.GET_VECTOR_EMBEDDINGSaccepts only CLOB inputs, a workaround is required to process image (BLOB) data.-

Convert BLOB to CLOB: Use

apex_web_service.blob2clobbase64()to convert the image BLOB to a Base64-encoded CLOB. - Custom PL/SQL Function: Create a PL/SQL function that:

- Accepts the Base64-encoded CLOB.

- Decodes it back to BLOB using

apex_web_service.clobbase642blob()(since clip_img_model expects a blob input) - Processes the BLOB with

clip_img_modelto generate the embedding.

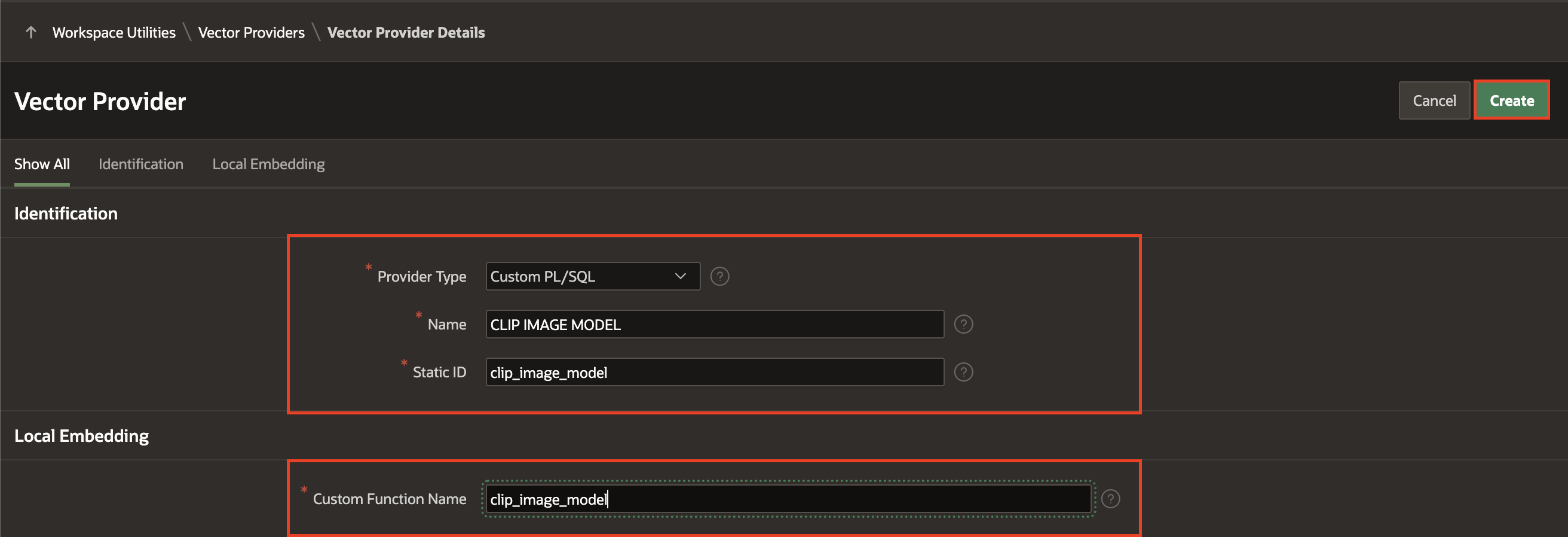

- Vector Provider: Create a vector provider in APEX with:

- Type: Custom PL/SQL

- Function: The custom function created above.

-

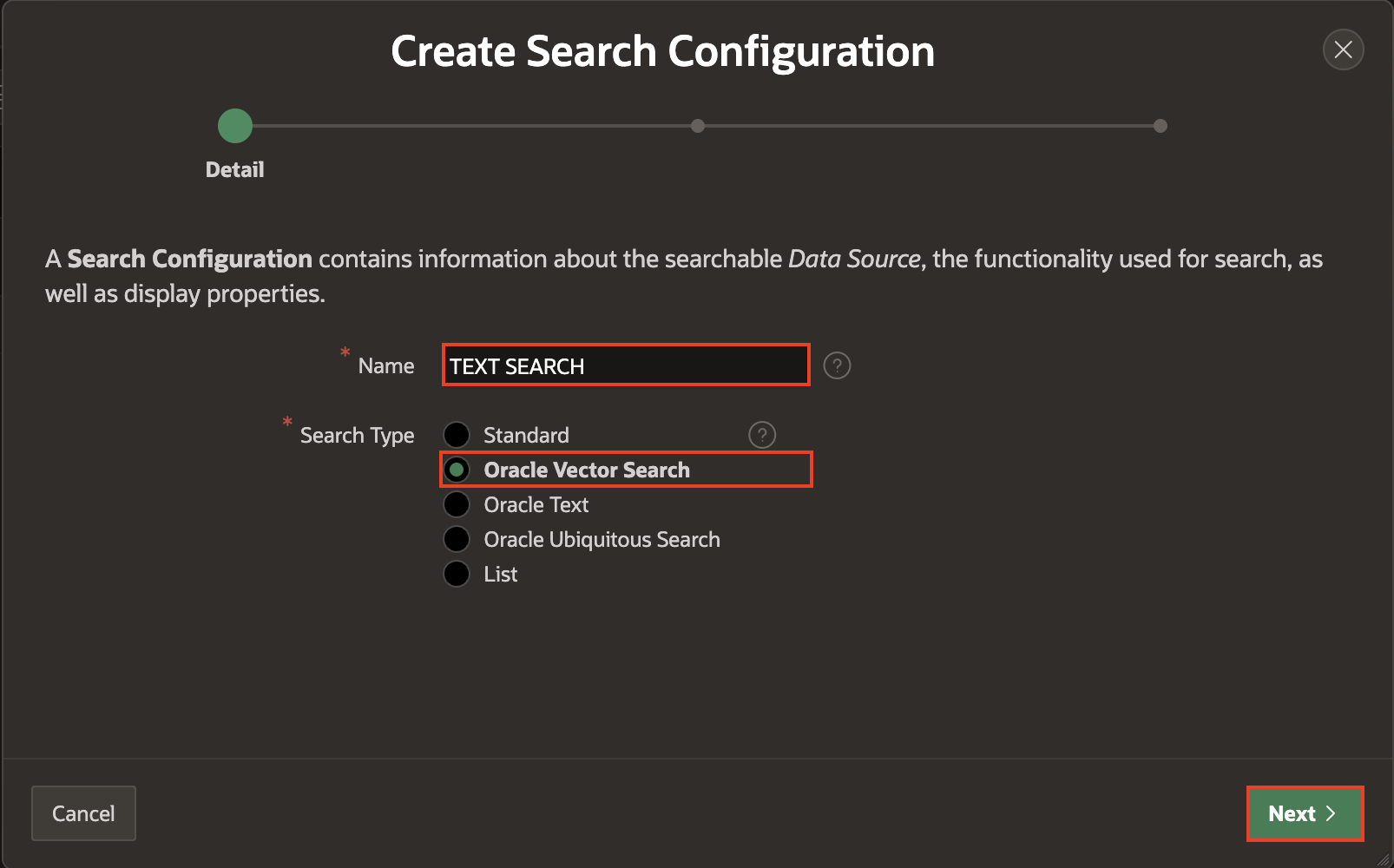

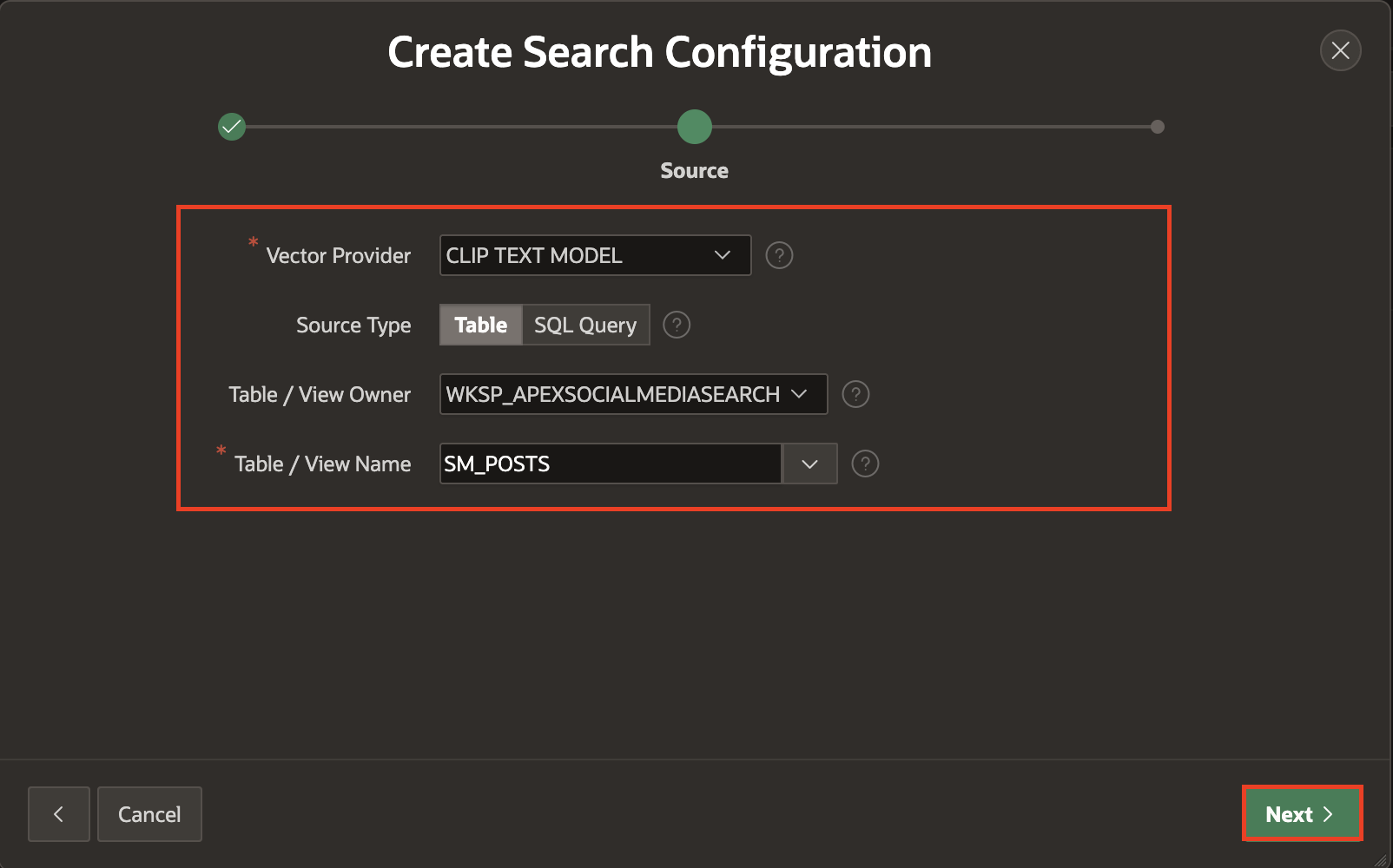

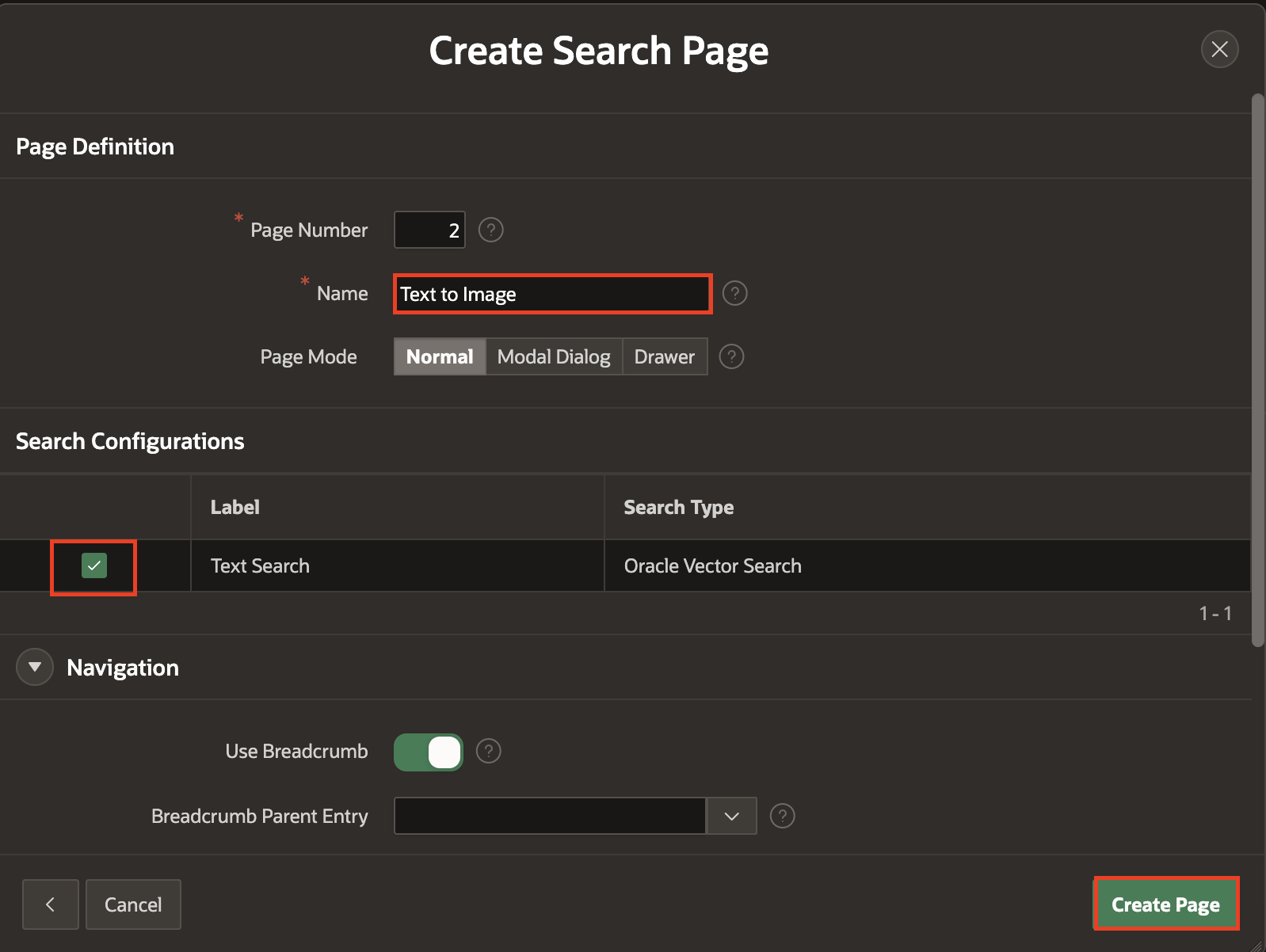

Step 3: Build the APEX Search Page

Design a search page in APEX that allows users to input either text or upload an image. The page should:

-

For Text Input:

-

Use the text vector provider to generate the query embedding. Create a search Configuration referring the text model and create a Search Page.

-

-

For Image Input:

-

Convert the uploaded image BLOB to a Base64-encoded CLOB.

-

Use

APEX_AI.GET_VECTOR_EMBEDDINGSwith the image vector provider to generate the query embedding.

-

The application then compares the query embedding with the precomputed embeddings in sm_posts.ai_image_vector using vector distance metrics (e.g., cosine similarity) to retrieve and display the most relevant results.

Step 4: Precompute and Store Image Embeddings

For each image uploaded (e.g., in the sm_posts table), you compute its vector embedding using clip_img_model. And then, store the resulting embedding in a vector column, such as sm_posts.ai_image_vector. This pre-computation facilitates efficient similarity searches during query time.

Implementation Steps:

1. Grant Privileges in Database

The database schema needs specific privileges to work with ONNX models:

-

EXECUTE ON DBMS_CLOUD: Allows access to ONNX files in Object Storage.

-

CREATE MINING MODEL: Permits loading and using ONNX models.

Log in as ADMIN using Database Actions and run:

GRANT execute on dbms_cloud To <Your Schema Name>; GRANT create mining model TO <Your Schema Name>;

2. Load CLIP ONNX Pipeline Models on Database

First, Extract the preconfigured image model zip files. The zip files will contain two pipelines called clip_vit_base_patch32_txt.onnx and clip_vit_base_patch32_img.onnx, which can be used for image and text embeddings respectively.

Upload the two CLIP ONNX files to Object Storage. Generate Pre-Authenticated Requests (PARs) for both files, then load them into the database:

To do so, first create a credential to access the files in Object Storage:

BEGIN dbms_cloud.create_credential (

credential_name => 'onnx_obj_store_cred',

username => '<Your username>',

password => '<AUTH Token>' );

END;

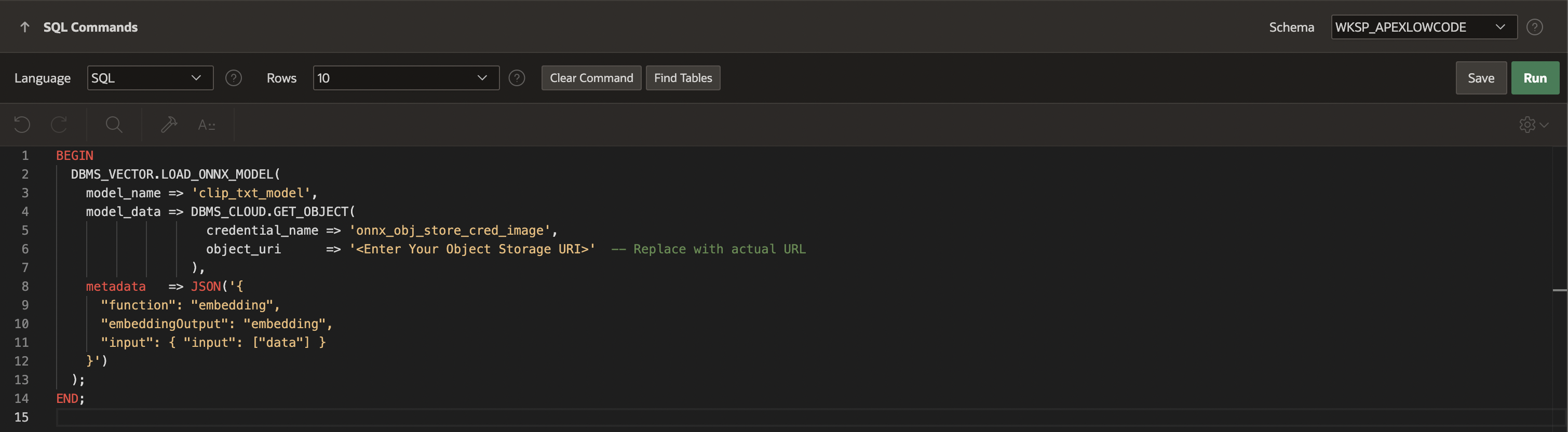

Load the ONNX models from SQL Commands in Oracle APEX:

For the Text model :

BEGIN DBMS_VECTOR.LOAD_ONNX_MODEL( model_name => 'clip_txt_model', model_data => DBMS_CLOUD.GET_OBJECT( credential_name => 'onnx_obj_store_cred', object_uri => '<Enter Your Object Storage URI>' ), metadata => JSON('{ "function": "embedding", "embeddingOutput": "embedding", "input": { "input": ["data"] } }') ); END;

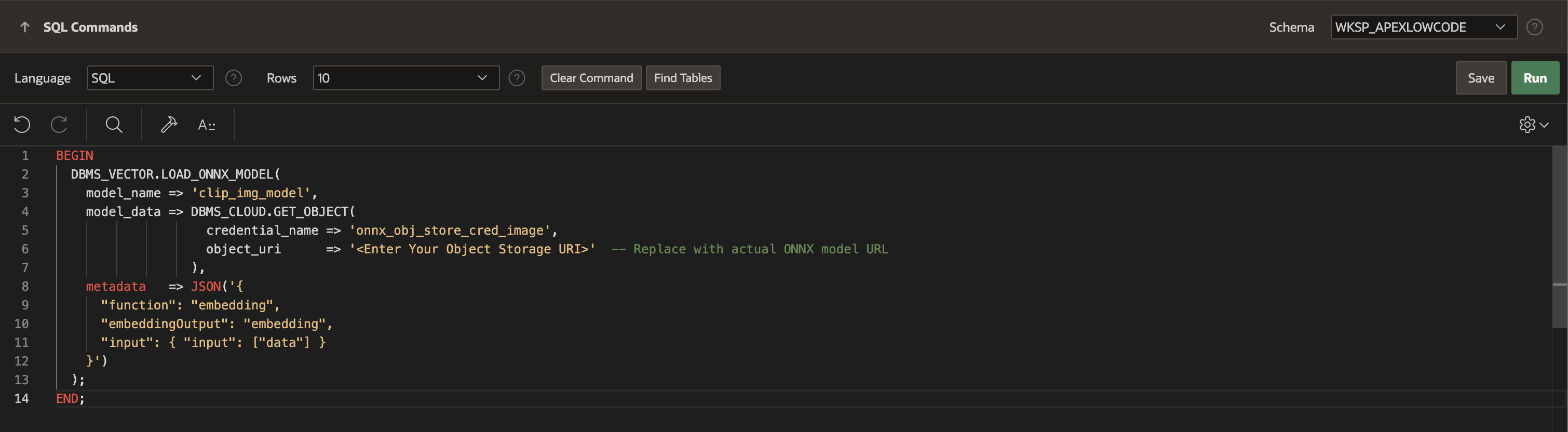

For the Image model :

BEGIN DBMS_VECTOR.LOAD_ONNX_MODEL( model_name => 'clip_img_model', model_data => DBMS_CLOUD.GET_OBJECT( credential_name => 'onnx_obj_store_cred', object_uri => '<Enter Your Object Storage URI>' ), metadata => JSON('{ "function": "embedding", "embeddingOutput": "embedding", "input": { "input": ["data"] } }') ); END;

3. To search Images using Text query

- Create the Vector Provider for Text Model

- Create a Search Configurations – Oracle Vector Search

- Create a Text Search Page in the application referring the above created Text – Search Configuration.

4. For Image to Image query:

-

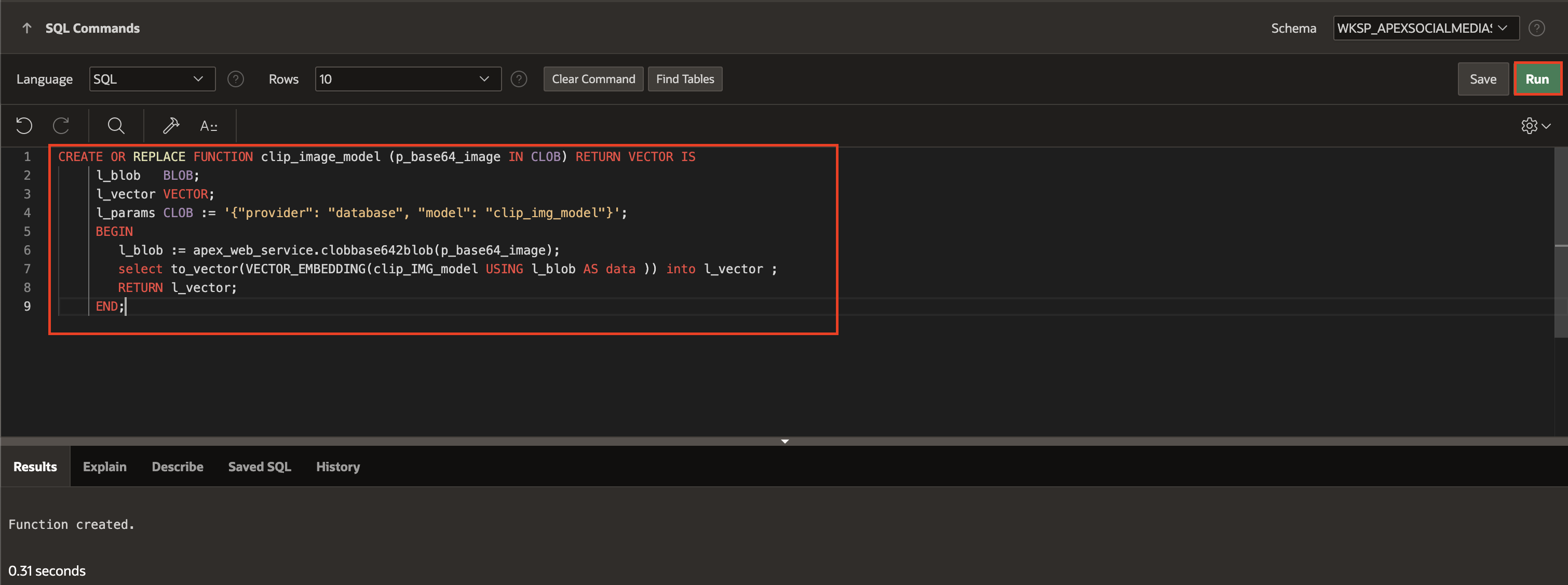

Create a PL/SQL Function for a custom vector provider:

|

-

Create a Vector Provider for Image Model:

-

Convert Uploaded Image to Vectors Using the ONNX Model

Generate and store embeddings for existing post images so that search queries run efficiently against precomputed vectors. You can now use the “clip_image_model” Vector provider to convert existing/uploaded images into vectors and store them in the database and use the native function apex_ai.get_vector_embeddings to convert the values in FILE_BLOB into Vectors.

Add a new column AI_IMAGE_VECTOR to your SM_POSTS table to store the computed vector embeddings.

ALTER TABLE SM_POSTS ADD AI_IMAGE_VECTOR VECTOR;

Run the following PL/SQL block to convert the image to a vector and update the AI_IMAGE_VECTOR column:

BEGIN

UPDATE sm_posts

SET ai_image_vector = apex_ai.get_vector_embeddings (

p_value => apex_web_service.blob2clobbase64(file_blob),

p_service_static_id => 'clip_image_model');

END;-

Hybrid Query:

Implement a single SQL query that detects a image input, call the Vector Provider, retrieves the vector embeddings and filters result based on the query.

Source query of the Cards Region :

WITH vector_image AS (

SELECT apex_ai.get_vector_embeddings (

p_value => apex_web_service.blob2clobbase64(blob_content),

p_service_static_id => 'clip_image_model') as img_vector

FROM apex_application_temp_files

WHERE filename = :P3_FILENAME

ORDER BY created_on DESC

FETCH FIRST 1 ROWS ONLY

)

SELECT A.*,

TO_CHAR(ROUND(vector_distance, 3), '0.999') AS vector_distance_display

FROM (

SELECT

p.id,

p.post_comment,

p.file_blob,

p.file_mime,

p.file_name,

p.created,

p.created_by,

p.updated,

p.updated_by,

CASE

WHEN EXISTS (SELECT 1 FROM vector_image) THEN

COSINE_DISTANCE(

(SELECT img_vector FROM vector_image),

p.ai_image_vector

)

ELSE null

END AS vector_distance

FROM sm_posts p

) A

ORDER BY A.vector_distance, A.created DESC;

Summary: A Seamless Hybrid Search Experience

Oracle Database 23ai (version 23.7) introduces built-in support for ONNX pipeline models, including CLIP multi-modal embedding models, enabling hybrid search systems that process both text and image inputs in a unified vector space. By generating embeddings with CLIP, we achieve accurate image-text similarity comparisons, improving search precision and user experience. Integrating these models with Oracle APEX simplifies content discovery, allowing users to search seamlessly using embeddings generated by CLIP multi-modal embedding models.

For more details on generating embeddings from CLIP multi-modal embedding models in Oracle Database, refer to the official documentation: ONNX Pipeline Models for CLIP Multi-Modal Embedding.