Vertical Pod Autoscaler (VPA) automates the adjustment of resource requests and limits for containers running in pods on a cluster you’ve created using Container Engine for Kubernetes (OKE).

VPA improves cluster resource utilization:

- Sets requests automatically based on usage to ensure appropriate resources are available for each pod.

- Maintains ratios between limits and requests specified in the initial configuration of containers.

- Scales down pods that are over-requesting resources, based on their usage over time.

- Scales up pods that are under-requesting resources, based on their usage over time.
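
To make the ratio-preserving behavior concrete, here is a small sketch of the arithmetic. The function name `scaled_limit` and the numbers are assumptions chosen for illustration (a 100m CPU request with a 500m limit, and a hypothetical recommendation of 200m); they are not output from a real cluster.

```shell
# Illustrative arithmetic only: when VPA changes a request, the limit is
# scaled so that the original limit-to-request ratio is kept.
# All values are plain integers (for example millicores or Mi).
scaled_limit() {
  local old_request=$1 old_limit=$2 new_request=$3
  # new_limit = new_request * (old_limit / old_request), in integer math
  echo $(( new_request * old_limit / old_request ))
}

new_limit=$(scaled_limit 100 500 200)
echo "new CPU limit: ${new_limit}m"   # prints "new CPU limit: 1000m"
```

With a 1:5 ratio between request and limit, doubling the request from 100m to 200m doubles the limit from 500m to 1000m.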

VPA Components

When you deploy VPA in your Kubernetes cluster, three main components are installed:

- Recommender: Monitors the resource usage of pods and generates recommendations for CPU and memory requests based on historical and current data.

- Updater: Periodically checks whether the resource requests of running pods match the recommendations, and evicts pods that need updating so that they are recreated with the recommended values.

- Admission Controller: Sets the resource requests of new pods at creation time by applying the VPA recommendations.

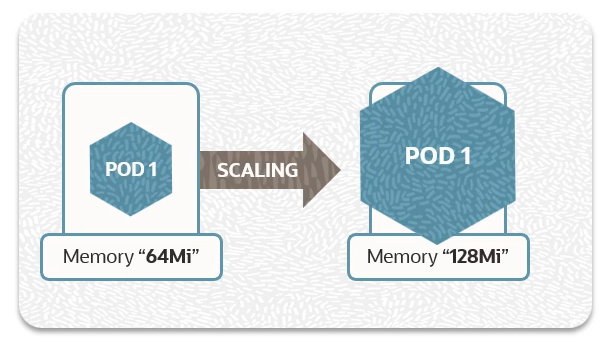

The diagram illustrates the scaling process in OCI using VPA.

Prerequisites

- Oracle Cloud Account: Ensure you have an active Oracle Cloud account.

- OCI CLI: Install and configure the OCI Command Line Interface (CLI).

- Kubernetes Cluster: Configure a Kubernetes cluster on OCI with at least 2 nodes to provide redundancy and handle resource adjustments efficiently.

Overview of Steps

- Verify the Kubernetes Metrics Server installation.

- Download and deploy VPA.

- Deploy a sample application.

- View the scaling operation.

- View the recommendation.

- Clean up.

Step 1: Verify the Kubernetes Metrics Server Installation

Ensure the Kubernetes Metrics Server is installed and running on your cluster:
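
A minimal sketch of this check, assuming the Metrics Server runs as a deployment named `metrics-server` in the `kube-system` namespace (your cluster may use a different name or namespace). The function name `check_metrics_server` is hypothetical.

```shell
# Assumption: the Metrics Server is a deployment called "metrics-server"
# in the kube-system namespace.
check_metrics_server() {
  kubectl get deployment metrics-server -n kube-system
}
```

Run `check_metrics_server` from a shell where `kubectl` is configured for your cluster. A "NotFound" error suggests the Metrics Server is not installed.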

If the Metrics Server is not found, deploy it before proceeding.

Step 2: Download and Deploy the Vertical Pod Autoscaler

Download the VPA source code from GitHub:
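
One way to do this is to clone the upstream Kubernetes autoscaler repository, which contains VPA in its `vertical-pod-autoscaler` directory. The function name `download_vpa` is hypothetical.

```shell
# Clone the Kubernetes autoscaler repository into ./autoscaler.
# VPA lives in the vertical-pod-autoscaler subdirectory of that repository.
download_vpa() {
  git clone https://github.com/kubernetes/autoscaler.git
}
```

After calling `download_vpa`, the VPA scripts should be available under `autoscaler/vertical-pod-autoscaler`.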

If previously deployed, delete the existing VPA:
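
A possible sketch, assuming the repository was cloned into `./autoscaler` and that the presence of the VPA custom resource definition is a good enough signal of an earlier installation. The function name `remove_existing_vpa` is hypothetical; `hack/vpa-down.sh` is the teardown script shipped in the autoscaler repository.

```shell
# Tear down an earlier VPA installation, if one appears to exist.
# Optional argument: path to the vertical-pod-autoscaler directory.
remove_existing_vpa() {
  local vpa_dir=${1:-autoscaler/vertical-pod-autoscaler}
  # Assumption: the VPA CRD is only present if VPA was installed before.
  if kubectl get crd verticalpodautoscalers.autoscaling.k8s.io >/dev/null 2>&1; then
    (cd "$vpa_dir" && ./hack/vpa-down.sh)
  else
    echo "No existing VPA installation found; nothing to delete."
  fi
}
```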

Deploy the VPA:
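
A minimal sketch, assuming the repository was cloned into `./autoscaler` and `kubectl` points at the target cluster. The function name `deploy_vpa` is hypothetical; `hack/vpa-up.sh` is the install script shipped in the autoscaler repository.

```shell
# Install the VPA components by running the upstream install script.
# Optional argument: path to the vertical-pod-autoscaler directory.
deploy_vpa() {
  local vpa_dir=${1:-autoscaler/vertical-pod-autoscaler}
  (cd "$vpa_dir" && ./hack/vpa-up.sh)
}
```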

Verify the VPA pods are running:
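
A sketch of this check, assuming the install script placed the components in the `kube-system` namespace with pod names containing `vpa` (typically `vpa-recommender`, `vpa-updater`, and `vpa-admission-controller`). The function name `list_vpa_pods` is hypothetical.

```shell
# List only the VPA pods from the kube-system namespace.
# Assumption: the VPA pod names contain the string "vpa".
list_vpa_pods() {
  kubectl get pods -n kube-system | grep vpa
}
```

You would expect to see one pod per component, each with a status of `Running`.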

Step 3: Deploy the Sample Application

Create a deployment for Nginx with initial resource requests and limits:

Create a file named nginx-deployment.yaml with the following content:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "500m"
                memory: "128Mi"

Apply the deployment:
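
A minimal sketch, assuming nginx-deployment.yaml is in the current directory. The function name `apply_nginx_deployment` is hypothetical.

```shell
# Create (or update) the sample Nginx deployment from the manifest file.
apply_nginx_deployment() {
  kubectl apply -f nginx-deployment.yaml
}
```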

Create a VPA configuration for the Nginx deployment:

Create a file named nginx-vpa.yaml with the following content:

    apiVersion: autoscaling.k8s.io/v1
    kind: VerticalPodAutoscaler
    metadata:
      name: nginx-vpa
    spec:
      targetRef:
        apiVersion: "apps/v1"
        kind: Deployment
        name: nginx-deployment
      updatePolicy:
        updateMode: "Auto"

Apply the VPA configuration:
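
A minimal sketch, assuming nginx-vpa.yaml is in the current directory. The function name `apply_nginx_vpa` is hypothetical; the second command simply confirms that the VPA object now exists.

```shell
# Create the VPA object, then list it to confirm it was accepted.
apply_nginx_vpa() {
  kubectl apply -f nginx-vpa.yaml
  kubectl get vpa nginx-vpa
}
```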

Step 4: View the Scaling Operation

VPA analyzes the original pods and, if the CPU and memory reservations are inadequate, relaunches the pods with updated values. VPA doesn’t modify the deployment template but updates the actual requests of the pods.

Monitor the pods and wait for VPA to start a new pod with a new name:
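
One way to watch for the replacement pod, assuming the pods carry the `app=nginx` label from nginx-deployment.yaml. The function name `watch_nginx_pods` is hypothetical.

```shell
# Stream pod changes for the sample application until interrupted (Ctrl+C).
# The label selector app=nginx matches the labels in the deployment manifest.
watch_nginx_pods() {
  kubectl get pods -l app=nginx --watch
}
```

It can take a few minutes before VPA has gathered enough usage data to replace the pod.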

When a new pod starts, view its CPU and memory reservations:
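
A minimal sketch. The function name `show_pod_details` is hypothetical, and the pod name is passed as an argument because the replacement pod gets a new, generated name.

```shell
# Print the full description of a pod, including the requests and limits
# of each container. Usage: show_pod_details <pod-name>
show_pod_details() {
  local pod_name=$1
  kubectl describe pod "$pod_name"
}
```

Copy the pod name from the output of `kubectl get pods` and pass it to `show_pod_details`.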

In the requests section, you can see the new CPU and memory reservations. For example:

    requests:
      cpu: "200m"
      memory: "100Mi"

This indicates that the original pod was under-resourced, and VPA has corrected the reservations with more appropriate values.

Step 5: View the Recommendation

Check the VPA recommendations:
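
A minimal sketch, assuming the VPA object is named `nginx-vpa` as in nginx-vpa.yaml and lives in the current namespace. The function name `show_vpa_recommendation` is hypothetical.

```shell
# Describe the VPA object; the recommendation appears in its status section.
show_vpa_recommendation() {
  kubectl describe vpa nginx-vpa
}
```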

The output shows the recommended resource requests for each container, based on observed usage: a lower bound, a target, an uncapped target, and an upper bound. For example:

    Container Recommendations:
      Container Name: nginx
      Lower Bound:
        cpu: "100m"
        memory: "50Mi"
      Target:
        cpu: "200m"
        memory: "100Mi"
      Uncapped Target:
        cpu: "200m"
        memory: "100Mi"
      Upper Bound:
        cpu: "500m"
        memory: "128Mi"

Step 6: Clean Up

Remove the sample application:
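
A minimal sketch, assuming both manifest files from Step 3 are still in the current directory. The function name `remove_sample_app` is hypothetical.

```shell
# Delete the VPA object first, then the deployment it targets.
remove_sample_app() {
  kubectl delete -f nginx-vpa.yaml
  kubectl delete -f nginx-deployment.yaml
}
```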

Delete the VPA deployment:
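
A minimal sketch, assuming the autoscaler repository from Step 2 is still present in `./autoscaler`. The function name `uninstall_vpa` is hypothetical; `hack/vpa-down.sh` is the teardown script shipped in that repository.

```shell
# Remove the VPA components from the cluster.
# Optional argument: path to the vertical-pod-autoscaler directory.
uninstall_vpa() {
  local vpa_dir=${1:-autoscaler/vertical-pod-autoscaler}
  (cd "$vpa_dir" && ./hack/vpa-down.sh)
}
```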

Conclusion

By following these steps, you can set up and monitor automatic vertical scaling of pods in OCI, ensuring your applications efficiently use CPU and memory resources. Vertical Pod Autoscaler helps optimize resource usage, maintain application performance, and reduce operational overhead.

Call to Action

Once you’ve tried this yourself, share your results in the Oracle Analytics Community.

To find out more, read Kubernetes Clusters and Node Pools in the Kubernetes documentation.