Data Privacy & Protection for AI: A fundamental barrier to deploying GenAI

Enterprises seek to use foundation models for a range of high-impact applications. Time to value and speed of deployment are critical and can make all the difference in creating or maintaining a business’s competitive advantage. To build highly impactful AI use cases, organizations must be able to safely use their proprietary, confidential data with these models. However, in an era when data is the most important strategic asset an enterprise owns, genuine concerns about data exposure and ownership can limit enterprises’ use of large language models (LLMs) to public or nonsensitive private data only.

According to a recent Gartner report that surveyed IT executives on the risks of generative AI (GenAI), their top concern was data privacy. Protopia AI and Oracle have partnered to deliver a critical component of data protection and ownership, helping enterprises adopt generative AI services securely and efficiently.

Decoupling data ownership from the machine learning process with Protopia AI

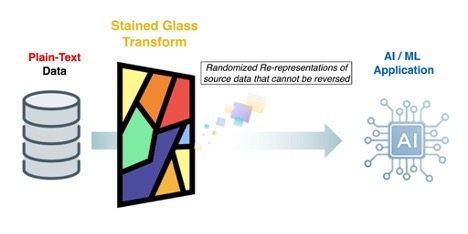

Protopia AI’s Stained Glass Transform (SGT) protects data by decoupling data ownership from the ML process, so enterprises can maintain the privacy of sensitive information when using LLMs. SGT generates randomized representations of the plain-text data within the enterprise’s trust zone, and only the transformed data is sent to the AI application.

These re-representations are randomized embeddings of the original data. They preserve the information that the target LLM needs without exposing unprotected prompts, queries, context, or fine-tuning data, and they aren’t reversible to the original plain text. Confidential information therefore stays private and owned within the enterprise trust zone, while the AI application can still process it efficiently. Teams can pursue high-impact outcomes with their AI while worrying less about data leakage and exposure.
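To make the idea of a “randomized embedding” concrete, the following is a toy sketch, not Protopia’s SGT, whose internals this post doesn’t describe. It assumes a hypothetical embedding table standing in for the target model’s input layer and adds fresh Gaussian noise per dimension on every call. The names `build_embedding_table`, `randomized_representation`, and the fixed noise scales are illustrative assumptions, and the sketch makes no claim about irreversibility.

```python
import random
from typing import Dict, List

EMBED_DIM = 4  # hypothetical embedding size for the toy model


def build_embedding_table(vocab: List[str], dim: int, seed: int = 0) -> Dict[str, List[float]]:
    """Toy stand-in for a target LLM's input-embedding layer (hypothetical)."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tok in vocab}


def randomized_representation(
    tokens: List[str],
    table: Dict[str, List[float]],
    noise_scales: List[float],
    rng: random.Random,
) -> List[List[float]]:
    """Return one noisy embedding vector per token.

    Each dimension gets fresh Gaussian noise with its own standard deviation.
    Here the scales are fixed constants; a real system would presumably tune
    them against the target model (an assumption, not a documented detail).
    """
    return [
        [x + rng.gauss(0.0, s) for x, s in zip(table[tok], noise_scales)]
        for tok in tokens
    ]


# Usage: the same prompt yields a different representation on every call,
# and only lists of floats (no strings) would leave the trust zone.
vocab = ["the", "patient", "has", "type", "2", "diabetes"]
table = build_embedding_table(vocab, EMBED_DIM)
scales = [0.5] * EMBED_DIM
rng = random.Random()  # unseeded, so each call draws new noise
prompt = "the patient has type 2 diabetes".split()
first = randomized_representation(prompt, table, scales, rng)
second = randomized_representation(prompt, table, scales, rng)
```

The point of the sketch is the data flow: plain-text tokens are mapped to vectors locally, and what would be sent onward is a fresh random draw each time rather than the text itself.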

Enterprises can evaluate LLMs for their business using confidential data and decide faster which LLMs work best with their data. They can also shorten approval cycles for new LLM updates and features. These assessments are often time-consuming and can delay time-to-market for product teams building high-impact applications on foundation models.

Data protection for LLMs in the real world

Achieving business outcomes from GenAI requires efficiently mapping the power of advanced language comprehension to vertical-specific use cases. Oracle’s Generative AI services focus on delivering such use cases in a managed, easy-to-deploy manner for a variety of industries, such as healthcare, finance, public sector, and energy, and have helped enterprises quickly grow their GenAI adoption.

In this section, we review examples from various industry use cases and LLM lifecycle phases to show how Protopia AI SGT opens the door to safe and secure adoption of LLMs in the real world.

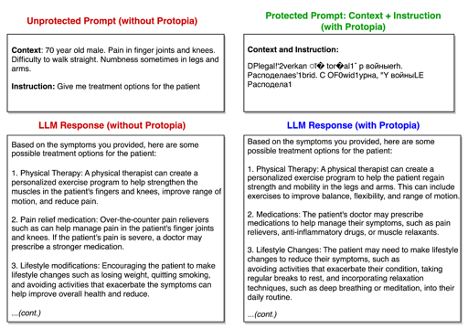

In the following examples, the left side of each figure shows the unprotected prompts, questions, instructions, source documents, and relevant material in plain text. The right side shows the protection that SGT provides while functionality with the LLM is retained. The protected prompt in each example shows what the information sent to the LLM would look like if the SGT representation were reconstructed into the closest possible approximation of human-readable text. This reconstruction doesn’t happen during operation, and the LLM doesn’t need it to function; it only illustrates how SGT protects the original raw information. Each example also compares the response that the LLM generates from the unprotected text with the response it generates from Protopia’s transformed, protected input. In each case, the LLM can produce the same response from the protected input as from the unprotected prompt.

Prompt protection in healthcare

The healthcare industry is poised for fundamental transformation through the widespread use of LLMs: automating administrative tasks, speeding up communication, working with electronic health records, and creating personalized treatment plans. The capability to efficiently analyze vast amounts of literature, records, and notes helps healthcare professionals extract valuable insights to expedite diagnosis and create treatment plans. Building robust privacy mechanisms and data protection around these tools may become necessary for ethics and regulatory compliance. The following figure shows an example of instruction-following applied to assisting a patient through a chatbot.

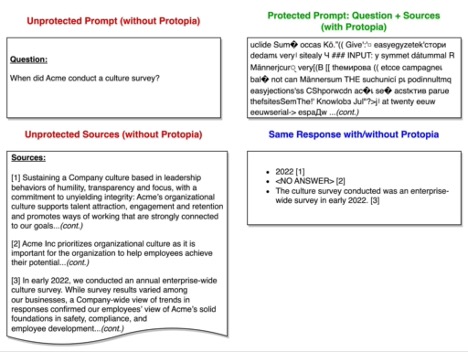

Protected RAG pipelines in finance

The finance industry has often been an early adopter of advances in data science and machine learning (ML) because of the large volumes of data it amasses and curates for fast, effective decision-making. It has embraced ML in areas such as risk assessment, algorithmic trading, customer service, and fraud detection. LLMs have further strengthened the ability to analyze large volumes of financial documents and support research and development activities. Retrieval-augmented generation (RAG) is popular in financial use cases that require explicitly knowing which sources the LLM’s information comes from. However, this method adds the responsibility of guarding against source documents leaking sensitive information outside the enterprise’s trust zone.

The following example shows how to implement RAG securely by transferring only randomized re-representations of the source documents to the LLM, eliminating the possibility of sensitive source documents leaking outside the enterprise in unprotected form.
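The data flow of a protected RAG pipeline can be sketched as follows, under loudly stated assumptions: retrieval (here a naive keyword-overlap ranker) runs inside the trust zone, both the retrieved context and the question are passed through a toy randomized transform, and only the resulting vectors are handed to `send_to_remote_llm`, a hypothetical stub standing in for the call to an external LLM. None of these functions are Protopia or OCI APIs, and the sample documents are made up.

```python
import random
from typing import Dict, List

DIM = 8  # hypothetical embedding size
OUTBOUND_LOG: List[dict] = []  # records everything that leaves the trust zone


def embed_token(token: str) -> List[float]:
    """Deterministic toy embedding (stand-in for the target model's embedding layer)."""
    base = random.Random(token)
    return [base.uniform(-1.0, 1.0) for _ in range(DIM)]


def transform(text: str, rng: random.Random, scale: float = 0.3) -> List[List[float]]:
    """Toy randomized re-representation: per-token embedding plus Gaussian noise."""
    return [[x + rng.gauss(0.0, scale) for x in embed_token(tok)] for tok in text.lower().split()]


def retrieve(query: str, docs: Dict[str, str], k: int = 1) -> List[str]:
    """Naive keyword-overlap retrieval that runs inside the trust zone."""
    terms = set(query.lower().split())
    ranked = sorted(docs, key=lambda name: len(terms & set(docs[name].lower().split())), reverse=True)
    return [docs[name] for name in ranked[:k]]


def send_to_remote_llm(payload: Dict[str, List[List[float]]]) -> dict:
    """Hypothetical stand-in for the network call to an LLM outside the trust zone."""
    OUTBOUND_LOG.append(payload)
    return {"status": "received", "n_vectors": sum(len(v) for v in payload.values())}


def protected_rag(query: str, docs: Dict[str, str], rng: random.Random) -> dict:
    context = retrieve(query, docs)  # plain-text documents stay inside this function
    payload = {
        "context": [vec for chunk in context for vec in transform(chunk, rng)],
        "question": transform(query, rng),
    }
    return send_to_remote_llm(payload)


# Made-up sample documents for illustration only.
docs = {
    "q3_report": "q3 revenue fell in the emea region",
    "board_memo": "merger talks with a competitor are confidential",
}
result = protected_rag("what happened to q3 revenue", docs, random.Random())
```

The design choice worth noting is where the boundary sits: the source documents and the retrieval step never cross it, so the only thing the outbound log ever contains is numeric vectors.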

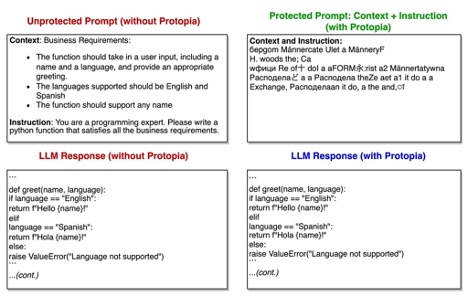

Protected copilot in software development

LLMs are disrupting software development practices across industries by generating code drafts, correcting and refactoring code, and generating test cases for existing code. However, gaining these efficiency benefits usually involves exposing code, design documents, and requirements to the LLM. The risk of leaking trade secrets and intellectual property through plain-text code can be a major barrier to taking full advantage of GenAI for software development.

The following example demonstrates how to use SGT to not fall behind in software development advancements by preventing the need to send unprotected code or design documents to LLMs.

Protecting fine-tuning data to specialize LLMs

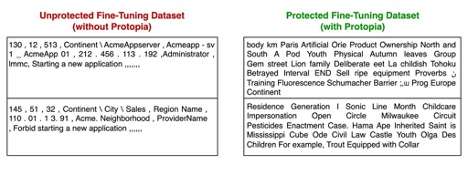

SGT isn’t limited to protecting data at inference time; it can also protect data used to fine-tune a foundation model. The transformation for fine-tuning datasets is created the same way as for inference data. The next section in this post discusses how to achieve this goal on Oracle Cloud Infrastructure (OCI). The transformation is created for the foundation model to be fine-tuned, without accessing the fine-tuning data. After the SGT has been created and trained for the foundation model, the fine-tuning dataset is transformed into randomized re-representations, which are then used to fine-tune the foundation model. The accompanying tech brief explains this process in more detail.
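The ordering of the fine-tuning workflow described above can be sketched in a few lines. This is a toy illustration under stated assumptions: `create_transform` is a hypothetical helper that depends only on a property of the target model (its embedding size) and never sees the records, `transform_dataset` applies that transform inside the trust zone, and passing labels through unchanged is an assumption made for this classification-style example, not a documented SGT behavior. The log records are made up.

```python
import random
from typing import Callable, List, Tuple

Vectors = List[List[float]]


def create_transform(embed_dim: int, scale: float = 0.3) -> Callable[[str], Vectors]:
    """Hypothetical: built from properties of the target model only (here, its
    embedding size), without looking at any fine-tuning records."""
    noise = random.Random()

    def transform(text: str) -> Vectors:
        vectors = []
        for tok in text.split():
            base = random.Random(tok)  # deterministic toy embedding per token
            vectors.append([base.uniform(-1.0, 1.0) + noise.gauss(0.0, scale) for _ in range(embed_dim)])
        return vectors

    return transform


def transform_dataset(
    records: List[Tuple[str, str]], transform: Callable[[str], Vectors]
) -> List[Tuple[Vectors, str]]:
    """Transform each input inside the trust zone; labels pass through unchanged (an assumption)."""
    return [(transform(text), label) for text, label in records]


# Made-up log records for illustration only.
records = [
    ("src=10.0.0.5 dst=10.0.0.9 port=22 status=ok", "normal"),
    ("src=10.0.0.7 dst=203.0.113.4 port=4444 status=ok", "anomalous"),
]
transform = create_transform(embed_dim=4)          # step 1: once per foundation model
protected = transform_dataset(records, transform)  # step 2: transform the dataset
# step 3 (not shown): hand `protected` to the fine-tuning job
```

The same transform object could serve inference prompts later, which mirrors the post’s point that fine-tuning and inference data are protected the same way.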

In the following example, an enterprise customer needed to fine-tune an existing model for network log anomaly detection. They used SGT to transform the sensitive fine-tuning dataset into randomized embeddings and fine-tuned their foundation model on those embeddings. The resulting detection model achieved almost identical accuracy to a model fine-tuned on the unprotected dataset. The following figure shows two plain-text data records from the fine-tuning dataset alongside a text reconstruction of the same records from the transformed fine-tuning dataset.

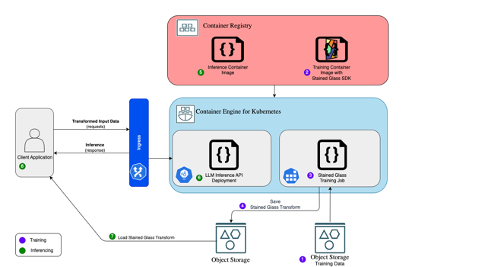

Stained Glass Transform on OCI

Enterprises on OCI can seamlessly use Protopia AI SGT within their existing practices for training LLMs to create a Stained Glass Transform for their models. This one-time process creates the transformation for the intended foundation model. As the following figure shows, Stained Glass Transform is then deployed within the enterprise’s trust zone to modify each prompt before it leaves the enterprise’s perimeter, regardless of the chosen deployment option, such as software as a service (SaaS) or cloud-based deployment. This configuration helps ensure that enterprises can continue prompting LLMs while retaining ownership of their plain-text queries and context, addressing privacy and ownership concerns.
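The deployment pattern, one transform created once and placed at the perimeter in front of whichever backend is chosen, can be sketched as a small gateway. Everything here is hypothetical and illustrative: `ToyTransform` is a toy randomized embedding rather than SGT, and `SaaSBackend` and `CloudBackend` are stubs that only record what they receive; they don’t correspond to any OCI or Protopia API.

```python
import random
from typing import List, Optional, Protocol

Vectors = List[List[float]]


class LLMBackend(Protocol):
    """Any deployment option that accepts transformed vectors."""

    def generate(self, vectors: Vectors) -> str: ...


class SaaSBackend:
    """Hypothetical stub for a managed (SaaS) LLM endpoint."""

    def __init__(self) -> None:
        self.received: List[Vectors] = []

    def generate(self, vectors: Vectors) -> str:
        self.received.append(vectors)
        return f"saas: processed {len(vectors)} vectors"


class CloudBackend:
    """Hypothetical stub for an LLM the enterprise runs on cloud infrastructure."""

    def __init__(self) -> None:
        self.received: List[Vectors] = []

    def generate(self, vectors: Vectors) -> str:
        self.received.append(vectors)
        return f"cloud: processed {len(vectors)} vectors"


class ToyTransform:
    """Toy randomized transform, created once and reused for every prompt."""

    def __init__(self, dim: int = 4, scale: float = 0.3, seed: Optional[int] = None) -> None:
        self.dim = dim
        self.scale = scale
        self._noise = random.Random(seed)

    def __call__(self, text: str) -> Vectors:
        vectors = []
        for tok in text.split():
            base = random.Random(tok)  # deterministic toy "embedding" per token
            vectors.append(
                [base.uniform(-1.0, 1.0) + self._noise.gauss(0.0, self.scale) for _ in range(self.dim)]
            )
        return vectors


class TrustZoneGateway:
    """Runs inside the enterprise perimeter: every outbound prompt is transformed first."""

    def __init__(self, transform: ToyTransform, backend: LLMBackend) -> None:
        self.transform = transform
        self.backend = backend

    def prompt(self, text: str) -> str:
        return self.backend.generate(self.transform(text))


transform = ToyTransform()  # one-time creation, reused across backends
saas, cloud = SaaSBackend(), CloudBackend()
replies = [TrustZoneGateway(transform, b).prompt("summarize the attached contract") for b in (saas, cloud)]
```

Because the gateway depends only on the `generate` interface, swapping the deployment option doesn’t change what crosses the perimeter: in both cases the backend receives vectors, never the plain-text prompt.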

Conclusion

Through OCI Generative AI services and OCI AI infrastructure-based inference solutions, Oracle is making efficient deployment possible for its enterprise customers by seamlessly integrating versatile LLMs for use cases such as writing assistance, summarization, and chat, specialized by industry. Protopia AI Stained Glass Transform represents a groundbreaking solution to data privacy and can accelerate the adoption of LLMs in enterprises using OCI. With this technology in place, enterprises can confidently use LLMs for better predictions and outcomes while maintaining control over their sensitive information. This innovative approach is set to transform the landscape of enterprise AI adoption.

To learn more about this technology, read further in the technical brief and connect with Protopia AI to get access and try it on your enterprise data. You can also find more information about Oracle Cloud Infrastructure AI Infrastructure.