Oracle Machine Learning Services (OML Services) on Oracle Autonomous Database now supports batch scoring for in-database models using REST endpoints. With OML, you can already build in-database models that reside in your database schema and deploy them immediately through SQL queries for both batch and real-time scoring applications. With OML Services, you can now also deploy those same models through REST endpoints hosted in Oracle Autonomous Database to run batch scoring.

Initially, OML Services scoring supported singleton and small batch scoring using synchronous REST APIs, where input data was provided as part of the payload and the output returned in the response. The addition of asynchronous requests in the OML Services REST API enables scoring of large batches of data that reside in the database. Both the data source and scoring results remain in the user’s schema and scoring occurs directly in the database for optimal scalability and efficiency.

OML Services supports batch scoring for regression, classification, clustering, and feature extraction machine learning techniques.

OML Services Batch Scoring Basics

You start a batch scoring job by sending a POST request to the /omlmod/v1/jobs endpoint. You’ll need the model ID in OML Services of the model you want to use for scoring, which you can obtain by sending a GET request to the deployment endpoint and specifying the model URI. The model URI is the one you specified when deploying the model via the OML AutoML UI or a REST client.
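
As a rough sketch of that lookup, the helpers below request a deployment's details and pull the model ID out of the response. The path /omlmod/v1/deployment/<URI>, the "modelId" field name, and both helper names are assumptions made for illustration; check the OML Services REST reference for the exact request and response shape.

```shell
# Sketch only: the endpoint path and the "modelId" field name are assumptions.

# Print the value of a "modelId" string field found in JSON read from stdin
# (first matching line only).
extract_model_id() {
  sed -n 's/.*"modelId"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Fetch deployment details for a model URI and print its model ID.
# Expects ${omlservice} and ${token} to be set in the environment.
get_model_id() {
  curl -s -X GET "${omlservice}/omlmod/v1/deployment/$1" \
    --header "Authorization: Bearer ${token}" \
    --header 'Accept: application/json' | extract_model_id
}

# Usage (hypothetical model URI):
#   model_id=$(get_model_id "nn_mod1")
```

The text-based extraction keeps the sketch free of extra tools; a JSON-aware parser is the safer choice if one is available.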

To create a job, specify the job name and type, schedule frequency, maximum number of runs, the model ID, the input data table name, and an output table name to store the results. You can also include supplemental columns, such as a unique ID for your records, to join the results with other tables or simply to identify the associated scores.

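Here’s an annotated example to give you an idea of what you can specify when creating a batch scoring job for classification. The # annotations explain each field and are not valid JSON, so remove them before sending the request. For full details, see the example notebook in our OML GitHub repository.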

$ curl -X POST "${omlservice}/omlmod/v1/jobs" \
  --header "Authorization: Bearer ${token}" \
  --header 'Content-Type: application/json' \
  --data '{
    "jobSchedule": {
      "jobStartDate": "2023-03-13T21:10:23Z", # job start date and time
      "jobEndDate": "2023-03-17T21:10:23Z", # job end date and time
      "repeatInterval": "FREQ=DAILY", # job frequency
      "maxRuns": "4" # max runs within the schedule
    },
    "jobProperties": {
      "jobName": "NN_MOD1", # job name
      "jobType": "MODEL_SCORING", # job type: MODEL_SCORING
      "modelId": "35a97c7b-0ff4-4940-96f5-bfb29f64d223", # ID of the model used for scoring
      "inputData": "CUSTOMERS360", # table or view to read input for the batch scoring job
      "outputData": "CLASS_PRED1", # output table to store results, named in the format {jobId}_{outputData}
      "supplementalColumnNames": ["CUST_ID", "AFFINITY_CARD"], # array of columns from input data to identify output rows
      "jobServiceLevel": "MEDIUM", # database service level for the job (default: LOW)
      "topN": 2, # filters the results by returning the highest N probabilities
      "topNDetails": 2, # returns the top N most important predictors and their corresponding weights
      "recompute": "true" # flag to determine whether to overwrite the result table
    }
  }'
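
Because JSON has no comment syntax, the request body has to be sent without the # annotations. The following is a minimal sketch of one way to do that from a script: a hypothetical helper, build_scoring_payload, prints a comment-free body with the same fields as the annotated example, and a second hypothetical helper pipes it to curl. Neither helper is part of OML Services, and the schedule and job name are simply copied from the example.

```shell
# Sketch only: build_scoring_payload and submit_scoring_job are hypothetical helpers.

# Print a comment-free JSON body for a scoring job.
#   $1 = model ID, $2 = input table or view, $3 = output table name
build_scoring_payload() {
  cat <<EOF
{
  "jobSchedule": {
    "jobStartDate": "2023-03-13T21:10:23Z",
    "jobEndDate": "2023-03-17T21:10:23Z",
    "repeatInterval": "FREQ=DAILY",
    "maxRuns": "4"
  },
  "jobProperties": {
    "jobName": "NN_MOD1",
    "jobType": "MODEL_SCORING",
    "modelId": "$1",
    "inputData": "$2",
    "outputData": "$3",
    "supplementalColumnNames": ["CUST_ID", "AFFINITY_CARD"],
    "jobServiceLevel": "MEDIUM",
    "topN": 2,
    "topNDetails": 2,
    "recompute": "true"
  }
}
EOF
}

# Submit the job, reading the body from stdin (--data @-).
# Expects ${omlservice} and ${token} to be set in the environment.
submit_scoring_job() {
  build_scoring_payload "$@" | curl -s -X POST "${omlservice}/omlmod/v1/jobs" \
    --header "Authorization: Bearer ${token}" \
    --header 'Content-Type: application/json' \
    --data @-
}

# Usage:
#   submit_scoring_job "35a97c7b-0ff4-4940-96f5-bfb29f64d223" CUSTOMERS360 CLASS_PRED1
```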

After submitting your job, you can check whether the asynchronous job has finished by sending a request to view its details.

$ curl -X GET "${omlservice}/omlmod/v1/jobs/${jobid}" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header "Authorization: Bearer ${token}"
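
Since the job runs asynchronously, a script usually has to repeat this request until the job is done. The sketch below shows one way to poll; job_details and wait_for_match are hypothetical helpers, and the text that marks a finished job is passed in as a pattern because the response fields are not shown here. Inspect a real response before choosing the pattern.

```shell
# Sketch only: job_details and wait_for_match are hypothetical helpers.

# Print the details of job ${jobid}.
# Expects ${omlservice}, ${token}, and ${jobid} to be set in the environment.
job_details() {
  curl -s -X GET "${omlservice}/omlmod/v1/jobs/${jobid}" \
    --header 'Accept: application/json' \
    --header "Authorization: Bearer ${token}"
}

# Run a command repeatedly until its output matches a pattern.
#   $1 = command or function that prints the job details
#   $2 = extended regex that signals completion (an assumption about the response)
#   $3 = maximum number of attempts
#   $4 = seconds to sleep between attempts
# Returns 0 on a match, 1 if the attempts run out.
wait_for_match() {
  attempt=1
  while [ "$attempt" -le "$3" ]; do
    if "$1" | grep -Eq "$2"; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$4"
  done
  return 1
}

# Usage (the SUCCEEDED marker is an assumption, not a documented value):
#   wait_for_match job_details 'SUCCEEDED' 30 10 && echo "job finished"
```

Capping the number of attempts keeps a failed or long-running job from blocking the script indefinitely.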

When the job completes, view the results in the result table, which is named {jobid}_{outputData}; in this example, ‘OML$385E413C_7219_41A4_B5A2_DC6B91B3DD8E_CLASS_PRED1’.

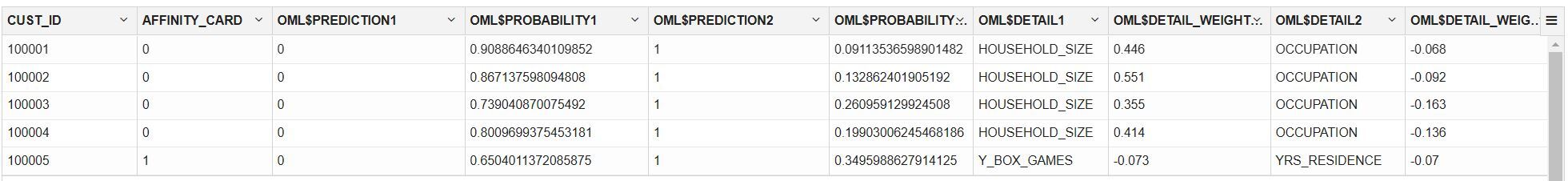

SELECT * FROM OML$385E413C_7219_41A4_B5A2_DC6B91B3DD8E_CLASS_PRED1 ORDER BY CUST_ID FETCH FIRST 5 ROWS ONLY;

You’ll notice that the output provides the scoring results for each record in a single row, as opposed to the “transactional” representation provided by the SQL API, which is an added convenience. In addition to the possible outcomes, 0 and 1, and their corresponding probabilities, we’ve asked for prediction details, which indicate the predictors that contribute most to the result along with their corresponding weights.

For the first customer, with ID 100001, the actual target value is in column AFFINITY_CARD. The probability of 0 is in column OML$PROBABILITY1 (0.909), and the probability of 1 is in column OML$PROBABILITY2 (0.091). HOUSEHOLD_SIZE has the most influence on this prediction, with weight 0.446, followed by OCCUPATION with weight -0.068; the sign of each weight indicates the direction of its impact.
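
When the result lookup is scripted, the {jobid}_{outputData} naming convention can be applied mechanically. The sketch below uses two hypothetical helpers, result_table and result_query, to build the table name and the query shown earlier. One practical detail: the table name contains a $ character, so it should be single-quoted in the shell to avoid variable expansion.

```shell
# Sketch only: result_table and result_query are hypothetical helpers.

# Print the result table name, following the {jobid}_{outputData} convention.
#   $1 = job ID, $2 = outputData value from the job request
result_table() {
  printf '%s_%s\n' "$1" "$2"
}

# Print a query for the first N rows of the result table.
#   $1 = job ID, $2 = outputData, $3 = column to order by, $4 = row count
result_query() {
  printf 'SELECT * FROM %s ORDER BY %s FETCH FIRST %s ROWS ONLY;\n' \
    "$(result_table "$1" "$2")" "$3" "$4"
}

# Usage: single quotes stop the shell from expanding "$385E413C..." as a variable.
#   result_query 'OML$385E413C_7219_41A4_B5A2_DC6B91B3DD8E' CLASS_PRED1 CUST_ID 5
# prints:
#   SELECT * FROM OML$385E413C_7219_41A4_B5A2_DC6B91B3DD8E_CLASS_PRED1 ORDER BY CUST_ID FETCH FIRST 5 ROWS ONLY;
```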

OML Services

OML Services on Autonomous Database makes it easy for you to manage, deploy, and use machine learning models from a REST API. OML Services supports your MLOps needs with model management, deployment, and monitoring, while benefiting from system-provided infrastructure and an integrated database architecture. The model management and deployment services on Autonomous Database enable you to deploy in-database machine learning models from both on-premises Oracle Database and Autonomous Database for classification, regression, clustering, and feature extraction machine learning techniques.

We’ve optimized OML Services for scoring/inferencing in support of streaming and real-time applications. And, unlike other solutions that require provisioning a VM for 24/7 availability, OML Services is included with Oracle Autonomous Database, so users pay only for the additional compute used when producing actual predictions.

For more information

Check out the recent OML Office Hours session on OML Services data and model monitoring, which illustrates the REST API with demonstrations. See the OML Services documentation for additional details and examples, and the example notebooks in our OML GitHub repository. Learn more about Oracle Machine Learning and try OML Services on Oracle Autonomous Database using your Always Free access, or explore via the OML Fundamentals hands-on workshops on Oracle LiveLabs.