Day-to-day operations of your HCM cloud environment generate transient data in several areas that can (and should) be cleaned up periodically.

Keeping the transient data to a minimum can help with overall performance and can also help with environment refresh times.

Most people are aware of this transient data in the staging tables of the HDL loads, but there are a number of other areas that also accumulate transient data.

I’ll briefly address some of the more important areas to look at, and how to ensure this extra data stays cleaned up. This article is the first in a series; later articles will discuss functional areas such as Benefits.

HDL and HSDL

Both HCM Data Loader and the HCM Spreadsheet Data Loader use staging tables while importing and loading data. Over time these tables can get quite large, so it’s important to keep this data to a minimum.

Staging Table Basics

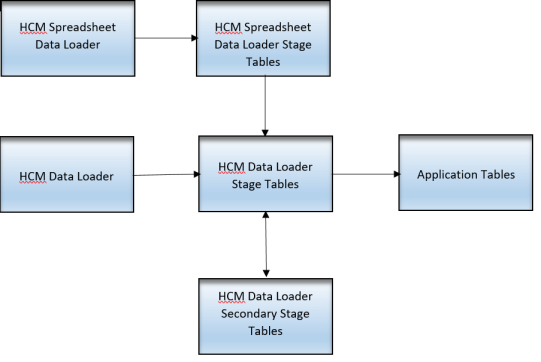

Data that is being brought into HCM is first loaded into stage tables, and once there it’s then loaded to the application tables.

The Spreadsheet Loader, besides using the HDL staging area, uses an additional stage table area.

Extended Retention

For objects that support rollback, there is a secondary area that preserves data from ordinary purging. In the Import and Load UI, data sets that are retained in this area are indicated by a green tick in the Extended Retention column. You can also designate a specific data set as an Extended Retention data set with the SET parameter EXTEND_DATA_SET_RETENTION. Note, though, that setting this parameter increases the load time of that data set.
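As a rough illustration of the SET parameter, the Python sketch below prepends the instruction to the text of a data file before it is zipped and loaded. The helper name `with_extended_retention`, the sample file content, and the `Y` value are assumptions made for illustration; check the HCM Data Loader documentation for the exact syntax your release expects.

```python
# Assumed form of the instruction; the parameter name comes from the article,
# the 'Y' value is an assumption to verify against the HDL documentation.
SET_LINE = "SET EXTEND_DATA_SET_RETENTION Y"


def with_extended_retention(dat_text: str) -> str:
    """Return the .dat text with the retention SET line at the top.

    If the file already sets EXTEND_DATA_SET_RETENTION, it is returned unchanged.
    """
    lines = dat_text.splitlines()
    already_set = any(
        line.strip().upper().startswith("SET EXTEND_DATA_SET_RETENTION")
        for line in lines
    )
    if already_set:
        return dat_text
    return "\n".join([SET_LINE, *lines]) + "\n"


# Hypothetical file content, used only to show the helper in action.
sample = "METADATA|Example|Attr\nMERGE|Example|1\n"
print(with_extended_retention(sample))
```

Because the parameter increases load time, a helper like this would normally be applied only to the data sets you expect to roll back.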

Stage data is managed by either purging or archiving the data in these tables.

- Purge – removes all data, source and output files

- Archive – removes data lines but preserves status, record counts and errors.

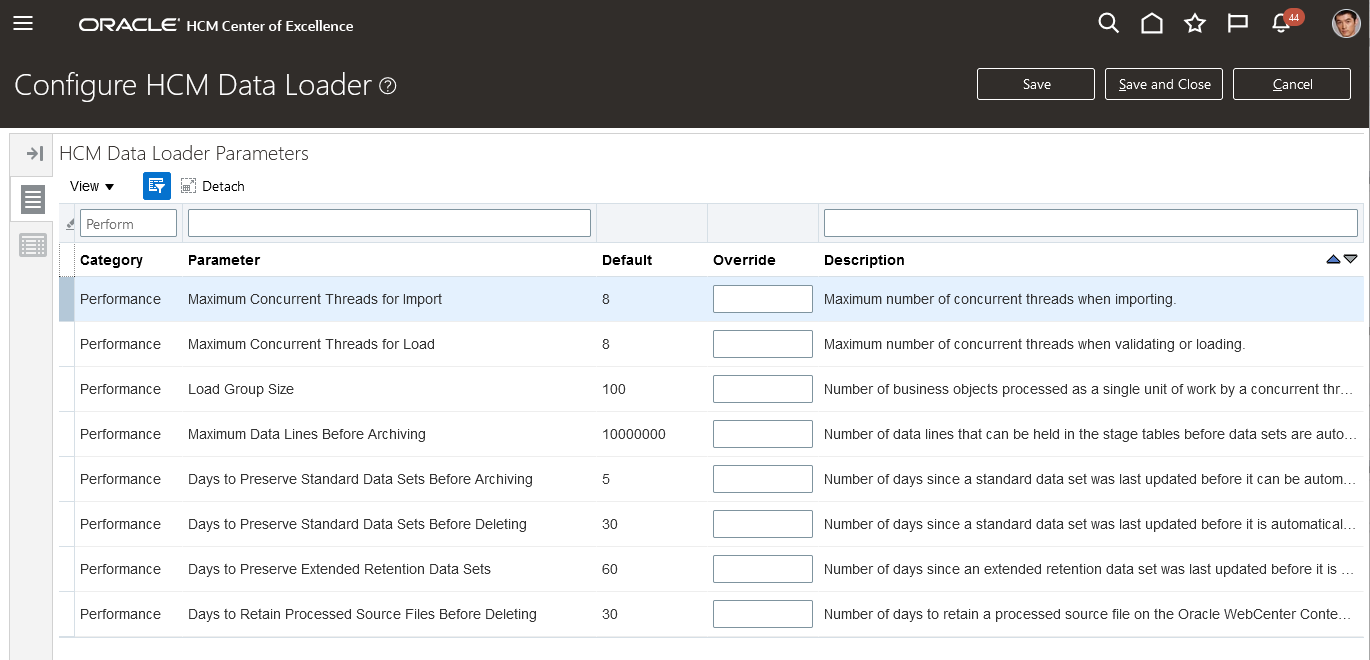

Fortunately these processes have become more automated, although it’s important to understand and tune the default parameters for purging and archiving. There are also cases when one would want to run these jobs manually.

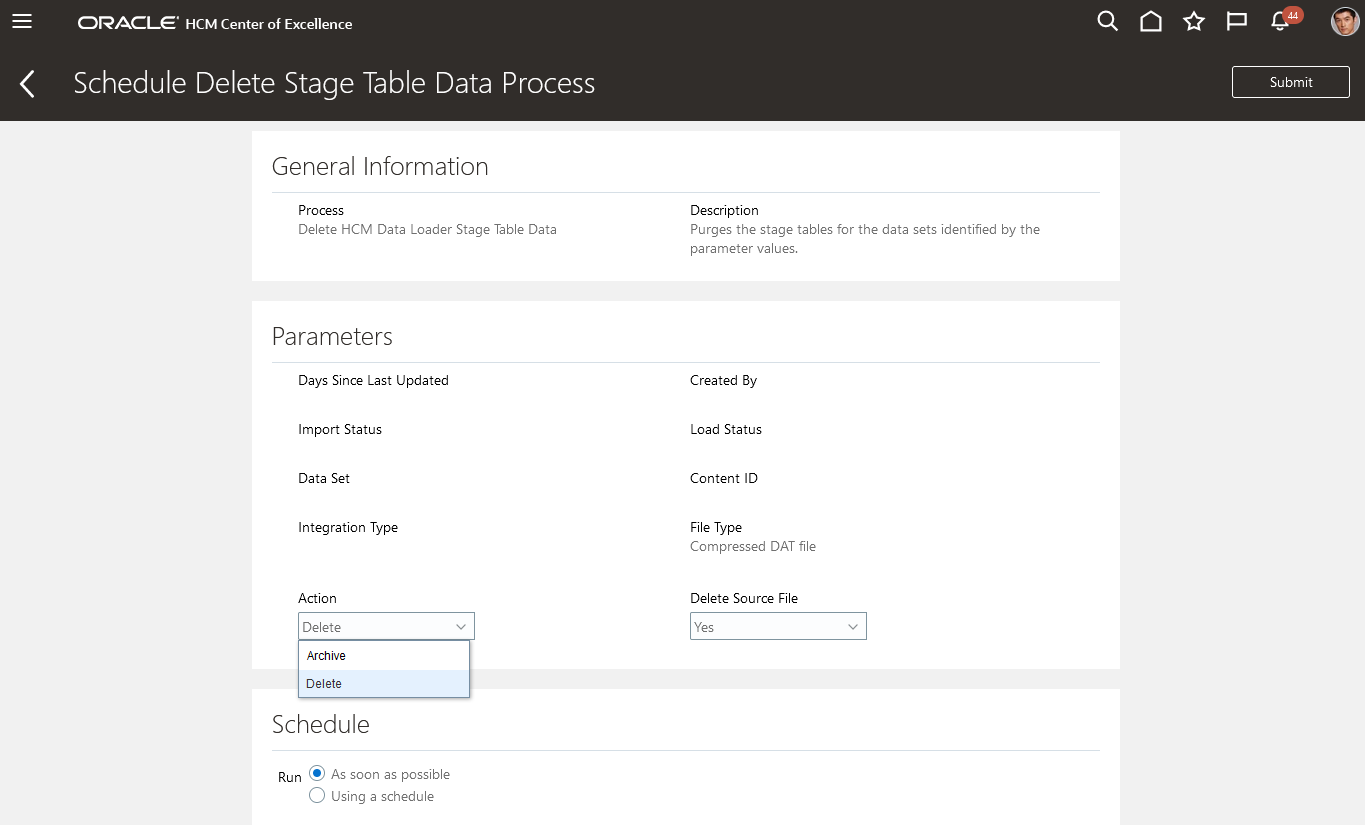

From the UI, the Delete Stage Table Data or Delete Spreadsheet Stage Table Data tasks can be invoked to purge or manually archive data sets. One can also manage specific data sets using the Delete button in the Import and Load Data UI. Behind the scenes these tasks run the Delete HCM Data Loader Stage Table Data or Delete HCM Spreadsheet Data Loader Stage Table Data processes that can be seen in the Schedule Processes area.

When a purge job is run and no schedule exists for the underlying process, one is created to run daily. An archive process cannot be scheduled; it must be run manually.

The default behavior is to:

- Purge standard data sets that have not been updated in 30 days and extended data sets that have not been updated in 60 days

- Archive additional data if the number of data lines is greater than the maximum-lines parameter (5 million) and the number of days is greater than the archive parameter (5 days)

- Archive older data sets first, stopping when the number of lines drops below the threshold
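The default behavior above can be modeled in a few lines of Python. This is only a sketch of the rules as described here, not Oracle's implementation: the `DataSet` class, the `plan_cleanup` function, the strict "greater than" boundary handling, and the choice to let extended data sets take part in archiving are all assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DataSet:
    name: str
    last_updated: date
    lines: int
    extended: bool = False  # True for Extended Retention data sets


def plan_cleanup(data_sets, today, purge_days=30, extended_purge_days=60,
                 max_lines=5_000_000, archive_days=5):
    """Return (to_purge, to_archive) lists of data set names."""
    to_purge, remaining = [], []
    for ds in data_sets:
        age = (today - ds.last_updated).days
        limit = extended_purge_days if ds.extended else purge_days
        if age > limit:
            to_purge.append(ds.name)
        else:
            remaining.append(ds)

    # Archive oldest first until the total line count is within the threshold.
    total = sum(ds.lines for ds in remaining)
    to_archive = []
    for ds in sorted(remaining, key=lambda d: d.last_updated):
        if total <= max_lines:
            break
        if (today - ds.last_updated).days > archive_days:
            to_archive.append(ds.name)
            total -= ds.lines
    return to_purge, to_archive
```

Running a model like this against your own load history is one way to see how a change to the day or line thresholds would affect what stays in the staging tables.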

The fewer lines stored in the staging tables the better, so it’s good to tune these parameters for your environment’s usage patterns.

It’s also best practice to purge between large HDL loads and when doing bulk activities such as cutover or other large data loads.

HCM Extracts

HCM Extracts that are run as part of integrations or during the payroll processing tasks generate a lot of transient data. This should be periodically cleaned up, and there are two main processes that accomplish this.

Purge HCM Extracts Archive

This process removes HCM Extracts archive data. In this case “HCM Extract archive data” refers to transient data used in the generation of the delivered output. The purge process removes data older than 90 days. It can be run for specific extract definitions or for all of them, in either Report or Commit mode.

Starting in 22A, there is an automatic purge of older HCM Extracts archive data. All HCM Extracts archive data are retained for 90 days from the date the archives are created. Archives generated by customer-defined full run extracts that are older than 90 days are automatically purged to improve the efficiency of extract runs. The automatic purge applies to runs that are triggered after your 22A upgrade.

The way this new process works is as follows.

All the archive data (full and changes-only) currently resides in the PAY_ACTION_INFORMATION table.

With this new feature, the archive data associated with the customer-defined full extracts will flow into a new table: PAY_TEMP_ACTION_INFORMATION.

Data in this new temp table will be dropped after 90 days. This process is much more efficient and quicker compared to the traditional purge archive process that we currently have.

Changes-only extracts will continue to use the PAY_ACTION_INFORMATION table. The new process changes only the way archives are stored for full extracts.
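The routing just described can be summarized as a small Python model. The table names and the 90-day window come from the text above; the function names and the `"full"` / `"changes_only"` mode strings are hypothetical labels chosen for this sketch, which does not query or modify any Oracle table.

```python
from datetime import date

RETENTION_DAYS = 90  # retention window stated for archive data


def archive_table(extract_mode: str, customer_defined: bool) -> str:
    """Name of the table that holds the archive for a given extract run."""
    if extract_mode == "full" and customer_defined:
        return "PAY_TEMP_ACTION_INFORMATION"
    return "PAY_ACTION_INFORMATION"


def auto_purged(extract_mode: str, customer_defined: bool,
                created: date, today: date) -> bool:
    """True if the archive sits in the temp table and is past retention."""
    in_temp = archive_table(extract_mode, customer_defined) == "PAY_TEMP_ACTION_INFORMATION"
    return in_temp and (today - created).days > RETENTION_DAYS
```

Anything for which `auto_purged` returns `False` after 90 days is still a candidate for the Purge HCM Extracts Archive process.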

Delete HCM Extracts Documents

This process purges specific types of HCM Extracts documents that are older than 90 days. It does not purge the Payments, Payroll Archive, and Payslip extract types; it purges only customer-defined extracts and extracts with a predefined retention period. It can be run for all custom extracts or for a specific extract definition, in either Report or Commit mode. You cannot change the defined retention period of 90 days.

For reference, see the HCM Extracts documentation (https://docs.oracle.com/en/cloud/saas/human-resources/22c/fahex/).

Workflow Tasks

Workflow tasks in a final status are automatically copied to archive tables daily. Archiving ensures the task is retained for auditing, data retention, analytics or other purposes. The archive keeps task details, history, attachments and comments. This archive data can be viewed via the OTBI subject area Human Capital Management – Approval Notification Archive Real Time.

After archiving, if a task is not modified in 30 days, it is purged from the workflow task area. At this point neither the task nor its approval history is visible in the BPM Worklist or in the Notifications and Approvals work area. The data still resides in the archive area, though, and can be viewed via OTBI as mentioned.
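To make the lifecycle concrete, here is a minimal Python sketch of where a task can be seen at a given point in time. The function name, the location labels, and the simplification that the daily archive copy has already happened for any task in a final status are assumptions for illustration only.

```python
from datetime import date


def task_locations(final_status: bool, last_modified: date, today: date,
                   purge_after_days: int = 30) -> set:
    """Return where a workflow task is visible: 'worklist', 'archive', or both."""
    if not final_status:
        # Only tasks in a final status are copied to the archive tables.
        return {"worklist"}
    idle_days = (today - last_modified).days
    if idle_days > purge_after_days:
        # Purged from the workflow task area; still reportable via OTBI.
        return {"archive"}
    return {"worklist", "archive"}
```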

Approval Transactions

To improve the performance of the Transaction Console, you can set up archiving of completed transactions to an archive area so that they no longer show in the Transaction Summary area. To do this, run the Archive Transaction Console Completed Transactions scheduled process. Note that to be archived, a transaction must have been in a completed state for longer than the retention period, and all associated tasks must have been purged (see Workflow Tasks).
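The eligibility rule can be written as a simple predicate. This Python sketch is only a restatement of the conditions above: the `"COMPLETED"` status string and the parameter names are hypothetical, and the retention period is passed in because its value is not stated here.

```python
from datetime import date


def can_archive_transaction(status: str, completed_on: date, today: date,
                            retention_days: int, unpurged_task_count: int) -> bool:
    """True when a transaction meets all conditions for archiving."""
    if status != "COMPLETED":
        return False
    older_than_retention = (today - completed_on).days > retention_days
    # Every associated workflow task must already have been purged.
    return older_than_retention and unpurged_task_count == 0
```

The dependency on task purging means a transaction can stay in the Transaction Summary for some time after its own retention period has passed.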

ATOM feeds

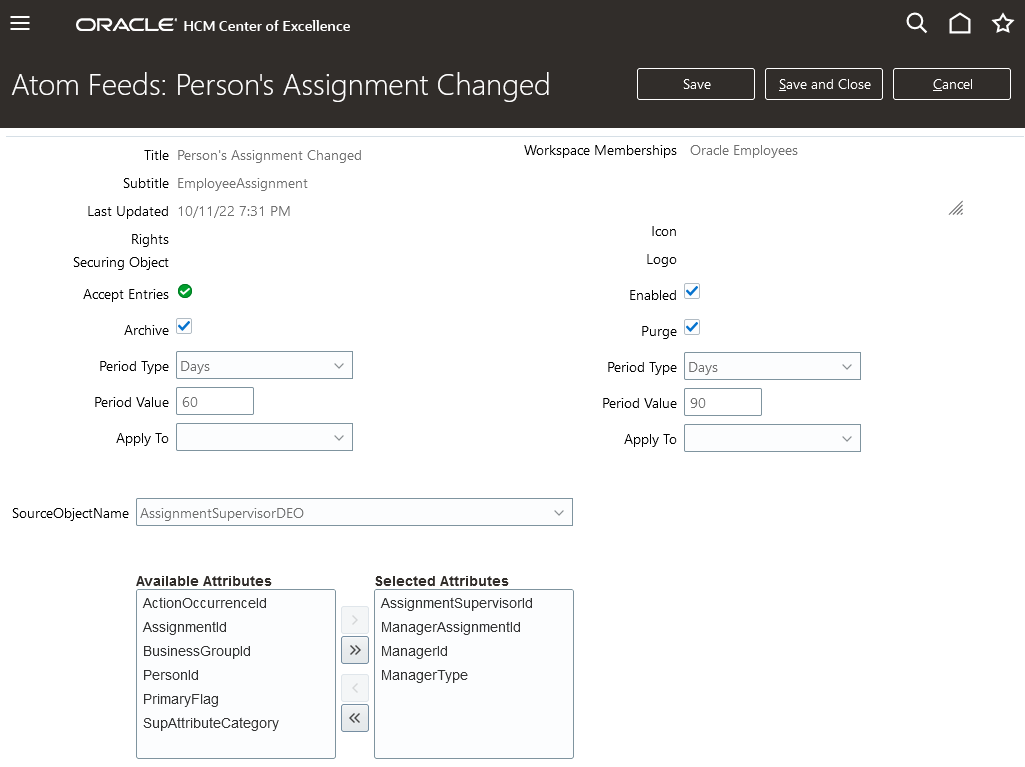

ATOM feeds are automatically archived and purged by a job that runs every month. You can also run the on-demand job Purge Atom Feed Entries from Oracle Fusion Schema.

Archiving the feeds keeps the entries in the database table, but marks those entries so that they don’t appear in normal feeds.

Purge removes the entries entirely from the underlying tables.

Each feed can have a different timeframe for archive and purge, but the default is 60 days for archive and 90 days for purge.

User request feeds have a different default, which is 90 days for archive and 1 year for purge.

From the Manage HCM ATOM feeds UI, you can adjust the archive and purge periods for individual feeds.
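The defaults and the per-feed override can be pictured with a short Python model. The class and function names are hypothetical, "1 year" is taken as 365 days, and the strict "greater than" comparison is an assumption; the sketch only classifies an entry by age and does not call any Oracle service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedRetention:
    archive_after_days: int
    purge_after_days: int


DEFAULT = FeedRetention(archive_after_days=60, purge_after_days=90)
USER_REQUEST = FeedRetention(archive_after_days=90, purge_after_days=365)


def entry_state(age_days: int, retention: FeedRetention = DEFAULT) -> str:
    """Classify a feed entry as 'active', 'archived', or 'purged' by its age."""
    if age_days > retention.purge_after_days:
        return "purged"
    if age_days > retention.archive_after_days:
        return "archived"  # kept in the table but hidden from normal feeds
    return "active"
```

For example, a 75-day-old entry would be archived under the default settings but still active in a user request feed.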

Summary

Some of these processes have been automated for you, but others will still need to be scheduled to be run regularly. Key actions for you are to double-check settings for HDL Stage table purging, and to ensure ATOM feeds are not changed from the defaults. Additionally, please ensure that the jobs Delete HCM Extracts Documents and Archive Transaction Console Completed Transactions are scheduled.

| Area | Action needed | Actions done for you |
|---|---|---|
| HDL and HSDL | Double-check settings for Max data lines and days to preserve data. If you want to archive rather than purge data, this must be done manually. | Stage Tables are automatically purged. |
| HCM Extracts | Schedule Delete HCM Extracts Documents job. | HCM Extracts Archive data for full custom extracts are automatically purged after 90 days. |
| Workflow Tasks | No action needed | Workflow tasks in final statuses are automatically archived and purged |
| Approval Transactions | Schedule Archive Transaction Console Completed Transactions job to improve performance of the Transaction Console | |
| ATOM feeds | Ensure ATOM feed settings are not changed from the defaults. | ATOM feeds will automatically be archived and purged according to the settings in Manage HCM ATOM feeds |