Introduction

One of Oracle’s top 10 predictions for developers in 2019 was that a hybrid model that falls between virtual machines and containers will rise in popularity for deploying applications.

Kata Containers are a relatively new technology that combines the speed of development and deployment of (Docker) containers with the isolation of virtual machines. In the Oracle Linux and virtualization team, we have been investigating Kata Containers and have recently released Oracle Container Runtime for Kata on Oracle Linux yum server for anyone to experiment with. In this post, I describe what Kata Containers are as well as some of the history behind this significant development in the cloud native landscape. For now, I will limit the discussion to Kata as containers in a container engine. Stay tuned for a future post on the topic of Kata Containers running in Kubernetes.

History of Containerization in Linux

The history of isolation, sharing of resources and virtualization in Linux and in computing in general is rich and deep. I will skip over much of this history to focus on some of the key landmarks on the way to Kata Containers. Two Linux kernel features are instrumental building blocks for the Docker Containers we’ve become so familiar with: namespaces and cgroups.

Linux namespaces are a way to partition kernel resources such that two different processes have their own view of resources such as process IDs, file names or network devices. Namespaces determine what system resources you can see.
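To make this concrete, here is a minimal sketch of how namespace membership can be inspected from the shell. It relies on the symbolic links the kernel exposes under `/proc/<pid>/ns/`, whose targets look like `pid:[4026531836]`. The helper names `ns_id` and `same_ns` are made up for this illustration and are not part of any tool.

```shell
#!/bin/sh
# Print the namespace identifier behind a /proc/<pid>/ns/<type> symlink.
# The link target has the form "<type>:[<inode>]".
ns_id() {
    # $1: path to a namespace symlink, e.g. /proc/self/ns/pid
    readlink "$1"
}

# Succeed only if both links can be read and name the same namespace.
same_ns() {
    a=$(ns_id "$1") || return 1
    b=$(ns_id "$2") || return 1
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, `same_ns /proc/$$/ns/net /proc/1/ns/net && echo shared || echo separate` compares the current shell's network namespace with that of PID 1. Reading another process's links generally requires root, and an unreadable link is reported as "separate" by this sketch.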

Control Groups or cgroups are a kernel feature that enables processes to be grouped hierarchically such that their use of subsystem resources (memory, CPU, I/O, etc.) can be monitored and limited. Cgroups determine what system resources you can use.
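As a rough illustration, the cgroup a process belongs to can be read from `/proc/<pid>/cgroup`, where each line has the form `hierarchy-ID:controller-list:cgroup-path`. The sketch below assumes the cgroup v1 layout, in which controllers are named on each line; on a cgroup v2 system the controller list is empty and this lookup prints nothing. The function name `cgroup_path` is hypothetical.

```shell
#!/bin/sh
# Print the cgroup path a process is in for one controller (cgroup v1 layout).
cgroup_path() {
    # $1: controller name, e.g. memory
    # $2: cgroup file to read (defaults to the current process)
    awk -F: -v c="$1" '{
        n = split($2, ctrls, ",")
        for (i = 1; i <= n; i++)
            if (ctrls[i] == c) {
                path = $0
                # Strip "hierarchy-ID:controller-list:" and keep the rest
                sub(/^[^:]*:[^:]*:/, "", path)
                print path
                exit
            }
    }' "${2:-/proc/self/cgroup}"
}
```

Running `cgroup_path memory` inside a container and again on the host would typically show different paths, which is how the kernel knows which limits apply to which processes.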

One of the earliest containerization features in Linux to combine both namespaces and cgroups was Linux Containers (LXC). LXC offered a userspace interface to make the Linux kernel containment features easy to use and enabled the creation of system or application containers. Using LXC, you could run, for example, CentOS 6 and Oracle Linux 7, two completely different operating systems with different userspace libraries and versions, on the same Linux kernel.

Docker expanded on this idea of lightweight containers by adding packaging, versioning and component reuse features. Docker Containers shortened the build-test-deploy cycle because they made it easier to package and distribute an application or service as a self-contained unit, together with all the libraries needed to run it. They have become widely used because they appeal to developers and operators alike: essentially, Docker Containers bridge the gap between dev and ops.

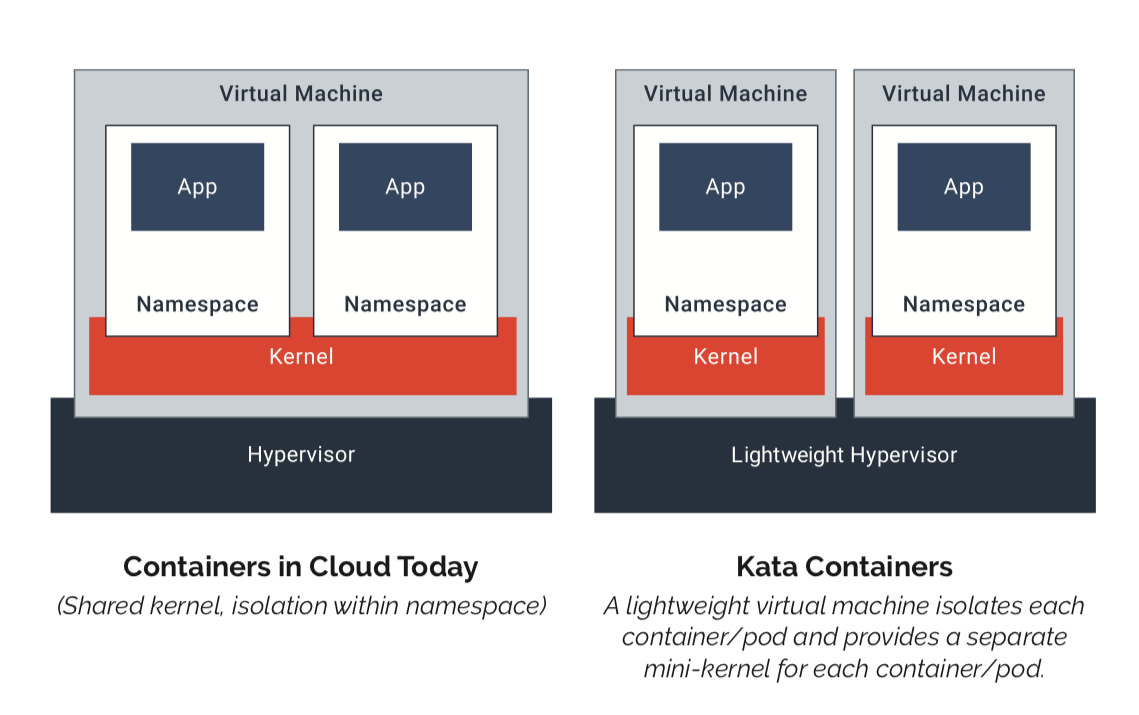

Because containers, both LXC and Docker-based, share the same underlying kernel, it is conceivable that an exploit able to escape a container could access kernel resources or even other containers. Especially in multi-tenant environments, this is something you want to avoid.

Projects like Intel® Clear Containers and Hyper runV took a different approach to parceling out system resources: their goal was to combine the strong isolation of VMs with the speed and density (the number of containers you can pack onto a server) of containers. Rather than relying on namespaces and cgroups, they used a hypervisor to run a container image.

Intel® Clear Containers and Hyper runV came together in Kata Containers, an open source project and community, which saw its first release in March of 2018.

Kata Containers: Best of Both Worlds

Because Kata Containers are lightweight VMs, they don’t share the same underlying Linux kernel, unlike traditional Linux containers or Docker Containers. Kata Containers fit into the existing container ecosystem because developers and operators interact with them through a container runtime that adheres to the Open Container Initiative (OCI) specification. Creating, starting, stopping and deleting containers works just the way it does for Docker Containers.

In summary, Kata Containers:

- Run their own lightweight OS and a dedicated kernel, offering memory, I/O and network isolation

- Can use hardware virtualization extensions (VT) for additional isolation

- Comply with the OCI (Open Container Initiative) specification as well as CRI (Container Runtime Interface) for Kubernetes

Installing Oracle Container Runtime for Kata

As I mentioned earlier, we’ve been researching Kata Containers here in the Oracle Linux team, and as part of that effort we have released software for customers to experiment with. The packages are available on Oracle Linux yum server and its mirrors in Oracle Cloud Infrastructure (OCI). Specifically, we’ve released kata-runtime and related components, as well as an optimized Oracle Linux guest kernel and guest image used to boot the virtual machine that will run a container.

Oracle Container Runtime for Kata relies on QEMU and KVM as the hypervisor to launch VMs. To install Oracle Container Runtime for Kata on a bare metal compute instance on OCI:

Install QEMU

QEMU is available in the ol7_kvm_utils repo. Enable that repo and install qemu:

    sudo yum-config-manager --enable ol7_kvm_utils
    sudo yum install qemu
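Since the runtime launches VMs through QEMU and KVM, it can be worth confirming that the host actually exposes hardware virtualization before going further. The following is a minimal sketch, not part of the Oracle packages: it looks for the `vmx` (Intel) or `svm` (AMD) CPU flag and for the `/dev/kvm` device node. The function names are invented for this example.

```shell
#!/bin/sh
# Print the hardware virtualization CPU flag found, if any.
virt_flag() {
    # $1: cpuinfo file to scan (defaults to /proc/cpuinfo)
    grep -o -w -E 'vmx|svm' "${1:-/proc/cpuinfo}" | sort -u | head -n 1
}

# Succeed if a virtualization flag is present and the KVM device exists.
kvm_ready() {
    # $1: cpuinfo file (optional), $2: KVM device path (optional)
    [ -n "$(virt_flag "${1:-/proc/cpuinfo}")" ] && [ -e "${2:-/dev/kvm}" ]
}
```

A call such as `kvm_ready && echo "KVM available" || echo "KVM not available"` gives a quick yes or no. Inside a virtual machine without nested virtualization, the check would be expected to fail.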

Install and Enable Docker

Next, install and enable Docker.

    sudo yum install docker-engine
    sudo systemctl start docker
    sudo systemctl enable docker

Install kata-runtime and Configure Docker to Use It

First, configure yum for access to the Oracle Linux Cloud Native Environment – Developer Preview yum repository by installing the oracle-olcne-release-el7 RPM:

    sudo yum install oracle-olcne-release-el7

Now, install kata-runtime:

    sudo yum install kata-runtime

To make the kata-runtime an available runtime in Docker, modify Docker settings in /etc/sysconfig/docker. Make sure SELinux is not enabled.

The line that starts with OPTIONS should look like this:

    $ grep OPTIONS /etc/sysconfig/docker
    OPTIONS='-D --add-runtime kata-runtime=/usr/bin/kata-runtime'

Next, restart Docker:

    sudo systemctl daemon-reload
    sudo systemctl restart docker
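After the restart, one way to confirm that Docker picked up the new runtime is to look at the `Runtimes:` line printed by `docker info`. The helper below is a small sketch under that assumption; `runtime_registered` is a made-up name, and it reads the `docker info` text from standard input so that it can be tried without a running daemon.

```shell
#!/bin/sh
# Succeed if the named runtime appears on the "Runtimes:" line of
# `docker info` output supplied on standard input.
runtime_registered() {
    # $1: runtime name to look for, e.g. kata-runtime
    awk -v r="$1" '
        /^[[:space:]]*Runtimes:/ {
            # Fields after the "Runtimes:" label are the runtime names
            for (i = 2; i <= NF; i++)
                if ($i == r) found = 1
        }
        END { exit !found }
    '
}
```

Typical use would be `sudo docker info 2>/dev/null | runtime_registered kata-runtime && echo registered`. If nothing is printed, the OPTIONS line in /etc/sysconfig/docker is the first thing to recheck.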

Run a Container Using Oracle Container Runtime for Kata

Now you can use the usual docker command to run a container, with the --runtime option to indicate you want to use kata-runtime. For example:

    sudo docker run --rm --runtime=kata-runtime oraclelinux:7 uname -r
    Unable to find image 'oraclelinux:7' locally
    Trying to pull repository docker.io/library/oraclelinux ...
    7: Pulling from docker.io/library/oraclelinux
    73d3caa7e48d: Pull complete
    Digest: sha256:be6367907d913b4c9837aa76fe373fa4bc234da70e793c5eddb621f42cd0d4e1
    Status: Downloaded newer image for oraclelinux:7
    4.14.35-1909.1.2.el7.container

To review what happened here: Docker, via kata-runtime, instructed KVM and QEMU to start a VM based on a special-purpose kernel and minimized OS image. Inside the VM a container was created, which ran the uname -r command. You can see from the kernel version that a “special” kernel is running.
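The kernel difference can also be checked mechanically by comparing the release string on the host with the one reported inside the container. This is only a sketch: `kernel_relation` is a hypothetical helper, and matching strings are consistent with a shared kernel but do not strictly prove it.

```shell
#!/bin/sh
# Compare two kernel release strings, as printed by `uname -r`.
kernel_relation() {
    # $1: release on the host, $2: release seen inside the container
    if [ "$1" = "$2" ]; then
        echo "same"
    else
        echo "different"
    fi
}
```

With `host=$(uname -r)` and `guest=$(sudo docker run --rm --runtime=kata-runtime oraclelinux:7 uname -r)`, calling `kernel_relation "$host" "$guest"` would be expected to print `different` for a Kata Container, whereas the same comparison without the `--runtime` option would be expected to print `same`.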

Running a container this way takes more time than a traditional container based on namespaces and cgroups, but if you consider the fact that a whole VM is launched, it’s quite impressive. Let’s compare:

    # time docker run --rm --runtime=kata-runtime oraclelinux:7 echo 'Hello, World!'
    Hello, World!
    real 0m2.480s
    user 0m0.048s
    sys 0m0.026s
    # time docker run --rm oraclelinux:7 echo 'Hello, World!'
    Hello, World!
    real 0m0.623s
    user 0m0.050s
    sys 0m0.023s

That’s about 2.5 seconds to launch a Kata Container versus 0.6 seconds to launch a traditional container.
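To put those two numbers side by side, the arithmetic can be done with a couple of one-line awk helpers. This is just a sketch using the measurements quoted above; the function names are invented, and a single timing run is of course only a rough indication.

```shell
#!/bin/sh
# Ratio of two durations in seconds, rounded to two decimals.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Absolute difference of two durations in seconds, to the millisecond.
overhead() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.3f\n", a - b }'
}

ratio 2.480 0.623      # prints 3.98
overhead 2.480 0.623   # prints 1.857
```

In other words, in this one measurement the Kata Container took roughly four times as long to start, which amounts to a little under two seconds of additional startup time for booting the VM.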

Conclusion

Kata Containers represent an important phenomenon in the evolution of cloud native technologies. They address both the need for security, through virtual machine isolation, and the need for speed of development, through seamless integration into the existing container ecosystem, without compromising on computing density.

In this blog post I’ve described some of the history that brought us Kata Containers and shown how you can experiment with them yourself using the Oracle Container Runtime for Kata packages.