What is RAG?

An excellent introduction to Retrieval Augmented Generation (RAG) can be found here

We can build the UI of the Knowledge Assistant using Langchain, OCI Generative AI, and store data in Oracle 23ai Vector DB.

Customers can easily build the chatbot using Oracle 23ai Vector DB and OCI Generative AI.

To test these functionalities, you can visit our GitHub repository for the python rag chatbot. Follow the instructions in the README file to install the appropriate versions of the required software libraries.

Code and functionalities may change as a result of customer feedback.

Building a Full-Stack RAG Chatbot with OCI Generative AI and Oracle Vector Database (Python Powerhouse)

In the realm of chatbots, where responsiveness and knowledge are paramount, Retrieval-Augmented Generation (RAG) offers a compelling solution. This approach combines the power of large language models (LLMs) with the precision of database retrieval, making chatbots more informative and up-to-date. This blog delves into crafting a full-stack RAG chatbot using Oracle Cloud Infrastructure (OCI) Generative AI and Oracle Vector Database, all orchestrated by the versatile Python language.

Why OCI and Oracle 23ai Vector DB?

- OCI Generative AI: This managed service streamlines LLM integration, providing pre-trained models like Cohere and Meta that can be fine-tuned for specific tasks.

- Oracle Vector Database: This innovative database excels at semantic search, enabling the chatbot to find the most relevant information from your knowledge base using vector embeddings.

- Python: Python’s extensive libraries like Transformers and Gensim facilitate building the LLM and retrieval components, making development efficient.

Building Blocks of the Chatbot:

-

Knowledge Base Preparation:

- Structure your data (documents, FAQs) for easy processing.

- Preprocess the text, including cleaning, tokenization, and stemming/lemmatization.

-

Vectorization:

- Use Cohere embedding or a similar library to create vector embeddings for your knowledge base documents.

- Store these embeddings in Oracle Vector Database for efficient retrieval.

-

LLM Integration with OCI Generative AI:

- Fine-tune a pre-trained LLM model on your specific domain or chatbot purpose.

- Utilize the OCI Generative AI Python SDK to interact with the LLM for text generation.

-

Retrieval Engine:

- Develop a Python function to search the Oracle Vector Database based on user queries.

- Retrieve the most relevant documents (top K) based on vector similarity.

-

Chatbot Framework and User Interface:

- Choose a framework like Streamlit or Flask to build the user interface for interacting with the chatbot.

- Integrate the LLM generation, retrieval engine, and response formatting logic.

Putting it All Together:

- User Input: The user interacts with the chatbot interface, posing a question.

- Query Processing: The query is preprocessed and vectorized.

- Retrieval: The vectorized query is used to search the Oracle Vector Database for relevant documents.

- LLM Generation: The retrieved documents (or snippets) are fed into the LLM, prompting it to generate a response.

- Response Formatting: The response is refined and formatted for presentation to the user.

Now let’s elaborate on each step for the python rag chatbot which is available in Github.

- Step 1: User put a question on chatbot

Internally, we push the question along with the RAG chain in our Python code.

response = get_answer(rag_chain, question)

Step 2: How to build the RAG chain

Building the RAG chain involves multiple steps, as outlined below:

- Load a list of documents. For e.g. currently our github code supports the pdf

all_pages = load_all_pages(BOOK_LIST) // BOOK_LIST = [BOOK1, BOOK2, BOOK3, BOOK4, BOOK5, BOOK6]

- Split pdf pages in chunks

document_splits = split_in_chunks(all_pages) // CHUNK_SIZE = 1000, CHUNK_OVERLAP = 50

- Load embeddings model

whenever user decide to go for local embedding model, he/she can use hugging face embedding or he/she can go for cohere embedding

embedder = create_cached_embedder()

def create_cached_embedder(): ## Initializing Embeddings model...

fs = LocalFileStore("./vector-cache/") # Introduced to cache embeddings and make it faster

if EMBED_TYPE == "COHERE": ## Loading Cohere Embeddings Model...

embed_model = CohereEmbeddings(

model=EMBED_COHERE_MODEL_NAME, cohere_api_key=COHERE_API_KEY

)

elif EMBED_TYPE == "LOCAL":

print(f"Loading HF Embeddings Model: {EMBED_HF_MODEL_NAME}")

model_kwargs = {"device": "cpu"}

# changed to True for BAAI, to use cosine similarity

encode_kwargs = {"normalize_embeddings": True}

embed_model = HuggingFaceEmbeddings(

model_name=EMBED_HF_MODEL_NAME,

model_kwargs=model_kwargs,

encode_kwargs=encode_kwargs,

)

# the cache for embeddings

cached_embedder = CacheBackedEmbeddings.from_bytes_store(

embed_model, fs, namespace=embed_model.model_name

)

return cached_embedder

- Create a Vector Store and store embeddings within the Oracle 23ai database.

We are providing an option in our chatbot where customers can configure the different databases for storing embeddings. The following code demonstrates this feature:

vectorstore = create_vector_store(VECTOR_STORE_NAME, document_splits, embedder)

Let’s say we are going to use oracledb we have to pass the store_type as “ORACLEDB” in the config rag file

def create_vector_store(store_type, document_splits, embedder):

global vectorstore

print(f"Indexing: using {store_type} as Vector Store...")

if store_type == "ORACLEDB":

connection = oracledb.connect(user="ADMIN", password="XXXXXX", dsn="XXXXXXX")

vectorstore = OracleVS.from_documents(

documents=document_splits,

embedding=embedder,

client=connection,

table_name="oravs",

distance_strategy=DistanceStrategy.DOT_PRODUCT

)

print(f"Vector Store Table: {vectorstore.table_name}")

elif store_type == "FAISS":

# modified to cache

vectorstore = FAISS.from_documents(

documents=document_splits, embedding=embedder

)

elif store_type == "CHROME":

# modified to cache

vectorstore = Chroma.from_documents(

documents=document_splits, embedding=embedder

)

return vectorstore

- Create a retriever

It will act as a decoder and provide the response in plain text

By default we have disabled the reranking in our chatbot code.

# added optionally a reranker

retriever = create_retriever(vectorstore)

- Build the OCI GEN AI LLM using below code

if llm_type == "OCI":

llm = OCIGenAI(

model_id="cohere.command",

service_endpoint="XXXXXXX",

compartment_id="mycompartmentId",

model_kwargs={"max_tokens": 200},

auth_type='SECURITY_TOKEN',

)

- Kindly define the prompt ( as for now hard coded…)

template = """Answer the question based only on the following context:

{context}

Question: {question}

"""

rag_prompt = ChatPromptTemplate.from_template(template)

Build the entire RAG chain

print("Building rag_chain...")

rag_chain = (

{"context": retriever, "question": RunnablePassthrough()} | rag_prompt | llm

)

Step 3:

User questions are processed along with the RAG chain, and the retriever finds the answer using the Gen AI LLM.

def get_answer(rag_chain, question):

response = rag_chain.invoke(question)

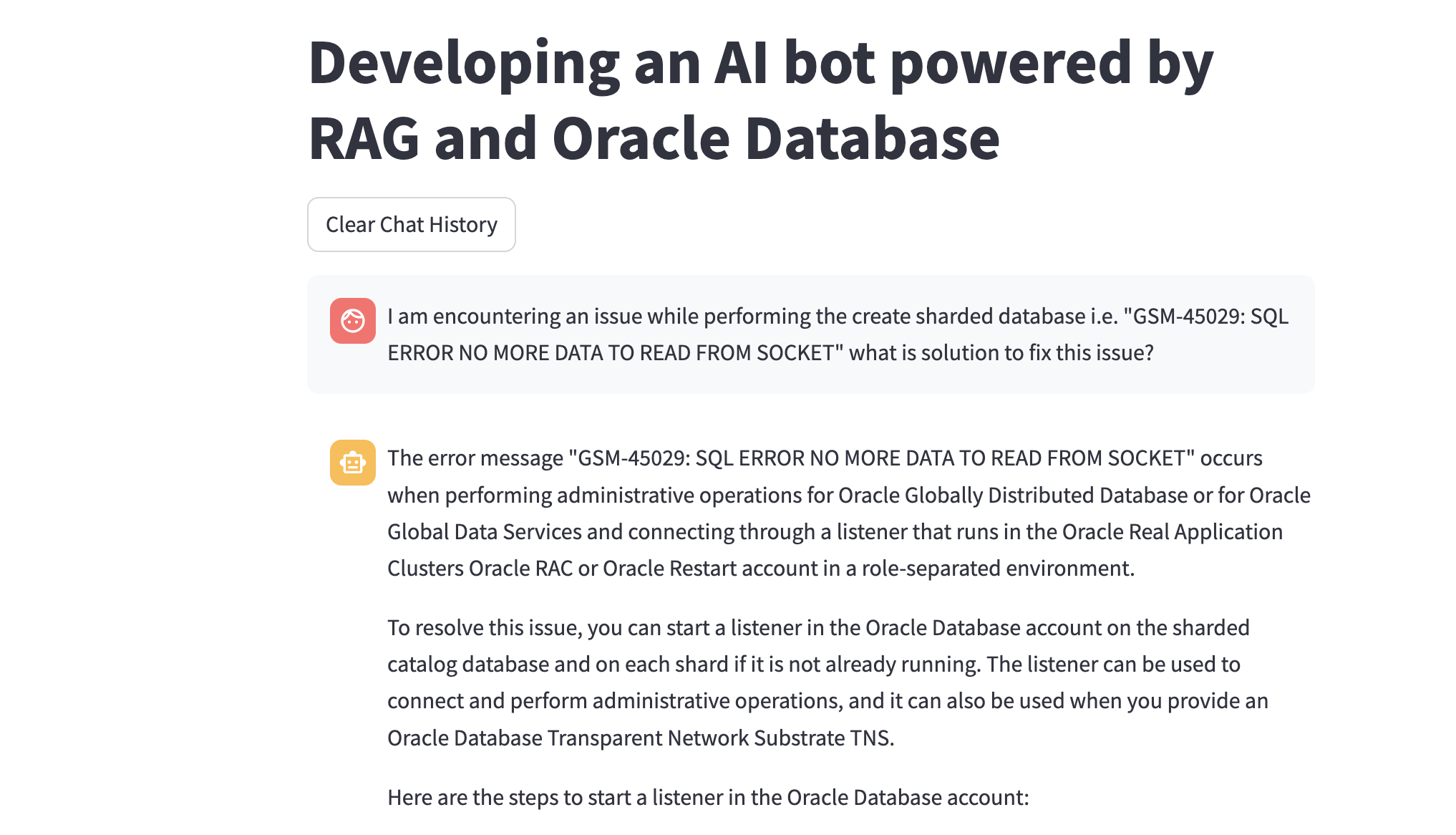

Highlighting one of the Customer use Case solved using chatbot

Benefits of a Full-Stack RAG Chatbot:

- Accurate and Up-to-Date Responses: Combines LLMs with retrieval for reliable answers.

- Scalability: The system can be seamlessly scaled to accommodate larger datasets and user loads.

- Flexibility: New information can be readily added to the knowledge base, keeping the chatbot updated.

- Customization: The LLM can be fine-tuned for specific domains or conversational styles.

- Python Powerhouse: Python’s rich ecosystem of libraries empowers this project. From data manipulation to vectorization and LLM interaction (Transformers), Python provides the tools to streamline development.

Why Oracle 23ai?

The “best” vector database depends heavily on specific use cases, performance requirements, integration needs, and cost constraints. It’s crucial to evaluate these factors for your particular application.

That said, let’s analyze the three options we have mentioned in the demo:

ChromaDB, Oracle 23ai DB, and FAISS DB

ChromaDB is a popular open-source vector database designed for flexibility and ease of use. It’s often preferred for rapid prototyping and smaller-scale projects due to its Python-centric nature.

Oracle 23ai DB is a proprietary vector database integrated into the Oracle database platform. It offers robust performance and scalability, especially for large-scale enterprise applications. However, it might have a steeper learning curve and higher costs associated with Oracle licensing.

FAISS DB is less known, and there’s limited public information available. Without more details about its features and capabilities, it’s difficult to provide a comprehensive comparison.

Key Factors to Consider

When choosing a vector database, focus on these aspects:

- Performance: Evaluate query latency, throughput, and scalability to meet your application’s demands.

- Scalability: Consider how the database can handle increasing data volumes and query loads.

- Features: Assess the availability of features like similarity search, indexing, filtering, and integrations.

- Cost: Compare pricing models, including licensing fees, cloud storage costs, and operational expenses.

- Ease of use: Evaluate the database’s API, documentation, and community support.

- Integration: Consider how the database integrates with your existing infrastructure and applications.

Recommendations

- Start with ChromaDB: If you’re new to vector databases or working on a smaller project, ChromaDB is a good starting point due to its simplicity and active community.

- Evaluate Oracle 23ai DB: For large-scale enterprise applications with high performance and integration needs, Oracle 23ai DB might be worth considering, especially if you already have an Oracle database infrastructure.

- Research FAISS DB: If you have specific requirements that align with FAISS DB’s capabilities, gather more information and conduct thorough testing.

Additional Considerations

- Open-source vs. proprietary: Evaluate the trade-offs between flexibility, cost, and support.

- Cloud vs. on-premises: Consider the deployment options based on your infrastructure and security requirements.

- Benchmarking: Conduct performance tests with your specific data and workloads to make an informed decision.

Conclusion

Leveraging OCI Generative AI, Oracle Vector Database, and Python empowers you to build a robust RAG chatbot that delivers engaging and informed user experiences across various domains. For enterprise-scale RAG chatbots, Oracle 23ai DB often emerges as a strong contender due to its performance, scalability, and integration with Oracle infrastructure.