Introduction

In general terms, asynchronous patterns offer more robust, flexible and loosely coupled architectures. They tend to be more reliable and fault-tolerant because producing and consuming a message happen independently and rarely at the same time. On the other hand, complexity can increase, as we start thinking more in terms of events and less in terms of static data living somewhere. This ultimately results in eventually consistent systems, i.e., systems that aren’t always consistent but eventually will be.

An important piece of this type of pattern is a tool that assumes the role of a messaging system. This tool can rely on a highly available cluster or be implemented on top of a database. You have probably worked with or heard about JMS, Kafka, AQs, MQs, etc.

These systems tend to be very powerful and are used in architectures with demanding operational requirements concerning high availability, scalability, storage durability, throughput, loosely coupled components, etc. If you decide to use this type of pattern, you’ll have to consider that, besides the increase in architectural complexity, you will have to deal with specific risks such as lost, out-of-order or duplicated messages.
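Duplicated messages are usually handled on the consumer side by making processing idempotent. Here is a minimal sketch of that idea, assuming every message carries a unique identifier. The DedupingConsumer class and its messageId parameter are hypothetical names used only for illustration; they are not part of any messaging product:

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical consumer that ignores messages it has already processed.
class DedupingConsumer {
    private final Set<String> seenIds = new HashSet<>();
    private final List<String> processed = new ArrayList<>();

    // Returns true if the payload was processed, false if the id was a duplicate.
    boolean handle(String messageId, String payload) {
        if (!seenIds.add(messageId)) {
            return false; // redelivered message: skip the side effects
        }
        processed.add(payload);
        return true;
    }

    List<String> processedPayloads() {
        return processed;
    }

    public static void main(String[] args) {
        DedupingConsumer consumer = new DedupingConsumer();
        consumer.handle("evt-1", "rover landed");
        consumer.handle("evt-1", "rover landed"); // duplicate delivery
        consumer.handle("evt-2", "rover moved");
        System.out.println(consumer.processedPayloads());
    }
}
```

In a real service the set of seen ids would need to live in durable storage and be bounded in size; the in-memory set here only shows the principle.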

Also, if you’re considering using a messaging system to provide your architecture with high availability and scalability, you should also guarantee that the messaging system itself is scalable and highly available. Duhh…I know 😐

OCI Streaming Service

In this regard, the OCI Streaming Service can be an excellent option.

By the way, if you’re interested in getting started with async patterns and K8s, consider signing up for an Oracle Cloud Free Tier account!

Because it is a fully managed service, you won’t need to think about managing the service itself, nor its life cycle, patching, or upgrades. You also won’t need to think about its scalability or high availability; those are guaranteed by Oracle Cloud Infrastructure and backed by its SLAs.

You just need to create the stream (with the Console or with the OCI REST API) and start publishing and consuming high-volume data streams.

On top of that, OCI Streaming is compatible with the following Kafka APIs:

- Producer (v0.10.0 and later)

- Consumer (v0.10.0 and later)

- Connect (v0.10.0.0 and later)

- Admin (v0.10.1.0 and later)

- Group Management (v0.10.0 and later)

This allows you to use applications written for Kafka to send messages to and receive messages from the Streaming service without having to rewrite your code.
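To give an idea of what that looks like for a Kafka client, here is a sketch that only builds the connection settings with plain java.util.Properties. The bootstrap server pattern and SASL settings follow Oracle's Kafka compatibility documentation as I understand it, so double-check them for your region and stream pool. The class name and all argument values are placeholders:

```java
import java.util.Properties;

// Builds Kafka client settings for the Kafka-compatible endpoint of OCI Streaming.
// Assumption: endpoint pattern and SASL values as described in Oracle's documentation.
class StreamingKafkaConfig {
    static Properties build(String region, String tenancyName, String userName,
                            String streamPoolOcid, String authToken) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers",
                "cell-1.streaming." + region + ".oci.oraclecloud.com:9092");
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "PLAIN");
        // The user name combines tenancy, user and stream pool; the password is an auth token.
        props.setProperty("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                + "username=\"" + tenancyName + "/" + userName + "/" + streamPoolOcid + "\" "
                + "password=\"" + authToken + "\";");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("eu-frankfurt-1", "mytenancy", "me@example.com",
                "ocid1.streampool.oc1..example", "example-auth-token");
        // Print only the non-secret part of the configuration.
        System.out.println(props.getProperty("bootstrap.servers"));
    }
}
```

These properties would then be handed to a regular Kafka producer or consumer, together with the usual serializer settings.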

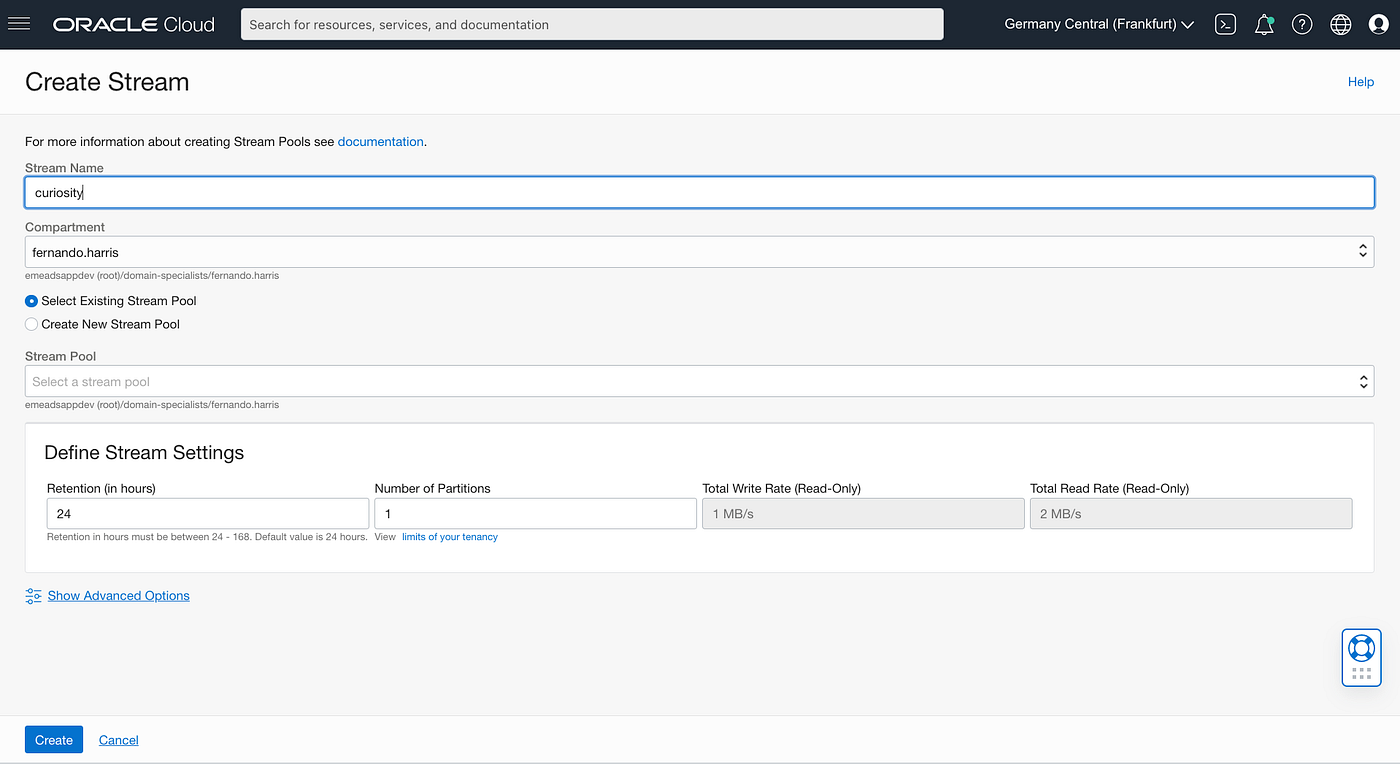

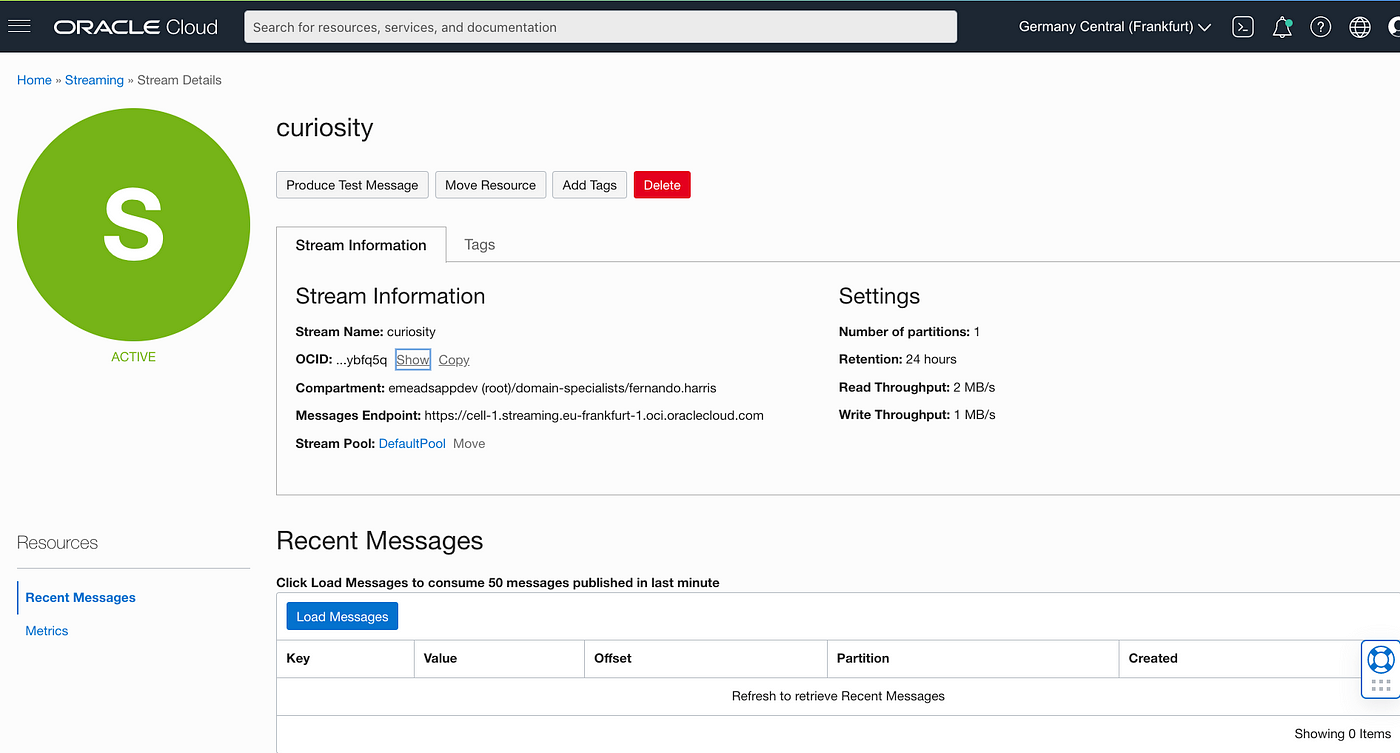

Create a Stream

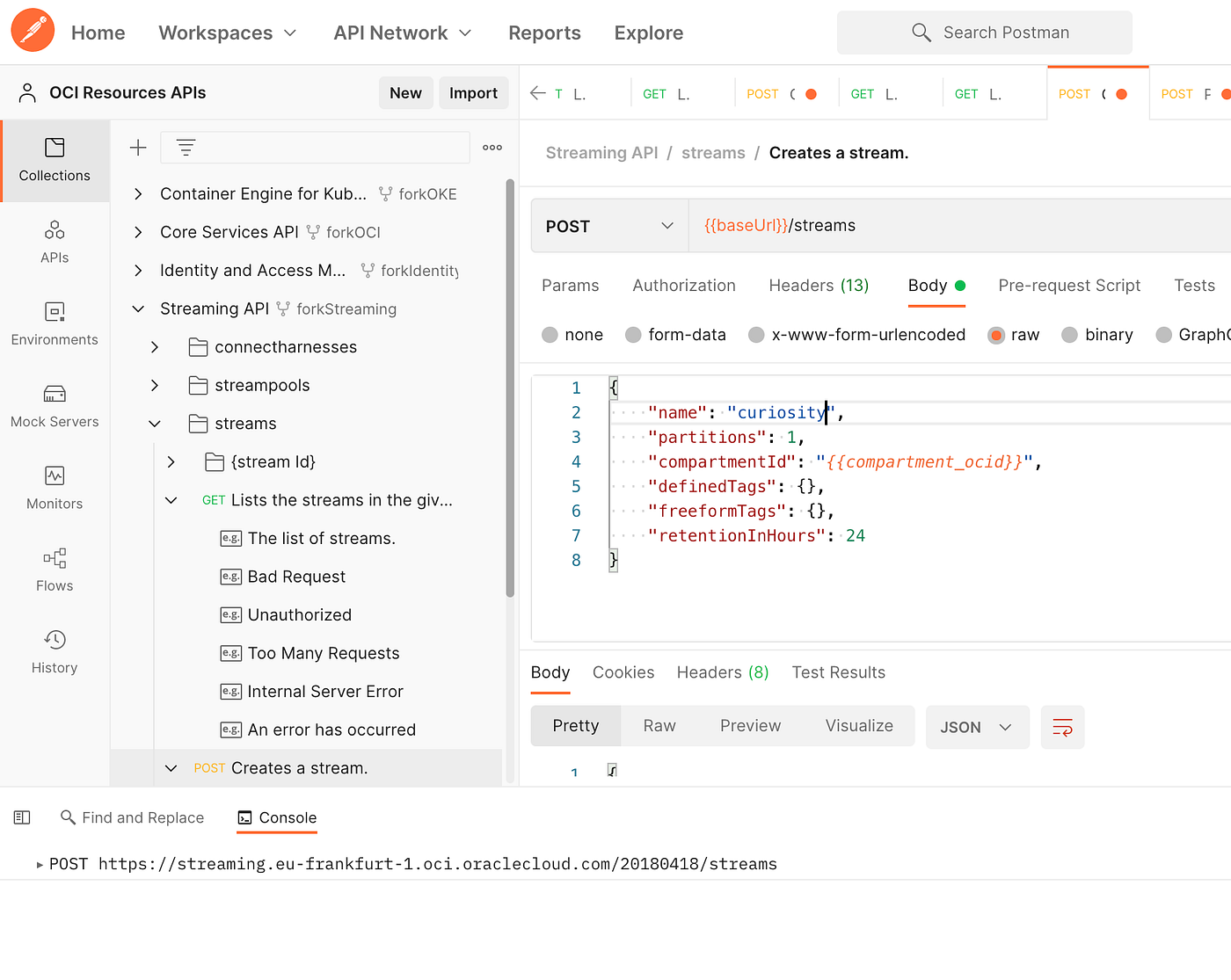

You can create a Stream with the Console or with the REST API:

- OCI Console > Analytics & AI > Messaging > Streaming

- OCI REST API > POST to create the stream

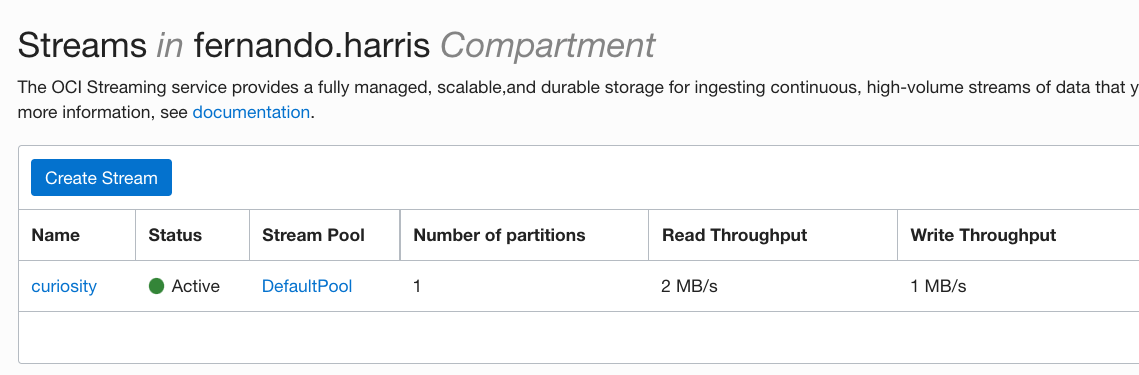
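For the REST route, the request body is a small JSON document. The sketch below only assembles that body. The field names (name, partitions, compartmentId, retentionInHours) are the ones I know from the CreateStream operation, and the request would be a signed POST to the /20180418/streams path of the Streaming endpoint, but treat both as assumptions to verify against the API reference. Request signing is not shown, and the CreateStreamBody class is a hypothetical helper:

```java
// Hypothetical helper that assembles the JSON body for a "create stream" request.
// Assumption: the values contain no characters that need JSON escaping.
class CreateStreamBody {
    static String json(String name, int partitions, String compartmentId, int retentionInHours) {
        return "{"
                + "\"name\":\"" + name + "\","
                + "\"partitions\":" + partitions + ","
                + "\"compartmentId\":\"" + compartmentId + "\","
                + "\"retentionInHours\":" + retentionInHours
                + "}";
    }

    public static void main(String[] args) {
        // The compartment OCID below is a placeholder, not a real identifier.
        System.out.println(json("curiosity", 1, "ocid1.compartment.oc1..example", 24));
    }
}
```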

For both, the result is the same:

Explaining the exercise

We are going to publish and consume messages against the stream called curiosity that we just created. Our end goal is to have two Spring Boot micro-services: a first service, curiosityms, with a publisher role, deployed locally on my laptop, and a second, consumerms, with a consumer role, deployed in an Oracle Container Engine for Kubernetes (OKE) cluster. The two micro-services will have to manage authentication in slightly different ways, since they run in different places.

The Publisher micro-service

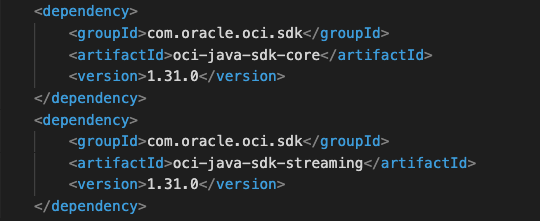

Maven Dependencies

Let’s start by declaring the OCI Maven dependencies we will need to develop both the publisher and the consumer:

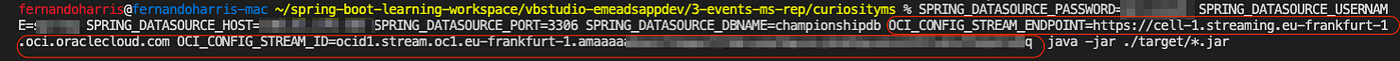

As we mentioned, the publisher micro-service will be running locally.

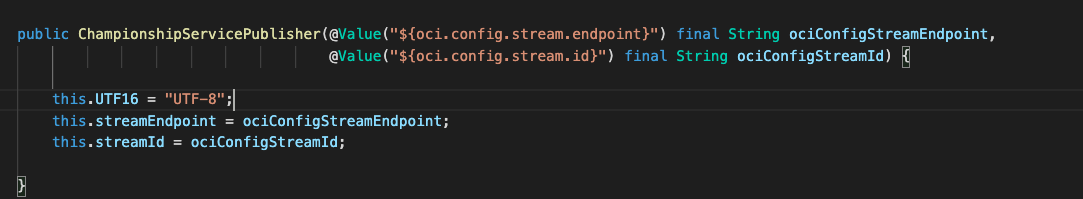

Let’s say we have a class called ChampionshipServicePublisher.

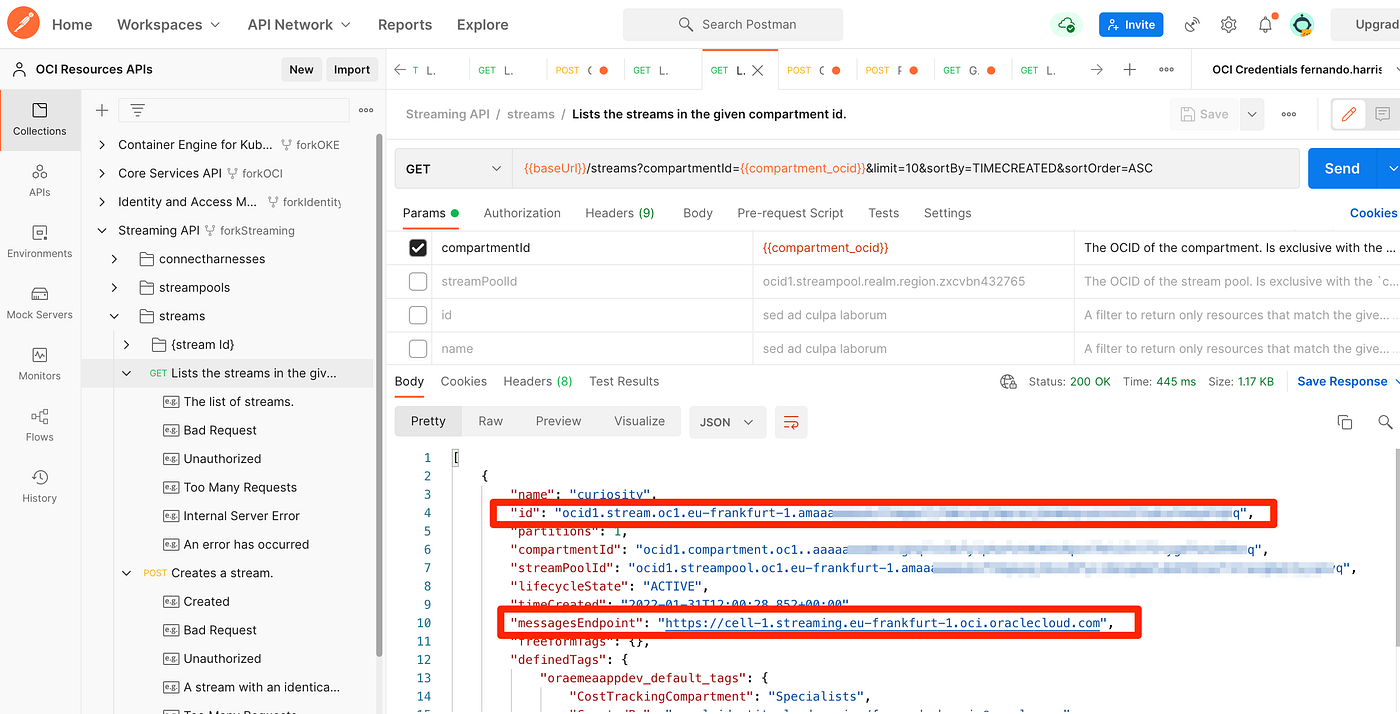

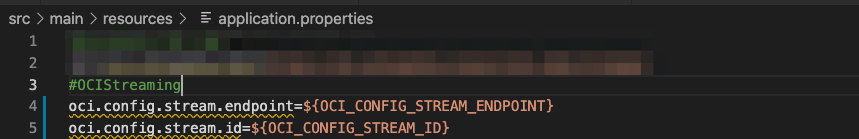

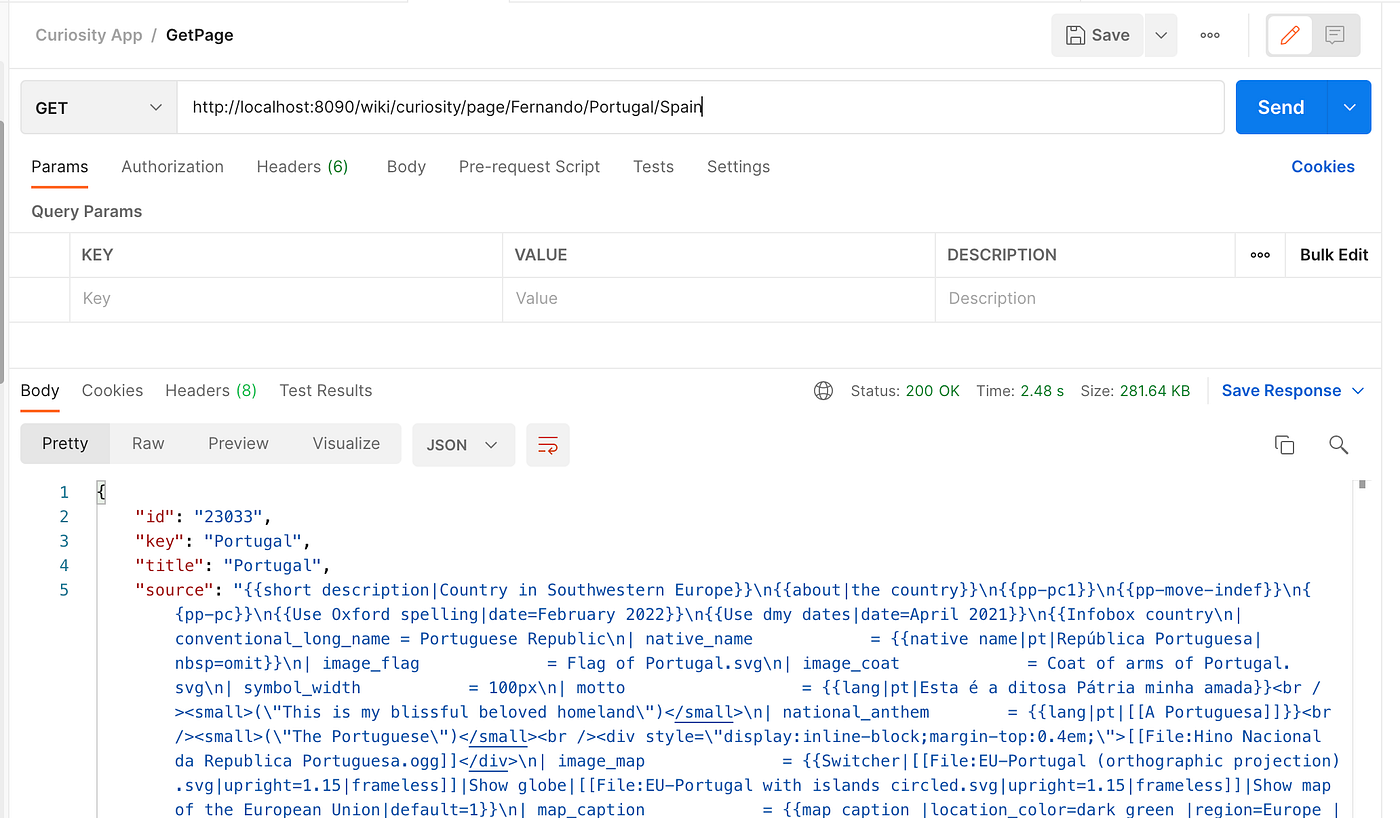

This class has a simple constructor to prepare the Stream endpoint and id. The Stream endpoint and its id can be obtained with the REST API or the Console. I’m using the OCI REST API in the picture below:

Let’s keep this information in the application.properties file as configuration variables that get their values dynamically from a configuration file or from environment variables. This way the code stays independent from configuration, which gives us environment parity between different deployment targets. Ultimately, this allows us to use different streams in OCI for different deployments with the same code:
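Outside of Spring's own property resolution, the same principle can be sketched in plain Java: read the two values from the environment and fail fast when one is missing. The StreamSettings class and the variable names STREAM_ENDPOINT and STREAM_ID are illustrative assumptions, not the names used in the project:

```java
import java.util.Map;

// Hypothetical holder for the two values the publisher needs.
class StreamSettings {
    final String endpoint;
    final String streamId;

    private StreamSettings(String endpoint, String streamId) {
        this.endpoint = endpoint;
        this.streamId = streamId;
    }

    // Reads the settings from a map such as System.getenv(); variable names are illustrative.
    static StreamSettings from(Map<String, String> env) {
        return new StreamSettings(required(env, "STREAM_ENDPOINT"), required(env, "STREAM_ID"));
    }

    // Fails fast so that a misconfigured deployment is noticed at startup.
    private static String required(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing configuration variable: " + name);
        }
        return value.trim();
    }
}
```

Calling StreamSettings.from(System.getenv()) at startup would then pick up whatever stream the current deployment target points to.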

Our class ChampionshipServicePublisher has a simple constructor that dynamically receives the values from the OCI Streaming configuration in the application.properties file:

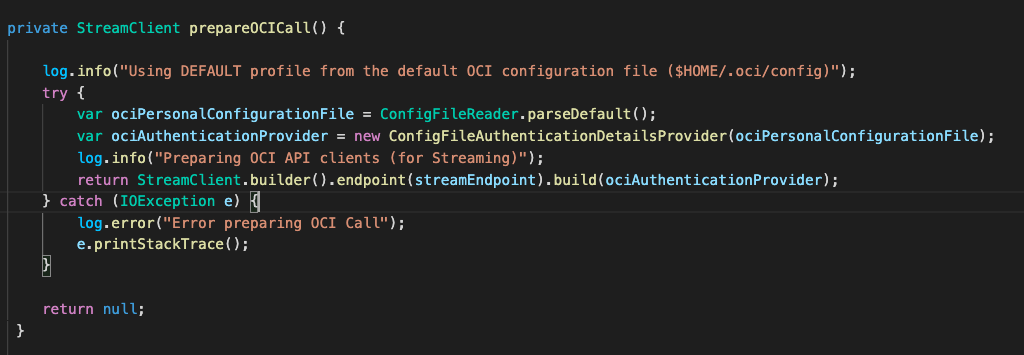

We also created a method called prepareOCICall() which will manage OCI authentication and return a StreamClient. Since we are building this service on my laptop, I will use my personal OCI configuration file and API Key — both kept locally — to authenticate against Oracle Cloud Infrastructure:

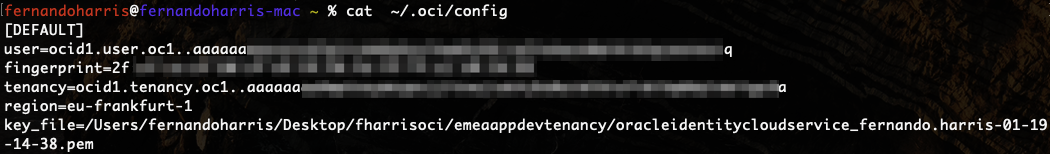

The OCI config file will make a direct reference to my personal PEM key and is by default located at: ~/.oci/config
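For readers who have never opened that file: it is a small INI-style text file with one section per profile. The sketch below shows its typical shape and a tiny parser, purely for illustration. The OCI SDK reads this file by itself, so you would not write this in the real service. All values are placeholders, and OciConfigProfile is a hypothetical class name:

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative parser for one profile of an INI-style OCI config file.
class OciConfigProfile {
    static Map<String, String> parse(String text, String profile) {
        Map<String, String> values = new HashMap<>();
        boolean inProfile = false;
        for (String raw : text.split("\n")) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue; // skip blank lines and comments
            }
            if (line.startsWith("[") && line.endsWith("]")) {
                // A new section starts; remember whether it is the requested profile.
                inProfile = line.substring(1, line.length() - 1).equals(profile);
                continue;
            }
            int eq = line.indexOf('=');
            if (inProfile && eq > 0) {
                values.put(line.substring(0, eq).trim(), line.substring(eq + 1).trim());
            }
        }
        return values;
    }

    public static void main(String[] args) {
        // Placeholder content in the usual layout of ~/.oci/config.
        String sample = "[DEFAULT]\n"
                + "user=ocid1.user.oc1..example\n"
                + "fingerprint=aa:bb:cc:dd\n"
                + "tenancy=ocid1.tenancy.oc1..example\n"
                + "region=eu-frankfurt-1\n"
                + "key_file=~/.oci/oci_api_key.pem\n";
        System.out.println(parse(sample, "DEFAULT").get("key_file"));
    }
}
```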

With the authentication challenge tackled, we can start producing messages. The publishMessageToStream() method takes on that responsibility:

This method does 3 things:

- Calls the authentication process through prepareOCICall().

- Gets and prepares the event which will be published to the Stream.

- Publishes the event to the Stream.
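One detail worth knowing about the "prepare the event" step: a message is a key/value pair of bytes. With the Java SDK you hand over byte arrays, but if you call the REST API directly, key and value travel as Base64 strings inside a messages array. The following is a sketch of that encoding under this assumption; PutMessagesBody is a hypothetical helper and not part of the SDK:

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper showing how a key/value pair would be encoded for a REST "put messages" call.
class PutMessagesBody {
    // Encodes text as Base64 over its UTF-8 bytes.
    static String encode(String text) {
        return Base64.getEncoder().encodeToString(text.getBytes(StandardCharsets.UTF_8));
    }

    // Builds a body with a single message; Base64 output never needs JSON escaping.
    static String json(String key, String value) {
        return "{\"messages\":[{\"key\":\"" + encode(key)
                + "\",\"value\":\"" + encode(value) + "\"}]}";
    }

    public static void main(String[] args) {
        System.out.println(json("championship", "race finished"));
    }
}
```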

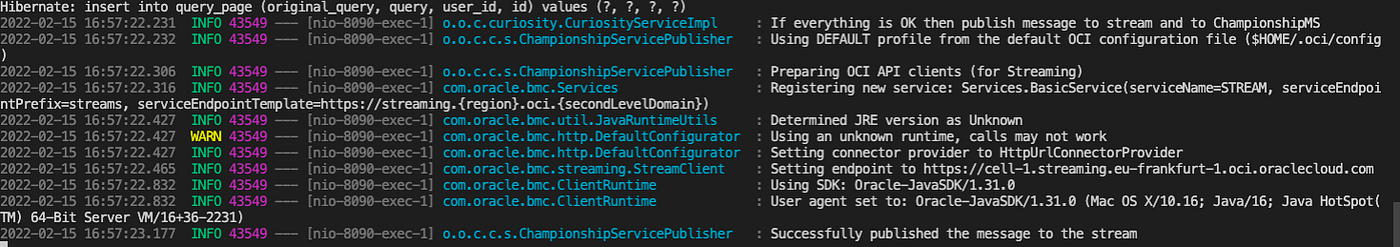

Let’s compile and run the code. Our micro-service will be running at localhost port 8090:

Executing 3 requests will produce 3 messages:

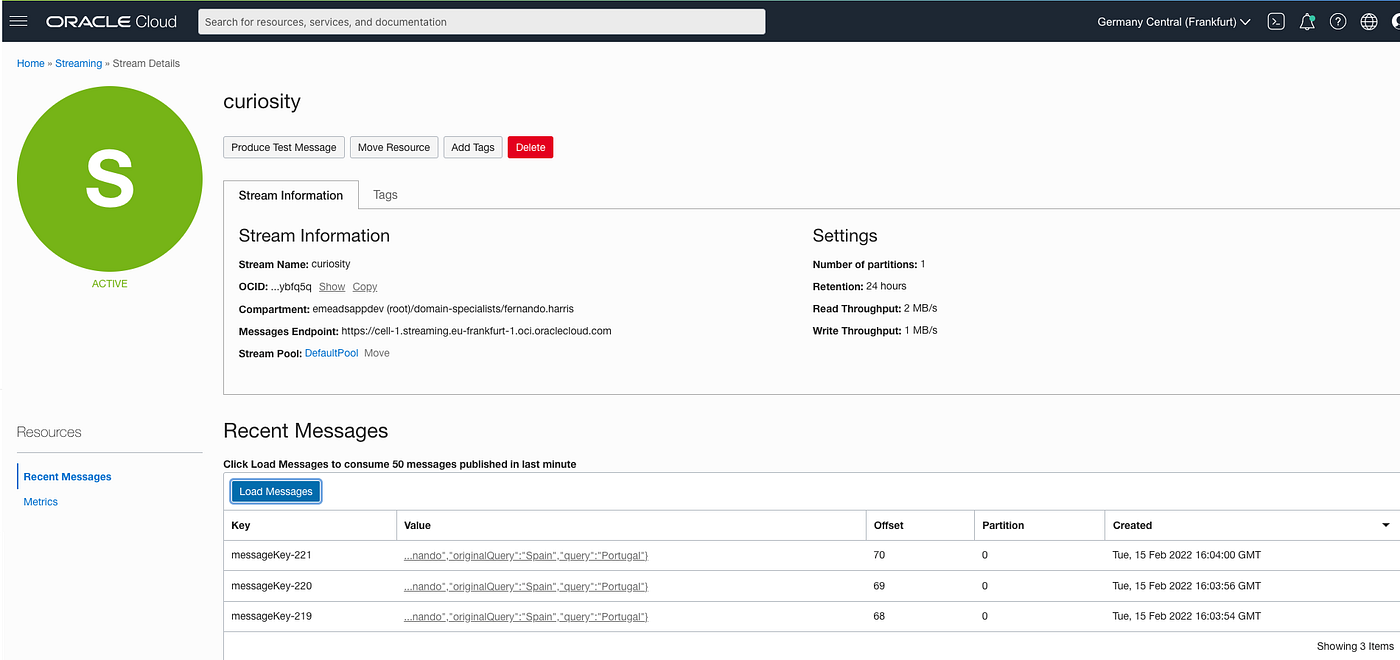

Check the OCI Streaming console or the REST API to confirm that the messages were published successfully:

The Consumer micro-service authentication process

The consumerms micro-service will assume the role of a subscriber and will be running in OKE in Oracle Cloud. This means that its authentication process can be slightly different.

As you noticed, when running locally, we can use a local OCI config file and a local PEM key to get authenticated and eventually publish the messages.

If we followed a similar approach, since we are going to run our service in Kubernetes, we would need to “emulate” that situation within a container. Basically, the container running our service would need to have an OCI config file and the respective PEM key. Needless to say, this is not recommended.

The best practice is to use OCI Instance Principals and Dynamic Groups.

Dynamic groups allow you to group Oracle Cloud Infrastructure compute instances as “principal” actors (similar to user groups). You can then create policies to permit instances to make API calls against Oracle Cloud Infrastructure services. When you create a dynamic group, rather than adding members explicitly to the group, you instead define a set of matching rules to define the group members. For example, a rule could specify that all instances in a particular compartment are members of the dynamic group. The members can change dynamically as instances are launched and terminated in that compartment.

Instance principals let you run compute instances with secure identities that are managed as a proper principal type in Oracle Identity and Access Management. With this approach you don’t have to “transport” and “rotate” your credentials. It’s much safer and more efficient. Let’s see how to do that for OCI Streaming and Kubernetes:

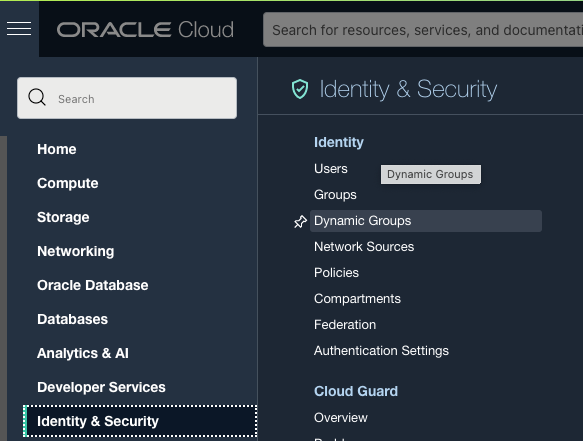

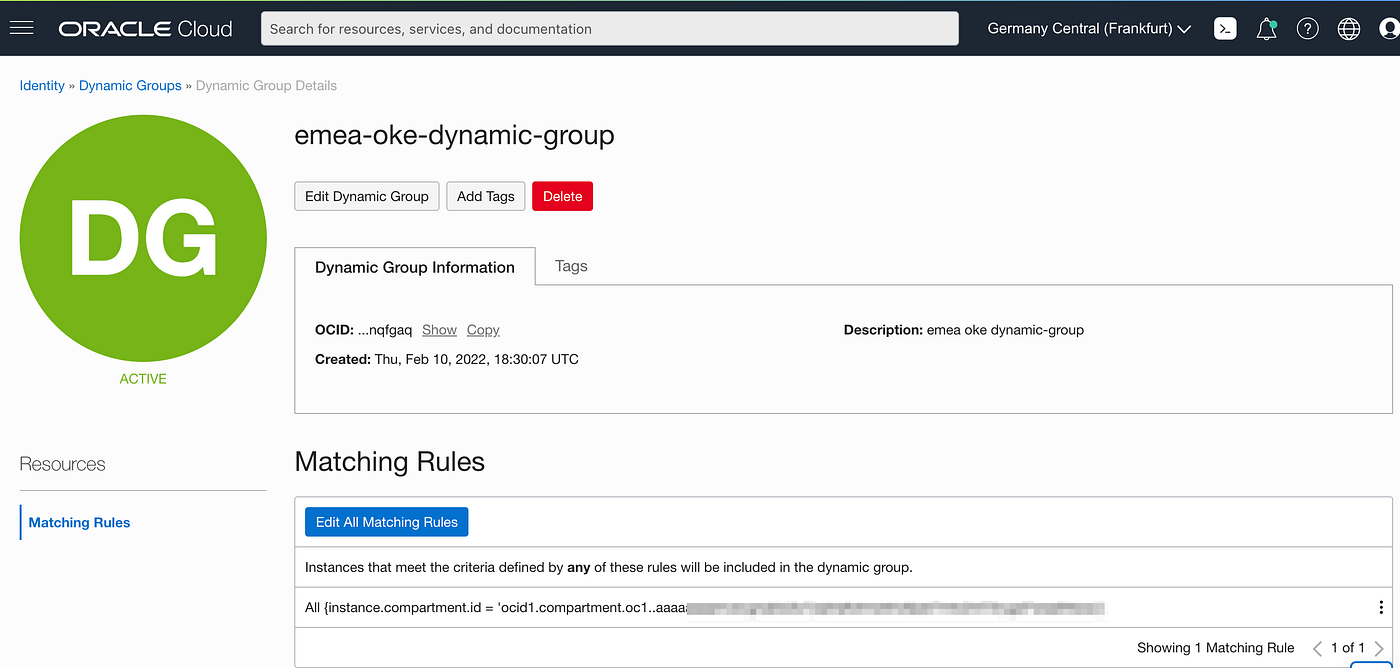

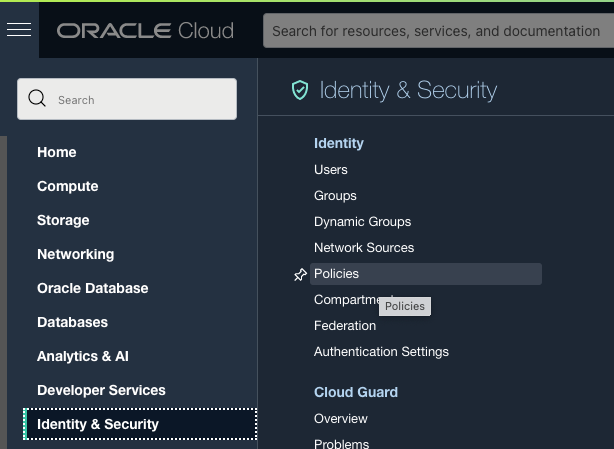

Creating a Dynamic Group

In the OCI Console go to:

Identity & Security > Dynamic Groups

Click Create Dynamic Group:

Then declare the Matching Rules that dynamically include specific instances in this group.

In natural language, I’m simply saying that all instances belonging to the compartment where my Kubernetes cluster lives should be included in this Dynamic Group. This is handy if I need to create new worker nodes in my k8s cluster. But we could be more precise and, instead of declaring a rule that covers the whole compartment, simply identify the specific compute instances we want to include. Both approaches would work for this example:

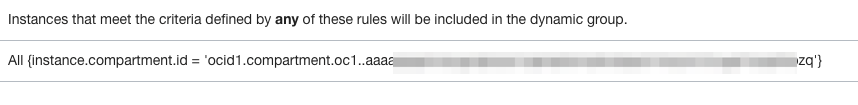
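To make the two approaches concrete, this sketch generates both kinds of matching rule as text. The rule syntax (ALL or ANY around instance.compartment.id and instance.id conditions) is the one I know from the dynamic groups documentation. The OCIDs are placeholders and MatchingRules is a hypothetical helper:

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper that renders dynamic group matching rules as text.
class MatchingRules {
    // Rule matching every instance in one compartment.
    static String allInCompartment(String compartmentOcid) {
        return "ALL {instance.compartment.id = '" + compartmentOcid + "'}";
    }

    // Rule matching only the listed instances.
    static String anyOfInstances(List<String> instanceOcids) {
        StringBuilder rule = new StringBuilder("ANY {");
        for (int i = 0; i < instanceOcids.size(); i++) {
            if (i > 0) {
                rule.append(", ");
            }
            rule.append("instance.id = '").append(instanceOcids.get(i)).append("'");
        }
        return rule.append("}").toString();
    }

    public static void main(String[] args) {
        System.out.println(allInCompartment("ocid1.compartment.oc1..example"));
        System.out.println(anyOfInstances(Arrays.asList(
                "ocid1.instance.oc1..worker1", "ocid1.instance.oc1..worker2")));
    }
}
```

The compartment-wide rule picks up new worker nodes automatically, while the per-instance rule has to be updated whenever the node pool changes.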

Once again, whatever your OCI user is allowed to do with the console, you should be able to do it with the REST API, including creating Dynamic Groups and respective rules:

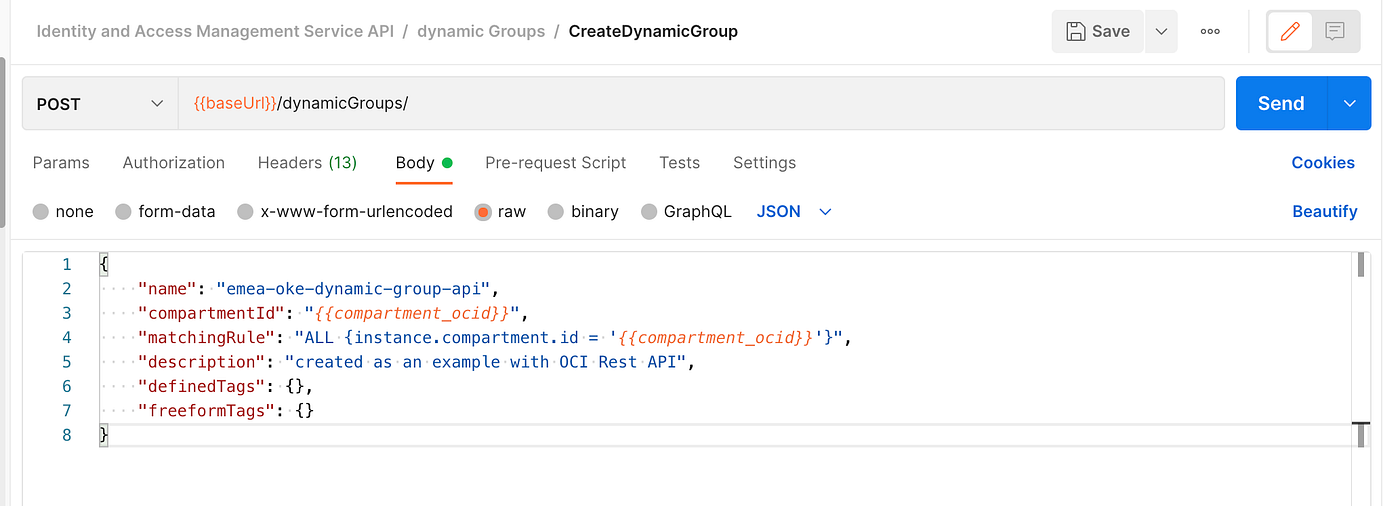

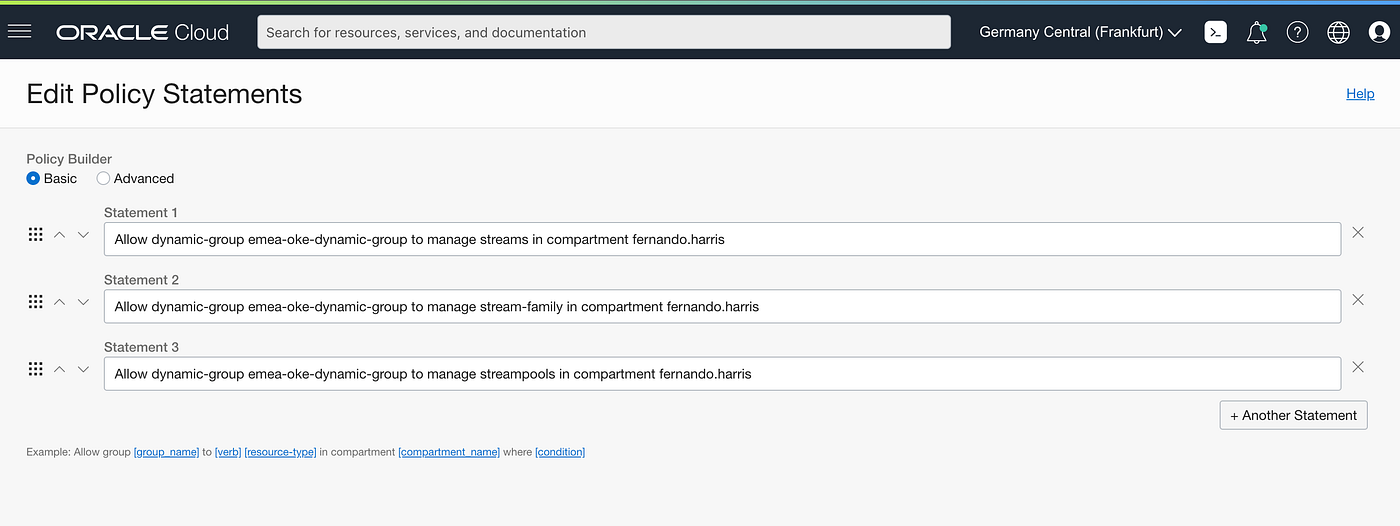

Create Policies to Allow the Dynamic Group to have access to Streams in a specific Compartment

Now that we have a Dynamic Group we have to declare what the members of this Dynamic Group are allowed to do. And we achieve that with Policies:

In the Console, go to

Identity & Security > Policies

Make sure you are working in the right compartment and click Create Policy. Next, create the statements.

Declare the policies needed to give the members of the Dynamic Group access to Streams:

Allow dynamic-group emea-oke-dynamic-group to manage streams in compartment fernando.harris

Allow dynamic-group emea-oke-dynamic-group to manage stream-family in compartment fernando.harris

Allow dynamic-group emea-oke-dynamic-group to manage streampools in compartment fernando.harris

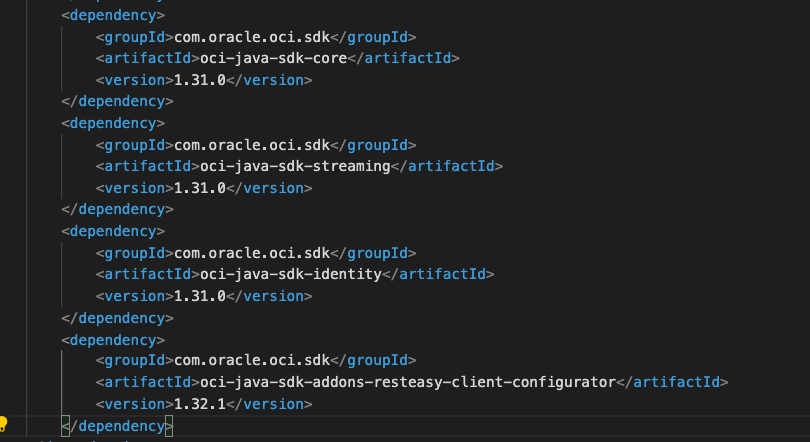

Coding the consumerms micro-service

The consumerms micro-service will need a few more classes to work with Instance Principals. So, besides the OCI SDK core classes and the SDK for Streaming, we’ll also need some OCI SDK identity classes and an add-on for a RESTEasy client configurator:

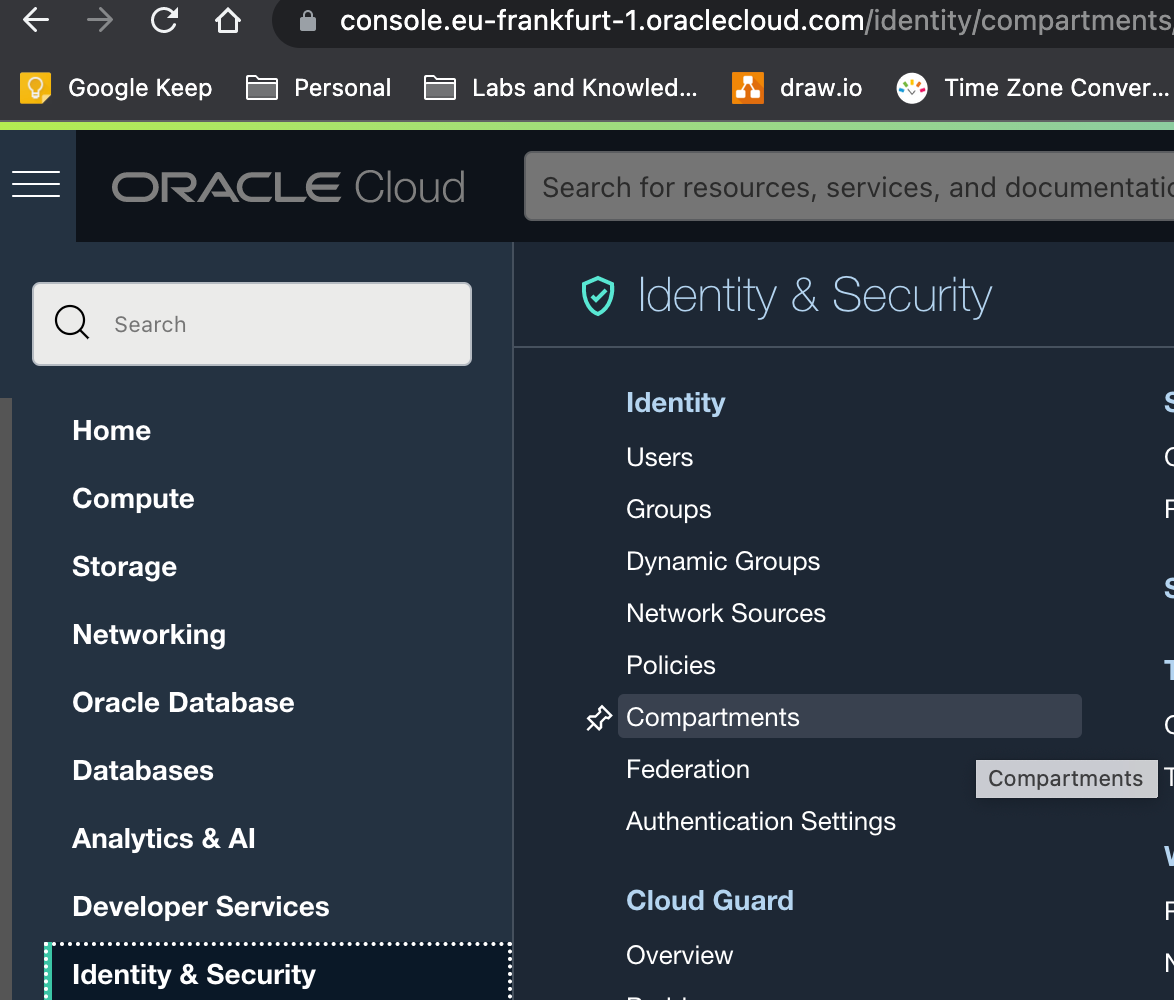

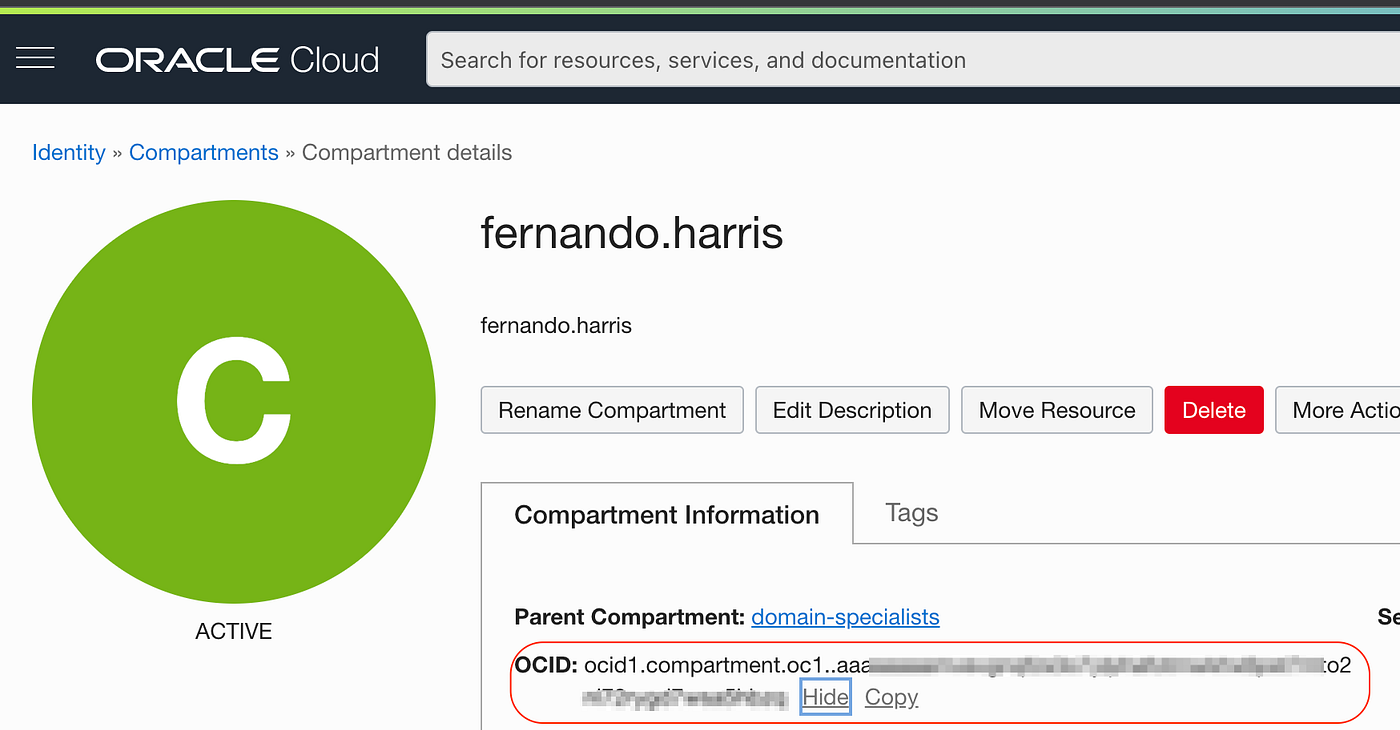

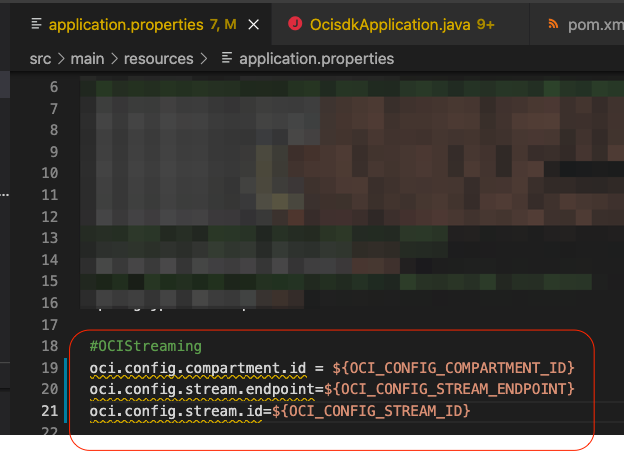

Once again, let’s keep the OCI Streaming information in the application.properties file as configuration variables. This time, besides the endpoint and id, we also need the compartmentId. In this case, we are talking about the compartmentId of the compartment fernando.harris, where the stream curiosity lives:

Go to Identity & Security > Compartments:

Choose the compartment and copy its id:

Now, prepare the application.properties file:

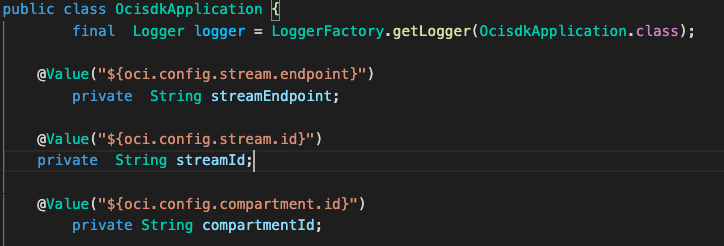

Our class OcisdkApplication will dynamically receive the values from the OCI Streaming configuration in the application.properties file (which can be supplied as environment variables) through the @Value annotation:

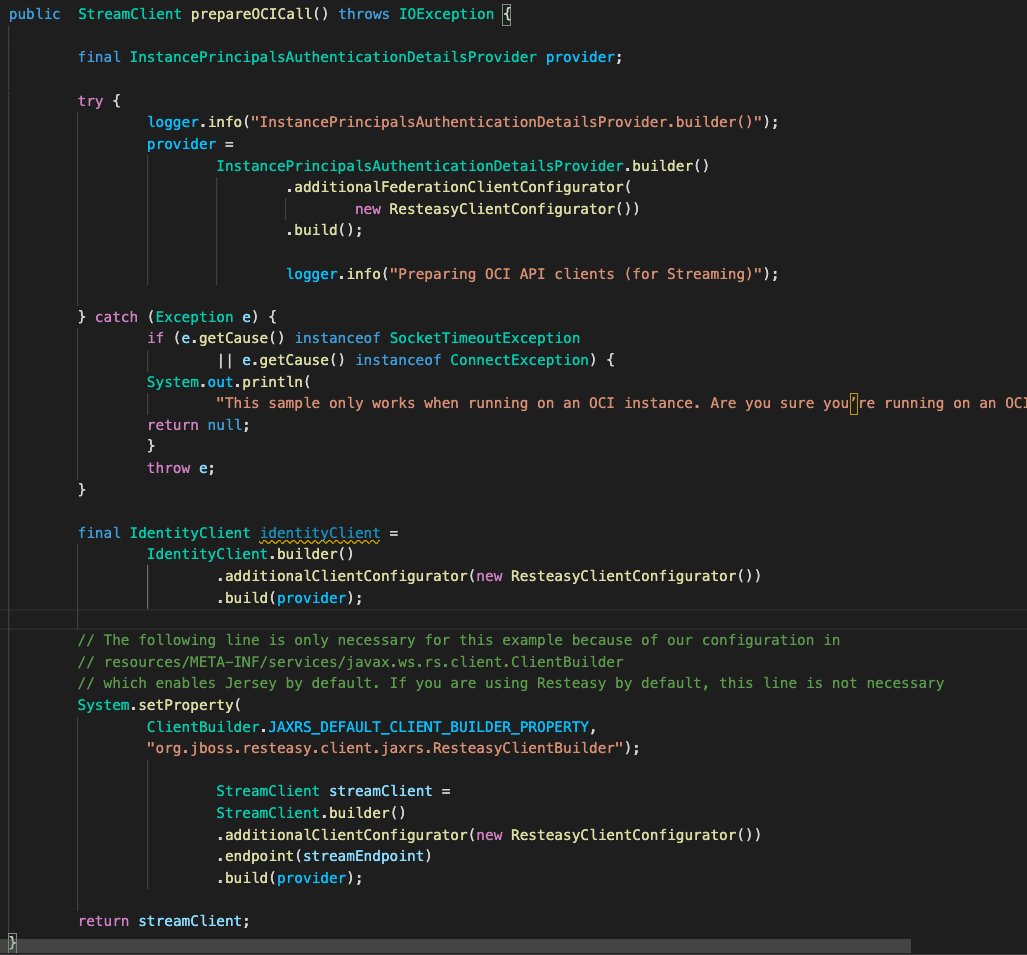

Since we now intend to build and run this micro-service on OCI within Container Engine (OKE), we will not use an OCI configuration file and API Key to authenticate against Oracle Cloud Infrastructure. If we did, we would be taking a big risk by keeping that type of information inside our container image. So, we are going to use the Instance Principal concept we explained above. Let’s see how to implement this. Once again, we have a method called prepareOCICall() which deals with the authentication process:

This method uses the InstancePrincipalsAuthenticationDetailsProvider to authenticate with Instance Principals and then builds a StreamClient bound to the streamEndpoint.

The method that consumes the messages does 3 things:

- calls the authentication process with a call to prepareOCICall(),

- prepares to consume the event which should be already published to the Stream

- and finally consumes the event from the stream.
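The "prepare to consume" step is about cursors: each read returns a batch of messages plus the position to continue from. The sketch below models that loop with an in-memory list standing in for the stream. It does not call the OCI SDK, and every name in it is hypothetical:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// In-memory model of cursor-based consumption; not the OCI SDK.
class CursorLoopSketch {
    // One page of messages plus the position to resume from.
    static final class Batch {
        final List<String> items;
        final int nextCursor;

        Batch(List<String> items, int nextCursor) {
            this.items = items;
            this.nextCursor = nextCursor;
        }
    }

    // Stand-in for a "get messages" call: up to `limit` messages starting at `cursor`.
    static Batch getMessages(List<String> stream, int cursor, int limit) {
        int end = Math.min(stream.size(), cursor + limit);
        return new Batch(new ArrayList<>(stream.subList(cursor, end)), end);
    }

    // Reads to the current end of the stream, always continuing from the returned cursor.
    // `limit` is expected to be positive.
    static List<String> drain(List<String> stream, int limit) {
        List<String> consumed = new ArrayList<>();
        int cursor = 0; // start at the oldest available message
        while (true) {
            Batch batch = getMessages(stream, cursor, limit);
            if (batch.items.isEmpty()) {
                break; // nothing new; a real consumer would wait and poll again
            }
            consumed.addAll(batch.items);
            cursor = batch.nextCursor;
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(drain(Arrays.asList("msg-1", "msg-2", "msg-3"), 2));
    }
}
```

The important habit is to always feed the cursor returned by one read into the next one, so that no message is skipped or read twice within a session.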

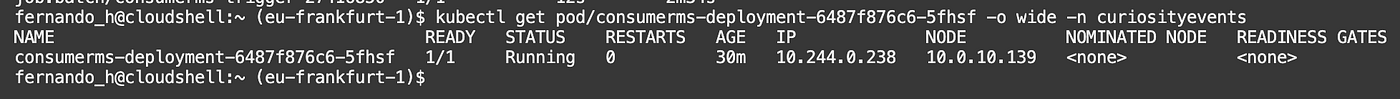

This time the code will be running in the Kubernetes cluster:

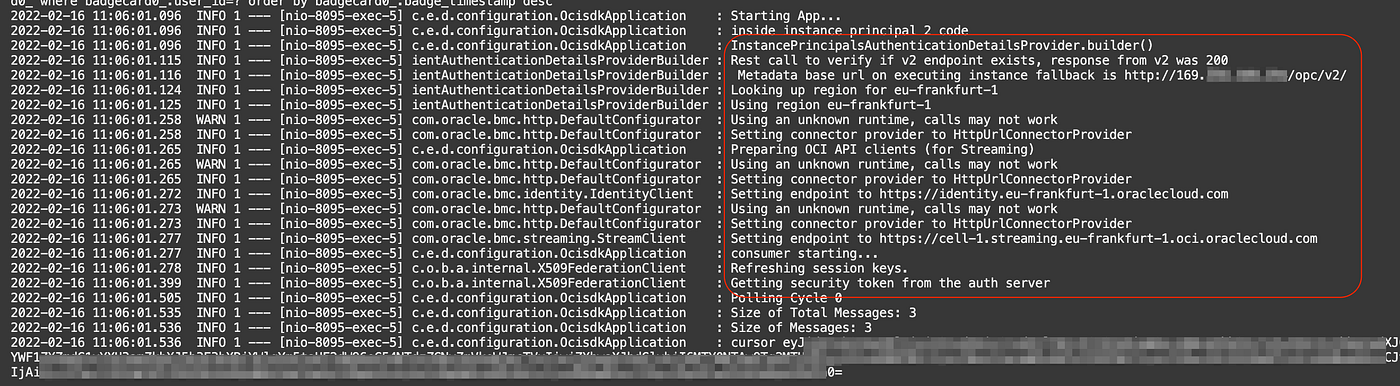

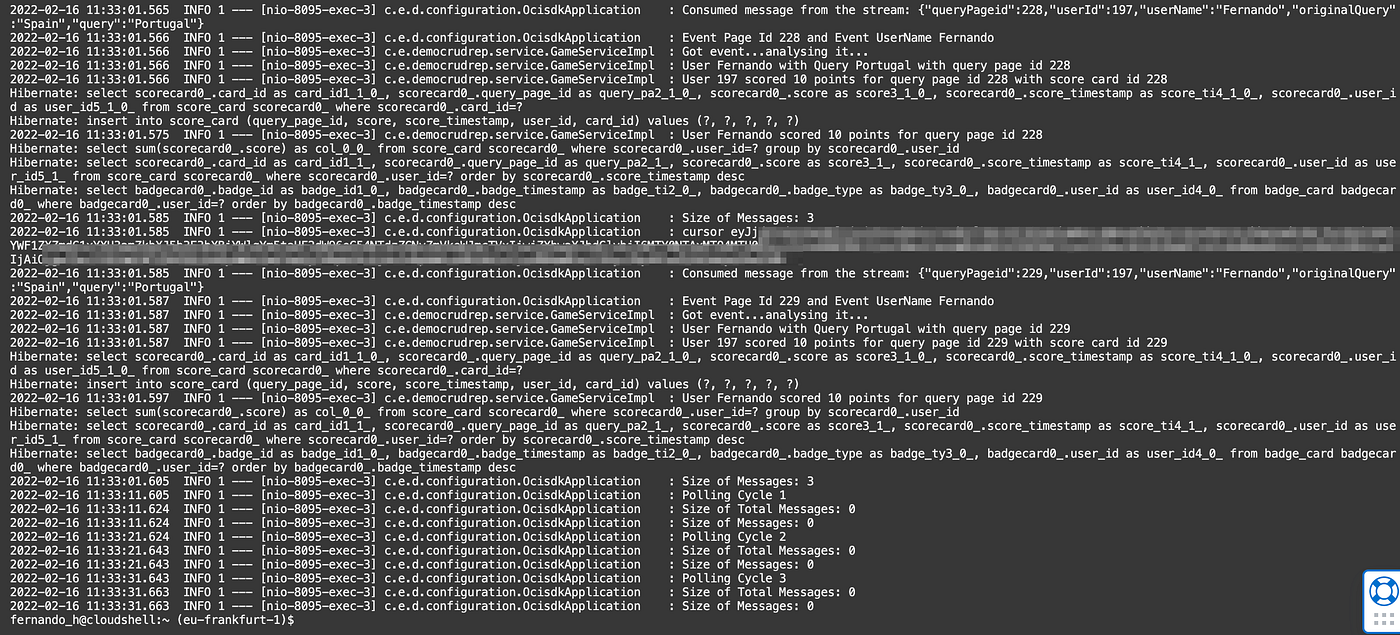

Checking the logs:

Eventually, the service will consume the messages:

And that’s it.

Have fun trying it! You can get the consumerms code on my GitHub.
