One of the best ways to export data out of your Autonomous Database (ADB), whether to transfer data to a database lying in a different region or even keep a copy of the data in a single place for other teams in your organization to access, is via Oracle Data Pump. Recently we released a much requested feature enabling the ability to export data from your ADB Instance directly to your Oracle Object Storage bucket.

For this walkthrough, I assume you have:

- A Oracle Cloud Account (Sign Up for a trial if you don’t have one. It’s totally free!)

- An Autonomous Database Instance with some data in it (Follow the labs this ADB Workshop if you don’t have one)

Once you have logged in to your Oracle Cloud account with an ADB Instance, lets dive right in to these steps below to export your data.

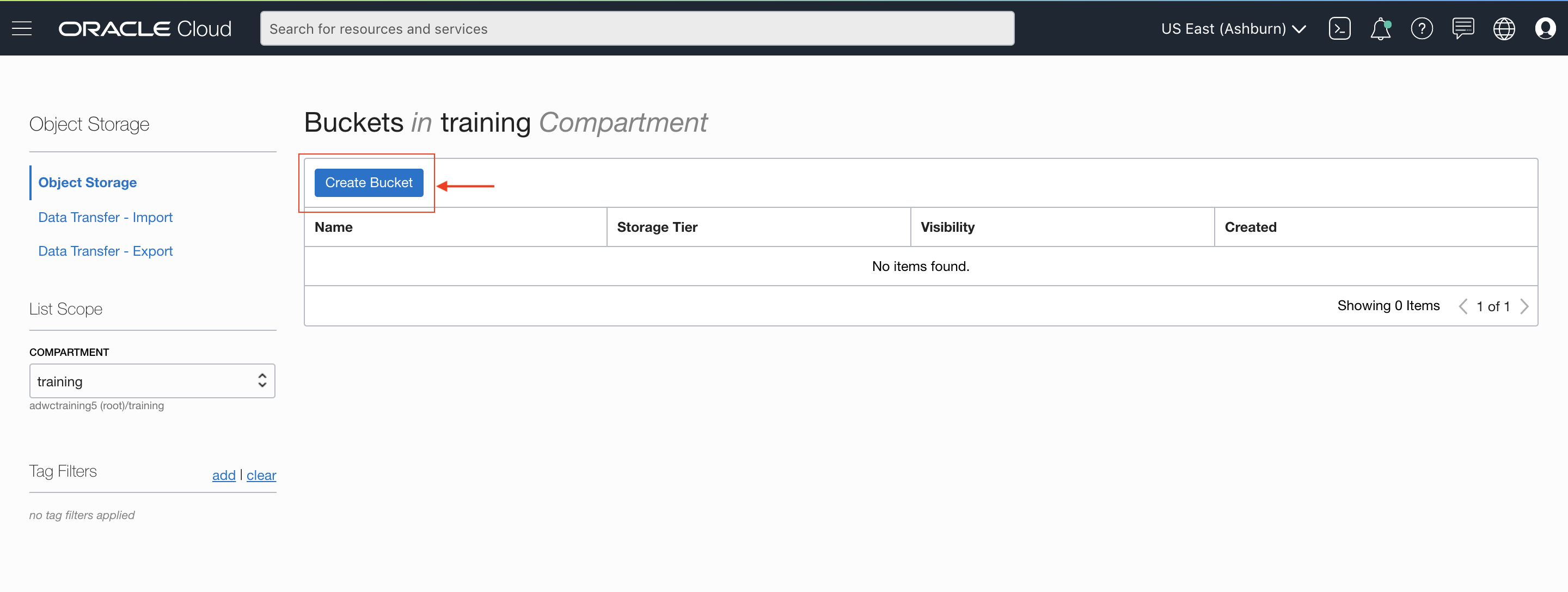

Step 1: Create an Object Storage bucket

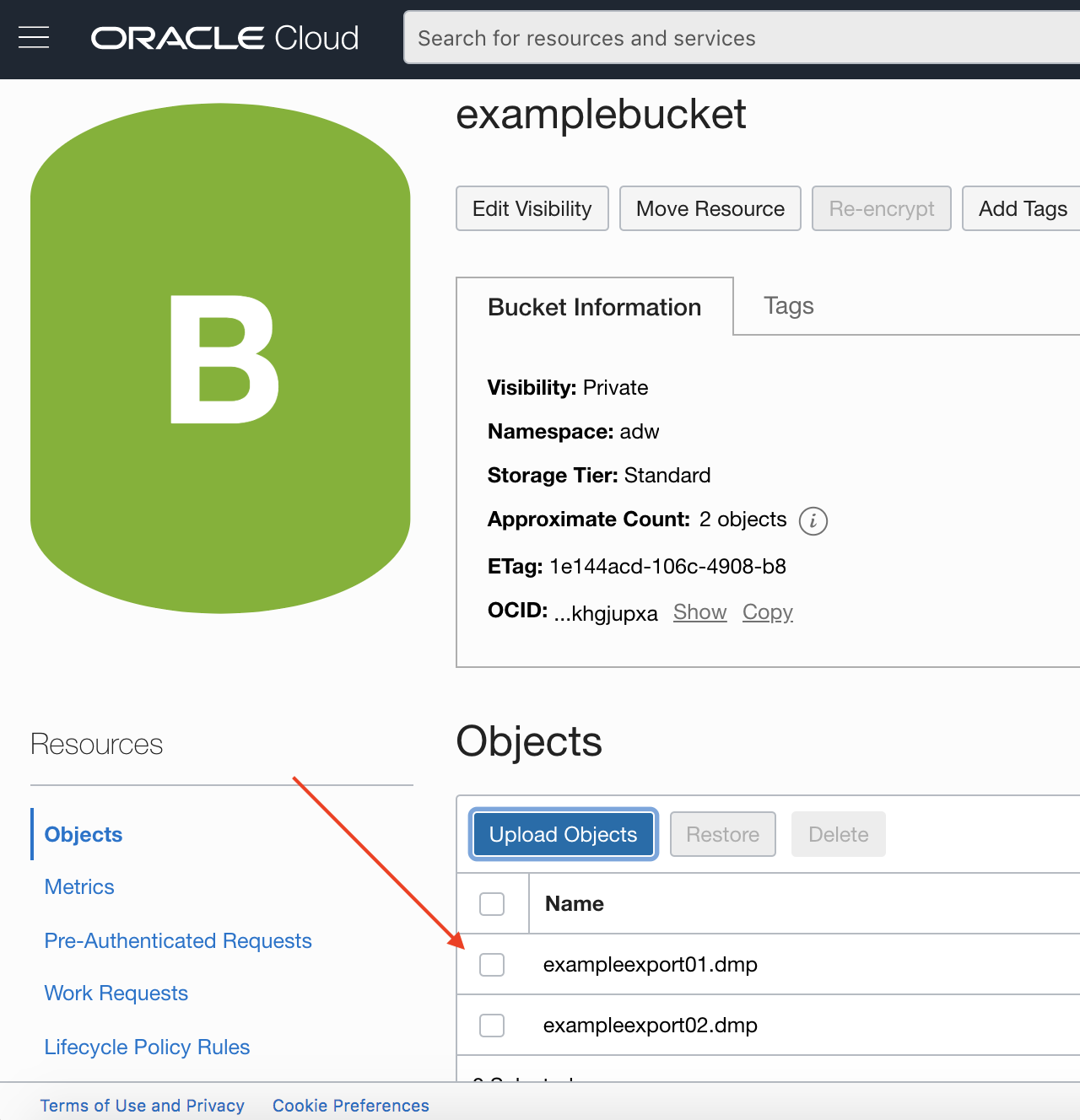

Using the hamburger menu on the left, navigate to your Object Storage. Select a compartment which you would like to put your data in and hit “Create Bucket”. The default options work well; In the popup, enter a bucket name (we will use ‘examplebucket’) and continue on to create the bucket . This bucket will hold your exported dump files.

Note: Data Pump Export supports both OCI Object Storage and OCI Classic Object Storage.

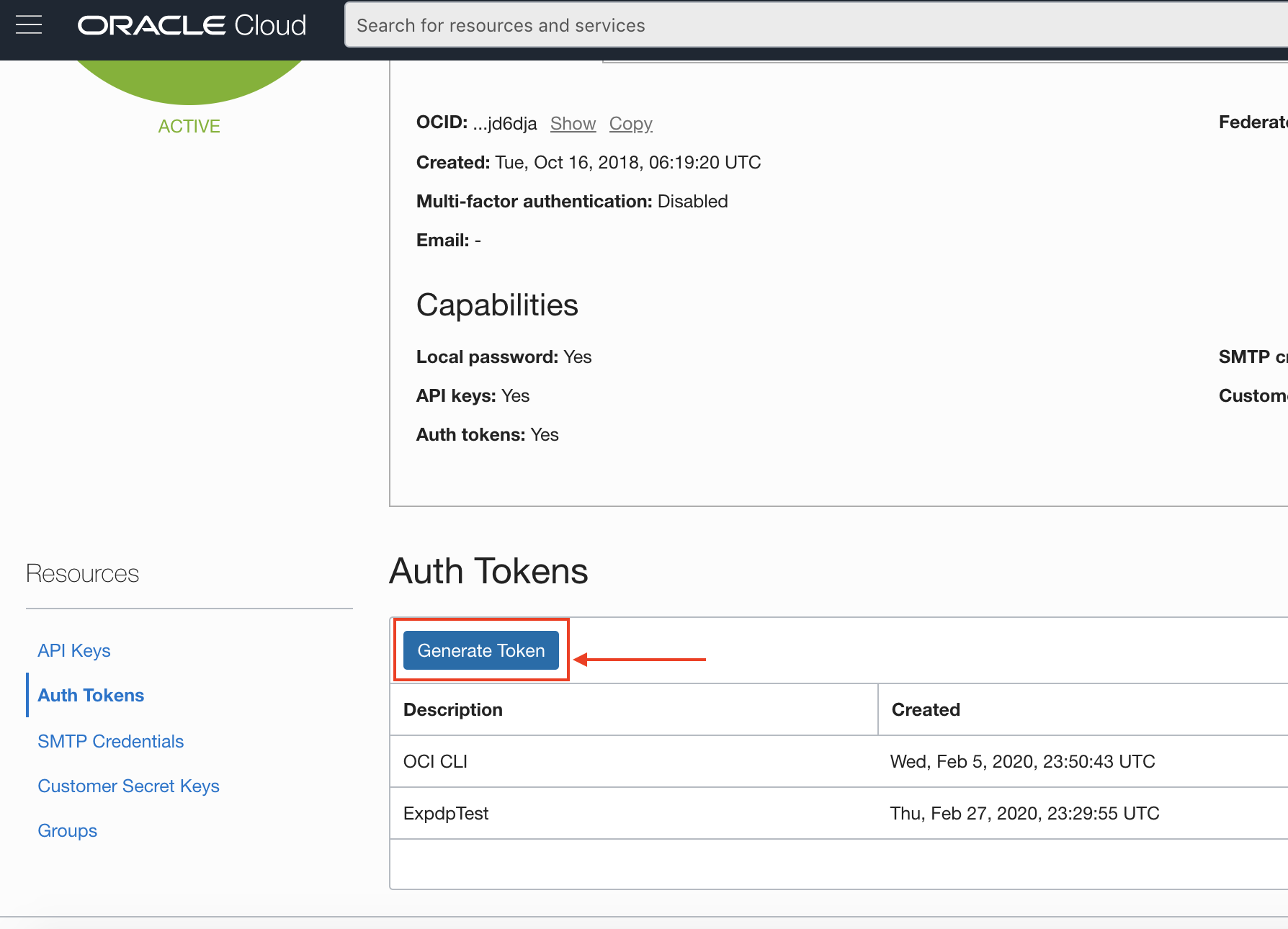

Step 2: Create a Credential: Authorization between your ADB instance and Object Storage

You will need to generate an auth token password for a new credential, navigate the left side menu to Identity -> Users -> Your User. In your selected user, on the bottom left, you will see “Auth Tokens”. When clicked, you will see an option generate a token. Note it down somewhere secure, since you cannot view this later.

Note: You must use a Swift auth token based credential here, and cannot use an OCI native Signing Key credential.

Navigate to your existing Autonomous Database instance (it may be ADW or ATP) via the left-hand menu. In your instance’s console, go to the “Tools” tab and navigate to SQL Developer Web. Login with your ADMIN Database User.

Copy the following script, in your worksheet. Fill in your Cloud User‘s username and Auth Token as password, and run it to create your user credential.

Note: If you are using an identity domain other than the default domain, you will need to prefix your username with your account’s identity domain as in the example below.

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'EXPORT_CRED',

username => 'identitydomain/someone@oracle.com',

password => 'erioty6434900z……'

);

END;

Step 3: Set your credential as the Default Credential (for Data Pump v19.6 and below)

Next, in SQL Developer Web, run the following command after replacing in your database user:

ALTER DATABASE PROPERTY SET DEFAULT_CREDENTIAL = '<Database User Name>.EXPORT_CRED'

You have now set your database’s default credential.

Note: I am assuming here you are running Data Pump version 19.6 or below since that is what is currently available on most platforms. In versions 19.7 and above, you will not need to set a default credential and be able to run your exports with a credential parameter!

Step 4: Install Oracle Data Pump

If you’re ahead in the game and already have a recent installation of Oracle Data Pump, skip to Step 5. If not, the easiest method to install Data Pump is via Instant Client. Download that as well as the Tools Package, which includes Oracle Data Pump, for your platform from Oracle Instant Client Downloads. Follow the documentation for step-by-step installation details.

Step 5: Run Oracle Data Pump Export

The Data Pump utility is standalone from the database. Once installed, in your terminal / command line we will run the command below. Notice, we are using the “default_credential” from Step 3 as the first argument in the ‘dumpfile’ parameter.

The second argument is pointing to your Object Storage bucket’s URI. You may use a Swift URI if you have one, instead of an OCI Native URI as is in the example below. In this URI, replace the:

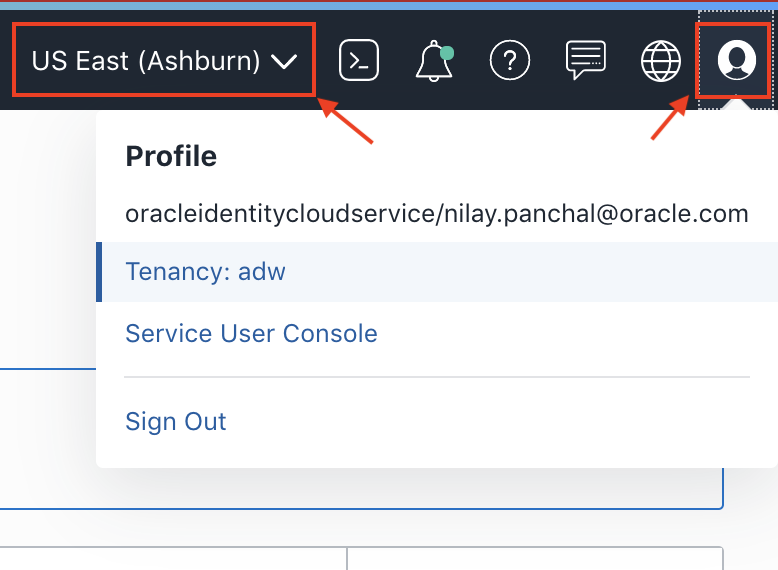

- <region ID> with the region you are using. You can identify this by looking at the top right corner of your Oracle Cloud page. Go into “Manage Regions” or look at this page to get your region’s identifier.

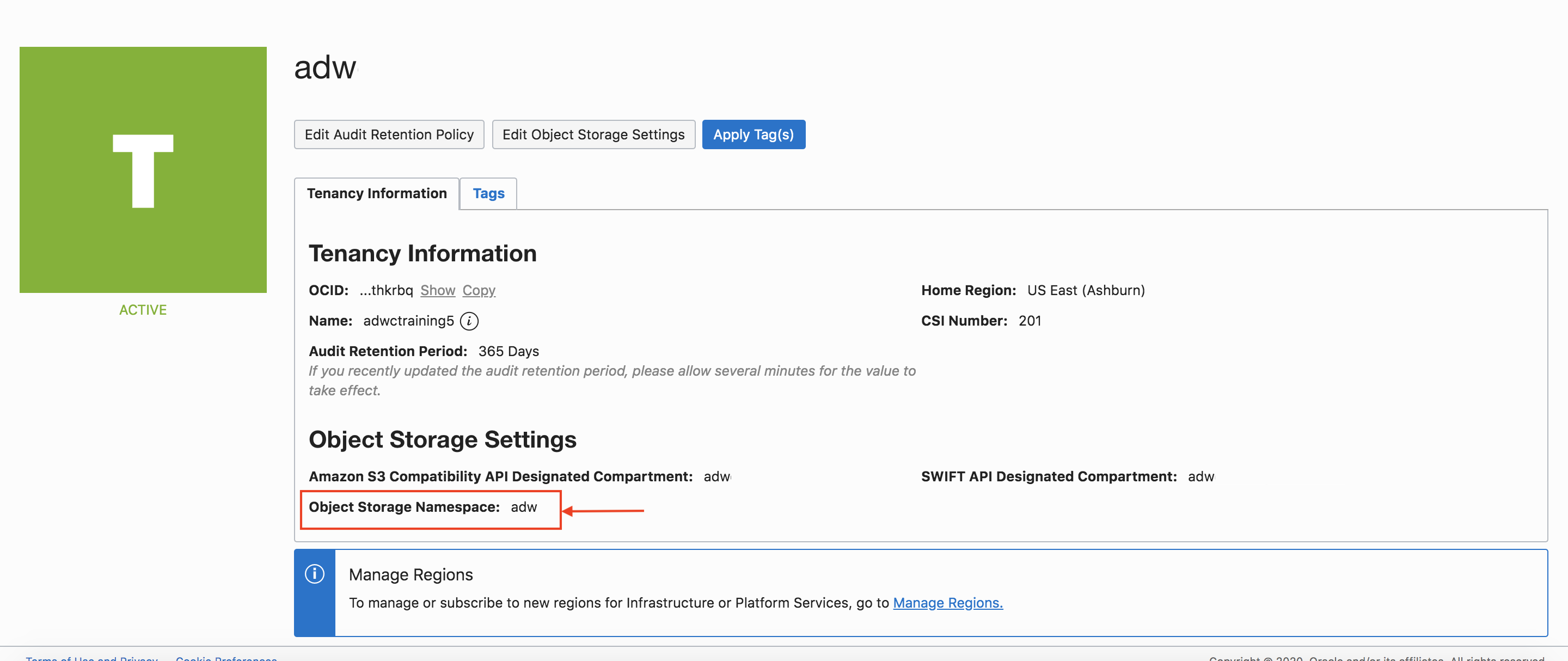

- <namespace> with Object Storage namespace. This can be found in Profile -> Tenancy (top right corner of the Oracle Cloud page). Look for your “Object Storage Namespace”, it may be the same as your tenancy name or a different ID.

Here we used the bucket name “examplebucket”, if you used a different bucket name also replace that in the URI. With encryption enabled, you will be prompted for a password. For more information on the export parameters and file size limits have a look at the documentation.

expdp admin/password@ADWC1_high \ filesize=5GB \ dumpfile=default_credential:https://objectstorage.<regionID>.oraclecloud.com/n/<namespace>/b /examplebucket/o/exampleexport%U.dmp \ parallel=16 \ encryption_pwd_prompt=yes \ logfile=export.log \ directory=data_pump_dir

Of course, depending on the size of your database, this database export can take minutes or hours.

Final Step & Thoughts

That’s a wrap! Once completed successfully, you can navigate back to your Object Storage bucket we created in Step 1 and you will see your newly exported dump files. The file names begin with “exampleexport”. Since exporting to object store uses chunks for optimum performance, you may see additional files other than your dump “.dmp” files in your bucket. Don’t delete them, but you may ignore them and should only have to interact with your dump files to access your data.

Note: If you followed this guide to a T but are seeing an authorization or a URI not found error, you (or your Cloud Admin) may need to grant object storage access to your Cloud User or Group.

Here, we looked at exporting your data to the object store using the popular Data Pump utility running on your machine or server, which makes for a simple workflow to include in your data pipeline.

In coming weeks, we will also support running Data Pump import/export (using the ORACLE_DATAPUMP driver) from within the database using your PL/SQL scripts using DBMS_CLOUD, so keep your eyes peeled for more Data Pump goodness! ?