Oracle GoldenGate is engineered to deliver low-latency, high-throughput replication across diverse environments. But even with well-tuned processes and robust infrastructure, replication performance often hinges on a less visible factor: the underlying network.

Whether you’re replicating across OCI, on-prem data centers, or through VPN tunnels in hybrid architectures, network misconfigurations can quietly undermine GoldenGate’s efficiency. Issues like packet loss, fragmentation, or buffer misalignment can cause Extracts to lag and Replicats to stall despite everything appearing healthy on the surface.

This guide explores the most critical network considerations that impact GoldenGate performance: MTU sizing, bandwidth validation, buffer tuning, and Oracle Net SDU alignment. Along the way, it offers best practices and configuration patterns that help ensure reliable performance across distributed environments.

1. MTU Basics: Ensuring Smooth and Stable Replication

Overview

The Maximum Transmission Unit (MTU) defines the maximum size of a packet that can traverse the network without being fragmented. In modern cloud networks like OCI, MTU 9000 (jumbo frames) is commonly enabled to support high-throughput workloads. However, legacy network components, such as firewalls, VPN tunnels, or physical switches, often default to MTU 1500 or lower.

MTU settings are configured not only at the OS or interface level but also across physical devices like switches, routers, and firewalls, making full-path MTU alignment a cross-team effort.

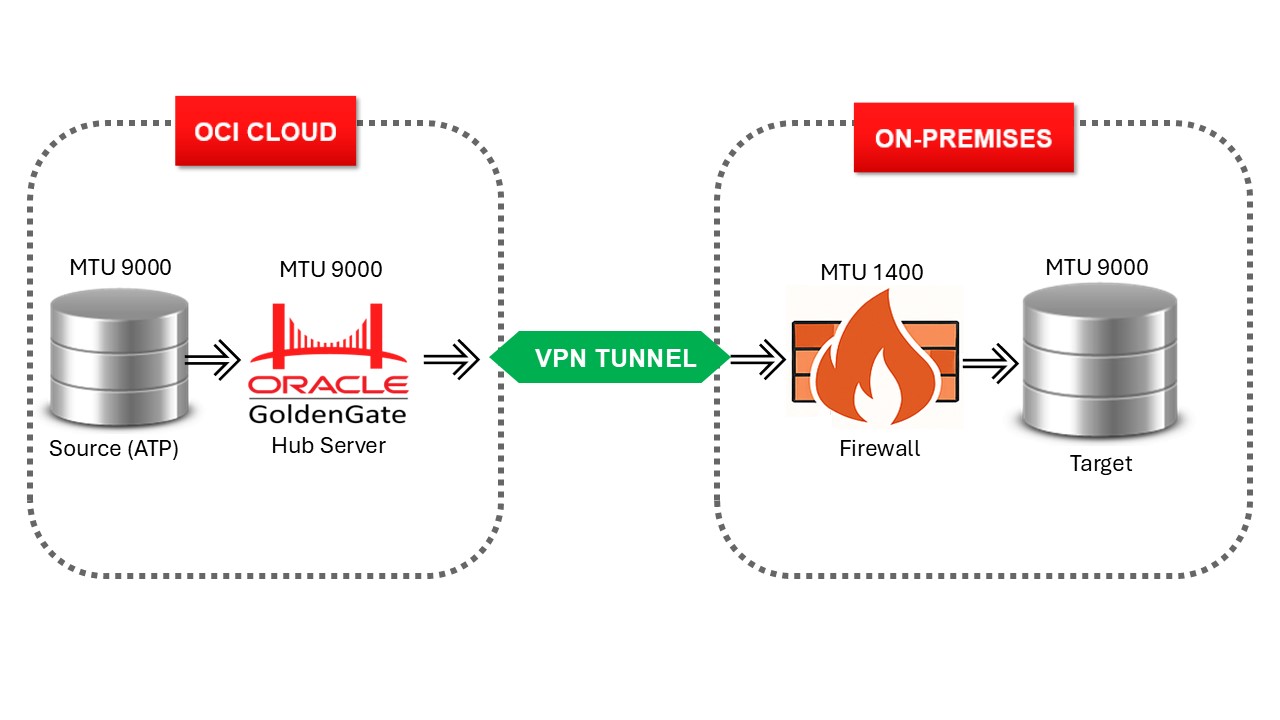

Figure 1 illustrates a common GoldenGate hybrid setup where MTU mismatches and blocked ICMP create fragmentation risks across a VPN tunnel.

Figure 1: Replicating from ATP in OCI to on-premises over VPN.

As illustrated in Figure 1, differing MTU values between OCI and the on-prem firewall introduce fragmentation risk if ICMP is blocked.

When large packets encounter a hop with a smaller MTU, and ICMP Type 3 Code 4 (“Fragmentation Needed”) is blocked, Path MTU Discovery (PMTUD) fails. The sender receives no signal to reduce packet size, leading to silent packet drops and unstable GoldenGate replication.

Refer to IANA ICMP Parameters for Type 3 and Code 4: https://www.iana.org/assignments/icmp-parameters/icmp-parameters.xhtml

Practical Impact

While MTU issues are often overlooked, they can cause real production instability. The following real-world example demonstrates how an MTU mismatch and blocked ICMP caused intermittent replication failures, despite all GoldenGate processes appearing healthy.

Real World Scenario

In a production deployment, Oracle GoldenGate was replicating from a cloud database (ATP) to an on-premises Oracle 11g system over an IPSec VPN. The GoldenGate host in OCI was configured with MTU 9000 (jumbo frames), while the on-premises VPN firewall enforced a smaller MTU 1400. ICMP fragmentation messages were blocked by the network security policy.

Replication initially ran without issues, but the Replicat process began failing intermittently with ORA-03113: end-of-file on communication channel. Investigation revealed the session was stuck on “SQL*Net message from client”, and a pstack showed the main thread blocked on a network system call, indicating a stalled connection caused by silent packet drops. Restarting the process provided only temporary relief: the issue recurred whenever packets exceeded the allowable MTU, and it persisted until the mismatch was resolved.

Temporarily lowering the MTU to 1400 on the GoldenGate host restored stability. The root cause: an MTU mismatch combined with blocked ICMP, which suppressed Path MTU Discovery (PMTUD), resulting in undetected packet fragmentation and silent drops.

This case underscores a critical point: replication failures aren’t always rooted in GoldenGate itself. They can stem from invisible network-layer issues like MTU fragmentation and ICMP suppression.

How to Test MTU Compatibility

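Use ping with a large payload and fragmentation prohibited to validate packet size compatibility across the full network path:

ping -M do -s 8972 <target_host>

- -s 8972: ICMP payload size in bytes (8972 + 8-byte ICMP header + 20-byte IPv4 header = 9000 bytes on the wire)

- -M do: Sets the Don’t Fragment (DF) bit, so the packet cannot be fragmented along the path

Failure indicates an MTU mismatch or blocked ICMP feedback.

Note: The default ping payload is 56 bytes, which won’t trigger fragmentation. Use a payload that matches the MTU under test, such as -s 8972 for MTU 9000 or -s 1472 for MTU 1500.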
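The test above can be repeated for several candidate MTU values to find the largest one the path accepts. The following is a minimal sketch, assuming Linux iputils ping; the function names (icmp_payload_for_mtu, probe_mtu, find_path_mtu) and the candidate list are hypothetical and not part of GoldenGate or ping.

```shell
# ICMP payload for a given MTU: subtract the 20-byte IPv4 header
# and the 8-byte ICMP header.
icmp_payload_for_mtu() {
  local mtu="$1"
  echo $(( mtu - 28 ))
}

# Send three DF-flagged pings sized for the given MTU (hypothetical helper).
# Exit status 0 means the packets made it through unfragmented.
probe_mtu() {
  local target="$1" mtu="$2"
  ping -M do -c 3 -W 2 -s "$(icmp_payload_for_mtu "$mtu")" "$target" >/dev/null 2>&1
}

# Walk the candidate MTUs in the order given (largest first) and print
# the first one that passes.
find_path_mtu() {
  local target="$1"
  shift
  local mtu
  for mtu in "$@"; do
    if probe_mtu "$target" "$mtu"; then
      echo "$mtu"
      return 0
    fi
  done
  return 1
}

# Example, not run automatically:
#   find_path_mtu <target_host> 9000 1500 1400
```

Probing from largest to smallest keeps the check quick on a healthy path, and a result lower than the interface MTU points to the hop that needs attention.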

Best Practices

- Standardize MTU across NICs, VPN endpoints, routers, and switches. Use MTU 1500 as a general fallback if jumbo frame support isn’t guaranteed end-to-end. However, in VPN or tunnel-based networks, the effective MTU may be lower (e.g., 1400). Always validate the actual MTU path and base your tuning accordingly.

- Allow ICMP Type 3 Code 4 through all network layers to enable dynamic MTU adjustment.

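- Persist MTU settings at the OS level. A command such as sudo ip link set dev ens3 mtu 1400 changes the MTU only until the next reboot or interface restart, so also record the value in the interface’s persistent network configuration.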

- Validate MTU behavior after any infrastructure changes, upgrades, or security policy updates.

2. Benchmark the Network: Bandwidth and Latency Tests

GoldenGate throughput hinges on network consistency. These tools simulate and measure data paths under realistic load.

iperf3: Raw TCP throughput

This provides a clean measurement of the max bandwidth over the link. Ideal for validating raw network capacity.

Start the Server on Target Host:

Sample Output

[root@oggtstsrv2]# iperf3 -s

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

Run the Client on Source Host:

Sample Output

[root@oggtstsrv1]# iperf3 -c xxx.xxx.xxx.2

Connecting to host xxx.xxx.xxx.2, port 5201

[ 5] local xxx.xxx.xxx.1 port 55890 connected to xxx.xxx.xxx.2 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 3.35 GBytes 28.8 Gbits/sec 90 672 KBytes

[ 5] 1.00-2.00 sec 3.34 GBytes 28.7 Gbits/sec 0 846 KBytes

[ 5] 2.00-3.00 sec 3.62 GBytes 31.0 Gbits/sec 0 846 KBytes

[ 5] 3.00-4.00 sec 3.58 GBytes 30.7 Gbits/sec 0 885 KBytes

[ 5] 4.00-5.00 sec 3.62 GBytes 31.1 Gbits/sec 0 885 KBytes

[ 5] 5.00-6.00 sec 3.62 GBytes 31.1 Gbits/sec 0 905 KBytes

[ 5] 6.00-7.00 sec 3.68 GBytes 31.6 Gbits/sec 0 905 KBytes

[ 5] 7.00-8.00 sec 3.61 GBytes 31.0 Gbits/sec 0 906 KBytes

[ 5] 8.00-9.00 sec 3.39 GBytes 29.1 Gbits/sec 0 909 KBytes

[ 5] 9.00-10.00 sec 3.56 GBytes 30.5 Gbits/sec 0 909 KBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 35.4 GBytes 30.4 Gbits/sec 90 sender

[ 5] 0.00-10.00 sec 35.4 GBytes 30.4 Gbits/sec receiver

iperf Done.

Server-side output for the same run:

Accepted connection from xxx.xxx.xxx.1, port 55886

[ 5] local xxx.xxx.xxx.2 port 5201 connected to xxx.xxx.xxx.1 port 55890

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 3.35 GBytes 28.8 Gbits/sec

[ 5] 1.00-2.00 sec 3.34 GBytes 28.7 Gbits/sec

[ 5] 2.00-3.00 sec 3.61 GBytes 31.1 Gbits/sec

[ 5] 3.00-4.00 sec 3.58 GBytes 30.7 Gbits/sec

[ 5] 4.00-5.00 sec 3.62 GBytes 31.1 Gbits/sec

[ 5] 5.00-6.00 sec 3.62 GBytes 31.1 Gbits/sec

[ 5] 6.00-7.00 sec 3.68 GBytes 31.6 Gbits/sec

[ 5] 7.00-8.00 sec 3.61 GBytes 31.0 Gbits/sec

[ 5] 8.00-9.00 sec 3.39 GBytes 29.1 Gbits/sec

[ 5] 9.00-10.00 sec 3.56 GBytes 30.5 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.00 sec 35.4 GBytes 30.4 Gbits/sec receiver

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

In this example, we measured over 30 Gbps of throughput with 90 retransmits, all of them in the first second and none afterward. This is a healthy link.

Checkpoints:

- Retransmits (Retr) indicate packet loss or congestion

- Consistent Cwnd (congestion window) = stable flow

- Run the test before and after tuning TCP buffers

Note: iperf3 defaults to port 5201 and doesn’t require root access unless binding to a port below 1024.
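To compare runs before and after tuning, the retransmit count can be pulled out of the client summary automatically. The sketch below assumes a single-stream iperf3 client run whose summary contains one line ending in "sender", as in the sample output; the function names and the threshold argument are hypothetical, not iperf3 or GoldenGate settings.

```shell
# Read iperf3 client output on stdin and print the retransmit count
# from the summary line that ends with "sender".
iperf3_retransmits() {
  awk '$NF == "sender" { print $(NF-1) }'
}

# Compare a retransmit count against a tolerance (default 0).
# The tolerance is an illustrative value you choose per environment.
check_retransmits() {
  local retr="$1" threshold="${2:-0}"
  if [ "$retr" -le "$threshold" ]; then
    echo "ok"
  else
    echo "investigate"
  fi
}

# Example, not run automatically:
#   retr=$(iperf3 -c <target_host> | iperf3_retransmits)
#   check_retransmits "$retr" 100
```

Recording this number alongside the bitrate for each run makes it easier to tell whether a buffer change improved throughput or simply shifted loss elsewhere.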

oratcptest: Oracle Net-Aware Latency + SDU Efficiency

oratcptest is a versatile utility for emulating Oracle Net traffic and is particularly useful for validating network performance across key GoldenGate components (Extract, Replicat, and Distribution Path). It simulates payloads similar to GoldenGate’s internal Oracle Net communication patterns, allowing you to test both latency and throughput.

Start the Server:

Sample Output:

[oracle@oggtstsrv2]$ java -jar oratcptest.jar -server xxx.xxx.xxx.2 -port=1575

OraTcpTest server started.

[A test was requested.]

Message payload = 1 Mbyte

Disk write = NO

Socket receive buffer = (system default)

Run the Client:

Sample Output:

[oracle@oggtstsrv1]$ java -jar oratcptest.jar xxx.xxx.xxx.2 -port=1575 -duration=120s -interval=20s -mode=SYNC

[Requesting a test]

Message payload = 1 Mbyte

Payload content type = RANDOM

Delay between messages = NO

Number of connections = 1

Socket send buffer = (system default)

Transport mode = SYNC

Disk write = NO

Statistics interval = 20 seconds

Test duration = 2 minutes

Test frequency = NO

Network Timeout = NO

(1 Mbyte = 1024×1024 bytes)

(19:42:21) The server is ready.

Throughput Latency

(19:42:41) 1769.994 Mbytes/s 0.565 ms

(19:43:01) 2285.598 Mbytes/s 0.438 ms

(19:43:21) 2215.939 Mbytes/s 0.451 ms

(19:43:41) 2363.234 Mbytes/s 0.423 ms

(19:44:01) 2319.590 Mbytes/s 0.431 ms

(19:44:21) 2389.303 Mbytes/s 0.419 ms

(19:44:21) Test finished.

Socket send buffer = 1313280 bytes

Avg. throughput = 2223.815 Mbytes/s

Avg. latency = 0.450 ms

Excellent baseline results here: sub-millisecond latency and over 2 GB/sec sustained throughput.

Use SYNC mode to simulate Extract and Paths, and ASYNC mode to simulate Parallel Replicat. Adjust payload settings to reflect realistic message sizes.

More info: MOS Doc 2064368.1 – How to Use oratcptest

Note: Tools like iperf3 and oratcptest are useful for gauging raw network capacity but do not account for GoldenGate-specific processing overhead (e.g., trail file reads, buffering, commit logic). Treat these results as baseline measurements rather than expected replication throughput.
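Because oratcptest reports Mbytes/s (with 1 Mbyte = 1024×1024 bytes) while iperf3 reports Gbits/sec, it helps to convert one into the other before comparing baselines. The following is a minimal sketch; the function names are hypothetical, and the conversion assumes iperf3 uses decimal gigabits (10^9 bits).

```shell
# Read oratcptest client output on stdin and print the average
# throughput figure (in Mbytes/s) from the "Avg. throughput" line.
oratcptest_avg_throughput() {
  awk '/Avg\. throughput/ { print $4 }'
}

# Convert Mbytes/s (1 Mbyte = 1024*1024 bytes) to Gbits/sec (10^9 bits).
mbytes_to_gbits() {
  awk -v mb="$1" 'BEGIN { printf "%.2f\n", mb * 1024 * 1024 * 8 / 1000000000 }'
}

# Example, not run automatically:
#   avg=$(java -jar oratcptest.jar <target_host> -port=1575 -mode=SYNC | oratcptest_avg_throughput)
#   mbytes_to_gbits "$avg"
```

With the sample average of 2223.815 Mbytes/s, the conversion gives roughly 18.65 Gbits/sec, which can then be set against the iperf3 figure for the same link.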

3. BDP Fundamentals: Preventing Throughput Bottlenecks

What is BDP?

Bandwidth-Delay Product (BDP) defines the optimal amount of unacknowledged data (in-flight) that can be on the wire to fully utilize a network link without stalling.

If your socket buffer is smaller than the BDP, the sender can’t keep the pipe full, limiting throughput.

BDP Formula

BDP = (Bandwidth in bits/sec × RTT in seconds) ÷ 8

Where:

- Bandwidth is the link capacity (e.g., 1 Gbps = 1,000,000,000 bits/sec)

- RTT (Round-Trip Time) is the average round-trip delay between source and target

- Division by 8 converts bits to bytes

How to Measure RTT

Use ping to get the RTT, running it from the GoldenGate source host to the target (for example, ping -c 10 <target_host>).

In the sample run, ping reported an average RTT of 0.643 ms, which is 0.000643 seconds. Use this average value in the BDP calculation.
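The average RTT can also be extracted from the ping summary line so it can feed straight into a calculation. This sketch assumes the Linux iputils summary format ("rtt min/avg/max/mdev = ..."); the function names are hypothetical, and in the usage comment only the 0.643 ms average comes from this article.

```shell
# Read ping output on stdin and print the average RTT in milliseconds.
# Splitting the summary line on "/" puts the average in field 5.
ping_avg_rtt_ms() {
  awk -F'/' '/^rtt/ { print $5 }'
}

# Convert milliseconds to seconds with six decimal places.
ms_to_seconds() {
  awk -v ms="$1" 'BEGIN { printf "%.6f\n", ms / 1000 }'
}

# Example, not run automatically:
#   rtt_ms=$(ping -c 10 <target_host> | ping_avg_rtt_ms)
#   ms_to_seconds "$rtt_ms"     # 0.643 ms becomes 0.000643
```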

BDP Example

Let’s say:

- Bandwidth: 1 Gbps = 1,000,000,000 bits/sec

- RTT: 0.643 ms = 0.000643 sec

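BDP = (1,000,000,000 × 0.000643) ÷ 8 = 643,000 ÷ 8 = 80,375 bytes ≈ 80 KB

So, to fully utilize a 1 Gbps link with 0.643 ms RTT, you should size your socket buffers to at least 80 KB. For comparison, the same 1 Gbps link with an RTT of 80 ms, typical of a long-haul WAN path, has a BDP of 10,000,000 bytes, or about 10 MB.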

Note: A common guideline is to size the socket buffer to approximately 2x – 3x BDP to prevent underruns and maximize throughput.
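The BDP formula and the 2x to 3x guideline can be wrapped in two small helpers. This is a sketch only: the function names are hypothetical, RTT is passed in milliseconds for convenience, and the default multiplier of 2 is simply the lower end of the guideline above.

```shell
# BDP in bytes = bandwidth (bits/sec) x RTT (sec) / 8.
# Arguments: bandwidth in bits/sec, RTT in milliseconds.
bdp_bytes() {
  awk -v bw="$1" -v rtt_ms="$2" 'BEGIN { printf "%.0f\n", bw * rtt_ms / 1000 / 8 }'
}

# Suggested socket buffer = BDP x factor (factor defaults to 2).
# Arguments: bandwidth in bits/sec, RTT in milliseconds, optional factor.
suggested_buffer_bytes() {
  awk -v bdp="$(bdp_bytes "$1" "$2")" -v f="${3:-2}" 'BEGIN { printf "%.0f\n", bdp * f }'
}

# Example, not run automatically: a 1 Gbps link with 80 ms RTT
#   bdp_bytes 1000000000 80               # BDP in bytes
#   suggested_buffer_bytes 1000000000 80  # 2x BDP
```

For a 1 Gbps link with 80 ms RTT, bdp_bytes returns 10000000 (about 10 MB) and suggested_buffer_bytes returns 20000000. Rerun the calculation whenever the measured RTT or the provisioned bandwidth changes.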

Apply BDP in GoldenGate and Linux

GoldenGate parameter: TCPBUFSIZE, sized from the BDP (see the classic architecture note below).

Linux Kernel Tuning:

sysctl -w net.ipv4.tcp_rmem="4096 131072 10485760"

sysctl -w net.ipv4.tcp_wmem="4096 131072 10485760"

Make persistent via /etc/sysctl.conf

Note: Do not reduce tcp_rmem and tcp_wmem values if they are already configured with higher values on the source and target hosts. These defaults are often tuned upward on Exadata or optimized environments and should be preserved.

In classic architecture, TCPBUFSIZE is specified in the RMTHOST parameter to define socket send buffer size.

In MA deployments, manual buffer tuning is typically not required. Most modern Linux systems enable TCP auto window scaling by default. This allows the kernel to dynamically adjust socket buffer sizes based on network conditions.

To verify TCP window scaling is enabled:

sysctl net.ipv4.tcp_window_scaling

- If the result is 1, auto-scaling is enabled.

- If it is 0, it is disabled and should be enabled to allow dynamic growth of socket buffers.

To enable it (as root):

sysctl -w net.ipv4.tcp_window_scaling=1

Note: You can make this setting persistent by adding the line below to /etc/sysctl.conf:

net.ipv4.tcp_window_scaling = 1

4. Oracle Net SDU: Enhancing Data Transfer Performance

GoldenGate uses Oracle Net under the hood. Poorly tuned SDU values can lead to packet fragmentation and reduced throughput, especially in hub-based and wide-area deployments.

Understanding SDU

Session Data Unit (SDU) determines the maximum size of Oracle Net packets before fragmentation. By default, SDU is set to 8192 bytes (8KB), which is often too small for high-throughput replication.

Oracle recommends increasing the SDU to the highest permissible value when communicating over high-latency or high-throughput links to reduce the number of packets and improve efficiency.

Starting with Oracle 12c Release 2, the maximum allowable SDU size has been increased from 65535 bytes to 2 MB (2097152 bytes). Using larger packets is beneficial because it minimizes Oracle Net protocol overhead, reduces CPU usage, and improves throughput across wide-area and cloud-based networks, especially for high-volume GoldenGate traffic.

Recommended SDU

Set the SDU to 2097152 bytes. This leverages the full 2 MB capacity available in Oracle 12.2 and newer versions. Always verify that both the source and target databases, as well as all listeners, support this upper limit.

Setting SDU in listener.ora will apply globally to all DB connections, which may not be ideal. For better control, configure SDU using a dedicated listener for GoldenGate or set it at the session level using sqlnet.ora in $ORACLE_HOME/network/admin.

Additionally, in RAC environments using SCAN listeners, SDU cannot be adjusted on a per-client basis. In these cases, using sqlnet.ora or a dedicated listener is the recommended approach.

Align Across:

sqlnet.ora

DEFAULT_SDU_SIZE=2097152

listener.ora

SID_LIST_LISTENER =

(SID_LIST =

(SID_DESC =

(SID_NAME = GGSRCDB)

(ORACLE_HOME = /u01/app/oracle/product/23.0.0/dbhome_1)

(SDU=2097152)

)

)

tnsnames.ora

GG_SRC_DB =

(DESCRIPTION =

(SDU=2097152)

(ADDRESS=(PROTOCOL=TCP)(HOST=oggtstsrv2)(PORT=1521))

(CONNECT_DATA=(SERVICE_NAME=ggsrcdb))

)

Make sure the SDU setting is consistent across all layers (client, listener, server). If the client and server SDU settings differ, the lower of the two values is used for the session.
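The effect of a mismatch can be illustrated with simple arithmetic. The sketch below is a simplified model, assuming the session uses the lower of the two SDU values and ignoring Oracle Net header overhead; the function names are hypothetical.

```shell
# The session SDU is the lower of the client and server settings.
negotiated_sdu() {
  local client="$1" server="$2"
  if [ "$client" -lt "$server" ]; then
    echo "$client"
  else
    echo "$server"
  fi
}

# Number of SDU-sized units needed to carry a payload (ceiling division).
# Simplification: protocol headers are not counted.
sdu_units() {
  local payload="$1" sdu="$2"
  echo $(( (payload + sdu - 1) / sdu ))
}

# Example, not run automatically: client at 2 MB, server left at 8 KB
#   sdu=$(negotiated_sdu 2097152 8192)
#   sdu_units 1048576 "$sdu"
```

With the client at 2097152 and the server left at the 8192 default, the session falls back to 8192, and a 1 MB payload needs 128 units instead of 1. This is why a single untuned layer can cancel the benefit of a larger SDU elsewhere.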

Note: On Autonomous Database platforms in OCI, customers cannot modify listener.ora, so SDU tuning is limited to what’s pre-configured.

For a more detailed discussion on Oracle Net SDU tuning strategies, including additional use cases and testing methods, refer to the following documentation URL and blog article:

- Oracle Documentation – Configuring Session Data Unit (SDU)

- Optimizing Network Performance in Hub-Based Oracle GoldenGate Extract Configurations

Quick Reference: Optimization and Benchmarking Summary

| Category | What to Do |
| --- | --- |
| MTU | Align MTU across all network hops; allow ICMP Type 3 Code 4 |
| iperf3 | Measure TCP bandwidth baseline; monitor retransmits |
| oratcptest | Simulate Oracle Net load; validate SDU and latency |
| BDP | Calculate ideal socket buffer; apply TCPBUFSIZE, sysctl |
| SDU | Tune and align across listener.ora, sqlnet.ora, tnsnames.ora |

Key Takeaways

Replication performance is often gated not by Extracts or Replicats themselves, but by the stability and configuration of the network underneath them. Issues like MTU mismatch, insufficient socket buffers, or mismatched SDU settings can create bottlenecks that are difficult to diagnose without proper tools.

While database errors like ORA-03113 may hint at network fragmentation, GoldenGate itself may not always surface network-layer issues clearly in logs. Symptoms like frequent retries or stalled Replicats can be indirect indicators.

Use tools like iperf3 and oratcptest not as tuning levers, but as benchmarking instruments, to validate the current state of the network before and after applying changes. They provide critical visibility into raw throughput, packet efficiency, and Oracle Net responsiveness, enabling informed tuning decisions.

By combining benchmarking with concrete tuning actions, such as aligning MTU, calculating BDP for buffer sizing, and configuring SDU at maximum supported values, you can ensure that GoldenGate performs optimally across even the most complex hybrid or WAN environments.

Take the time to baseline these elements and monitor their behavior. It will pay dividends in uptime, throughput, and operational clarity.