Oracle GoldenGate and Solace Product Management teams are happy to announce a micro-integration solution between GoldenGate Data Streams and Solace PubSub+ that powers the Solace Event Mesh with change data capture (CDC) records from Oracle GoldenGate. By combining GoldenGate Data Streams with Solace PubSub+ brokers, organizations can set up an event mesh powered by best-in-class event broker and real-time data replication technologies. Organizations can also discover, catalog, and manage the end-to-end lifecycle of GoldenGate Data Streams with the Solace Event Portal, part of the Solace Platform.

Overview of the Solace Event Mesh

An event mesh is a network of interconnected event brokers that distributes event information among applications, cloud services, and devices within an enterprise. An event mesh addresses several challenges and requirements in modern distributed architectures:

- Mission-Critical Applications: Mission-critical applications must communicate reliably and promptly to share the data they need to process the interactions and transactions that drive the business. Event mesh infrastructure is designed to transport events smoothly across any environment, including between clouds.

- Enhanced Decision-Making Capabilities: All data that enters the event mesh is available on demand in a secure, reliable manner. This rich, real-time data source can inform important business decisions about operations, supply chain, finance, marketing, and more.

- Capitalize on a Superior Customer Experience: Giving applications, people, and systems access to real-time data enables businesses to create personalized customer experiences and respond to requests instantly.

Solace enables an event mesh with PubSub+ event brokers. The Solace PubSub+ Event Broker is a comprehensive messaging solution designed to support real-time, event-driven messaging across a wide variety of environments, including on-premises, cloud infrastructures, and hybrid configurations.

Overview of the GoldenGate Data Streams

GoldenGate Data Streams is an AsyncAPI-based asynchronous messaging capability at the heart of GoldenGate’s distributed data mesh architecture. Data Streams AsyncAPI channels enable loosely coupled architectures in which microservices and client applications communicate without being tightly integrated. GoldenGate Data Streams uses the AsyncAPI specification to describe its channels and the CloudEvents JSON format for its payloads, so client applications can efficiently subscribe to GoldenGate change data streams using a publish/subscribe model.
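To make the CloudEvents envelope concrete, the following Python sketch parses a structured-mode CloudEvents JSON message and checks the context attributes that the CloudEvents 1.0 specification marks as required (`specversion`, `id`, `source`, `type`). The `source`, `type`, and `data` values in the sample are hypothetical placeholders. They are not the actual GoldenGate Data Streams payload layout, which should be taken from the channel's published schema.

```python
import json

# Context attributes that the CloudEvents 1.0 specification marks as REQUIRED.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def parse_cloudevent(raw: str) -> dict:
    """Parse a structured-mode CloudEvents JSON message and validate its required attributes."""
    event = json.loads(raw)
    missing = [name for name in REQUIRED_ATTRIBUTES if name not in event]
    if missing:
        raise ValueError(f"not a CloudEvent, missing: {', '.join(missing)}")
    if event["specversion"] != "1.0":
        raise ValueError(f"unsupported specversion: {event['specversion']!r}")
    return event


# Hypothetical change record: "source", "type", and "data" are illustrative
# placeholders, not the real GoldenGate Data Streams payload layout.
sample = json.dumps({
    "specversion": "1.0",
    "id": "op-0001",
    "source": "/example/goldengate/datastreams",
    "type": "example.dml.insert",
    "datacontenttype": "application/json",
    "data": {"table": "HR.EMPLOYEES", "after": {"EMPLOYEE_ID": 101, "SALARY": 5000}},
})

event = parse_cloudevent(sample)
print(event["type"], event["data"]["table"])  # example.dml.insert HR.EMPLOYEES
```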

GoldenGate Data Streams and Solace Integration

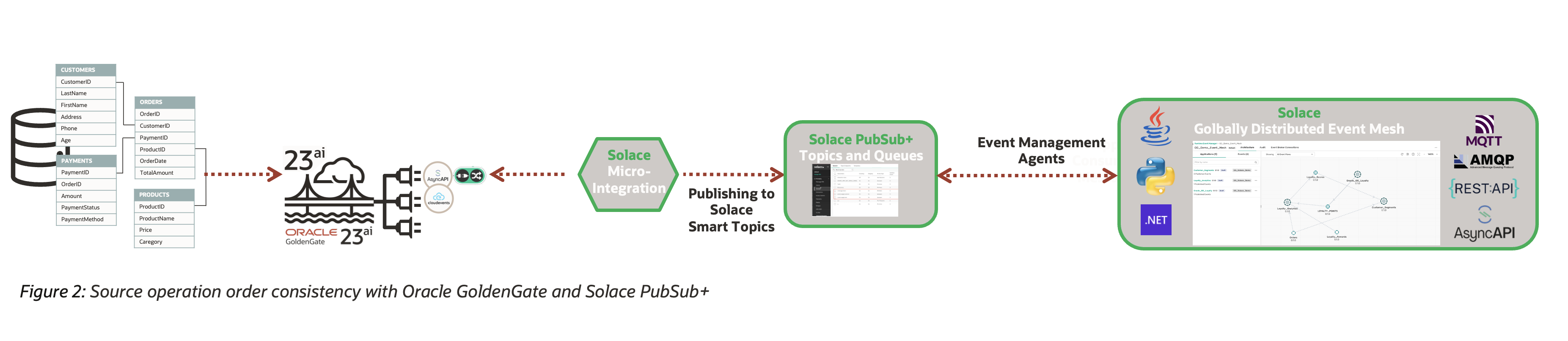

The GoldenGate Data Streams and Solace micro-integration is an AsyncAPI integration layer that subscribes to a GoldenGate Data Streams AsyncAPI channel and publishes the messages to Solace PubSub+ queues with high throughput and low latency. It is built on Spring Cloud, a widely used framework for deploying microservices.
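Conceptually, the micro-integration is a consume, map, and publish loop. The sketch below models that loop in plain Python under stated assumptions: `InMemoryQueue` is a hypothetical stand-in for a PubSub+ queue, and `to_solace_message` with its `gg/demo/...` topic format is an illustrative mapping, not the product's actual topic scheme or its Spring Cloud implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass
class InMemoryQueue:
    """Hypothetical stand-in for a Solace PubSub+ queue; not the Solace client API."""
    name: str
    messages: List[dict] = field(default_factory=list)

    def publish(self, message: dict) -> None:
        self.messages.append(message)


def to_solace_message(record: dict) -> dict:
    """Hypothetical mapping: derive a hierarchical topic from the table and operation."""
    schema, table = record["table"].split(".")
    return {"topic": f"gg/demo/{schema}/{table}/{record['op']}", "payload": record}


def run_bridge(channel: Iterable[dict],
               transform: Callable[[dict], dict],
               queue: InMemoryQueue) -> int:
    """Consume records from the channel, map each one, and publish it in arrival order."""
    published = 0
    for record in channel:
        queue.publish(transform(record))
        published += 1
    return published


# Illustrative records standing in for messages read from a Data Streams channel.
channel = [
    {"table": "HR.EMPLOYEES", "op": "insert", "pos": 1},
    {"table": "HR.DEPARTMENTS", "op": "update", "pos": 2},
]
queue = InMemoryQueue("q.demo")
count = run_bridge(channel, to_solace_message, queue)
print(count, [m["topic"] for m in queue.messages])
```

Publishing each record before reading the next keeps the order on the queue identical to the order of the channel.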

With this partnership, Solace extends enterprise-class reliability and performance to pub/sub architectures in which a database originates the transactions. The integration preserves transaction integrity from the source to each consumer. Working together, the two products deliver a highly reliable, low-latency, fault-tolerant messaging architecture. The result is a real-time distributed event mesh, powered by GoldenGate and Solace PubSub+, that provides four distinct advantages.

- Source operation order consistency: For Oracle customers, maintaining source order consistency while publishing messages to streaming platforms is crucial.

In Kafka-based message streaming platforms, GoldenGate supports order consistency at the source table level by sending operations from a source table with the same message key to the same partition. However, when a transaction spans multiple tables, maintaining order consistency becomes challenging, because each partition is consumed independently and can progress at a different speed.
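The cross-table ordering problem can be reproduced with a small simulation. The sketch assumes a two-partition topic, keys operations by table name, and uses a toy byte-sum partitioner in place of Kafka's default murmur2-based one; the table names are hypothetical. Per-table order survives, but once one partition's consumer runs ahead, the order of the transaction as a whole does not.

```python
from collections import defaultdict

NUM_PARTITIONS = 2


def partition_for(key: str) -> int:
    """Toy deterministic partitioner (sum of UTF-8 bytes); Kafka's default uses murmur2."""
    return sum(key.encode("utf-8")) % NUM_PARTITIONS


# One source transaction touching two hypothetical tables, in commit order.
# The integer is the operation's position in the source transaction.
source_ops = [("ORDERS", 1), ("ITEMS", 2), ("ORDERS", 3), ("ITEMS", 4)]

# Keying by table name sends every operation of a table to the same partition.
partitions = defaultdict(list)
for table, seq in source_ops:
    partitions[partition_for(table)].append((table, seq))

# Simulate partitions progressing at different speeds: the consumer of
# partition 0 drains its backlog before partition 1 delivers anything.
consumed = partitions[0] + partitions[1]

per_table = defaultdict(list)
for table, seq in consumed:
    per_table[table].append(seq)

print([seq for _, seq in consumed])  # [2, 4, 1, 3]: transaction order is lost
print(dict(per_table))               # {'ITEMS': [2, 4], 'ORDERS': [1, 3]}: per-table order holds
```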

The GoldenGate Data Streams and Solace PubSub+ micro-integration directly addresses this challenge by using Solace Smart Topics. On the GoldenGate side, order is preserved during the extract process, and operations are written to the trail file in source order. GoldenGate Data Streams then publishes the records in this preserved order. On the Solace PubSub+ side, a smart topic receives the operations for multiple source tables from the GoldenGate Data Streams channel. In the Solace runtime, users can create subscriptions that receive messages from smart topics according to their subscription design. If needed, a single subscription can receive all the messages available in the smart topic, or only selected source operations based on the source table definition.
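Selective subscriptions rely on hierarchical topics and wildcards. The following sketch is a simplified matcher for Solace-style wildcards (`*` matches one whole level or, after a prefix, the rest of a level; `>` as the last level matches one or more remaining levels). It is for illustration only, since the broker performs this matching itself, and the `gg/demo/<schema>/<table>/<operation>` hierarchy is a hypothetical example rather than a documented GoldenGate topic layout.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Simplified Solace-style matching: '*' and 'prefix*' match within one level,
    '>' as the final level matches one or more remaining levels."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i  # at least one level must remain
        if i >= len(topic_levels):
            return False                  # topic is shorter than the subscription
        level = topic_levels[i]
        if sub == "*":
            continue                      # any single level matches
        if sub.endswith("*"):
            if not level.startswith(sub[:-1]):
                return False              # prefix wildcard did not match
        elif sub != level:
            return False                  # literal level mismatch
    return len(sub_levels) == len(topic_levels)


# One ordered stream of operations from two tables (hypothetical topic layout).
stream = [
    "gg/demo/HR/EMPLOYEES/insert",
    "gg/demo/HR/DEPARTMENTS/update",
    "gg/demo/HR/EMPLOYEES/delete",
]


def receive(subscription: str) -> list:
    """Deliver, in stream order, every message whose topic matches the subscription."""
    return [t for t in stream if topic_matches(subscription, t)]


everything = receive("gg/demo/>")
employees_only = receive("gg/demo/HR/EMPLOYEES/*")
print(everything)
print(employees_only)  # ['gg/demo/HR/EMPLOYEES/insert', 'gg/demo/HR/EMPLOYEES/delete']
```

Because every subscription filters the same ordered stream, the messages a subscriber does receive arrive in their original source order.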

- Exactly Once Semantics: With Kafka-based message streaming platforms, GoldenGate supports ‘exactly once’ delivery under normal conditions, but only ‘at least once’ in recovery scenarios. In these platforms, GoldenGate groups multiple operations into a single message and flushes them all at once to increase throughput, moving the checkpoint accordingly. If a failure occurs after a flush but before the checkpoint is updated, the flushed operations can be sent again on restart, so in the event of recovery GoldenGate can guarantee only ‘at least once’ delivery.

The GoldenGate Data Streams and Solace PubSub+ micro-integration guarantees ‘exactly once’ semantics under both normal conditions and recovery scenarios, using the redelivered flag and distributed trace capabilities. GoldenGate Data Streams publishes a unique position value with each message, which the micro-integration uses for checkpointing. After a failure, the micro-integration restarts from the exact point of failure using this position value, which avoids duplicate records and guarantees ‘exactly once’ semantics.
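The difference between the two recovery behaviors can be simulated. In this sketch, `batched_publish` advances its checkpoint only after a batch is flushed, so a crash between flush and checkpoint replays the batch (at least once). `PositionCheckpointConsumer` is a hypothetical consumer that records the position of each applied message and skips any message at or below that position. It assumes positions increase monotonically in source order and that applying a record and saving its position happen atomically; it is not the micro-integration's actual code.

```python
from typing import List, Optional, Tuple


def batched_publish(ops: List[int], start: int, batch_size: int,
                    crash_after_batch: Optional[int] = None) -> Tuple[List[int], int]:
    """Flush ops[start:] in batches; the checkpoint advances only after a flush completes.

    If crash_after_batch is set, simulate a failure right after that batch
    (0-based, counted from `start`) is flushed but before its checkpoint is saved.
    Returns (messages delivered to the broker, saved checkpoint index).
    """
    delivered: List[int] = []
    checkpoint = start
    for batch_no, i in enumerate(range(start, len(ops), batch_size)):
        batch = ops[i:i + batch_size]
        delivered.extend(batch)             # the whole batch reaches the broker
        if batch_no == crash_after_batch:
            return delivered, checkpoint    # crash: checkpoint still precedes this batch
        checkpoint = i + len(batch)         # checkpoint moves past the flushed batch
    return delivered, checkpoint


class PositionCheckpointConsumer:
    """Hypothetical consumer that checkpoints the position of every record it applies.

    Assumes positions increase monotonically in source order and that applying a
    record and saving its position happen atomically in a real deployment.
    """

    def __init__(self) -> None:
        self.last_position = 0
        self.applied: List[int] = []

    def on_message(self, position: int) -> None:
        if position <= self.last_position:
            return                          # already applied before the failure: skip
        self.applied.append(position)       # apply the record...
        self.last_position = position       # ...and checkpoint its position


positions = [1, 2, 3, 4, 5, 6]
first_run, saved = batched_publish(positions, start=0, batch_size=3, crash_after_batch=1)
second_run, _ = batched_publish(positions, start=saved, batch_size=3)
broker_view = first_run + second_run
print(broker_view)        # [1, 2, 3, 4, 5, 6, 4, 5, 6]: batch replayed, at least once

consumer = PositionCheckpointConsumer()
for position in broker_view:
    consumer.on_message(position)
print(consumer.applied)   # [1, 2, 3, 4, 5, 6]: duplicates skipped by position
```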

- Schema Object Management: To help clients interpret the records in the data streaming service, GoldenGate Data Streams publishes JSON Schema records for all records in the channel. Using the micro-integration, users can map schema records into specific queues, using event schemas stored in the Event Portal, to track schema changes and gather more details about the source operations. In addition to the DML and DDL data records themselves, the data streaming service carries three types of schema and metadata records:

- Stream Schema Record: The stream metadata record describes the entire stream in the data streaming service. GoldenGate Data Streams sends one stream metadata record at the beginning of the current session. This record contains information about the source database, the producer, the source trail, the host machine, and so on.

- DML and DDL Schema Record: Because each table has different columns, the JSON Schema for DML records must be tailored to each table, so GoldenGate Data Streams sends one JSON Schema record per table. When a table's shape changes, a new DML schema record is sent as well.

- Object Schema Record: In addition to DDL and DML records, GoldenGate Data Streams sends metadata records in the data streaming service to describe the data records for each table. Object metadata records contain information about the table and additional properties that are not carried in the DML records, such as nullability and primary key information.
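The idea of mapping schema records into dedicated queues can be sketched as a simple router. The `kind` labels, field names, and queue names below are hypothetical; real Data Streams records identify their type according to their published JSON Schema. The router sends schema and metadata records to one queue and data records to another, and copies a DML schema record to a change-tracking queue when a table's column list differs from the previous schema seen for that table.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical record-kind labels; real Data Streams records identify their
# type according to their published JSON Schema.
SCHEMA_KINDS = {"stream_metadata", "dml_schema", "ddl_schema", "object_metadata"}


def route(records: List[dict]) -> Dict[str, List[dict]]:
    """Route records to hypothetical queues, flagging DML schema changes per table."""
    queues: Dict[str, List[dict]] = defaultdict(list)
    latest_schema: Dict[str, dict] = {}
    for rec in records:
        if rec["kind"] not in SCHEMA_KINDS:
            queues["q.data"].append(rec)         # DML/DDL data records
            continue
        queues["q.schema"].append(rec)           # every schema/metadata record
        if rec["kind"] == "dml_schema":
            previous = latest_schema.get(rec["table"])
            if previous is not None and previous["columns"] != rec["columns"]:
                queues["q.schema.changes"].append(rec)  # table shape changed
            latest_schema[rec["table"]] = rec
    return dict(queues)


records = [
    {"kind": "stream_metadata", "source_db": "DEMO"},
    {"kind": "dml_schema", "table": "HR.EMPLOYEES", "columns": ["ID", "NAME"]},
    {"kind": "object_metadata", "table": "HR.EMPLOYEES", "primary_key": ["ID"]},
    {"kind": "dml", "table": "HR.EMPLOYEES", "op": "insert"},
    {"kind": "dml_schema", "table": "HR.EMPLOYEES", "columns": ["ID", "NAME", "EMAIL"]},
    {"kind": "dml", "table": "HR.EMPLOYEES", "op": "update"},
]

queues = route(records)
print({name: len(msgs) for name, msgs in queues.items()})
```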

- Event API Product and Lifecycle Management: Developers and architects need to be able to discover which Data Streams are available as Event API Products. Providers of a Data Stream must manage the lifecycle of the Event API Products they make available using the AsyncAPI standard. The Solace Event Portal can catalog the GoldenGate Data Streams event endpoint, schema, and other lifecycle details, so event streams published by the micro-integration can be accessed as Event API Products. The Event Portal supports discovery, versioning, promotion, and other enterprise capabilities.

Conclusion

The GoldenGate Data Streams and Solace PubSub+ micro-integration provides a unique event mesh solution that can handle very high throughput while addressing core challenges such as preserving transaction boundaries and delivering exactly-once semantics.