Introduction

This blog post describes the new Kubernetes-based approach for multicloud and on-premises deployment of the Oracle Blockchain Platform, released as OBP EE 24.1.3, and outlines some of the benefits the new architecture brings compared to the previous approach based on virtual machine appliances and Docker Swarm-orchestrated containers. While this post focuses on the Kubernetes deployment architecture, the release also provides:

- Currency with the latest Hyperledger Fabric 2.5.7 LTS release

- Interoperability with OCI Blockchain Platform

- The latest capabilities of Blockchain Application Builder, including extensive tokenization and digital assets support

- Feature parity with OCI Blockchain Platform, which is detailed in the What’s New documentation.

Some of the benefits introduced by the most recent OBP EE version include:

- Simpler, more nimble deployment model taking advantage of Kubernetes clusters.

- Simplified deployment and configuration management on a variety of Kubernetes platforms, including Oracle Kubernetes Engine (OKE), Red Hat OpenShift, Azure Kubernetes Service (AKS), and developer-oriented Minikube.

- Enhanced resilience thanks to Kubernetes self-healing functionality.

- Simplified topology configuration management within the Kubernetes service mesh by leveraging Istio SNI (Server Name Indication) traffic routing.

- Additional security layer due to TLS encryption of all internal traffic.

- Support for new Hyperledger Fabric capabilities, such as the ability to run chaincode as an external service.

Comparing the New Kubernetes Architecture with Previous OBP EE Version

Oracle Blockchain Platform Enterprise Edition (OBP EE) is a customer-managed, permissioned blockchain solution based on Hyperledger Fabric. It is meant to be deployed in customer data centers (on-premises), in third-party clouds, or on Oracle Cloud Infrastructure (OCI) when customers prefer to manage the underlying blockchain resources themselves rather than use the Oracle-managed Blockchain-as-a-Service (BaaS) version of the same platform, available as OCI Blockchain Service. Note that Oracle-managed BaaS nodes and customer-managed OBP EE nodes can interoperate in the same Hyperledger Fabric network.

The previous version of OBP EE provided downloadable virtual machine (VM) images, which users would spin up on their infrastructure of choice. The VMs were pre-configured to run the OBP EE containers in a Docker Swarm environment spanning the set of VMs that made up the customer-managed deployment. The OBP EE component container images were already included in the downloaded VMs. Users would then interact with the “OBP EE Platform Manager” to complete the setup and spin up blockchain “instances” on the host VMs.

The new version of OBP EE replaces VM images delivered as appliances with Kubernetes, a popular and flexible container deployment and management platform. This approach requires users to have a Kubernetes cluster available, either created ad hoc or shared with other services. The OBP EE components are available as container images from the Oracle Container Registry, or they can be downloaded to a container registry of choice that is accessible from the Kubernetes cluster. The included bootstrapping scripts then use these container images to spin up the initial OBP EE components: a new version of the OBP EE Platform Manager and dedicated Kubernetes operators. At that point, as in the previous approach, users can interact with the Platform Manager’s Web UI or REST API to create and manage blockchain instances, which are hosted on the Kubernetes cluster itself.

Details about how OBP EE for Kubernetes is set up and how OBP EE instances are created and managed are documented here.

Comparing the new Kubernetes-based architecture to the previous one

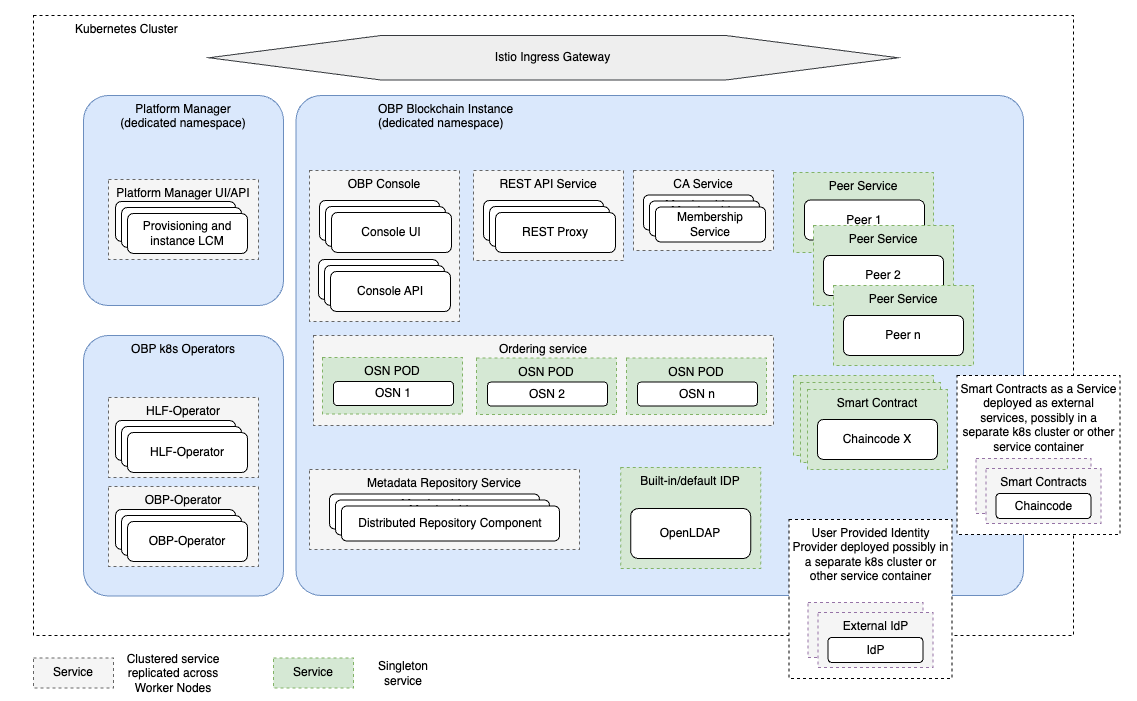

The diagram above shows the components and networking of OBP EE in a Kubernetes cluster.

A few areas are important to highlight when comparing this new architecture to the previous OBP EE solution:

- The new Platform Manager in OBP EE takes advantage of two Kubernetes operators to administer component deployment, resource utilization, and blockchain configuration. This allows Platform Manager to delegate much of the components’ lifecycle management to the Kubernetes layer, fitting smoothly with how other Kubernetes services are provisioned and managed.

- Kubernetes “worker nodes” take over the role that VMs played in the previous OBP EE architecture. Users can easily scale the number of worker nodes in their cluster out (or in) by interacting with the Kubernetes control plane itself, instead of spinning up VM images and adjusting networking and Docker Swarm configuration so that the new VMs are considered part of the overall OBP EE cluster. Dealing with worker nodes rather than “raw VM” resources also enables the blockchain platform to leverage Kubernetes “self-healing”.

- Kubernetes is often referred to as “self-healing”: if worker nodes become unavailable, the Kubernetes “scheduler” tries to relocate the Pods that are no longer available to healthy worker nodes, and it does so transparently to the user thanks to its networking and storage abstraction layers.

While the VM-based Docker/Docker Swarm approach does allow service availability to be controlled and monitored to some extent (and OBP EE does take advantage of that), that environment has no equivalent to the more sophisticated capabilities that Kubernetes offers.

- Networking access relies on a Kubernetes Ingress Gateway; OBP EE supports Istio in that role and manages its configuration as part of the Platform Manager behavior.

In the previous OBP EE approach, networking access was handled either by an internal, non-HA (Highly Available) load balancer (suggested only for development setups), or by an external load balancer that the user had to configure manually to perform TLS termination and then direct traffic to the various OBP EE components on their specific port numbers. While that mechanism is still flexible and secure when properly configured, managing such a load balancer becomes a challenge when the user needs to scale the number of running OBP EE components (Peers, Ordering Nodes) out or in.

- Traffic routing for OBP EE components in the Kubernetes cluster is achieved through SNI (Server Name Indication); this allows users to ignore the underlying networking details of each OBP EE component (port numbers, for example) and instead address them by “name”. Client applications accessing OBP EE components would therefore use names like “peer1.myblockchain.mycompany.com” or “osn2.myblockchain.mycompany.com”.

In the previous OBP EE approach, SNI is not used; all OBP EE components are still individually reachable, but they are exposed through the same name, and it is their port number that controls proper routing. For the simple example above, users of the previous version of OBP EE would need to identify “peer1” and “osn2” as “myblockchain.mycompany.com:<port1>” and “myblockchain.mycompany.com:<port2>”. Although that is ultimately the default approach in most Hyperledger Fabric deployments, it not only makes things more complex to manage for client applications, but also makes internal networking details a “must know” for any component higher up in the networking chain, such as the external load balancer mentioned above.

- All networking connections, internal and external, are TLS-secured. TLS termination is performed by the OBP EE service Pods themselves, either as part of their core behavior or by the Envoy component injected into them.

In the previous OBP EE approach, TLS was terminated at the internal load balancer level. Direct communication between the individual components of an OBP EE instance would in most scenarios happen over non-TLS channels. That is still a perfectly safe and widely adopted deployment pattern, since such internal traffic is not accessible to malicious agents when the VMs are properly configured behind firewalls and security groups, and since it relies on message signature authentication. But in contexts with stringent security requirements, it is often preferred that all networking traffic, including internal traffic, is TLS-secured.

- Kubernetes provides a flexible model for associating storage with Pods. OBP EE containers deployed as Kubernetes StatefulSets rely on Persistent Volume Claims (PVCs) and Persistent Volumes (PVs) to persist their state. Unlike the previous OBP EE approach, Pod-level PVs allow fine-grained management of the storage associated with each OBP EE component, instead of relying on an overall shared capacity at the VM level.

- In addition to supporting the automatic deployment of smart contracts as part of the same Kubernetes cluster where the other components are deployed, the new version of OBP EE also exposes Hyperledger Fabric’s support to run chaincodes as external services. That allows users to manage chaincode runtimes independently from peers, to deploy them as clustered services for additional High Availability and to run them on a different Kubernetes cluster and/or infrastructure if so desired.
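To make the difference between name-based (SNI) and port-based addressing from the comparison above more concrete, here is a minimal Python sketch. It is only an illustration and not part of OBP EE: the `Endpoint` class, the helper functions, the gateway port 443, and the legacy port numbers are all assumptions made for the example; only the host names come from the text above.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Endpoint:
    host: str
    port: int

    def address(self) -> str:
        return f"{self.host}:{self.port}"


# Assumption: the ingress gateway listens on a single port (443 here);
# the actual port depends on how the gateway is configured.
GATEWAY_PORT = 443


def sni_endpoint(component: str, domain: str, gateway_port: int = GATEWAY_PORT) -> Endpoint:
    """Kubernetes-based approach: one host name per component, one shared port."""
    return Endpoint(f"{component}.{domain}", gateway_port)


def port_endpoint(component: str, domain: str, port_map: Mapping[str, int]) -> Endpoint:
    """Previous approach: one shared host name, one port per component."""
    return Endpoint(domain, port_map[component])


domain = "myblockchain.mycompany.com"
legacy_ports = {"peer1": 10001, "osn2": 10002}  # hypothetical port numbers

for name in ("peer1", "osn2"):
    print(sni_endpoint(name, domain).address(),
          "vs",
          port_endpoint(name, domain, legacy_ports).address())
```

With name-based addressing, a client only needs the component name and the domain. With port-based addressing, every client (and any load balancer in front of the components) also has to carry a port map that changes whenever components are added or removed.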

Example Topology

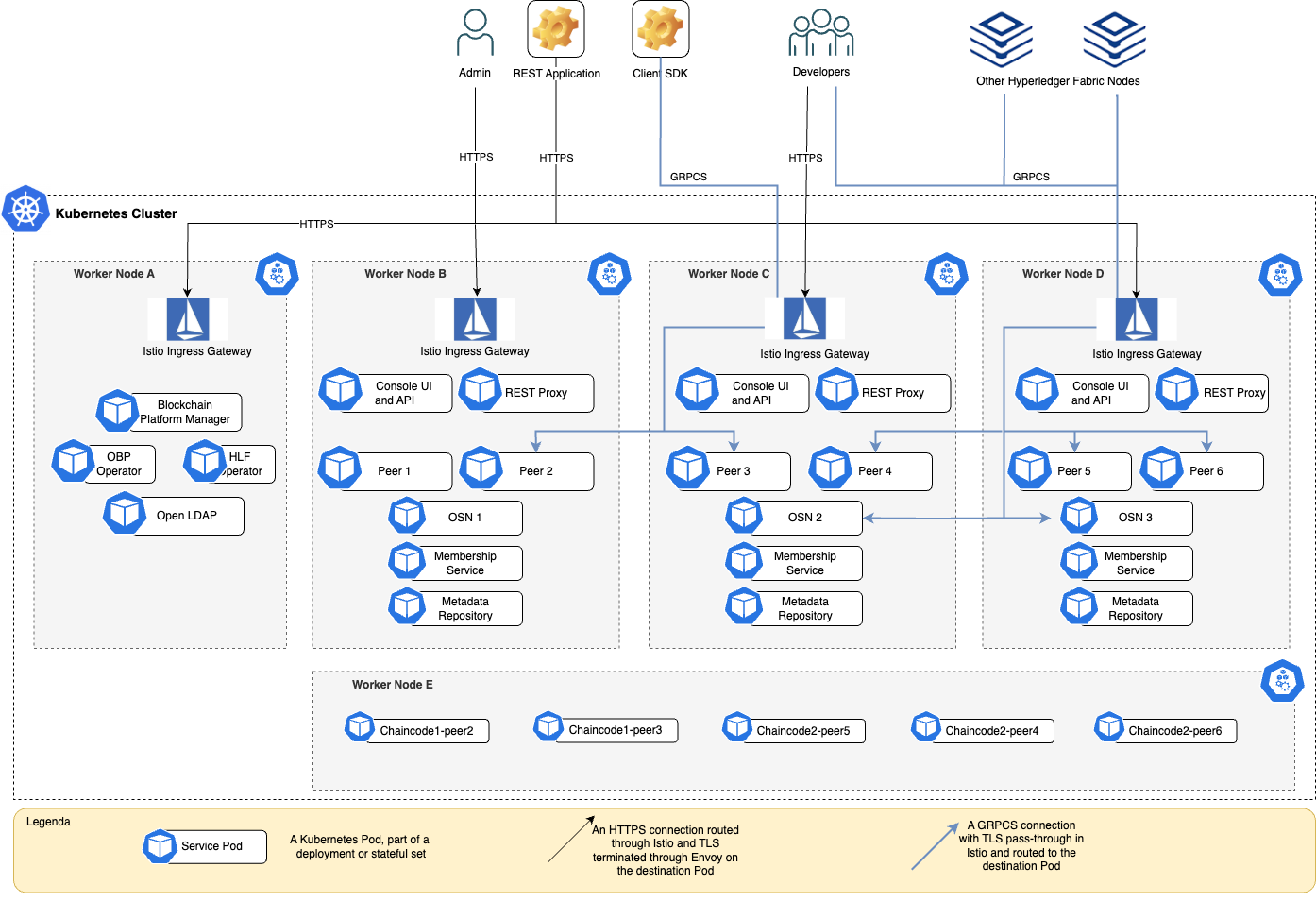

The diagram above describes an example topology of an OBP EE instance deployed on a Kubernetes cluster across five different worker nodes.

The topology shows how organizations typically place the OBP EE Platform Manager and its related components on a different worker node from the ones running the OBP EE instance or instances. The main services that make up an OBP EE instance are typically spread across at least three worker nodes, and it is not unusual for organizations to isolate deployed chaincodes on a separate worker node as well.
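The placement pattern described above can be written down as a small check. The Python sketch below is a hypothetical illustration: the node names, component names, and the `check_layout` helper are invented for the example and are not OBP EE tooling. It also treats chaincode isolation as a rule, although the text only describes it as a common preference.

```python
from typing import Dict, List, Set, Tuple

# node name -> list of (component name, kind);
# kind is one of "manager", "instance", or "chaincode"
Placement = Dict[str, List[Tuple[str, str]]]


def nodes_with(placement: Placement, kind: str) -> Set[str]:
    """Return the worker nodes hosting at least one component of the given kind."""
    return {
        node
        for node, components in placement.items()
        if any(component_kind == kind for _, component_kind in components)
    }


def check_layout(placement: Placement, min_instance_nodes: int = 3) -> List[str]:
    """Return the deviations from the placement pattern (empty list if none)."""
    manager = nodes_with(placement, "manager")
    instance = nodes_with(placement, "instance")
    chaincode = nodes_with(placement, "chaincode")

    problems = []
    if manager & instance:
        problems.append("Platform Manager shares a node with instance services")
    if len(instance) < min_instance_nodes:
        problems.append(
            f"instance services span {len(instance)} node(s), "
            f"expected at least {min_instance_nodes}"
        )
    if chaincode & instance:
        problems.append("chaincode shares a node with instance services")
    return problems


# Hypothetical five-node layout; all names are illustrative.
example: Placement = {
    "worker-1": [("platform-manager", "manager")],
    "worker-2": [("peer1", "instance"), ("osn1", "instance")],
    "worker-3": [("peer2", "instance"), ("osn2", "instance")],
    "worker-4": [("ca", "instance"), ("osn3", "instance")],
    "worker-5": [("my-chaincode", "chaincode")],
}

print(check_layout(example) or "layout follows the pattern")
```

In a real cluster, placement like this would normally be expressed through Kubernetes scheduling features rather than checked after the fact; the sketch only makes the three rules from the paragraph explicit.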

Of course, this is just one example of the many ways a Kubernetes OBP EE deployment and one of its instances may look. It is provided as a concrete illustration of how the services comprising OBP EE are typically deployed in an actual Kubernetes cluster. Once you have a running Kubernetes cluster with Istio and Blockchain Platform installed, you would use Blockchain Platform Manager to provision your blockchain instances and control their topology, which we will cover in the next post.

Summary

Kubernetes has become the de facto standard for container deployment and management of distributed services. That is even more true when dealing with microservices, and you can easily think of Oracle Blockchain Platform as a set of collaborating microservices that together expose a comprehensive permissioned blockchain solution.

Moving to a Kubernetes-based architecture is a natural evolution for OBP EE, and it enables OBP to take advantage of the power and flexibility offered by a variety of Kubernetes platforms. The platforms that work smoothly with OBP EE include Oracle Kubernetes Engine, Red Hat OpenShift, and Azure Kubernetes Service, as well as simpler, developer-oriented solutions like Minikube.

For more information about OBP EE and how to get started with it, please visit its documentation. Watch for the next post, which details how to configure and scale Oracle Blockchain Platform in Kubernetes.