Introduction

Oracle APEX 24.2 introduces AI Configurations and RAG Sources, allowing you to supplement Generative AI calls with your custom data. Commercial Large Language Models (LLMs) are trained on vast volumes of publicly available data and generate text for tasks such as answering questions, translations, and more. However, when used in chatbots, these LLMs might provide generic or inaccurate answers because they lack access to up-to-date, domain-specific, or proprietary information unique to an organisation. This is where the ability to supplement Generative AI calls with business data becomes highly relevant.

In Oracle APEX, AI Configurations can be used in dynamic actions like Show AI Assistant to create intelligent, context-aware chatbots, and in Generate Text with AI to enable text generation informed by database data. Additionally you can also use AI Configurations with APEX_AI PL/SQL API to help programmatically generate response based on business data.

Before diving into the specifics of this feature, let’s first understand what Retrieval-Augmented Generation is and explore its benefits.

What is Retrieval Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) can extend the capabilities of LLMs to specific domains or an organisation’s internal knowledge base. A RAG-enabled AI system can retrieve data from various sources based on the user prompt and use that information to enhance—or, in other words, augment—the prompt. This helps the LLM generate responses grounded in the business context.

RAG offers various benefits in terms of economics, accuracy, and the relevance of responses. Some of the key benefits include:

- Cost-effective: Businesses can use RAG to leverage existing pre-trained models to generate contextually aware responses, rather than training and fine-tuning a model from scratch, which incurs costs for extensive custom datasets and high computational resources. Additionally, combining RAG with AI Vector Search can optimize the retrieval of relevant information, reducing the input token lengths in Generative AI calls and ultimately leading to cost savings.

- Access to up-to-date information: RAG helps ensure that the LLM has access to up-to-date information by dynamically fetching relevant content based on the task, thereby preventing the model from relying solely on potentially outdated or generic training data.

- Domain-Specific Knowledge: RAG helps enable AI systems to provide contextually aware responses tailored to specific domains or an organisation’s internal knowledge, which is especially beneficial for specialised tasks.

Now that you understand how RAG can be helpful let’s see how you can leverage AI Configurations and RAG Sources in your APEX applications to reap these benefits.

Key Features of AI Configurations and RAG Sources

Some of the salient features of the AI Configurations and RAG sources are:

- AI Configurations as Shared Components: AI Configurations are available as Shared Components in APEX applications, allowing you to create them once and reuse them across application pages.

- Centralized Configuration: AI Configurations provide a centralised location to define your Generative AI settings, such as the Service, System Prompt, RAG Sources, and more, for specific use cases.

- Flexible RAG Source Creation: Optionally, you can create RAG Sources based on a SQL query or Function Body that retrieves data from the database and incorporates it into Generative AI requests.

- Conditional RAG Evaluation: RAG data sources in APEX can be evaluated conditionally for each call by leveraging server-side conditions. These conditions allow you to dynamically include relevant data in the system message. Additionally, APEX provides two specialized condition types: Any User Prompt contains and Last User Prompt contains. These enable you to conditionally execute a RAG source based on the content of the user’s prompt.

- Access to User Prompts: While computing RAG Sources or server-side conditions, you can access user prompts through the

APEX$AI_ALL_USER_PROMPTSandAPEX$AI_LAST_USER_PROMPTCLOB bind variables. - Integration with APEX_AI PL/SQL API : AI Configurations can be used with the APEX_AI PL/SQL API to programmatically generate responses.

Demo Use Case and Dataset Used

To help you understand how to use AI Configurations and RAG Sources, this blog walks you through the process of building a simple conversational chat user interface in the Highschools App using the New York High Schools Dataset. This chat assistant allows parents to inquire about school facilities, policies, and more, aiding them in making an informed decision about whether a specific school is an ideal fit for their child.

Here’s a sneak peek of RAG powered AI Assistant in the Highschools application:

Step-by-Step Implementation

Broadly, creating a RAG enabled AI Assistant in an APEX Application involves four simple steps:

- Configure the Generative AI service

- Create an AI Configuration

- Configure a RAG Source for the AI Configuration

- Create the Show AI Assistant Dynamic Action

And here’s the best part—a Bonus Tip awaits you at the end to help you minimise AI token usage and cut down on costs.

Let’s get down to business!

1. Configure the Generative AI service

At the heart of this solution is a large language model that generates responses to user questions. Oracle APEX enables seamless integration with popular AI Providers through Generative AI Services, which can be used across applications for language-related tasks like answering questions, summarising content or generating text.

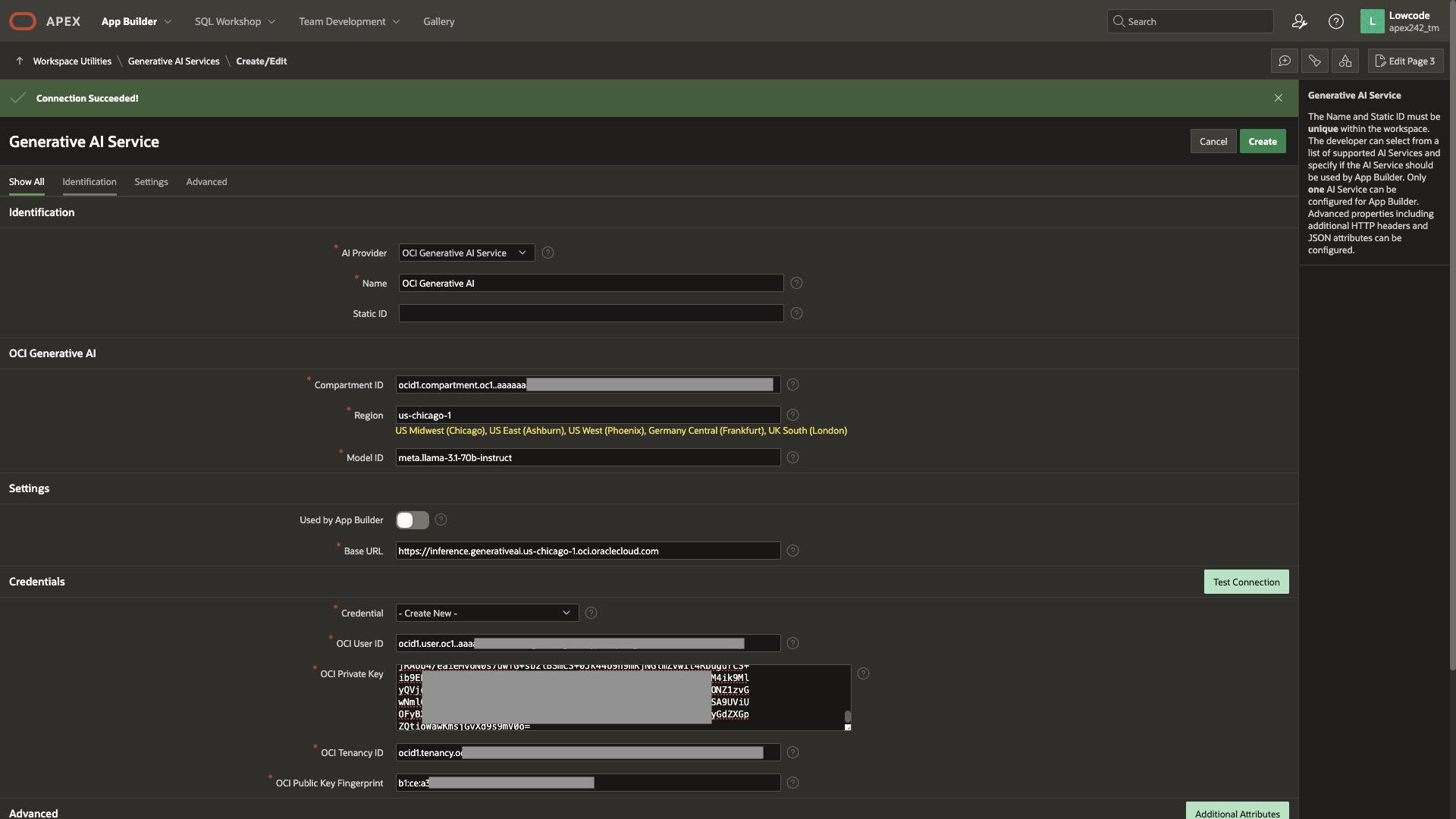

Navigate to Workspace Utilities > Generative AI to create a Generative AI Service. In this demo, we will use OCI Generative AI service as the AI Provider. Create a Generative AI Service with the following configuration:

- AI Provider: OCI Generative AI Service

- Name: OCI Generative AI

- Under the OCI Generative AI Section, configure details about the OCI Generative AI service like the Compartment ID, Region, and Model ID. In this demo, we will use the meta.llama-3.1-70b-instruct. You can choose your preferred pre-trained chat model based on the availability in your OCI Region. See the list of models here: https://docs.oracle.com/en-us/iaas/Content/generative-ai/pretrained-models.htm#pretrained-models

- Create an OCI API Key on your OCI Console and configure the API Key details under the Credentials section.

- Click Test Connection to verify if all the details provided are correct and APEX can establish a successful connection with the OCI Generative AI Service in the specified OCI compartment and the OCI Region.

- Click Create.

2. Create an AI Configuration

After configuring the Generative AI Service, the next step is to create an AI Configuration. The Show AI Assistant Dynamic Action utilizes this configuration to retrieve the Generative AI settings required for the AI Assistant’s functionality.

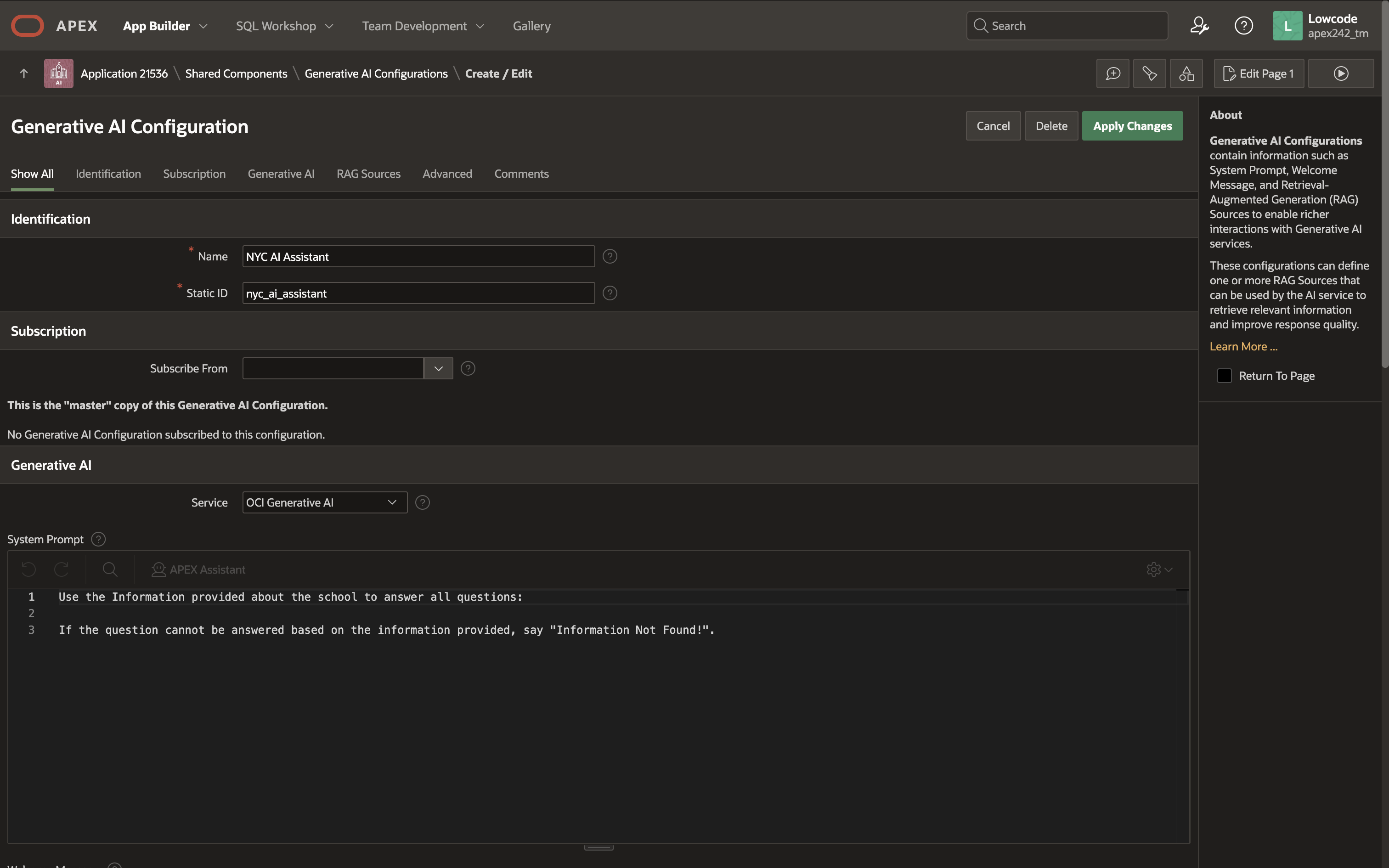

To create an AI Configuration, navigate to Highschools Application Home Page > Shared Components > AI Configurations. Create an AI Configuration with the following configuration:

- Name: NYC AI Assistant

- Under Generative AI, fill in the following attributes:

- Service: From the drop-down, select the Generative AI Service created above: OCI Generative AI.

- System Prompt:

Use the Information provided about the school to answer all questions: If the question cannot be answered based on the information provided, say "Sorry, I can't provide an answer to that question based on the information I have."

- Welcome Message:

Welcome! What would you like to know about this high school?

- Click Create

3. Configure a RAG Source in the AI Configuration

The AI Configuration created above includes details such as the Generative AI Service to be used, a generic system prompt, and a welcome message. To ground the AI Assistant in domain-specific information, you can add RAG sources. These sources dynamically retrieve relevant data from the database, which is then included as part of the system message.

In this demo, the RAG Source will fetch all available information from the database about a specific school the user is inquiring about.

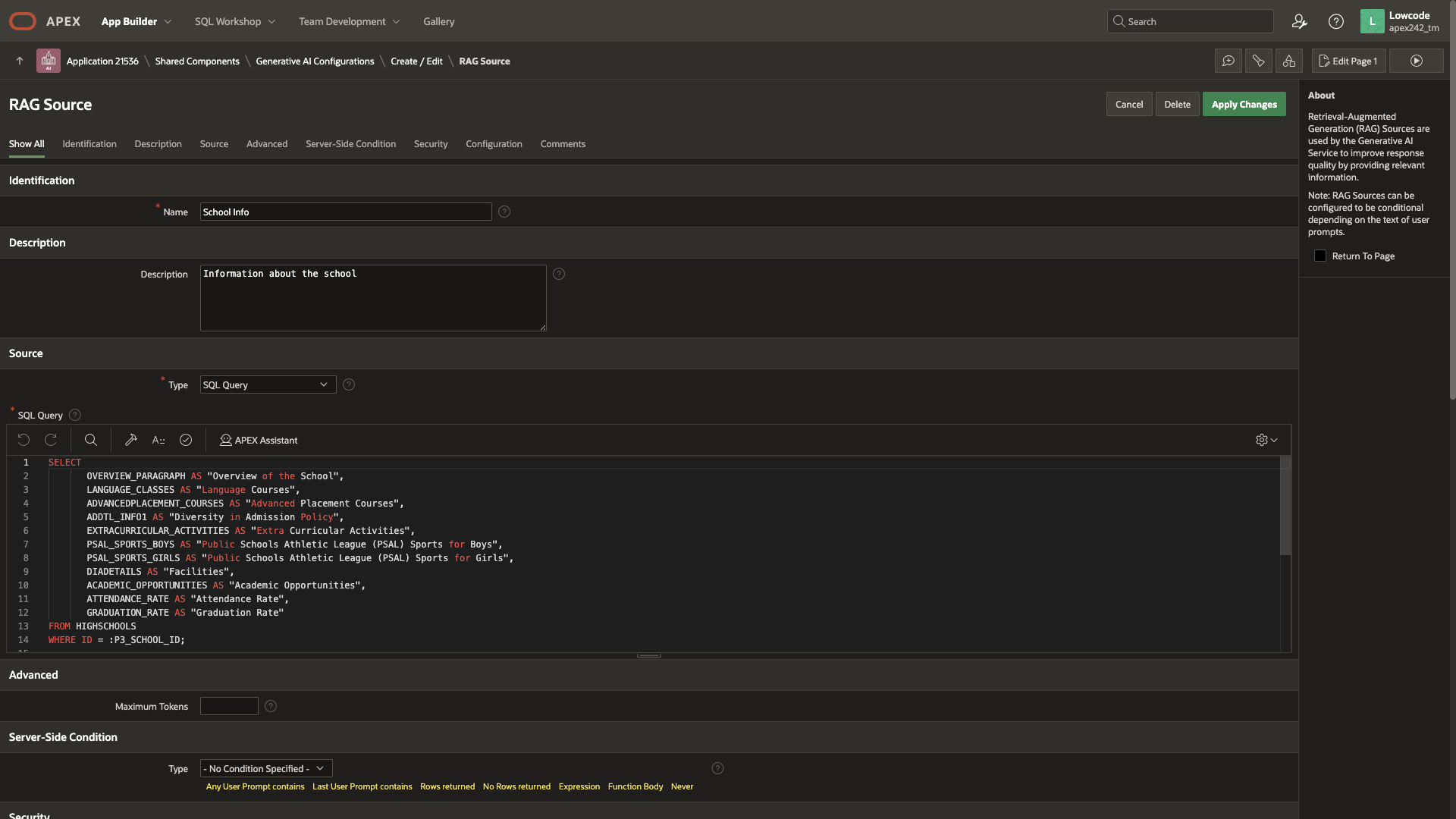

To create a RAG Source, navigate to the newly created AI Configuration. Under the RAG Sources section, click Create RAG Source.

- Name : School Info

- Description : Information about the school

- Source Type : SQL Query

- SQL Query :

SELECT OVERVIEW_PARAGRAPH AS "Overview of the School", LANGUAGE_CLASSES AS "Language Courses", ADVANCEDPLACEMENT_COURSES AS "Advanced Placement Courses", ADDTL_INFO1 AS "Diversity in Admission Policy", EXTRACURRICULAR_ACTIVITIES AS "Extra Curricular Activities", PSAL_SPORTS_BOYS AS "Public Schools Athletic League (PSAL) Sports for Boys", PSAL_SPORTS_GIRLS AS "Public Schools Athletic League (PSAL) Sports for Girls", DIADETAILS AS "Facilities", ACADEMIC_OPPORTUNITIES AS "Academic Opportunities", ATTENDANCE_RATE AS "Attendance Rate", GRADUATION_RATE AS "Graduation Rate" FROM HIGHSCHOOLS WHERE ID = :P3_SCHOOL_ID;The above SQL query retrieves all relevant information about a school from the HIGHSCHOOLS table, based on the school ID stored in the P3_SCHOOL_ID page item. Descriptive column aliases are used for each column, providing additional context about the type of data each column contains.

- Click Create

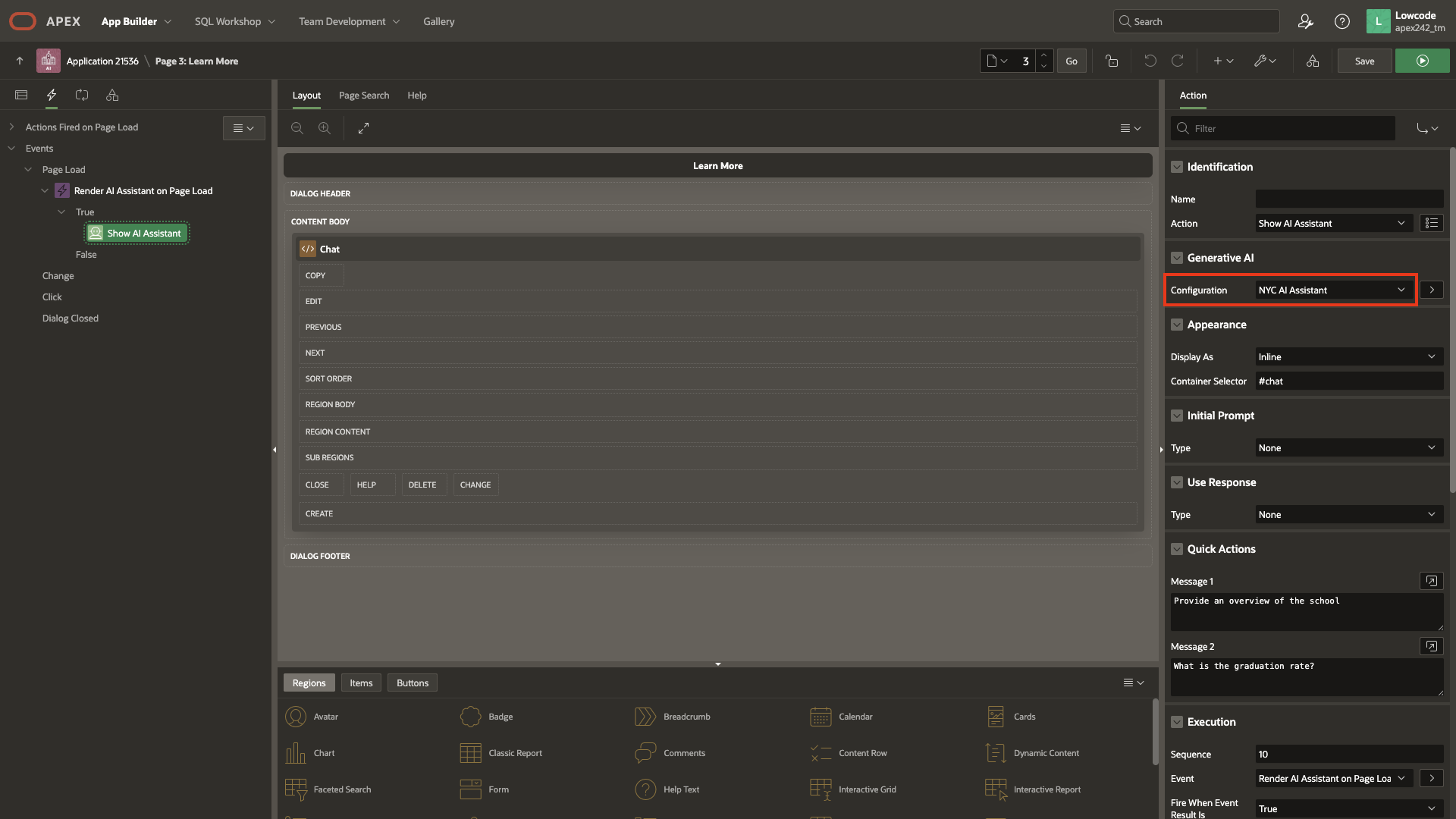

4. Create the Show AI Assistant Dynamic Action

The final step is to render an AI Assistant (chat user interface) within the NYC Schools Application. This AI Assistant will be implemented on the Learn More modal dialog page, which opens when the user clicks the Learn More action in the cards region. The Learn More action is configured to navigate to the modal dialog page (Page 3) and pass the ID of the selected school to the P3_SCHOOL_ID page item. This page item is used in the RAG Sources to retrieve relevant information about the school from the database.

To render the AI Assistant when the Learn More page loads, follow these steps:

-

Create a Dynamic Action on Page Load:

- Action: Show AI Assistant

- Configuration: Select the previously created AI Configuration, e.g., NYC AI Assistant.

- Under Appearance:

- Display As : Inline

- Container Selector : #chat (This directs the dynamic action to render the AI Assistant within the container with

id="chat")

- Create a region and set its Static ID attribute to

chat

That’s it! You now have an AI Assistant Chatbot with RAG capabilities integrated into the Highschools App.

Bonus Tip – Integrate RAG Sources with Oracle Vector Search for Efficient Data Retrieval

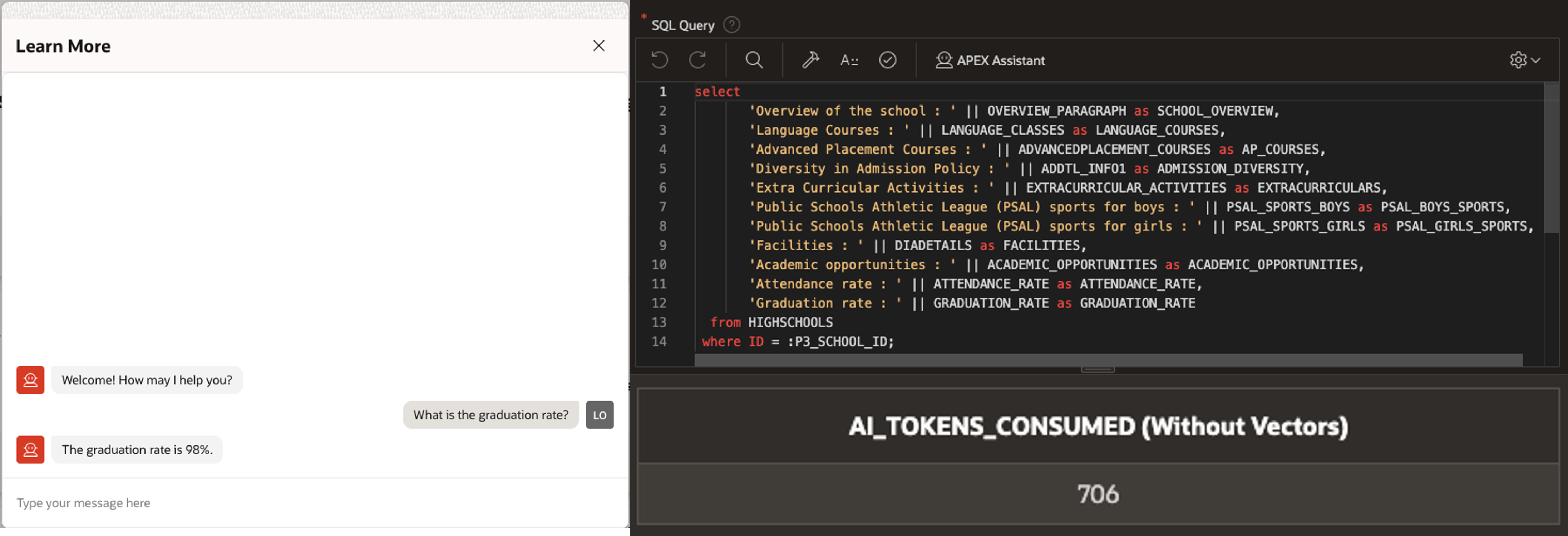

The SQL query used in the RAG Sources retrieves all available information about a given school. For instance, if you ask a question about the graduation rate, the RAG Source also includes details about the attendance rate, language courses, and more. In this example, the data for a single school isn’t excessively large. However, in real-world scenarios, business data can span multiple pages of extensive documents.

Including all this data in the system message could exceed the context window limits imposed by AI providers. Therefore, it becomes essential to implement a mechanism that retrieves only the most relevant information based on the user’s prompt.

This is where Oracle AI Vector Search in Oracle Database 23ai comes into play, providing an efficient way to fetch and utilize contextually relevant data. You can learn more on how to enable your APEX workspace to use Oracle AI Vector Search in this blog post.

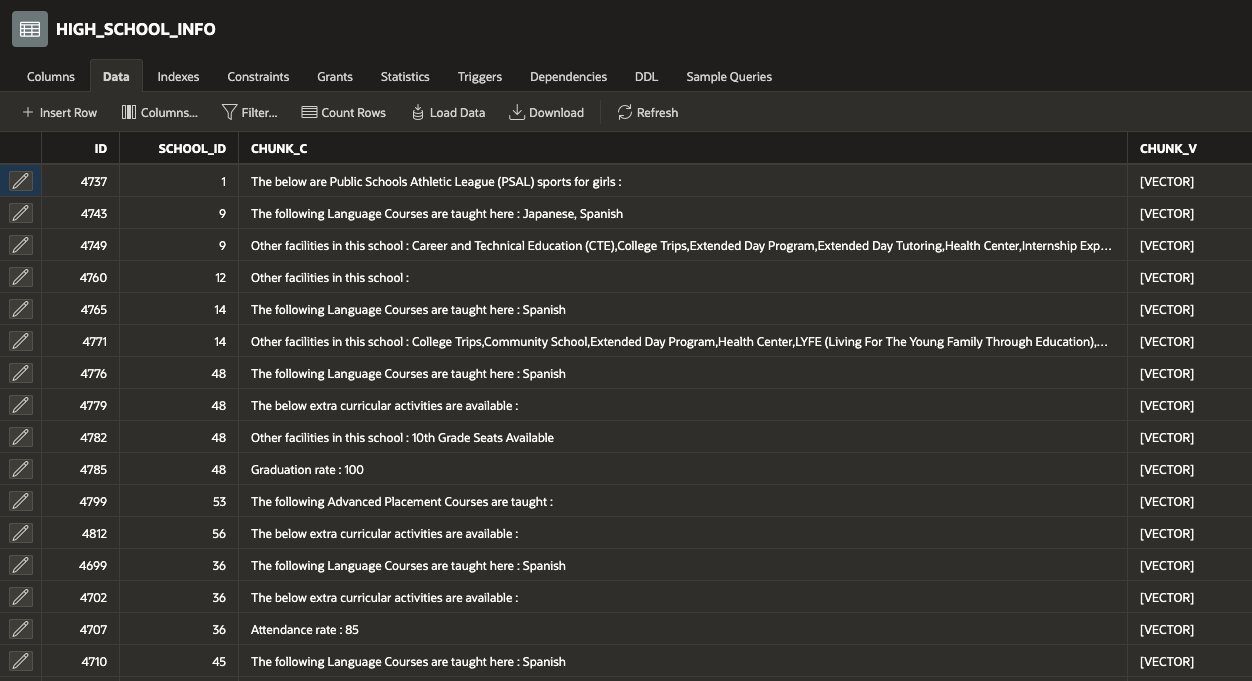

To implement AI Vector Search on the Highschool data, we start by creating an HIGH_SCHOOL_INFO table. This table stores chunks of meaningful information about schools, with each row representing a distinct data point for a school. Each chunk is then converted into a vector and stored in the same table.

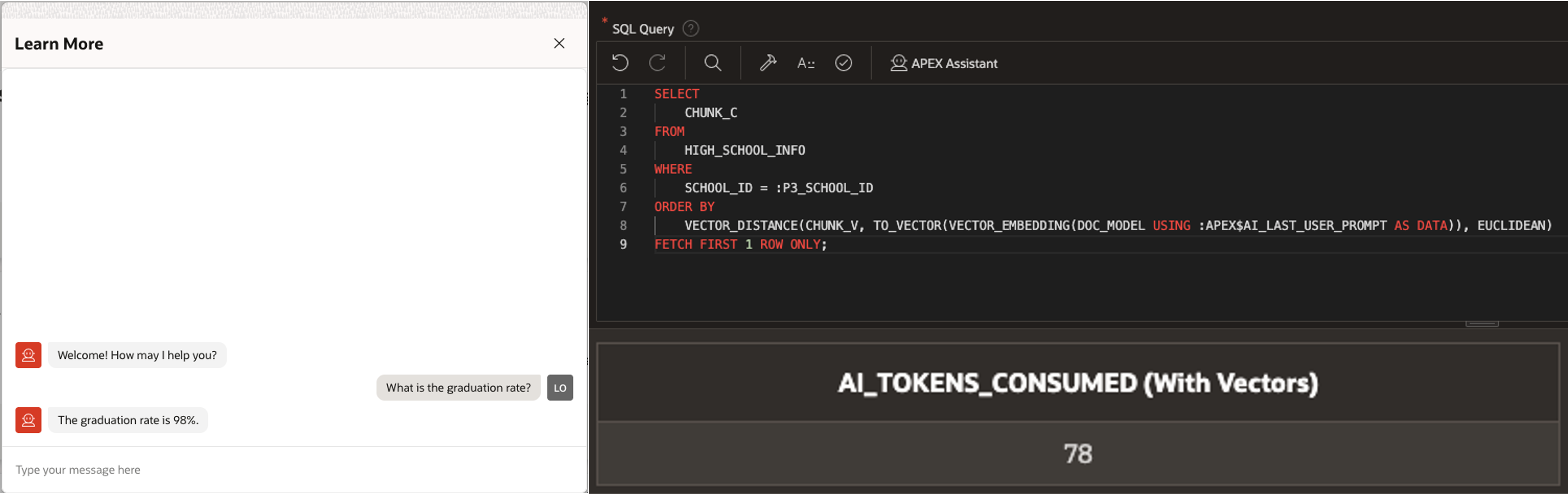

The RAG Source can now query the HIGH_SCHOOL_INFO table and perform a vector distance calculation between each chunk of information about a given school and the input user prompt :APEX$AI_LAST_USER_PROMPT

SELECT

CHUNK_C

FROM

HIGH_SCHOOL_INFO

WHERE

SCHOOL_ID = :P3_SCHOOL_ID

ORDER BY

VECTOR_DISTANCE(CHUNK_V, TO_VECTOR(VECTOR_EMBEDDING(DOC_MODEL USING :APEX$AI_LAST_USER_PROMPT AS DATA)), EUCLIDEAN)

FETCH FIRST 1 ROW ONLY;

To compare the vector implementation with the non-vector implementation, you can query the APEX_WEBSERVICE_LOG view and examine the AI_TOKENS_CONSUMED column. In the Highschools AI Assistant, for the same question — “What is the graduation rate?” — the approximate token count for the non-vector RAG Source is 706, whereas with the vector implementation, the token count is reduced to just 78.

Use AI Configurations Programmatically with APEX_AI PL/SQL API

You can programmatically generate responses by passing the static ID of an AI Configuration to the APEX_AI PL/SQL API. This is useful for generating AI responses in APEX Automations, Workflow Activities, or any custom PL/SQL logic.

DECLARE

l_messages apex_ai.t_chat_messages := apex_ai.c_chat_messages;

l_response clob;

BEGIN

:P2_RESPONSE := apex_ai.chat(

p_config_static_id => 'nyc_ai_assistant',

p_prompt => 'What is the graduation rate?',

p_messages => l_messages);

END;

Note: Since AI Configurations are part of an application, the above code can only be executed within an active APEX application session.

Conclusion

The Highschools App example demonstrates how to implement a conversational Q&A chat user interface which generates responses based on data stored in the database. Although the dataset in this example may not reflect the scale of the real-world scenarios, the same concept can be extrapolated and applied to the business use cases, within the limitation of the LLMs of course, where the data required to accurately answer the question is extensive. With AI Configurations and RAG Sources, Oracle APEX presents a declarative approach to creating data-centric AI Assistants equipped with RAG capabilities. This means low-code developers are no longer just app builders—they are AI developers too.