AI Quick Actions is a feature in Oracle Cloud Infrastructure (OCI) Data Science that allows customers to deploy, fine-tune, and evaluate foundation models with a few clicks. In the first release of AI Quick Actions in April, we provided a list of service-curated foundation models that customers can use, including Mistral-7B and Falcon-7B. The low-code environment of AI Quick Actions allows a broad spectrum of users to harness the power of these foundation models.

In the latest release of AI Quick Actions, we’re adding support for bringing your own model into AI Quick Actions through OCI Object Storage. This feature opens up a much larger selection of models that customers can use in AI Quick Actions.

Bringing your own model into AI Quick Actions requires two main steps. First, you must download the model artifacts and upload them to an OCI Object Storage bucket. After that, you must register the model inside AI Quick Actions. In this post, we go through both steps.

Downloading model artifacts and uploading to OCI Object Storage

The first step to bring your model to AI Quick Actions is downloading the model artifacts for the model you want to use. One way to do so is by going to the Hugging Face website, an AI model repository, and downloading the model artifacts. Then, upload your model artifacts to a versioned bucket in OCI Object Storage. If you don’t have a versioned Object Storage bucket, you can create one in the Oracle Cloud Console or with the OCI software development kit (SDK). In addition, configure the policies to allow the Data Science service to read from and write to the Object Storage bucket.
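As a rough illustration of the bucket setup described above, the following Python sketch creates a versioned bucket with the OCI Python SDK and renders one policy statement. It assumes you run it inside a Data Science notebook session where resource principal authentication is available. The helper names (`versioned_bucket_details`, `bucket_policy_statement`, `create_versioned_bucket`) are hypothetical, and the policy wording is an assumed form that you should check against the AI Quick Actions policy documentation before using it.

```python
from typing import Any, Dict


def versioned_bucket_details(bucket_name: str, compartment_id: str) -> Dict[str, Any]:
    """Keyword arguments for a bucket with object versioning turned on."""
    return {
        "name": bucket_name,
        "compartment_id": compartment_id,
        "versioning": "Enabled",  # the post asks for a versioned bucket
    }


def bucket_policy_statement(dynamic_group: str, compartment: str, bucket_name: str) -> str:
    """Render one IAM policy statement (assumed form; verify against the docs)."""
    return (
        f"Allow dynamic-group {dynamic_group} to manage object-family "
        f"in compartment {compartment} "
        f"where any {{target.bucket.name='{bucket_name}'}}"
    )


def create_versioned_bucket(bucket_name: str, compartment_id: str):
    """Create the bucket with the OCI Python SDK using resource principal auth."""
    import oci  # imported lazily so the helpers above work without the SDK

    signer = oci.auth.signers.get_resource_principals_signer()
    client = oci.object_storage.ObjectStorageClient(config={}, signer=signer)
    namespace = client.get_namespace().data
    details = oci.object_storage.models.CreateBucketDetails(
        **versioned_bucket_details(bucket_name, compartment_id)
    )
    return client.create_bucket(namespace, details).data
```

Calling `create_versioned_bucket("<bucket_name>", "<compartment_ocid>")` would create the bucket in the tenancy’s namespace. The policy string is only rendered here; it still has to be added to a policy by an administrator.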

Let’s take the example of using the Meta-Llama-3-8B-Instruct model in AI Quick Actions. Meta-Llama-3-8B-Instruct is a gated model on Hugging Face, so you must first get access to the model on the Hugging Face website and have a valid Hugging Face token. If you don’t have a Hugging Face token, you can follow this guide to generate one. You can then use the Hugging Face CLI to download the model and upload it to OCI Object Storage. The sample code below can be used in a Data Science notebook to download the Meta-Llama-3-8B-Instruct model and upload it to an Object Storage bucket. Replace the placeholder values with your own.

    # Log in to Hugging Face with your access token
    HUGGINGFACE_TOKEN = "<HUGGINGFACE_TOKEN>"  # Your Hugging Face token
    !huggingface-cli login --token $HUGGINGFACE_TOKEN

    # Download the Llama 3 model from Hugging Face to a local folder.
    !huggingface-cli download meta-llama/Meta-Llama-3-8B-Instruct --local-dir meta-llama-3-8b
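Before uploading, it can help to confirm that the download actually produced usable artifacts, because a failed login to a gated model can leave the folder empty or incomplete. The following is a minimal sketch of such a check. It assumes that the folder should contain a `config.json` file and at least one `*.safetensors` or `*.bin` weight file, which is typical for Hugging Face model repositories but not guaranteed for every model. The function name `missing_artifacts` is hypothetical.

```python
from pathlib import Path
from typing import List

# Assumed typical weight file extensions for Hugging Face model repositories.
WEIGHT_PATTERNS = ("*.safetensors", "*.bin")


def missing_artifacts(local_dir: str) -> List[str]:
    """Return a list of problems found in a downloaded model folder."""
    root = Path(local_dir)
    if not root.is_dir():
        return [f"{local_dir} is not a directory"]

    problems = []
    if not (root / "config.json").is_file():
        problems.append("config.json is missing")

    # At least one file matching any of the weight patterns must exist.
    has_weights = any(
        next(root.glob(pattern), None) is not None for pattern in WEIGHT_PATTERNS
    )
    if not has_weights:
        problems.append("no weight files (*.safetensors or *.bin) found")
    return problems


if __name__ == "__main__":
    # "meta-llama-3-8b" is the local folder used by the download command.
    for problem in missing_artifacts("meta-llama-3-8b"):
        print("Problem:", problem)
```

An empty list means the basic files are present; it does not verify that the weights themselves are complete or uncorrupted.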

To upload the model to OCI Object Storage, run the following command:

    local_dir = "meta-llama-3-8b"  # local folder that holds the downloaded artifacts
    model_prefix = "Meta-Llama-3-8B-Instruct/"  # "<bucket_prefix>"
    bucket = "<bucket_name>"  # this should be a versioned bucket
    namespace = "<bucket_namespace>"
    !oci os object bulk-upload --src-dir $local_dir --prefix $model_prefix -bn $bucket -ns $namespace --auth "resource_principal" --no-overwrite
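When you register the model later, you need to point AI Quick Actions at the location you just uploaded to. The sketch below builds that location from the same bucket, namespace, and prefix values. It assumes the `oci://<bucket>@<namespace>/<prefix>` URI convention used by Oracle’s ADS library; the registration form may instead ask for the bucket and prefix as separate fields. The function name `object_storage_uri` is hypothetical.

```python
def object_storage_uri(bucket: str, namespace: str, prefix: str = "") -> str:
    """Build an oci:// URI for a bucket location (assumed ADS-style convention)."""
    if not bucket or not namespace:
        raise ValueError("bucket and namespace are both required")

    # Normalize the prefix so the result always ends with exactly one slash.
    prefix = prefix.strip("/")
    base = f"oci://{bucket}@{namespace}/"
    return f"{base}{prefix}/" if prefix else base


if __name__ == "__main__":
    print(object_storage_uri("<bucket_name>", "<bucket_namespace>", "Meta-Llama-3-8B-Instruct/"))
```

Keeping the prefix normalized in one place avoids mismatches between the upload path and the path you enter during registration.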

Registering the model

Register service verified model

After you have uploaded your model artifacts to Object Storage, navigate to AI Quick Actions to register your model. For more information about getting access to AI Quick Actions in OCI Data Science, refer to our documentation.

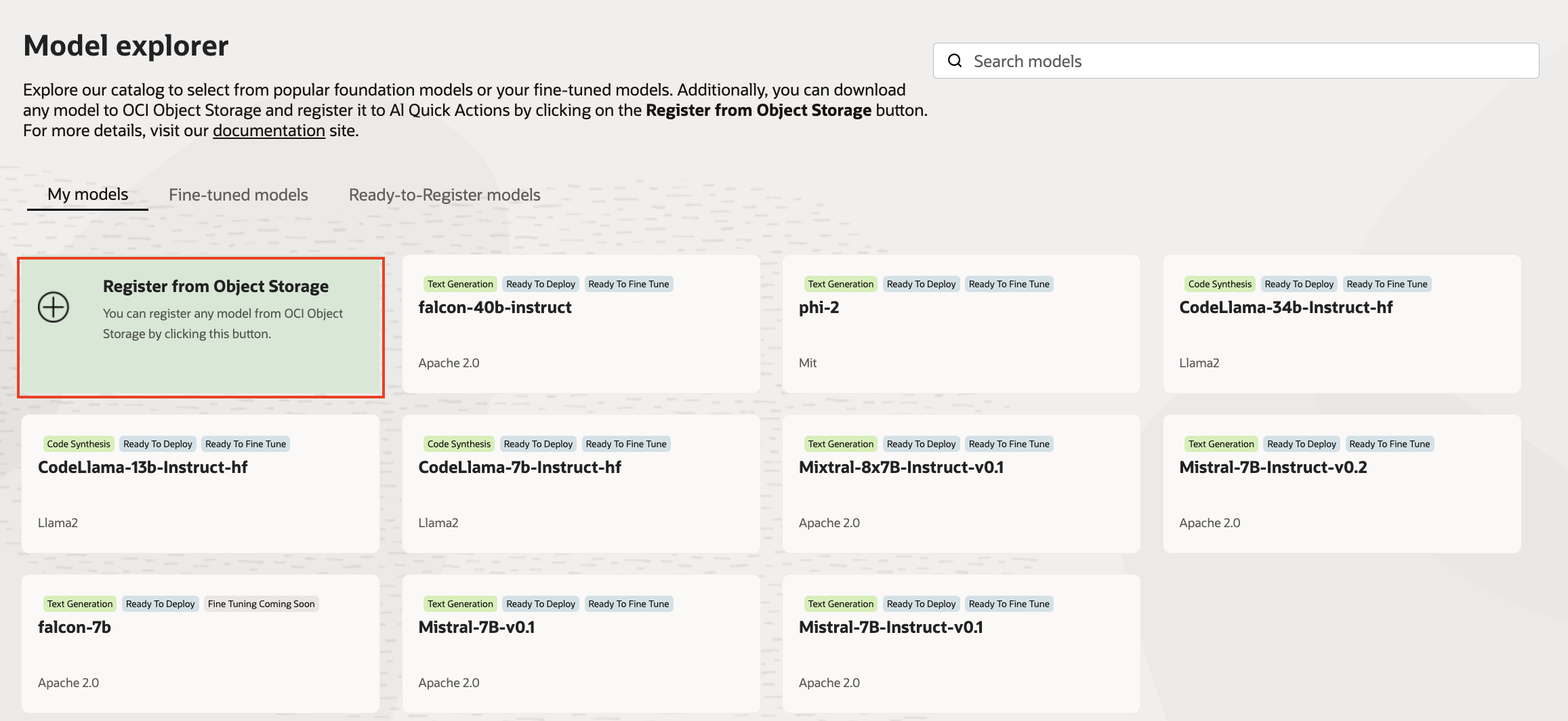

In AI Quick Actions, under Model explorer, there are three tabs: My models, Fine-tuned models, and Ready-to-Register models. When you select My models, a list shows the models curated by OCI Data Science and the models you have registered. To begin registering a model, select the Register from Object Storage button.

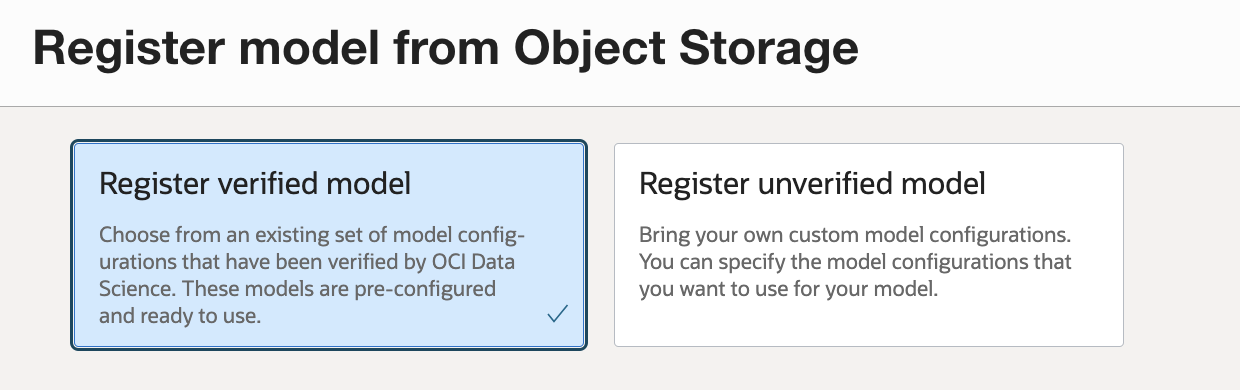

You can register either a verified model or an unverified model. Verified models are a set of models that OCI Data Science has tested and verified for deployment and fine-tuning. The difference between the service-curated models in the My models section and verified models is that, for a verified model, you must download the model artifacts to your Object Storage bucket and register the model before you can use it in AI Quick Actions. With a service-curated model, you don’t need to download the model artifacts.

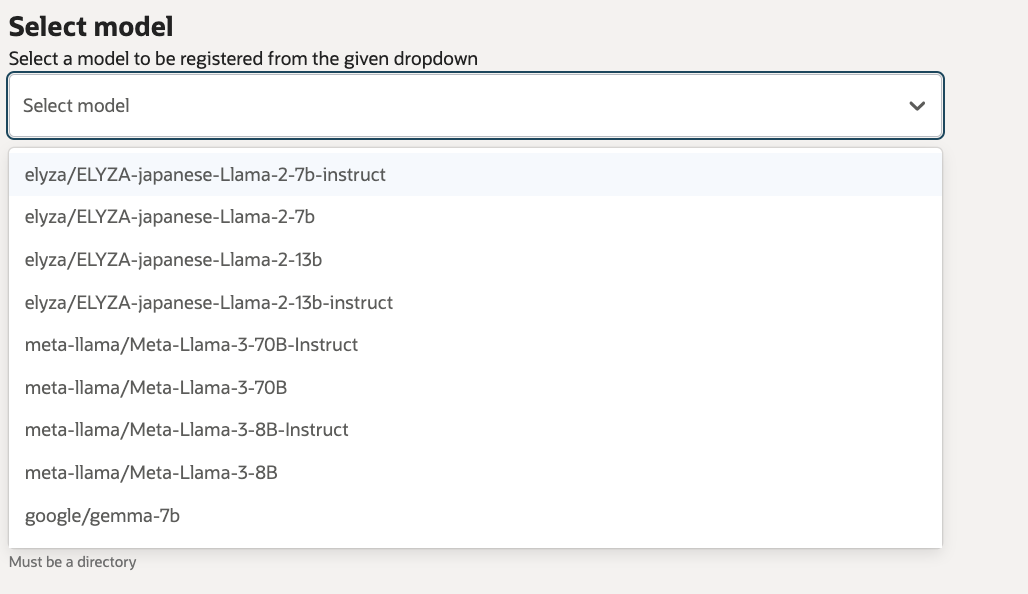

When you select Register verified model, you can use the Select model menu to see the list of models that have been verified by the service. The list includes the following verified models available in this release:

- meta-llama-3-8b

- meta-llama-3-8b-instruct

- meta-llama-3-70b

- meta-llama-3-70b-Instruct

- elyza/ELYZA-japanese-Llama-2-7b-instruct

- elyza/ELYZA-japanese-Llama-2-13b-instruct

- elyza/ELYZA-japanese-Llama-2-13b

- elyza/ELYZA-japanese-Llama-2-7b

- google/gemma-1.1-7b-it

- google/gemma-2b-it

- google/gemma-2b

- google/gemma-7b

- google/codegemma-1.1-2b

- google/codegemma-1.1-7b-it

- google/codegemma-7b

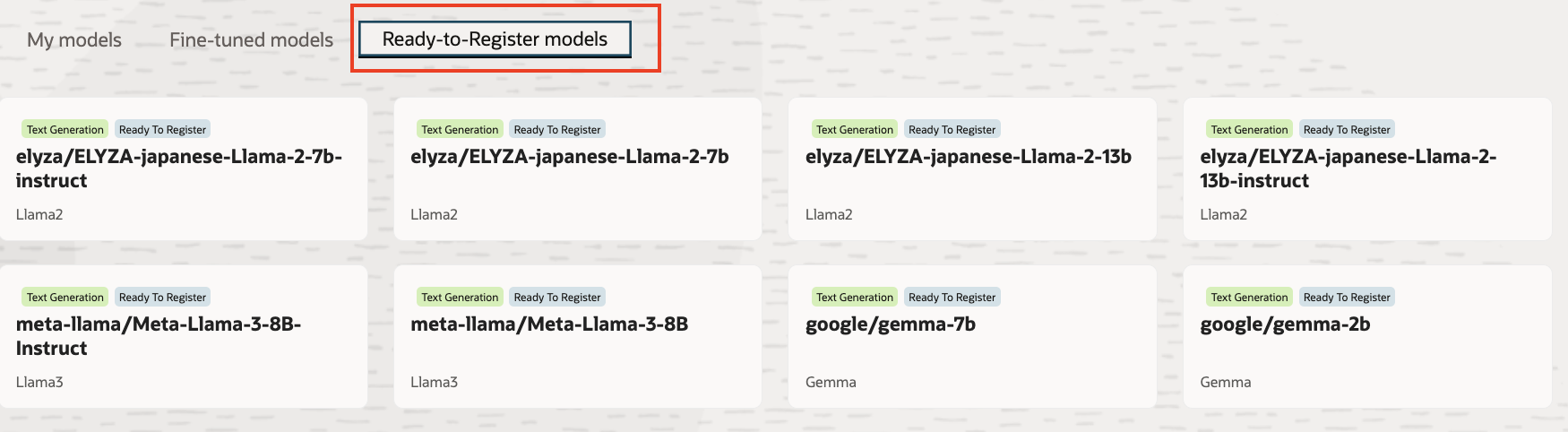

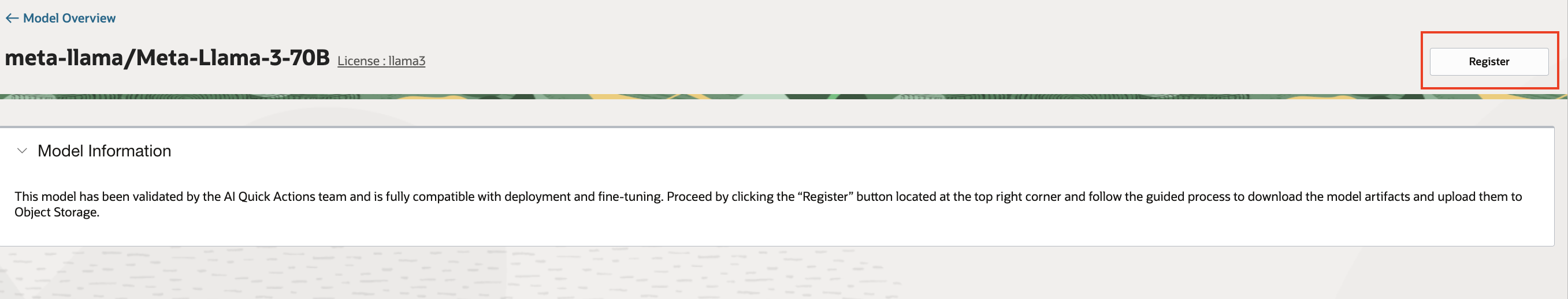

Another way to view the list of available verified models and register one is by selecting the Ready-to-Register models tab in the Model explorer. Each verified model has its own model card. By selecting the model card, you can get more information about the verified model. When you’re ready to register the verified model, select Register.

Register unverified model

Alternatively, you can register your own model that the Data Science service has not yet verified by selecting Register unverified model. You must provide a name for the model and the Object Storage bucket information where the model is stored. You must also choose the service-managed container for model deployment. In this release, we’re adding two inference containers: one for models compatible with the vLLM 0.4.1 inference engine and another for models compatible with TGI 2.0.1. You can optionally enable the model to be fine-tuned with the service-managed container after the model has been registered. The registration process can take several minutes to complete. After it finishes, the registered model appears under the My models tab.

Once a model, verified or unverified, has been registered, you can deploy, fine-tune, and evaluate it.

Additional advanced options

In addition to the bring-your-own-model feature, this release adds advanced fine-tuning options, including hyperparameters specific to the LoRA technique. It also adds vLLM and TGI startup parameters as advanced model deployment options.
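To give a feel for what LoRA hyperparameters control, the sketch below works through the standard LoRA arithmetic: a weight matrix of shape d_out x d_in is left frozen, and two small matrices of rank r are trained instead, with the update scaled by alpha / r. The function names and the 4096 x 4096 example layer are hypothetical, and the exact hyperparameter names exposed in AI Quick Actions may differ.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds for one d_out x d_in weight matrix.

    LoRA trains A (rank x d_in) and B (d_out x rank) instead of the full matrix.
    """
    return rank * (d_in + d_out)


def lora_scaling(alpha: float, rank: int) -> float:
    """Factor applied to the low-rank update B @ A before it is added to W."""
    return alpha / rank


if __name__ == "__main__":
    d_in = d_out = 4096  # hypothetical projection layer size
    full = d_in * d_out
    for rank in (8, 16, 64):
        added = lora_trainable_params(d_in, d_out, rank)
        print(f"rank={rank}: {added} trainable vs {full} frozen "
              f"({added / full:.2%}), scaling at alpha=16: {lora_scaling(16, rank)}")
```

A higher rank gives the adapter more capacity at the cost of more trainable parameters, while alpha changes how strongly the learned update influences the frozen weights.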

Conclusion

In this release of AI Quick Actions, we open the option for customers to bring their own model to use in AI Quick Actions through OCI Object Storage. This enhancement greatly expands the model selection available to customers in AI Quick Actions. Soon, we plan to support more verified models and allow customers to bring their own custom container. For more information on how to use AI Quick Actions, go to the Oracle Cloud Infrastructure Data Science YouTube playlist to see a demo video of AI Quick Actions, find our technical documentation, and see our GitHub repository with tips and examples.