OpenAI recently released two open weight models, gpt-oss-120b and gpt-oss-20b, its first open weight language models since GPT-2. OpenAI trained these models using a mix of reinforcement learning and techniques informed by its other internal models. According to OpenAI, gpt-oss-120b performs on par with o4-mini and gpt-oss-20b with o3-mini on core benchmarks, and both models perform strongly on tool use, few-shot function calling, chain-of-thought (CoT) reasoning, and HealthBench.

Here are the new OpenAI open weight models:

- gpt-oss-120b: designed for production, general-purpose, and high-reasoning use cases. The model has 117B total parameters, of which 5.1B are active per token.

- gpt-oss-20b: designed for lower-latency, local, or specialized use cases. The model has 21B total parameters, of which 3.6B are active per token.
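Both models use a mixture-of-experts (MoE) architecture, so only a fraction of the weights is used for each token. The short sketch below is plain arithmetic on the parameter counts listed above, computing that active fraction; it is a back-of-the-envelope illustration, not a measurement of runtime cost.

```python
# Published parameter counts, in billions: (total, active per token).
MODELS = {
    "gpt-oss-120b": (117.0, 5.1),
    "gpt-oss-20b": (21.0, 3.6),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Return the share of parameters that are active for each token."""
    return active_b / total_b


for name, (total_b, active_b) in MODELS.items():
    pct = 100 * active_fraction(total_b, active_b)
    print(f"{name}: {active_b:g}B of {total_b:g}B parameters active ({pct:.1f}%)")
```

This gives roughly 4.4% for gpt-oss-120b and 17.1% for gpt-oss-20b. A low active fraction keeps per-token compute small, but all of the weights still have to fit in GPU memory, which is why the larger model needs larger GPU shapes.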

In our previous blog, we described how customers can use a Bring Your Own Container approach to deploy and fine-tune these models in Oracle Cloud Infrastructure (OCI) Data Science. Both models are now available in OCI Data Science AI Quick Actions, a no-code interface for working with generative AI models. The models are cached in our service, so users can deploy and fine-tune them in AI Quick Actions without bringing in model artifacts from external sites.

Working with OpenAI open weight models in AI Quick Actions

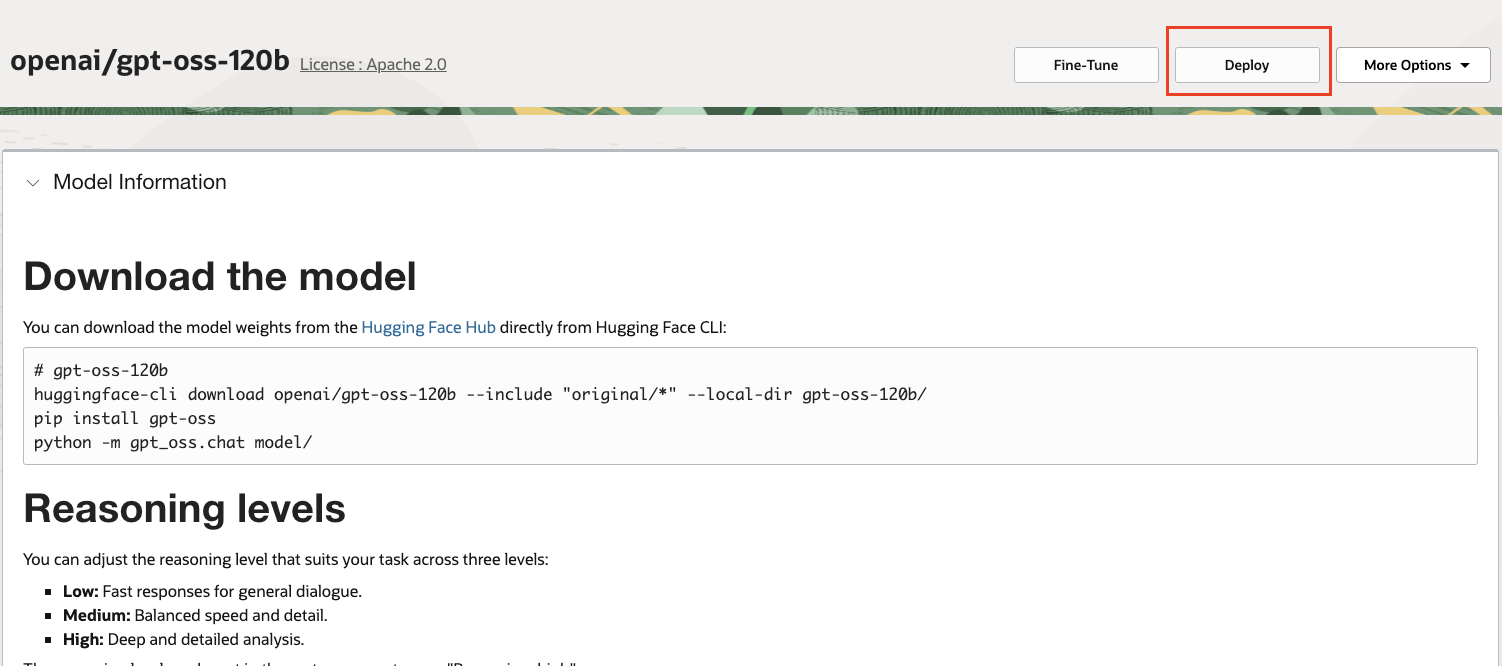

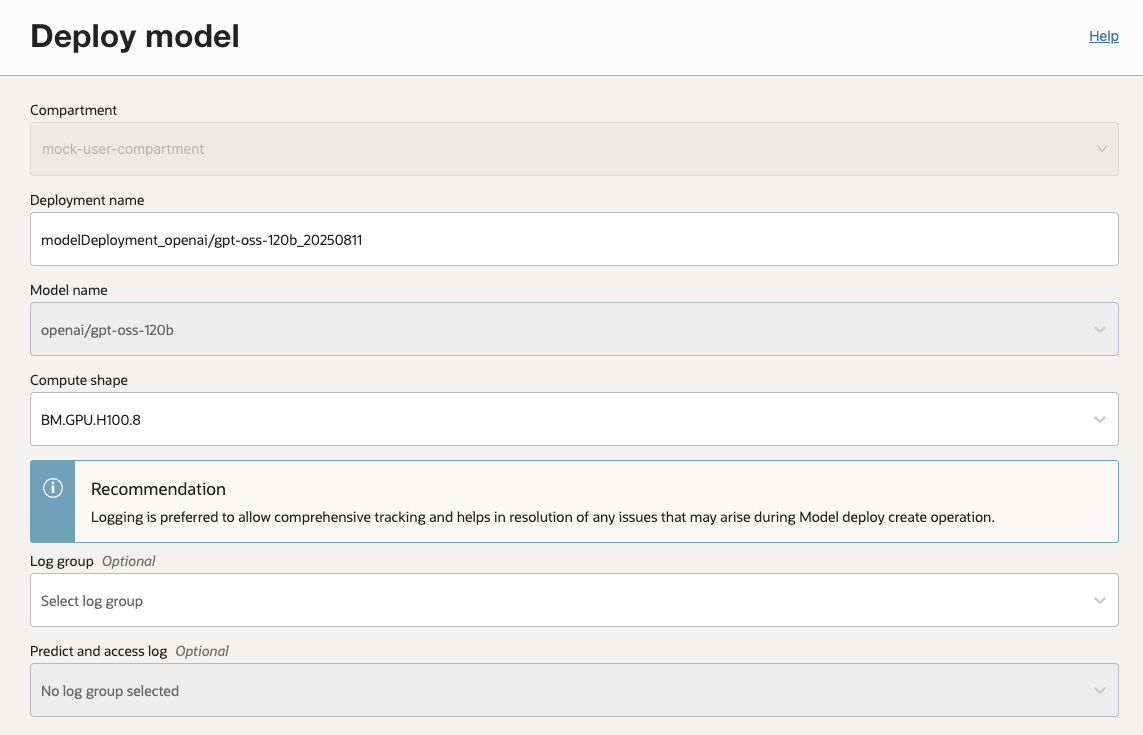

With AI Quick Actions, customers can use our service-managed container, which ships with the latest vLLM version and supports both models, so there is no need to build or bring your own container. To access the latest models, users need to deactivate and reactivate the notebook session they use with AI Quick Actions, or create a new notebook session.

When users first launch AI Quick Actions, they can find the model cards in the Model Explorer, as shown in figure 1. Clicking a model card shows the options to deploy or fine-tune the model, as shown in figure 2. To deploy the model, users choose the deployment shape and logging options, as shown in figure 3. OCI Data Science currently supports multiple GPUs, including A10, A100, H100, and H200. gpt-oss-120b can be deployed on A100, H100, and H200 shapes, while gpt-oss-20b can be deployed on an A10.4, A100, H100, or H200 shape.
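If you script your own checks around deployments, the shape guidance above fits in a small lookup table. This is a hypothetical helper, not part of AI Quick Actions or the ADS SDK; it encodes only the GPU options listed in this post, and the exact shape names available to you should be confirmed in the deployment dialog.

```python
# Hypothetical helper (not an ADS or AI Quick Actions API): GPU options
# listed in this post for each gpt-oss model.
SUPPORTED_GPUS = {
    "gpt-oss-120b": {"A100", "H100", "H200"},
    "gpt-oss-20b": {"A10.4", "A100", "H100", "H200"},
}


def can_deploy(model: str, gpu: str) -> bool:
    """Return True if `gpu` is listed as a supported option for `model`."""
    return gpu in SUPPORTED_GPUS.get(model, set())


def options_for(model: str) -> list[str]:
    """Return the listed GPU options for `model`, sorted for display."""
    return sorted(SUPPORTED_GPUS.get(model, set()))


print(options_for("gpt-oss-120b"))         # ['A100', 'H100', 'H200']
print(can_deploy("gpt-oss-20b", "A10.4"))   # True
print(can_deploy("gpt-oss-120b", "A10.4"))  # False
```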

Using AI Quick Actions to run inference on gpt-oss models

The example below shows how to send a chat completion request to a gpt-oss model. The same code should also work with any other open-source LLM deployed via AI Quick Actions. We use the ads.aqua.get_httpx_client() utility from the Oracle Accelerated Data Science (ADS) SDK so that the OpenAI client works seamlessly with OCI authentication and networking. Update the endpoint URL and authentication method to match your model deployment configuration.

```python
import ads
import ads.aqua
from openai import OpenAI

# Authenticate with OCI using your preferred method.
# Here we use "security_token", which must be configured in your environment.
# Alternatively, auth="resource_principal" can be used
# (for example, from an OCI Data Science notebook session).
ads.set_auth(auth="security_token")

# Replace <MD_OCID> with the OCID of your model deployment
# and <region> with its region (for example, us-ashburn-1).
ENDPOINT = "https://modeldeployment.<region>.oci.customer-oci.com/<MD_OCID>"

# Create an OpenAI client configured to call the OCI Model Deployment service.
# - api_key is a placeholder set to "OCI"; authentication is handled by http_client
# - base_url points to your deployment's predict endpoint
# - http_client is provided by ads.aqua to handle OCI request signing
client = OpenAI(
    api_key="OCI",
    base_url=f"{ENDPOINT}/predict/v1/",
    http_client=ads.aqua.get_httpx_client(),
)

# Send a chat completion request to the deployed model.
# The model name is "odsc-llm" for single-model deployments.
response = client.chat.completions.create(
    model="odsc-llm",
    messages=[
        {
            "role": "user",
            "content": "Who was the first president of the US?",
        }
    ],
)

# Print the raw response object from the model.
print(response)

# Print only the generated text.
print(response.choices[0].message.content)
```
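Under the hood, the OpenAI client appends `chat/completions` to `base_url` and sends a JSON body in the OpenAI Chat Completions format. The standard-library sketch below shows that request shape and how to pull the reply text out of a response, which can help when debugging with other HTTP tools. The `build_endpoint`, `build_chat_request`, and `extract_reply` helpers are hypothetical, the `modeldeployment.<region>.oci.customer-oci.com` host pattern is an assumption to check against the endpoint shown for your deployment, and the sketch does not sign requests (that is what `ads.aqua.get_httpx_client()` handles in the example above).

```python
import json


def build_endpoint(region: str, md_ocid: str) -> str:
    """Hypothetical helper: assumed OCI Model Deployment URL pattern."""
    return f"https://modeldeployment.{region}.oci.customer-oci.com/{md_ocid}"


def build_chat_request(endpoint: str, prompt: str, model: str = "odsc-llm",
                       max_tokens: int = 256) -> tuple[str, str]:
    """Return the URL and JSON body for an OpenAI-style chat completion call."""
    url = f"{endpoint.rstrip('/')}/predict/v1/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return url, json.dumps(body)


def extract_reply(response_json: str) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    data = json.loads(response_json)
    return data["choices"][0]["message"]["content"]


endpoint = build_endpoint("us-ashburn-1", "<MD_OCID>")
url, payload = build_chat_request(endpoint, "Who was the first president of the US?")
print(url)
print(payload)

# A hand-written, trimmed response body for illustration (not real model output).
sample = ('{"choices": [{"index": 0, "message": '
          '{"role": "assistant", "content": "George Washington."}}]}')
print(extract_reply(sample))
```

Keeping request building and response parsing as separate functions makes it easy to swap in a different HTTP client while leaving the payload format unchanged.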

Why use OCI Data Science AI Quick Actions for OpenAI’s open weight models?

AI Quick Actions makes it easy to stay on top of AI innovations: you can deploy and fine-tune OpenAI's latest open weight models in a no-code environment.

- Managed infrastructure: Focus on working with the model, not the setup, with access to enterprise-grade GPUs in a managed service.

- Minimal setup: Use AI Quick Actions' service-managed container for deployment and fine-tuning.

- Full lifecycle support: From development to deployment and monitoring.

OpenAI’s open weight models, combined with the scalability of Oracle Cloud Infrastructure, could help accelerate your AI journey from concept to deployment.

Resources