In the rapidly evolving field of large language models (LLMs), NVIDIA NIM microservices were created to accelerate the deployment of state-of-the-art generative AI models in enterprises.

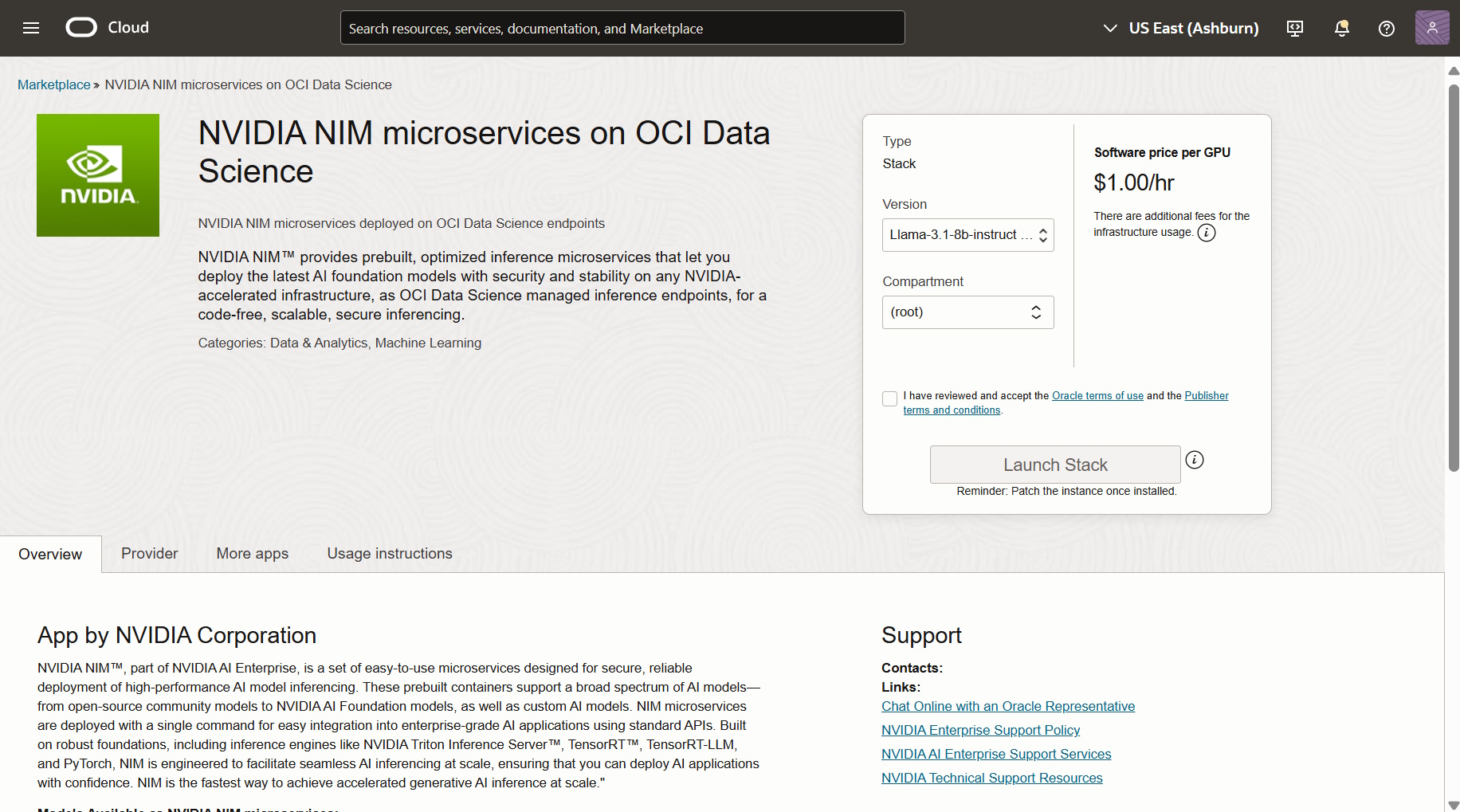

Building on Oracle’s long-standing partnership with NVIDIA, we’re excited to introduce NVIDIA NIM Inference Endpoints in OCI Marketplace, allowing you to deploy LLMs on demand with a pay-per-hour license from NVIDIA.

What is NVIDIA NIM?

NVIDIA NIM is a suite of easy-to-use microservices that facilitate the deployment of generative AI models, such as large language models (LLMs) and models for reasoning, retrieval, speech, vision, and more, across any NVIDIA-accelerated infrastructure. NVIDIA NIM makes it simpler for IT and DevOps teams to manage LLMs in their environments, and provides standard APIs for developers to create AI-driven applications like agents, copilots, chatbots, and assistants. It leverages NVIDIA GPUs for fast, scalable deployment, helping ensure efficient inference and high performance.

NVIDIA NIM provides pre-optimized AI inference endpoints designed to run on NVIDIA GPUs with minimal setup. With OCI Marketplace, you can now deploy these models in minutes, with no infrastructure complexity, and without writing code.

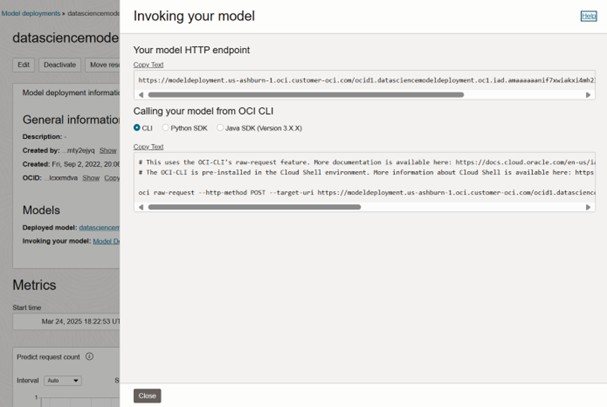

Endpoints are created as fully managed resources in OCI Data Science, and can be scaled as needed, from development and testing to production.

With OCI Data Science, you can manage your entire AI model lifecycle, from development to production, either code-first with Jupyter Notebooks or without writing code with AI Quick Actions.
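For readers taking the code-first route, the "standard APIs" mentioned above can be illustrated with a minimal sketch. It assumes the deployed NIM exposes an OpenAI-style chat-completions interface; the URL and model name below are hypothetical placeholders, not values from this post, and authentication is deliberately left out. The sketch only builds the request and parses a response body, so nothing is sent over the network.

```python
import json
import urllib.request

# Hypothetical placeholders (assumptions, not real values):
# replace with the URL and model name of your own deployment.
ENDPOINT_URL = "https://example.invalid/v1/chat/completions"
MODEL_NAME = "example-llm"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion POST request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Return the assistant text from an OpenAI-style chat-completion response."""
    parsed = json.loads(response_body)
    return parsed["choices"][0]["message"]["content"]
```

To actually call an endpoint, you would pass the request to `urllib.request.urlopen` after adding whatever authentication your deployment requires. An endpoint hosted in OCI Data Science will generally expect authenticated requests, and its exact path may differ from the placeholder used here.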

Key Benefits of NVIDIA NIM on OCI:

- Scalable Deployment: Seamlessly scale from a few users to millions.

- Advanced Language Models: Support, with pre-generated optimized engines, for a diverse range of cutting-edge LLM architectures.

- Enterprise-Grade Security: NVIDIA continuously monitors and patches Common Vulnerabilities and Exposures (CVEs) and conducts internal penetration tests.

- Performance Optimized: Optimized inference servers and models on OCI’s high-performance NVIDIA GPUs.

- Deploy with Just a Few Clicks: Select NVIDIA NIM on the OCI Marketplace and launch inference endpoints quickly.

- Pay-as-You-Go: Use OCI credits for per-hour NVIDIA license costs.

- Data Security & Privacy: Deployed in your own OCI tenancy, enabling full control over data security and compliance.

Get started with NVIDIA NIM on OCI today

OCI continues to deliver the latest AI innovations, now with pay-as-you-go NVIDIA NIM inference models that give customers a streamlined, cloud-native AI experience.

If you’re looking for an easy, fast, cost-effective way to deploy AI models, try NVIDIA NIM in OCI Marketplace today!