Oracle Database 23ai and NVIDIA NeMo Retriever can help address the challenges enterprises face when adopting large language models (LLMs). Used together, these technologies can streamline LLM adoption and deployment, offering a cost-effective, simple, and powerful way to harness the potential of AI for your enterprise applications.

For enterprise adoption, LLM responses must be up-to-date, specific to the industry or domain, and generated from authoritative sources. Retrieval-augmented generation (RAG) is a cost-effective way to help meet these requirements. RAG grounds LLM responses in a knowledge base outside the training data and, through source attribution, helps increase users’ trust in those responses. To accelerate LLM adoption, enterprises need a simple yet powerful way to create a RAG pipeline.
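To make the retrieve-then-augment idea concrete, here is a minimal sketch in plain Python. It is an illustration only, not the pipeline described in this post: the word-overlap retriever is a toy stand-in for vector search, and the `Passage` class, the sample knowledge base, and the file names are all hypothetical. The point is the shape of the flow: fetch relevant passages, then build a prompt that carries each passage's source so the answer can cite it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # where the text came from, used for attribution
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    return {word.strip(".,;:!?()").lower() for word in text.split()} - {""}


def retrieve(question: str, knowledge_base: list[Passage], top_k: int = 2) -> list[Passage]:
    """Rank passages by word overlap with the question (toy stand-in for vector search)."""
    query_terms = tokenize(question)
    scored = [(len(query_terms & tokenize(p.text)), p) for p in knowledge_base]
    # Keep only passages that share at least one term, best match first.
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Assemble an augmented prompt that numbers each passage and names its source."""
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered context and cite the passages you used.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical knowledge base for illustration.
KNOWLEDGE_BASE = [
    Passage("hr-policy.pdf", "Employees accrue 20 days of paid leave per year."),
    Passage("it-handbook.pdf", "Laptops are refreshed every three years."),
    Passage("hr-policy.pdf", "Unused paid leave expires in March."),
]

question = "How many days of paid leave do employees get?"
retrieved = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, retrieved)
print(prompt)
```

The resulting prompt would then be sent to an LLM of your choice. Because every context line carries its source, the model can point back to the document that supports each claim.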

NVIDIA and Oracle team up to power your RAG pipeline

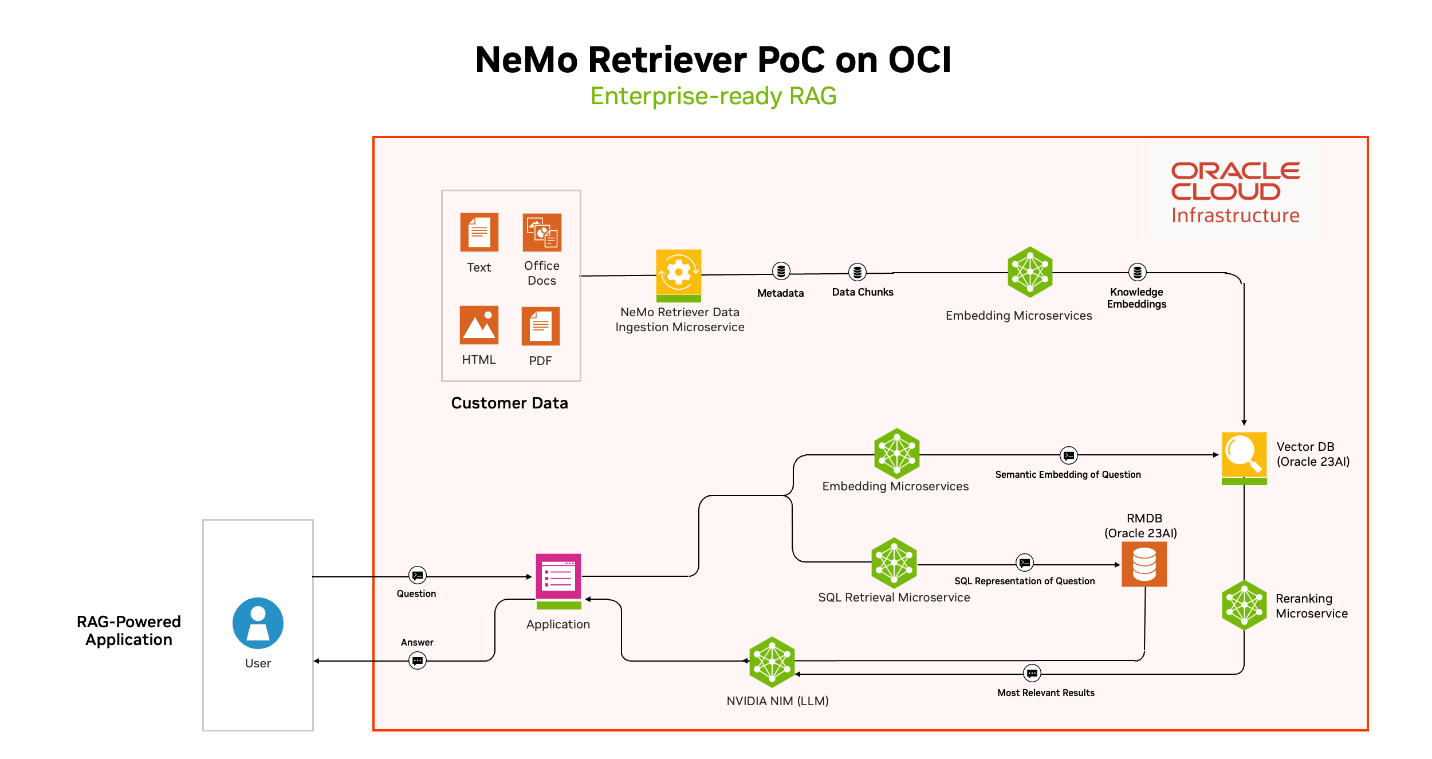

NVIDIA and Oracle have partnered to provide a high-performance, developer-friendly, and enterprise-grade RAG pipeline that harnesses the power of your Oracle data. NVIDIA NeMo Retriever and Oracle Database 23ai can simplify the creation of RAG pipelines by connecting to a wide variety of data types, enabling retrieval of more accurate responses, and reducing the complexity of the underlying infrastructure. This collaboration helps LLMs generate output that is more relevant, more contextually accurate, and better aligned with the business’s specific needs. In the next section, we highlight the possible benefits of using NVIDIA NeMo Retriever and Oracle Database 23ai to power your RAG pipeline.

Potential benefits of NVIDIA NeMo Retriever and Oracle Database 23ai

NeMo Retriever allows enterprises to connect their LLMs with diverse knowledge sets and build context-aware AI applications and agentic AI workflows. Compared to competing offerings, NeMo Retriever provides the following benefits for an enterprise-grade RAG pipeline:

- Tight integration with unified capabilities: NeMo Retriever combines embedding and reranking models into the same microservice, creating a simple developer experience. Developers don’t need to stitch together disparate applications to retrieve data from diverse data sources, vectorize it, and use ranking algorithms to choose the most contextually relevant response.

- Support for multiple embedding models: NeMo Retriever supports three embedding models: NV-EmbedQA-E5-v5, NV-EmbedQA-Mistral7B-v2, and Snowflake-Arctic-Embed-L. This variety makes it easy to test different embedding models and find the one that best meets the needs of your use case.

- Superior scalability and deployment: NeMo Retriever supports anything from a few users to millions. Enterprise customers can deploy and scale NeMo Retriever in Oracle Cloud Infrastructure (OCI) with a few clicks.

- Enhanced developer productivity: You can use all NVIDIA Inference Microservices (NIM) together or separately, which gives developers a modular approach to building AI applications. In addition, you can integrate the microservices with community models, NVIDIA models, or your own custom models.
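The embedding-plus-reranking flow mentioned in the first bullet can be sketched as a two-stage search. The code below is a toy under stated assumptions, not NeMo Retriever itself: `embed` is a bag-of-words stand-in for an embedding model, `rerank_score` is a word-pair matcher standing in for a reranking model, and the sample passages are made up. It shows why the second stage matters: a cheap similarity pass selects candidates, then a scorer that reads the query and passage together reorders them.

```python
import math
from collections import Counter


def words(text: str) -> list[str]:
    """Lowercase tokens with surrounding punctuation removed."""
    return [w.strip(".,?!").lower() for w in text.split()]


def embed(text: str) -> Counter:
    """Toy embedding: a sparse bag-of-words vector (stand-in for an embedding model)."""
    return Counter(words(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def first_stage(query: str, passages: list[str], k: int) -> list[str]:
    """Stage 1: similarity search over the whole corpus, keeping k candidates."""
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


def rerank_score(query: str, passage: str) -> int:
    """Toy reranker: count adjacent word pairs shared by query and passage."""
    def pairs(text: str) -> set[tuple[str, str]]:
        tokens = words(text)
        return set(zip(tokens, tokens[1:]))
    return len(pairs(query) & pairs(passage))


def rerank(query: str, candidates: list[str], top_n: int) -> list[str]:
    """Stage 2: reorder the candidates with the more precise scorer."""
    return sorted(candidates, key=lambda p: rerank_score(query, p), reverse=True)[:top_n]


# Hypothetical passages for illustration.
LONG = "You can create a vector search index on a table column with one SQL statement."
SHORT = "Search the index for a vector."
OFF_TOPIC = "Laptops are refreshed every three years."

query = "vector search index"
candidates = first_stage(query, [LONG, SHORT, OFF_TOPIC], k=2)
best = rerank(query, candidates, top_n=1)
print(candidates)
print(best)
```

Here the first stage prefers the short passage because it shares the same words in fewer tokens, while the reranker promotes the longer passage that actually contains the phrase. In a real deployment, both stand-in functions would be replaced by calls to the embedding and reranking models.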

Oracle Database 23ai takes the data management platform you know and love and adds a new collection of powerful tools that integrate AI into data-driven applications, easing the evolution to next-generation, AI-centric applications. The following attributes set the vector database capabilities of Oracle Database 23ai apart from the competition:

- Converged database: You don’t need to maintain a separate database to support vector data. By integrating new data types, workloads, and paradigms as features within a converged database, you can support mixed workloads and data types in a much simpler way. You don’t need to manage and maintain multiple systems or worry about providing unified security across them.

- Serverless: You don’t have to manage individual database instances or deal with cumbersome upgrading, patching, or scaling. We can help take care of those factors behind the scenes.

- AI vector search: This capability enables more accurate and relevant semantic searches using simple SQL, with the potential to significantly improve the results of business data queries.

- Integration: Integrated deeply with popular AI frameworks like LangChain, Oracle 23ai supports the entire generative AI pipeline, including vectorization and the augmentation of LLMs with specific business data.
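As a rough illustration of the "simple SQL" point in the AI vector search bullet, the sketch below builds a similarity query around Oracle's `VECTOR_DISTANCE` function and shows how it might be run from Python. Several things here are assumptions rather than details from this post: the `doc_chunks` table and its column names are hypothetical, the use of the python-oracledb driver is our choice, and `search` is defined but not executed because it needs a live database connection.

```python
import array

# Hypothetical table for illustration, assumed to exist already:
#   CREATE TABLE doc_chunks (
#       id         NUMBER PRIMARY KEY,
#       source     VARCHAR2(200),
#       chunk_text VARCHAR2(4000),
#       embedding  VECTOR
#   )


def build_similarity_query(table: str = "doc_chunks", top_k: int = 5) -> str:
    """Return SQL that orders rows by cosine distance to a bound query vector."""
    if not table.isidentifier():
        # The table name is interpolated into the SQL, so reject anything unusual.
        raise ValueError(f"unexpected table name: {table!r}")
    return (
        "SELECT id, source, chunk_text "
        f"FROM {table} "
        "ORDER BY VECTOR_DISTANCE(embedding, :query_vector, COSINE) "
        f"FETCH FIRST {int(top_k)} ROWS ONLY"
    )


def search(connection, query_embedding: list[float], top_k: int = 5):
    """Run the query on an open database connection (assumed: python-oracledb).

    Not called in this sketch. Recent python-oracledb releases accept an
    array.array of 32-bit floats as a bind value for VECTOR columns.
    """
    vector = array.array("f", query_embedding)
    with connection.cursor() as cursor:
        cursor.execute(build_similarity_query(top_k=top_k), {"query_vector": vector})
        return cursor.fetchall()


sql = build_similarity_query(top_k=3)
print(sql)
```

The query embedding is passed as a bind variable rather than spliced into the SQL text, so the same statement can be reused for every question. The nearest rows come back together with their `source` column, which supports the source attribution described earlier.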

Figure 1: RAG deployment using NVIDIA Microservices and Oracle Database 23ai

Try it yourself

If you’re excited to try this combination for yourself, we’ve put together thorough, step-by-step instructions to help fast-track your experience. See the detailed guide on GitHub, which also includes sample Jupyter notebooks to help you get started. If you have questions or want to provide feedback, open an issue in the repository and we’ll be in touch.

Using tools like Oracle Database 23ai and NVIDIA NeMo Retriever for RAG can help simplify integrating real-time, more contextually accurate data into AI-driven applications. These technologies can streamline the developer experience of building RAG systems, enhance the output of LLMs, and help reduce the complexity of managing the underlying data infrastructure, ultimately making it easier for organizations to harness the full potential of AI.

For more information, see the following resources: