In the fast-moving field of cloud computing, deploying machine learning models efficiently and cost-effectively is a challenge for many organizations. Deployment in the cloud involves several hurdles, including resource management and balancing cost against performance. Conventional cloud resources often lack the flexibility to follow the fluctuating demand typical of machine learning workloads, which can drive costs up. Models need considerable compute during training or retraining but much less during inference or idle periods, which makes resource allocation and cost management difficult. Once a model is trained and optimized, it is loaded into the machine’s memory to serve predictions, and the real challenge becomes making sure the hardware it runs on is fully utilized. How do you use the full capacity of your machine? How can you “squeeze the last drop” out of every processing unit? Burstable VMs are designed to fill this gap.

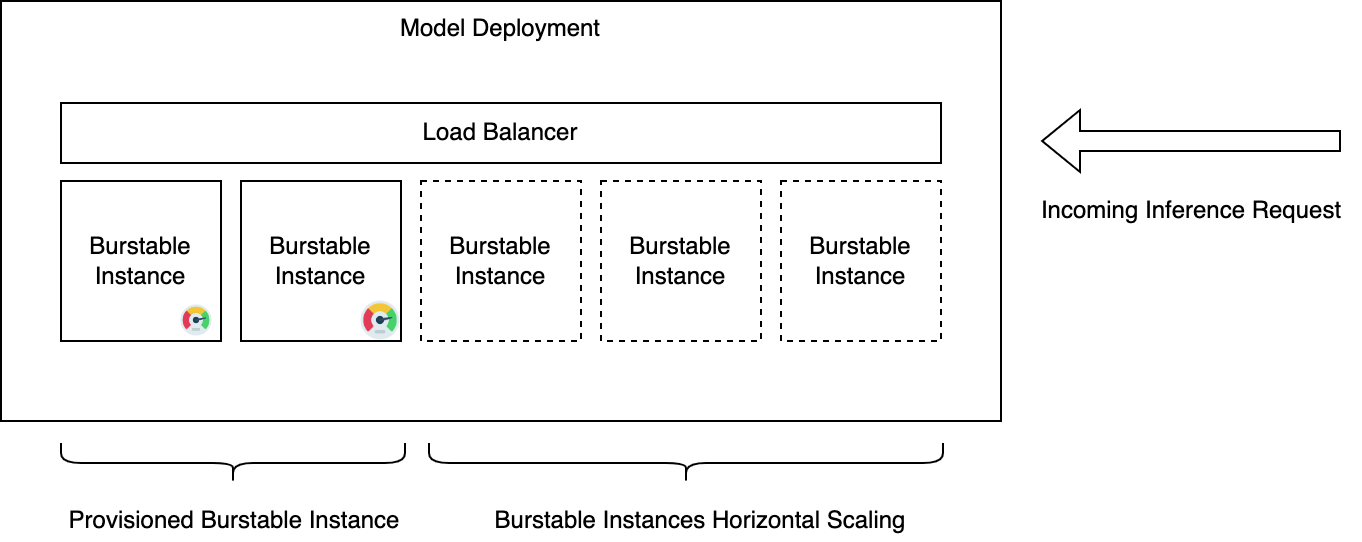

Take, for example, a healthcare analytics system that predicts patient admission rates. Under normal conditions, it runs its daily predictive tasks on a standard set of VMs. During a health emergency, however, demand for fast and accurate predictions rises sharply. This is where autoscaling and burstable VMs for model deployment in Oracle Cloud Infrastructure (OCI) come in. Autoscaling adjusts resources in response to immediate demand, so the infrastructure scales with changes in workload. While autoscaling addresses scalability, it is not always the most cost-effective option for workloads that fluctuate widely. Burstable VMs provide a baseline level of CPU performance for low-demand periods and can ramp up processing power when needed, a capability known as “bursting.”
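To make the autoscaling idea concrete, here is a minimal sketch of a threshold-based scaling decision in plain Python. It is not the OCI autoscaling API: `ScalingPolicy`, `desired_instance_count`, and the 70%/30% CPU thresholds are hypothetical, chosen only to show how a policy might add instances during a spike (such as a health emergency) and remove them as demand falls.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical threshold-based policy; names and defaults are illustrative only."""
    min_instances: int = 1
    max_instances: int = 4
    scale_out_threshold: float = 70.0  # average CPU % above which one instance is added
    scale_in_threshold: float = 30.0   # average CPU % below which one instance is removed


def desired_instance_count(policy: ScalingPolicy, current: int, avg_cpu_percent: float) -> int:
    """Return the instance count after one scaling evaluation."""
    if avg_cpu_percent > policy.scale_out_threshold:
        target = current + 1
    elif avg_cpu_percent < policy.scale_in_threshold:
        target = current - 1
    else:
        target = current
    # Keep the result inside the configured bounds.
    return max(policy.min_instances, min(policy.max_instances, target))


policy = ScalingPolicy()
count = 1
# Simulated average CPU readings, e.g. a sudden surge in admission forecasts.
for cpu in [20, 85, 90, 92, 60, 25, 10]:
    count = desired_instance_count(policy, count, cpu)
    print(f"cpu={cpu:>3}% -> instances={count}")
```

Clamping to `min_instances` and `max_instances` keeps a burst of traffic from scaling the deployment without limit, which is one way to keep costs bounded.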

Key features of Autoscaling and Burstable VMs

Key features of Autoscaling and burstable VMs in OCI Data Science include:

| Autoscaling | Burstable VMs |
|---|---|

High-Level Architecture

Using Oracle Cloud Infrastructure (OCI) for machine learning gives you several ways to optimize costs. A burstable VM is a type of virtual machine that offers a low-cost, low-to-moderate performance option for applications that don’t need the full capacity of the CPU all the time. It suits applications with fluctuating CPU consumption, which makes it a cost-effective choice for small to medium-sized applications, development and test environments, and even small databases. Customers mainly use burstable VMs to save money when they don’t need the full power of the CPU continuously but still want to burst CPU performance for short periods when needed. The pricing model is tailored to the time and resources you use at each stage of the machine learning lifecycle, so you pay only for what you need. OCI Data Science Model Deployment also offers burstable instances, which are well suited to scenarios where consistently high CPU usage isn’t necessary but on-demand access to additional CPU capacity is a significant advantage.
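One way to check whether a workload has the fluctuating CPU consumption that suits a burstable VM is to compare its utilization samples with the baseline. The sketch below is a hypothetical heuristic, not an OCI tool: `benefits_from_bursting` and its rule (average load under the baseline, with at least one spike above it) are assumptions for illustration.

```python
from statistics import mean


def benefits_from_bursting(cpu_utilization: list[float], baseline_fraction: float = 0.5) -> bool:
    """Rough check for a fluctuating workload that suits a burstable VM.

    cpu_utilization: samples as fractions (0.0-1.0) of the instance's full OCPU capacity.
    Returns True when the average load fits under the baseline but some samples spike above it.
    """
    if not cpu_utilization:
        raise ValueError("need at least one utilization sample")
    average_fits = mean(cpu_utilization) <= baseline_fraction
    has_spikes = max(cpu_utilization) > baseline_fraction
    return average_fits and has_spikes


# Mostly idle inference service with one short spike.
print(benefits_from_bursting([0.10, 0.15, 0.20, 0.90, 0.10]))  # True
# Consistently busy service: a regular instance is likely a better fit.
print(benefits_from_bursting([0.80, 0.85, 0.90]))  # False
```

A workload that never exceeds the baseline may simply need a smaller shape rather than the ability to burst.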

Getting Started

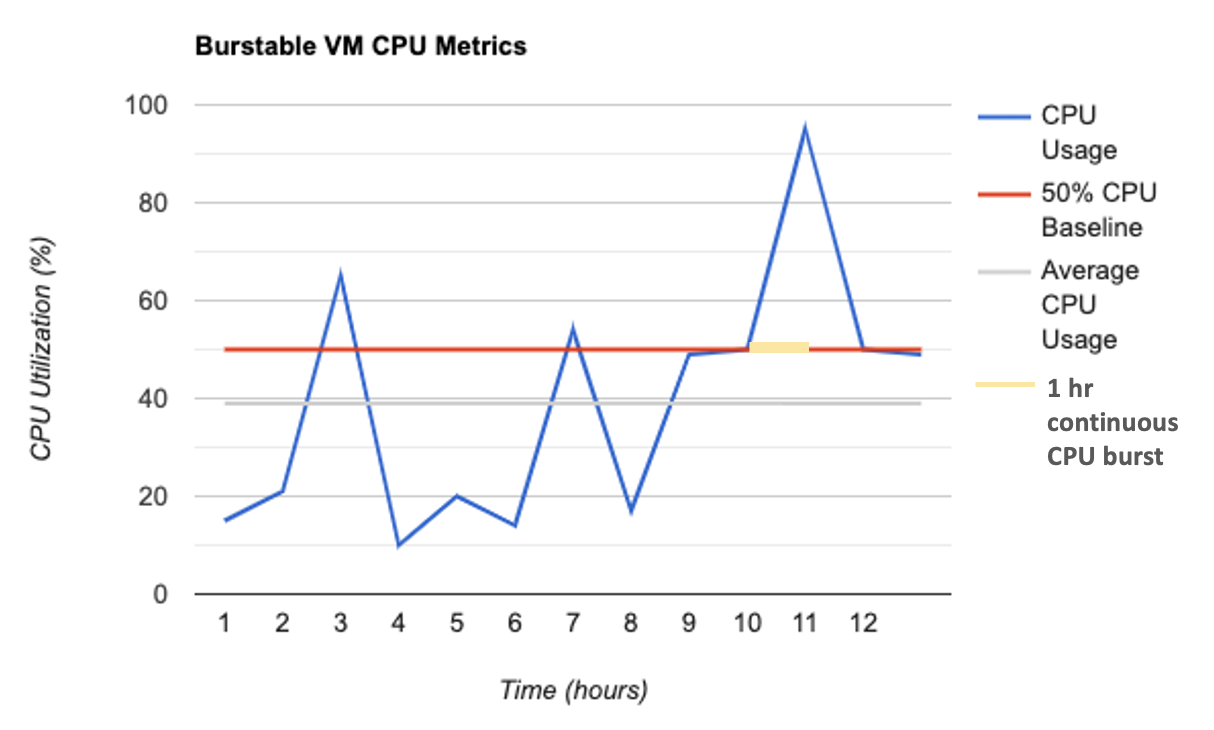

A burstable instance provides a baseline level of CPU performance with the ability to burst to a higher level to handle occasional spikes in usage, in contrast to a regular instance, which has its full CPU capacity dedicated to it all the time. For example, a burstable instance with 2 OCPUs and a 50% baseline provides the sustained performance of 1 OCPU, but can deliver the performance of 2 OCPUs for a limited time when needed. A regular instance with 1 OCPU, by contrast, can never exceed the performance of 1 OCPU.
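The arithmetic in the example above can be written as a small helper. This is a sketch for illustration only; `burstable_capacity` is a hypothetical function, not part of any OCI SDK.

```python
def burstable_capacity(ocpus: int, baseline_fraction: float) -> tuple[float, float]:
    """Return (sustained_ocpus, peak_ocpus) for an instance.

    baseline_fraction is the guaranteed share of each OCPU: 0.5 for a 50% baseline,
    1.0 for a regular (non-burstable) instance.
    """
    if ocpus <= 0:
        raise ValueError("ocpus must be positive")
    if not 0 < baseline_fraction <= 1:
        raise ValueError("baseline_fraction must be in (0, 1]")
    sustained = ocpus * baseline_fraction  # performance you can rely on at all times
    peak = float(ocpus)                    # performance available while bursting
    return sustained, peak


print(burstable_capacity(2, 0.5))  # (1.0, 2.0): burstable, 2 OCPUs at a 50% baseline
print(burstable_capacity(1, 1.0))  # (1.0, 1.0): regular instance with 1 OCPU
```

Both configurations share the same sustained capacity; only the burstable one can reach 2 OCPUs during a spike.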

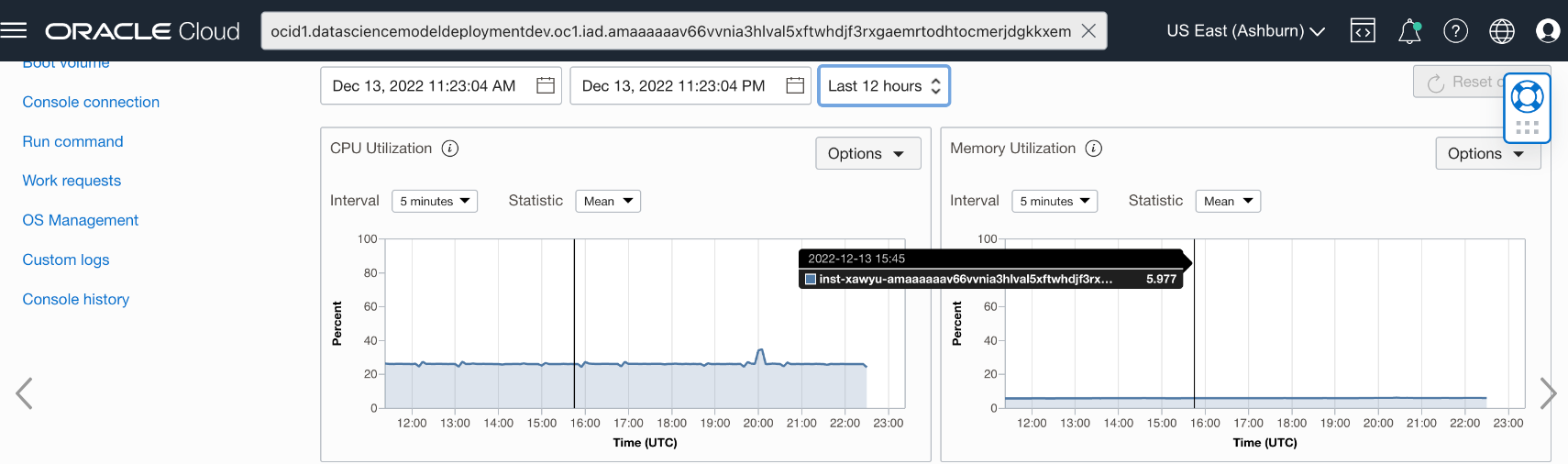

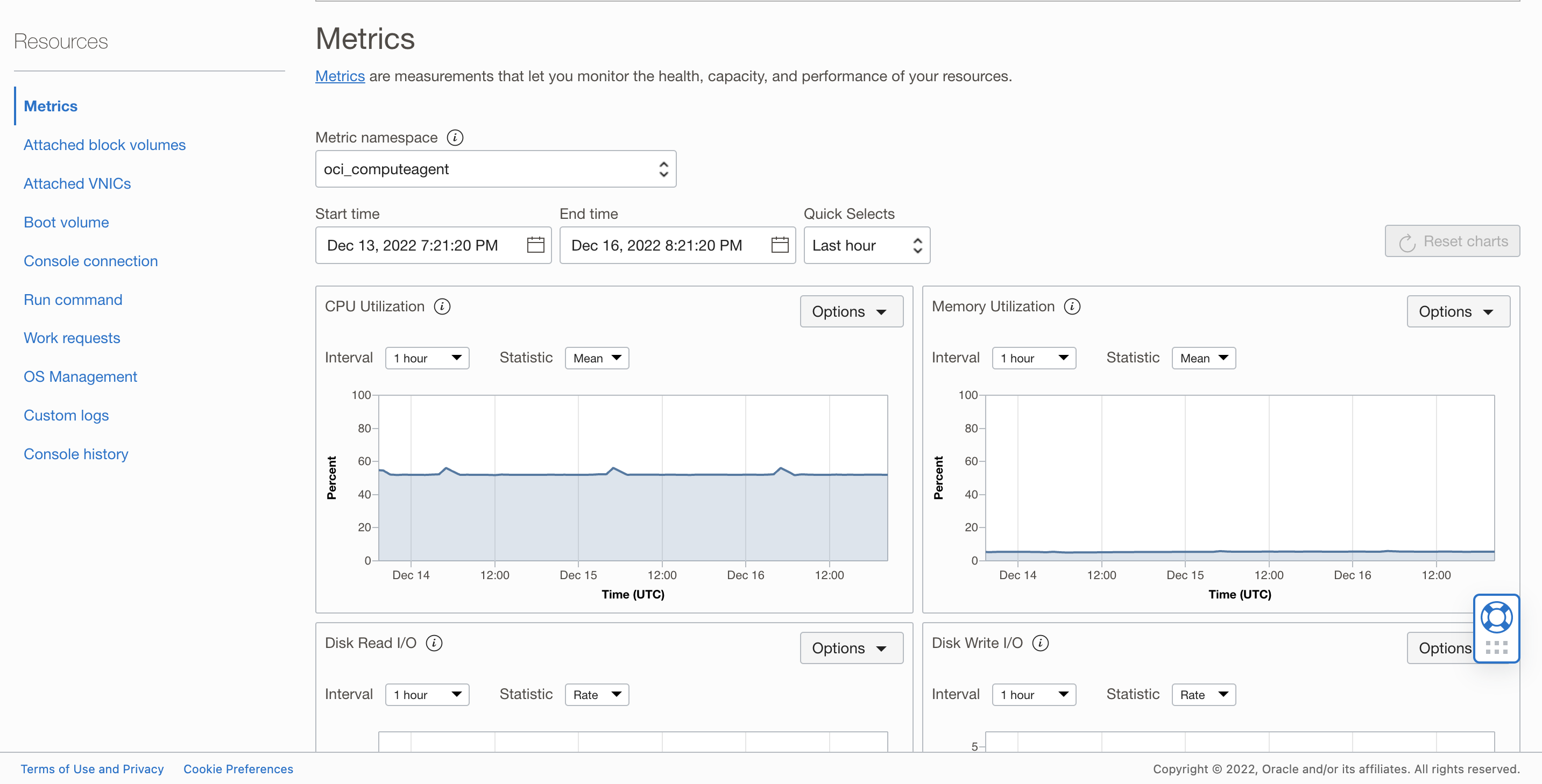

The following section shows the CPU and memory utilization of a machine learning model trained on the Iris dataset, deployed on both a burstable VM and a regular instance.

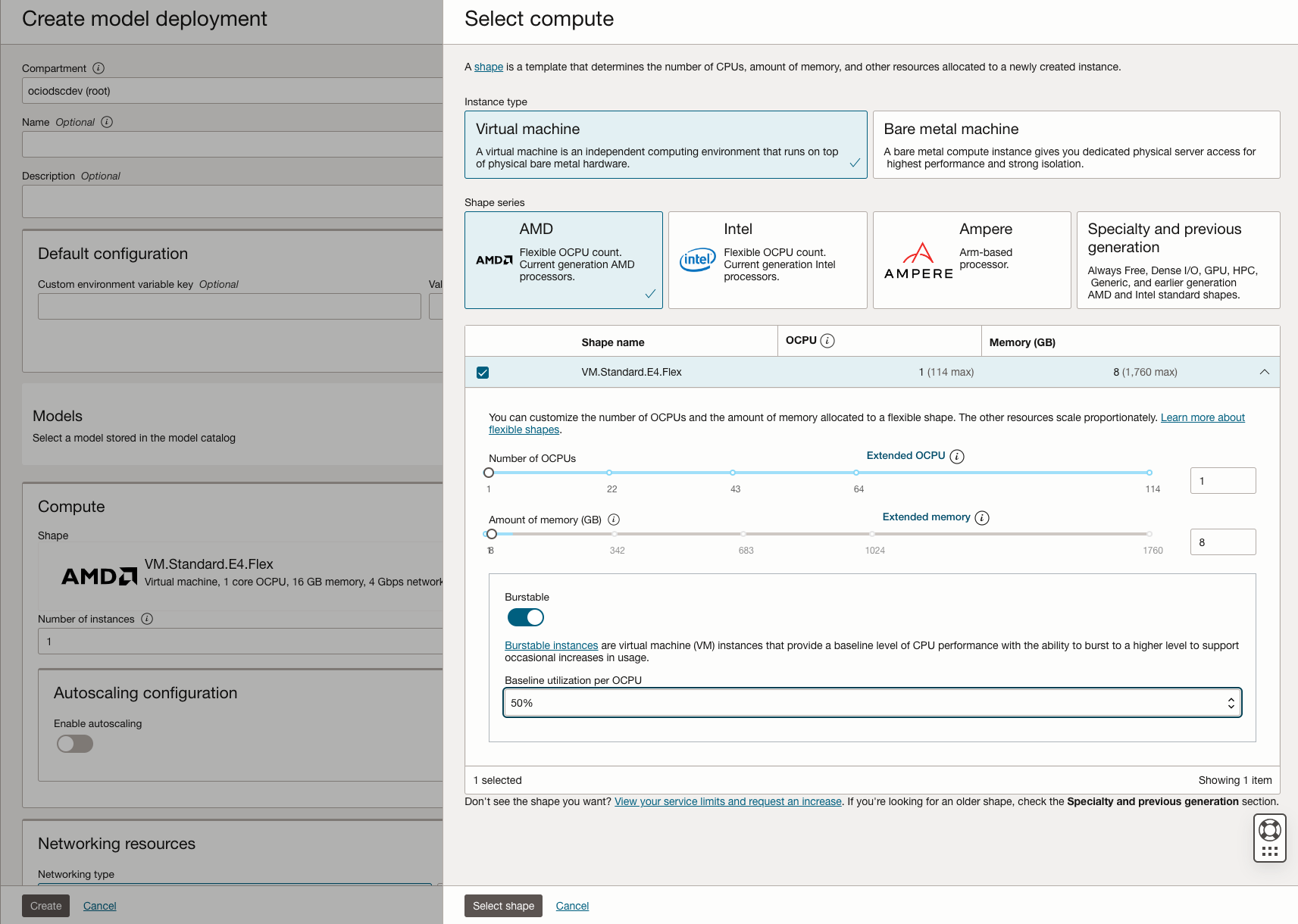

Creating a Model Deployment using OCI Console

Burstable ML Instance vs Regular ML Instance

A burstable instance with a 50% baseline CPU and the same number of cores as a regular instance costs much less and provides similar prediction latencies. In addition, a burstable instance with a higher OCPU count and a 50% baseline can outperform a regular instance with a single OCPU, because it can burst above one OCPU when load spikes.
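The cost claim can be illustrated with a back-of-the-envelope calculation. This sketch assumes that OCPUs on a burstable instance are billed at the baseline level while memory is billed in full; the unit prices are placeholders, not OCI list prices, so check the OCI price list for real figures. `InstanceConfig` and `hourly_cost` are hypothetical names.

```python
from dataclasses import dataclass

# Placeholder unit prices for illustration only; these are NOT OCI list prices.
OCPU_HOUR_PRICE = 0.025
MEMORY_GB_HOUR_PRICE = 0.0015


@dataclass
class InstanceConfig:
    ocpus: int
    memory_gb: int
    baseline_fraction: float = 1.0  # 1.0 = regular instance, 0.5 = 50% baseline


def hourly_cost(cfg: InstanceConfig) -> float:
    """Estimated hourly cost, assuming OCPUs are billed at the baseline level
    and memory is billed in full (an assumption for this sketch)."""
    billed_ocpus = cfg.ocpus * cfg.baseline_fraction
    return billed_ocpus * OCPU_HOUR_PRICE + cfg.memory_gb * MEMORY_GB_HOUR_PRICE


regular_2 = InstanceConfig(ocpus=2, memory_gb=32)
burstable_2 = InstanceConfig(ocpus=2, memory_gb=32, baseline_fraction=0.5)
regular_1 = InstanceConfig(ocpus=1, memory_gb=32)

for name, cfg in [("regular, 2 OCPUs", regular_2),
                  ("burstable, 2 OCPUs at 50%", burstable_2),
                  ("regular, 1 OCPU", regular_1)]:
    print(f"{name:<28} {hourly_cost(cfg):.4f} per hour, peak {cfg.ocpus} OCPU(s)")
```

Under these assumptions, the burstable configuration costs less than the 2-OCPU regular instance and the same as the 1-OCPU regular instance, while still being able to burst to 2 OCPUs.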

Burstable Instance

| Architecture | Instance Shape Type | Instance Count | vCPUs | Memory (GiB) | Network Bandwidth (Gbps) | Baseline CPU (%) | Deployed Machine Learning Model |
|---|---|---|---|---|---|---|---|
| x86 | VM.Standard.E4.Flex | 1 | 2 | 32 | 2 | 50 | ML model trained with Iris data set |

VM Host Metrics

Regular Instance

| Architecture | Instance Shape Type | Instance Count | vCPUs | Memory (GiB) | Network Bandwidth (Gbps) | Deployed Machine Learning Model |
|---|---|---|---|---|---|---|
| x86 | VM.Standard.E4.Flex | 1 | 2 | 32 | 2 | ML model trained with Iris data set |

Key Considerations

Keep the following in mind when using burstable instances:

- Burstable instances are currently available only on flexible shapes such as VM.Standard3.Flex, VM.Standard.E3.Flex, and VM.Standard.E4.Flex.

- A burst lasts at most one hour. After one hour of continuous bursting, the CPU allocated to your VM returns to the baseline value you selected.

- Only the CPU bursts; memory and network bandwidth remain constant.

- If the underlying host is oversubscribed, there is no guarantee that an instance can burst exactly when needed.

For these reasons, it’s important to choose the right VM configuration for your workload.
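The first three constraints can be encoded as simple checks, which may be handy when scripting deployments. This is a simplified model of the rules as stated above, not an OCI API: the function names are hypothetical, the shape list contains only the shapes named in this post, and host oversubscription is not modeled.

```python
# Shapes named in this post as supporting burstable instances.
SUPPORTED_BURSTABLE_SHAPES = {"VM.Standard3.Flex", "VM.Standard.E3.Flex", "VM.Standard.E4.Flex"}
# Maximum duration of continuous bursting, in minutes.
MAX_CONTINUOUS_BURST_MINUTES = 60


def validate_burstable_shape(shape: str) -> None:
    """Raise ValueError if the shape is not one of the listed flex shapes."""
    if shape not in SUPPORTED_BURSTABLE_SHAPES:
        raise ValueError(f"{shape} is not listed as supporting burstable instances")


def available_ocpus(ocpus: int, baseline_fraction: float, minutes_bursting: float) -> float:
    """OCPU capacity after `minutes_bursting` minutes of continuous bursting.

    Simplified model of the one-hour rule: full capacity until the cap, then the baseline.
    Only CPU changes; memory and network bandwidth are unaffected. Host oversubscription,
    which can prevent a burst altogether, is not modeled.
    """
    if minutes_bursting < MAX_CONTINUOUS_BURST_MINUTES:
        return float(ocpus)
    return ocpus * baseline_fraction


validate_burstable_shape("VM.Standard.E4.Flex")  # passes silently
print(available_ocpus(2, 0.5, 30))  # 2.0
print(available_ocpus(2, 0.5, 75))  # 1.0
```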

Best Practices

There are several ways to get the most out of a burstable VM. The most important is to size the VM properly for your workload, since some machine learning models require more compute than others. If the VM is too small, it may not have enough compute to load the model into memory or to handle additional inference traffic. If it is too large, you may pay for resources you don’t need, which defeats the purpose of using burstable VMs. You should also make sure the VM is properly configured: it should run the latest version of the operating system and software, and its security settings should be configured correctly.
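The sizing advice can be turned into a rough calculation. The sketch below is an illustration under stated assumptions, not an OCI sizing tool: `recommend_ocpus`, `memory_fits`, and the 1.5x memory headroom are hypothetical, and the load figures would come from your own monitoring. It also applies the one-hour burst limit, so a peak that lasts longer than an hour must fit within the baseline.

```python
import math

MAX_CONTINUOUS_BURST_MINUTES = 60  # one-hour burst limit


def recommend_ocpus(avg_ocpus: float, peak_ocpus: float, peak_minutes: float,
                    baseline_fraction: float = 0.5) -> int:
    """Smallest whole OCPU count for a burstable instance under a hypothetical sizing rule.

    - The baseline (ocpus * baseline_fraction) must cover the average load.
    - Short peaks may be served by bursting, so the full OCPU count must cover them.
    - Peaks that outlast the burst limit must be covered by the baseline instead.
    """
    if not 0 < baseline_fraction <= 1:
        raise ValueError("baseline_fraction must be in (0, 1]")
    if peak_minutes >= MAX_CONTINUOUS_BURST_MINUTES:
        needed = max(avg_ocpus, peak_ocpus) / baseline_fraction
    else:
        needed = max(avg_ocpus / baseline_fraction, peak_ocpus)
    return max(1, math.ceil(needed))


def memory_fits(model_memory_gb: float, instance_memory_gb: float, headroom: float = 1.5) -> bool:
    """True if instance memory is at least `headroom` times the model's footprint
    (the 1.5x default is an assumed rule of thumb)."""
    return instance_memory_gb >= model_memory_gb * headroom


print(recommend_ocpus(avg_ocpus=0.4, peak_ocpus=1.8, peak_minutes=20))  # 2
print(recommend_ocpus(avg_ocpus=0.4, peak_ocpus=1.8, peak_minutes=90))  # 4
print(memory_fits(model_memory_gb=8, instance_memory_gb=32))            # True
```

The same peak needs twice as many OCPUs when it lasts longer than the burst limit, which is why knowing how long your traffic spikes last matters as much as knowing how high they go.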

Try it yourself

In this blog, we saw how users can leverage autoscaling and burstable VMs for managing cloud resources efficiently. This approach not only optimizes operational costs but also ensures high performance and scalability. The autoscaling feature dynamically adjusts resources to meet varying workload demands, providing a flexible and responsive environment for model deployment. Meanwhile, burstable VMs offer a cost-effective solution for handling intermittent high-demand scenarios, ensuring that performance is not compromised during critical times.

Try the Oracle Cloud Free Trial for yourself! A 30-day trial with US$300 in free credits gives you access to the OCI Data Science service. For more information, see the following resources:

- Model Deployment documentation

- Full sample, including all files, in the OCI Data Science sample repository on GitHub.

- Visit our OCI Data Science service documentation.

- Configure your OCI tenancy with these setup instructions and start using OCI Data Science.

- Star and clone our new GitHub repo! We included notebook tutorials and code samples.

- Watch our tutorials on our YouTube playlist

- Try one of our LiveLabs. Search for “data science.”