Context: Enterprises want an open, flexible Generative AI stack that is easy to deploy, secure by design, and production-ready. In this blog, we share how Oracle Cloud Infrastructure (OCI) delivers that by combining Meta’s open-source Llama Stack project with OCI AI Blueprints: integrated, opinionated recipes that streamline standing up LLM-based applications on high-performance OCI GPU infrastructure. We walk through how teams can move from prototype to production faster with prebuilt pipelines for common patterns (evaluation, testing, and high-throughput inference) and with turnkey Retrieval-Augmented Generation (RAG) scenarios that pair LLMs with OCI-native vector stores and data services. You’ll see how customers use this stack for knowledge search, support copilots, and document summarization while keeping data private within their tenancy and governance consistent through OCI identity, networking, and observability. The results are simpler, repeatable deployments, predictable performance at scale, and a clear path to enterprise-grade operations, combining the openness of Llama Stack with the reliability of OCI. We also share lessons learned from early adopters across industries and best practices to help you accelerate time-to-value for your next GenAI initiative.

The why: “Simplicity is the ultimate sophistication.” – Leonardo da Vinci. In the fast-moving world of Generative AI, everyone wants to become an expert quickly, and it’s an exciting race. But the pace brings its own challenges: models evolve nonstop, new tools emerge daily, and keeping up can be overwhelming. The only constant is change.

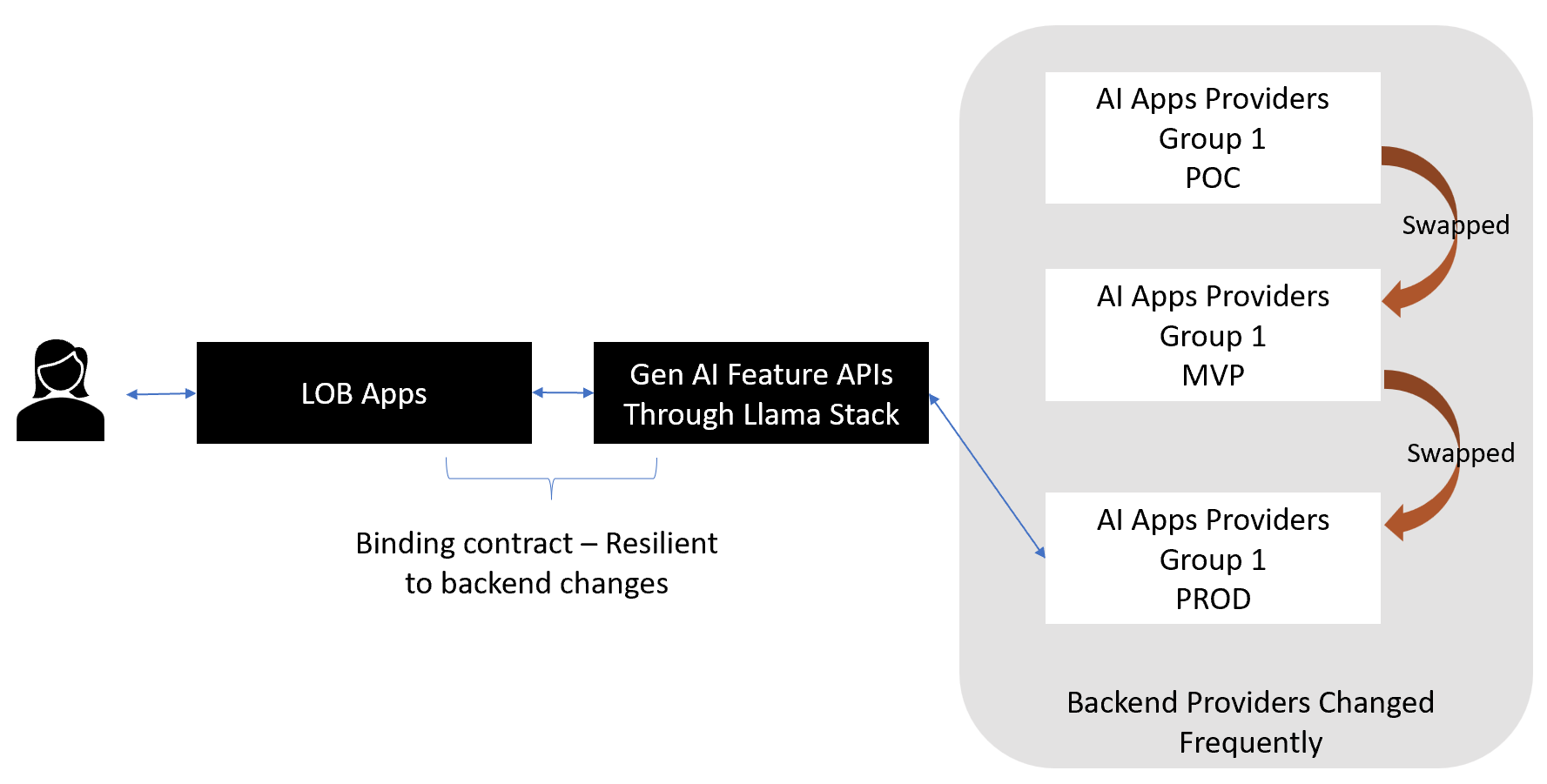

Business teams often seek quick wins with Generative AI, putting pressure on engineering and architecture teams to deliver results on tight timelines. We’ve seen many implementations struggle after locking themselves into rigid frameworks or vendor-specific solutions. For instance, in Retrieval-Augmented Generation (RAG) use cases where embeddings are stored in a vector database, teams often start their POCs with a popular open-source solution, then migrate to a robust, enterprise-supported option such as Oracle AI Database 26ai as they move toward production.

Our experience spans hands-on collaborations with customers on a variety of Generative AI projects, from intelligent customer service agents that integrate with internal systems to scalable knowledge search solutions built on RAG. In these projects, we’ve seen POCs move quickly through pre-production and soft launch to full deployment. As new model versions are released and requirements shift, some components run into load problems and have to be rearchitected.

A key lesson learned is the importance of integrating AI features with existing line-of-business (LOB) applications. Establishing a single, stable API contract with LOB systems while the backend AI components evolved proved essential. This approach preserved integration stability even as we made the overall architecture more modular.

The turning point was adopting Meta’s Llama Stack, a unified open-source API with a modular, resilient, plugin-based architecture and many prebuilt capabilities. Llama Stack let our customers iterate, test, and optimize by switching providers while keeping a consistent API contract. This flexibility accelerated delivery and let teams find the best setup for their specific use cases.
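The pattern behind this lesson, one stable contract for line-of-business callers with swappable providers behind it, can be sketched in a few lines of Python. This is a generic illustration of the idea, not Llama Stack’s API: `VectorStore`, `InMemoryVectorStore`, and `KnowledgeSearch` are hypothetical names, and the in-memory store stands in for a POC backend that could later be replaced by an enterprise vector database without changing the caller-facing `search` method.

```python
import math
from dataclasses import dataclass, field
from typing import Protocol


class VectorStore(Protocol):
    """Hypothetical provider interface: any backend that can store and query embeddings."""

    def add(self, doc_id: str, embedding: list[float]) -> None: ...

    def query(self, embedding: list[float], k: int) -> list[str]: ...


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class InMemoryVectorStore:
    """POC-style backend; a production backend would implement the same two methods."""

    rows: dict[str, list[float]] = field(default_factory=dict)

    def add(self, doc_id: str, embedding: list[float]) -> None:
        self.rows[doc_id] = embedding

    def query(self, embedding: list[float], k: int) -> list[str]:
        # Rank stored documents by similarity to the query embedding, best first.
        ranked = sorted(self.rows, key=lambda d: cosine(self.rows[d], embedding), reverse=True)
        return ranked[:k]


class KnowledgeSearch:
    """The stable contract LOB applications call; the backend is injected and swappable."""

    def __init__(self, store: VectorStore) -> None:
        self._store = store

    def search(self, query_embedding: list[float], k: int = 1) -> list[str]:
        return self._store.query(query_embedding, k)


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("refund-policy", [0.9, 0.1, 0.0])
    store.add("shipping-times", [0.1, 0.9, 0.0])
    service = KnowledgeSearch(store)
    print(service.search([0.8, 0.2, 0.0]))  # prints ['refund-policy']
```

Moving from the POC store to a production backend would mean writing another class with the same `add` and `query` methods and passing it to `KnowledgeSearch`; code that calls `search` stays untouched.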

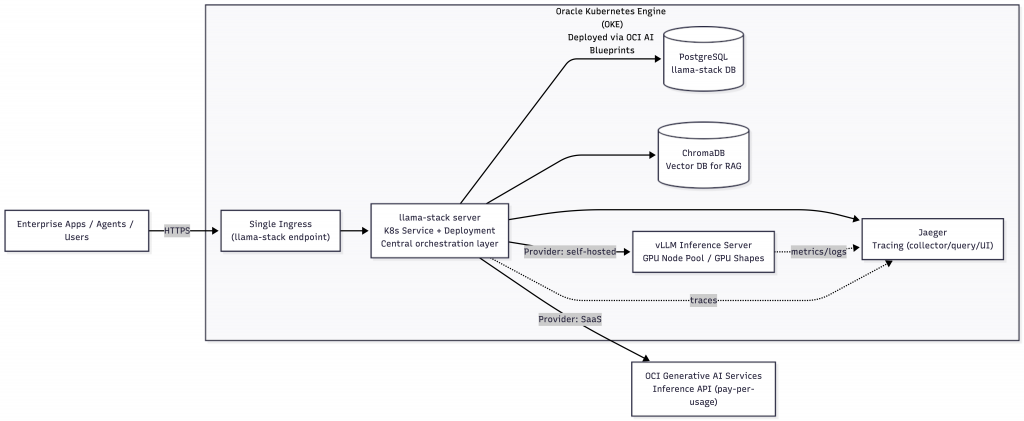

The how: OCI AI Blueprints is an API-first platform that runs on top of Oracle Kubernetes Engine (OKE), giving enterprises a reliable, fault-tolerant foundation for production AI services. AI Blueprints simplifies deployment through a streamlined JSON specification that captures the full contract for a service (hardware, software, scaling, and usage requirements), so teams can deploy consistently across environments with fewer hand-tuned manifests and less drift. The result is an opinionated, reproducible path from prototype to production that fits naturally into CI/CD and GitOps workflows.
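To make the “one JSON contract, less drift” idea concrete, here is a minimal Python sketch that fingerprints a deployment spec so two environments can be compared. The keys in the sample specs are hypothetical placeholders for the hardware, software, scaling, and usage sections described above; they are not the actual OCI AI Blueprints schema, so consult the Blueprints documentation for real field names.

```python
import hashlib
import json


def spec_fingerprint(spec: dict) -> str:
    """Return a stable SHA-256 digest of a deployment spec.

    Keys are sorted and separators are fixed, so two specs that differ only
    in key order or whitespace produce the same fingerprint.
    """
    canonical = json.dumps(spec, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical spec layout; every field name and value here is illustrative only.
staging = {
    "hardware": {"shape": "GPU-SHAPE-PLACEHOLDER", "gpu_count": 1},
    "software": {"image": "registry.example.com/vllm:tag", "model": "MODEL-ID"},
    "scaling": {"min_replicas": 1, "max_replicas": 3},
    "usage": {"port": 8000},
}

# Same content in a different key order: the fingerprint should match staging.
production = {
    "usage": {"port": 8000},
    "scaling": {"max_replicas": 3, "min_replicas": 1},
    "software": {"model": "MODEL-ID", "image": "registry.example.com/vllm:tag"},
    "hardware": {"gpu_count": 1, "shape": "GPU-SHAPE-PLACEHOLDER"},
}

if __name__ == "__main__":
    same = spec_fingerprint(staging) == spec_fingerprint(production)
    print("in sync" if same else "drift detected")  # prints "in sync"
```

In a CI/CD or GitOps pipeline, a check like this could block a promotion when the fingerprint of the spec in Git differs from the one recorded for the running environment.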

The platform also comes with production observability built in: Prometheus and Grafana dashboards for infrastructure and application signals (GPU utilization, CPU load, memory pressure), plus vLLM model-serving metrics such as token throughput and utilization. Teams get day-one visibility for capacity planning, performance tuning, and operational ownership without building a monitoring stack from scratch.
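The token-throughput signal can also be pulled programmatically for capacity planning. The sketch below builds a query against Prometheus’s standard HTTP API (`/api/v1/query`) and parses the instant-vector response. It assumes vLLM’s `vllm:generation_tokens_total` counter (metric names can differ between vLLM versions), a hypothetical Prometheus URL, and a `pod` label on each series; to stay runnable without a cluster, the parser is exercised on an illustrative sample response rather than a live server.

```python
import json
import urllib.parse

PROMETHEUS_URL = "http://prometheus.example:9090"  # hypothetical endpoint

# Generated tokens per second, averaged over 5 minutes, summed per pod.
# Assumes vLLM exports the vllm:generation_tokens_total counter.
THROUGHPUT_QUERY = "sum by (pod) (rate(vllm:generation_tokens_total[5m]))"


def build_query_url(base_url: str, promql: str) -> str:
    """Build a GET URL for Prometheus's instant-query endpoint."""
    return f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_instant_vector(body: str) -> dict[str, float]:
    """Map each series' pod label to its value from an instant-vector response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"Prometheus query failed: {payload}")
    return {
        series["metric"].get("pod", "unknown"): float(series["value"][1])
        for series in payload["data"]["result"]
    }


# Shape of a Prometheus instant-vector response; the numbers are illustrative.
SAMPLE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"pod": "vllm-0"}, "value": [1700000000.0, "412.5"]},
            {"metric": {"pod": "vllm-1"}, "value": [1700000000.0, "388.0"]},
        ],
    },
})

if __name__ == "__main__":
    print(build_query_url(PROMETHEUS_URL, THROUGHPUT_QUERY))
    for pod, tps in parse_instant_vector(SAMPLE).items():
        print(f"{pod}: {tps:.1f} tokens/s")
```

The same two helpers work for any PromQL expression, for example GPU utilization series, as long as the label used as the key exists on the returned series.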

In partnership with the Llama Stack team at Meta, we created a Llama Stack blueprint that deploys a complete, resilient service architecture: PostgreSQL as the Llama Stack database, ChromaDB for vector search in RAG, Jaeger for tracing, vLLM on GPU shapes for LLM inference, and the Llama Stack server to unify these providers into a single integrated system. For customers who prefer SaaS-only consumption, we also integrated OCI Generative AI directly as a provider in Llama Stack, removing the need to deploy and operate GPUs and enabling pay-per-use model consumption.

Because clients are usually tailored to each end user, we expose the Llama Stack API directly, and we’ve found that most customers prefer to attach their own client. Combined with Llama Stack’s OpenAI-compatible endpoint for inference and its Vector IO API for RAG, this makes it easy to upload documents and reason over them with the model of your choice.
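Because the inference endpoint is OpenAI-compatible, a custom client can be as small as one HTTP POST in the standard chat-completions format. The sketch below uses only the Python standard library and builds (but does not send) the request, so it runs without a server. The base URL is an assumption: 8321 is Llama Stack’s default server port, but the host and the OpenAI-compatible path prefix depend on your deployment and Llama Stack version. The model ID is a placeholder for whatever model your stack registers, and the retrieval step is omitted: `context` stands in for passages returned by your vector search.

```python
import json
import urllib.request

# Assumed base URL for the OpenAI-compatible API; host, port, and path prefix
# depend on your deployment and Llama Stack version.
BASE_URL = "http://llama-stack.example:8321/v1"
MODEL_ID = "your-registered-model-id"  # placeholder


def build_chat_request(question: str, context: str, api_key: str = "") -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request grounded in retrieved `context`."""
    payload = {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "Answer using only the provided context."},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
        "temperature": 0.2,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # only send a bearer token when the deployment requires one
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_answer(response_body: str) -> str:
    """Pull the assistant message out of a chat-completions response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("What is the refund window?", "Refunds are accepted within 30 days.")
    print(req.get_method(), req.full_url)
    # Against a live deployment, the request could be sent with:
    # with urllib.request.urlopen(req) as resp:
    #     print(extract_answer(resp.read().decode("utf-8")))
```

Since the wire format is the common chat-completions shape, an existing OpenAI-compatible SDK pointed at the same base URL should work equally well; the standard-library version simply avoids extra dependencies.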

By integrating these components, Llama Stack deployed with OCI AI Blueprints gives enterprises an out-of-the-box production deployment for agentic RAG that is easy to scale up or down, resilient by design, and operationally ready from day one, with first-class monitoring, tracing, and clear usage signals that support governance, chargeback/showback, and ongoing optimization.

A single GenAI API endpoint with Llama Stack, one-click deployment with OCI AI Blueprints!

Next Steps

Everyone is looking for simplicity, ease of use, and sophistication, and that is exactly what Llama Stack support in OCI AI Blueprints is built to deliver. Give it a try, be a GenAI hero, and share your thoughts with us: https://github.com/oracle-quickstart/oci-ai-blueprints/blob/main/docs/sample_blueprints/partner_blueprints/llama-stack/README.md