We are thrilled to announce that ML Applications, a powerful new capability within Oracle Cloud Infrastructure (OCI) Data Science, is now Generally Available (GA). This marks a significant milestone in industrializing Artificial Intelligence and Machine Learning. ML Applications is the platform that Oracle SaaS Cloud uses to power its own AI/ML features, and it is now available at your fingertips, ready to transform how you develop, deploy, and operate AI/ML features.

In our previous discussions, we highlighted the inherent complexities of integrating AI/ML into Software-as-a-Service (SaaS) applications at scale – a challenge Oracle has tackled for years. ML Applications emerged from this valuable experience, providing a standardized approach to overcome hurdles like managing thousands of pipelines and models across diverse customer datasets. Now, these benefits extend beyond Oracle’s internal use, empowering all our customers to accelerate their AI/ML initiatives and focus on solving critical business problems.

While ML Applications offer a comprehensive suite of features, this blog post focuses on five transformative ones.

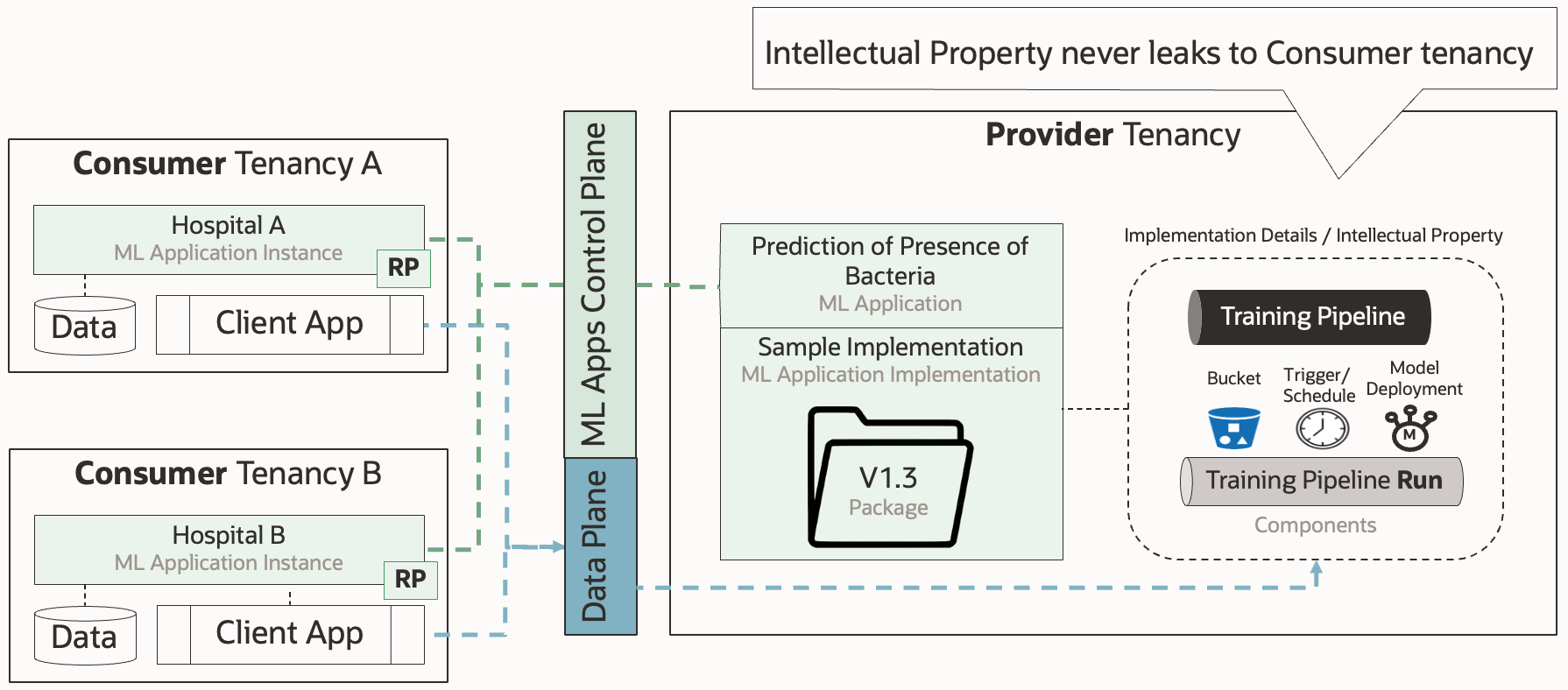

Solution Provided as-a-Service: The Consumer-Provider Concept

At its core, ML Applications embodies a consumer-provider paradigm that simplifies AI/ML solution delivery. Consumers of an AI/ML solution, such as client applications, are completely shielded from implementation details. They simply consume its prediction capabilities through well-defined APIs. This abstraction allows client applications to seamlessly integrate AI without understanding underlying models, training pipelines, or infrastructure.

On the other hand, providers own the end-to-end lifecycle of the solution. They are responsible for building, deploying, operating, and evolving the ML Application. ML Applications empower providers in several crucial ways:

- Observability: Providers gain crucial observability into solution usage, even across different tenancies, via ML Application Instance View resources that mirror consumer instances. This links all related resources and simplifies monitoring and troubleshooting.

- Evolvability: Solutions can evolve without impacting consumers. ML Applications enable zero-downtime upgrades of consumer instances, helping ensure that service continues during bug fixes or new version deployments, without consumers even noticing maintenance.

Seamless interaction is achieved through a set of intuitive APIs:

- Control Plane APIs (Discovery): Allow consumers to discover available ML Applications.

- Control Plane APIs (Provisioning): Enable consumers to provision new solutions by creating ML Application Instances.

- Data Plane APIs: Allow consumers to call prediction services directly.
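To make the three API groups concrete, here is a minimal in-memory sketch of the discover, provision, and predict flow. It is a toy model only: `ToyPlatform`, `MlApplication`, `MlApplicationInstance`, and every method name are hypothetical stand-ins chosen for illustration, not the OCI SDK or the actual ML Applications API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MlApplication:
    """Hypothetical provider-side solution; predict_fn hides all implementation details."""
    name: str
    predict_fn: Callable[[dict], dict]


@dataclass
class MlApplicationInstance:
    """Hypothetical consumer-side handle created by provisioning."""
    application: str
    consumer: str


class ToyPlatform:
    """In-memory stand-in for the platform (an assumption, not a real service client)."""

    def __init__(self) -> None:
        self._apps: Dict[str, MlApplication] = {}
        self._instances: List[MlApplicationInstance] = []

    def register(self, app: MlApplication) -> None:
        # Provider side: publish a solution.
        self._apps[app.name] = app

    def list_applications(self) -> List[str]:
        # Control plane (discovery).
        return sorted(self._apps)

    def create_instance(self, application: str, consumer: str) -> MlApplicationInstance:
        # Control plane (provisioning).
        if application not in self._apps:
            raise KeyError(f"unknown application: {application}")
        instance = MlApplicationInstance(application, consumer)
        self._instances.append(instance)
        return instance

    def predict(self, instance: MlApplicationInstance, payload: dict) -> dict:
        # Data plane: the consumer never sees models, pipelines, or infrastructure.
        return self._apps[instance.application].predict_fn(payload)


platform = ToyPlatform()
platform.register(
    MlApplication("churn-scoring", lambda p: {"score": 0.5 if p.get("tenure", 0) < 12 else 0.1})
)

available = platform.list_applications()
instance = platform.create_instance(available[0], consumer="crm-app")
result = platform.predict(instance, {"tenure": 3})
```

The consumer code at the bottom touches only the three calls; everything behind `predict_fn` could change without the consumer noticing.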

Note that this consumer-provider model offers high flexibility: while it supports complex multi-tenancy scenarios (e.g., a marketplace where third-party providers offer solutions to external customers), most customers leverage it within a single organization or even a single team, acting as both provider and consumer in a single OCI tenancy.

Versioning: Controlled Evolution of ML Applications

Versioning is paramount for continuous evolution and reliable operations of AI/ML solutions. ML Applications automate version tracking for comprehensive traceability, so you can easily pinpoint which version is deployed where and trace versioned components back to source code revisions.

Given as-a-Service delivery, supporting zero-downtime upgrades without impacting customers is critical. Providers have flexible upgrade strategies:

- Latest Version (Auto-upgrade): Automatically upgrades all associated instances to the newest available version, simplifying fleet management.

- Custom Version (Provider-controlled): Providers retain granular control, deciding precisely when and to which specific version an instance is upgraded, allowing for staged rollouts or accommodating customer-specific requirements.

This flexible versioning approach provides a safe and transparent mechanism for delivering continuous improvements and new features to your customers, fostering agility while maintaining service stability.
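The two upgrade strategies can be sketched as a small decision function. This is an illustrative model under stated assumptions: the `Instance` class, the `policy` and `pinned_version` fields, and the dotted numeric version format are all hypothetical, not the service's actual data model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Instance:
    """Hypothetical view of a consumer instance and its upgrade policy."""
    name: str
    current_version: str
    policy: str                           # "latest" or "custom" (assumed labels)
    pinned_version: Optional[str] = None  # only meaningful for "custom"


def version_key(version: str) -> Tuple[int, ...]:
    # Compare versions numerically so that "1.10.0" sorts after "1.2.0".
    return tuple(int(part) for part in version.split("."))


def target_version(instance: Instance, available: List[str]) -> str:
    """Return the version the instance should run under its policy."""
    if instance.policy == "latest":
        return max(available, key=version_key)
    if instance.policy == "custom":
        if instance.pinned_version is None:
            return instance.current_version  # provider has not chosen yet: stay put
        if instance.pinned_version not in available:
            raise ValueError(f"version {instance.pinned_version} is not available")
        return instance.pinned_version
    raise ValueError(f"unknown policy: {instance.policy}")


available_versions = ["1.0.0", "1.2.0", "1.10.0"]
auto = Instance("customer-a", "1.0.0", policy="latest")
staged = Instance("customer-b", "1.0.0", policy="custom", pinned_version="1.2.0")
held = Instance("customer-c", "1.0.0", policy="custom")

auto_target = target_version(auto, available_versions)
staged_target = target_version(staged, available_versions)
held_target = target_version(held, available_versions)
```

Keeping the decision in one function makes a staged rollout a matter of changing `pinned_version` instance by instance, while auto-upgraded instances follow the newest version without further action.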

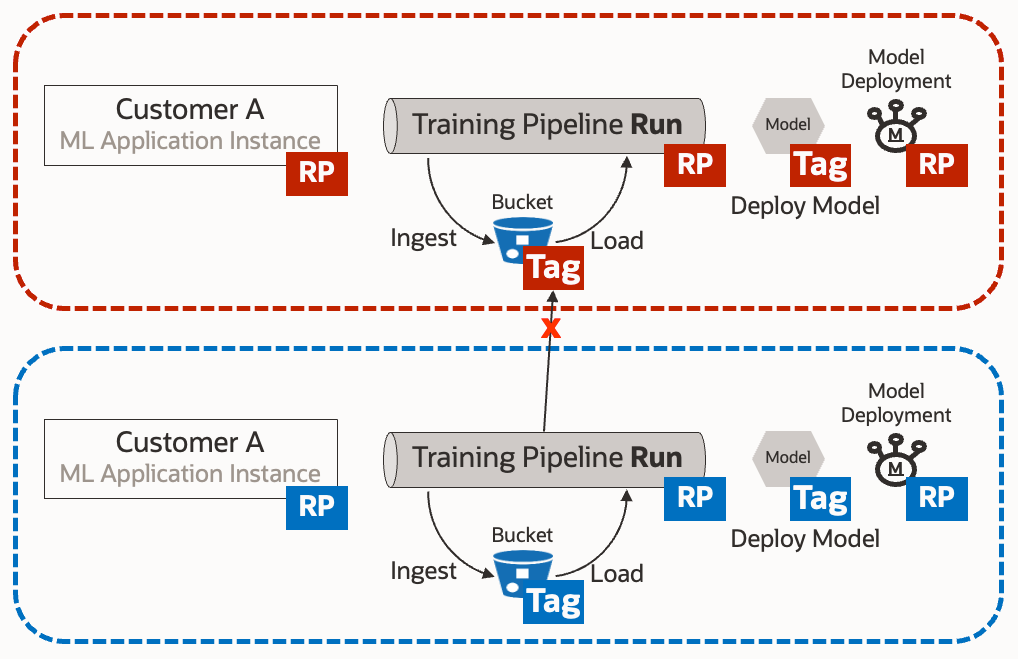

Tenant Isolation: Virtual Sandbox per-Customer

In multi-tenant environments, robust isolation between customer workloads is non-negotiable. ML Applications provide strong tenant isolation via an IAM-based virtual sandbox created for each ML Application Instance (customer). This helps ensure code running on behalf of a specific consumer can access only the resources explicitly belonging to that consumer.

Crucially, this goes beyond process-based isolation. Even defective code is prevented from accessing other consumers’ resources, significantly enhancing security and resilience.
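The idea that the boundary is enforced outside the workload code can be illustrated with a toy model. This sketch does not use OCI IAM or reproduce its policy language; the `Sandbox` class, the instance identifiers, and the resource names are hypothetical, and a dictionary stands in for real storage.

```python
from typing import Dict, Tuple


class Sandbox:
    """Hypothetical per-instance access boundary enforced by the platform, not by workload code."""

    def __init__(self, instance_id: str, store: Dict[str, Tuple[str, str]]) -> None:
        self._instance_id = instance_id
        self._store = store  # resource name -> (owning instance, data)

    def read(self, name: str) -> str:
        owner, data = self._store[name]
        if owner != self._instance_id:
            # The check runs regardless of what the calling code intended.
            raise PermissionError(f"{self._instance_id} may not read {name}")
        return data


store = {
    "acme/training-data": ("instance-acme", "acme rows"),
    "globex/training-data": ("instance-globex", "globex rows"),
}

acme = Sandbox("instance-acme", store)
own = acme.read("acme/training-data")

# Simulate defective workload code that asks for another consumer's resource.
try:
    acme.read("globex/training-data")
    leaked = True
except PermissionError:
    leaked = False
```

Because the ownership check lives in the sandbox rather than in the workload, a bug in the workload cannot widen its own access.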

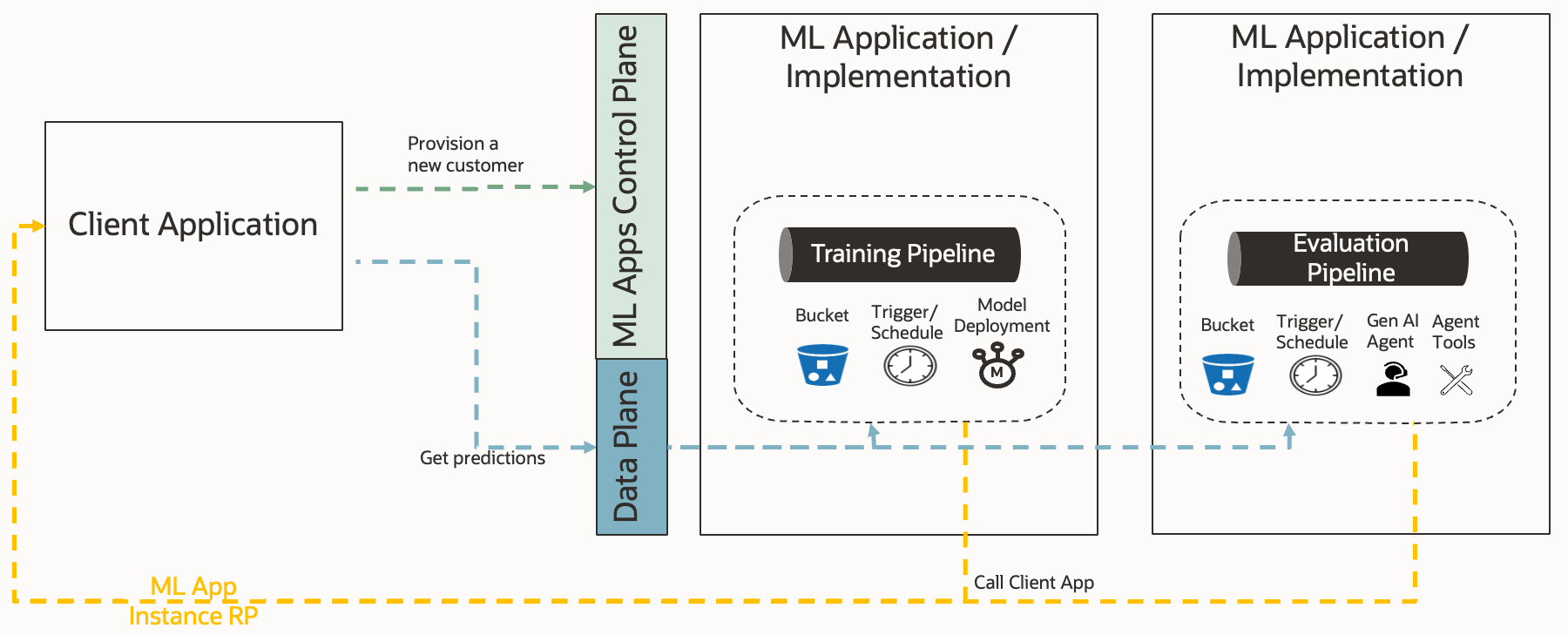

Unified Integration Layer: One Interface for a Wide Variety of AI Solutions

ML Applications serve as a unified integration layer, simplifying client application interaction with diverse AI/ML solutions. Regardless of underlying implementation (online, batch, or agentic), client applications use the same Control Plane and Data Plane APIs to provision and use these solutions.

Conversely, when a client application needs to grant privileges to the solution’s implementation to access its resources (e.g., databases, storage), it grants these privileges to the ML Application Instance (its Resource Principal) without needing to understand implementation intricacies. This standardization can significantly reduce integration complexity and promote consistency.
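As a rough illustration of one interface over different implementations, the sketch below defines a common abstract class with an online and a batch variant. All class and function names are hypothetical, and doubling each value stands in for a real model; the point is only that the client call is identical in both cases.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class Solution(ABC):
    """Hypothetical common interface that every solution type exposes."""

    @abstractmethod
    def predict(self, values: List[float]) -> Dict[str, List[float]]:
        ...


class OnlineSolution(Solution):
    def predict(self, values: List[float]) -> Dict[str, List[float]]:
        # Scores the request immediately.
        return {"predictions": [v * 2 for v in values]}


class BatchSolution(Solution):
    def __init__(self, chunk_size: int = 2) -> None:
        self.chunk_size = chunk_size

    def predict(self, values: List[float]) -> Dict[str, List[float]]:
        # Processes the input chunk by chunk, as a batch job might.
        predictions: List[float] = []
        for start in range(0, len(values), self.chunk_size):
            chunk = values[start:start + self.chunk_size]
            predictions.extend(v * 2 for v in chunk)
        return {"predictions": predictions}


def client_call(solution: Solution, values: List[float]) -> List[float]:
    # Client code is the same no matter which implementation sits behind it.
    return solution.predict(values)["predictions"]


online_result = client_call(OnlineSolution(), [1.0, 2.0, 3.0])
batch_result = client_call(BatchSolution(), [1.0, 2.0, 3.0])
```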

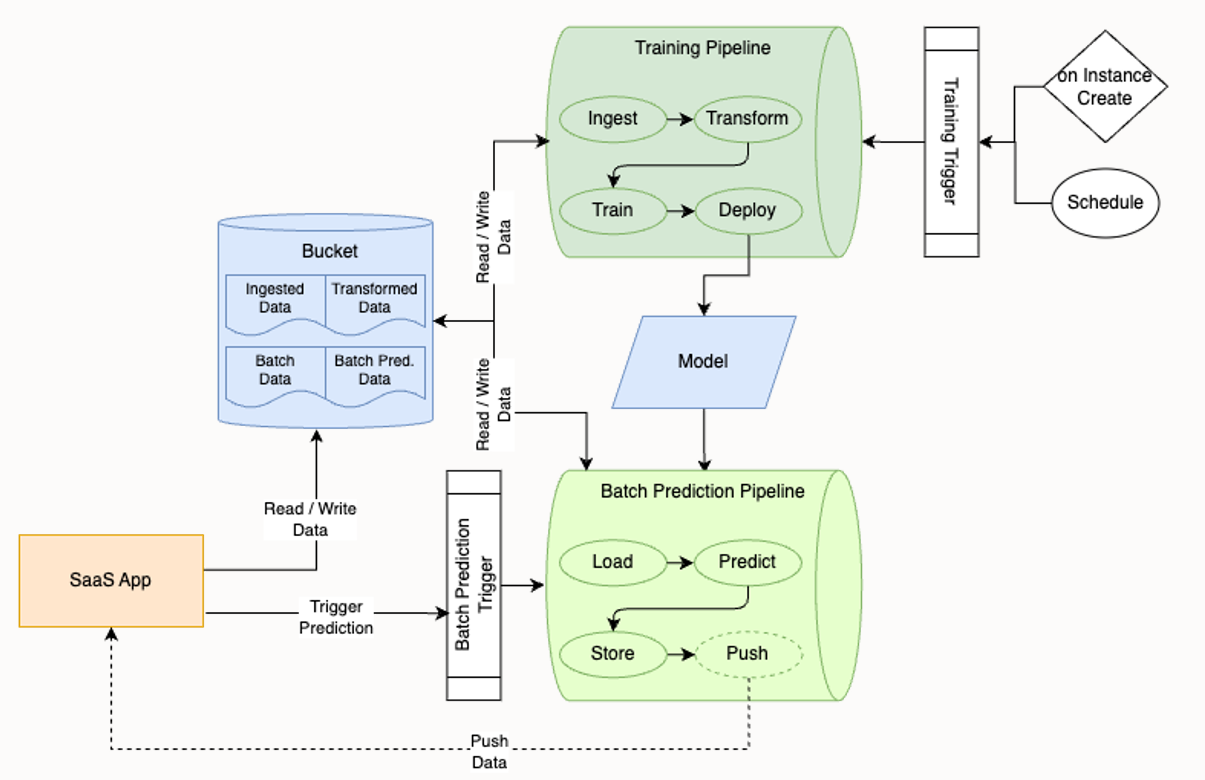

Composition: From Components to Production-Ready AI Solutions

ML Applications standardize the packaging and delivery of AI/ML solutions, enabling you to treat your entire solution as a cohesive unit. You can combine various fundamental components, leveraging OCI Data Science service resources like Jobs, Pipelines, and Model Deployments, alongside resources from other services such as Object Storage buckets or Data Flow Applications.

Your entire solution is then packaged and deployed as a single entity, discoverable and consumable by other applications without them needing to understand or manipulate the individual components. This allows you to implement diverse aspects of your AI/ML functionality and keep everything organized with well-defined interfaces for consumers. For example, you can bundle:

- Multiple training pipelines.

- Pipelines for feedback loops.

- Batch prediction pipelines.

- Schedules and triggers for periodic or event-driven runs.

- Online prediction services or intelligent agents.

- Data reconciliation or cleanup pipelines.

Everything is bundled together and treated as a unified, production-ready solution, significantly simplifying management and deployment.
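One way to picture such a bundle is as a single versioned object that lists its components. The sketch below is purely illustrative: `SolutionPackage`, `Component`, and the component kinds and names are hypothetical and do not reflect the actual ML Applications package format.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Component:
    kind: str  # e.g. "pipeline", "schedule", "model_deployment" (assumed labels)
    name: str


@dataclass
class SolutionPackage:
    """Hypothetical bundle that is versioned and deployed as one unit."""
    name: str
    version: str
    components: List[Component] = field(default_factory=list)

    def add(self, kind: str, name: str) -> "SolutionPackage":
        self.components.append(Component(kind, name))
        return self  # allow chained calls

    def summary(self) -> Dict[str, int]:
        # Count components per kind.
        counts: Dict[str, int] = {}
        for component in self.components:
            counts[component.kind] = counts.get(component.kind, 0) + 1
        return counts


package = (
    SolutionPackage("churn-scoring", "1.0.0")
    .add("pipeline", "training")
    .add("pipeline", "batch-prediction")
    .add("schedule", "nightly-training")
    .add("model_deployment", "online-scorer")
)
package_summary = package.summary()
```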

Conclusion

ML Applications in OCI Data Science represent a significant leap forward in the industrialization of AI and ML. By providing a platform that streamlines development and standardizes packaging and delivery, we allow all OCI customers, from independent software vendors building SaaS applications to large enterprises seeking to customize their AI capabilities, to leverage AI with exceptional efficiency.

If you’re facing the challenges of delivering and operating AI/ML at scale, we invite you to explore ML Applications. Let Oracle’s experience and robust capabilities transform your AI/ML journey.