In my last post, “How To Retrieve Analytics Data from ZFS Storage?”, I explained how to read Analytics datasets from ZFS Storage.

In this post, we will try to overlay two graphs. I hope this small attempt helps others get an overview of how to download the data from ZFS Storage, clean it up, and plot the graphs of their choice.

There are some points we need to discuss before overlaying the graphs.

- Why Do We Want To Overlay Datasets?

- Analytics Data Formats

- How To Parse Data

- Tips To Process Data Formats

We are going to use the following packages for this experiment. We are again using Python 3.

- Pandas

- Requests

- urllib3 (For us to suppress unnecessary warning messages.)

Why Do We Want To Overlay Datasets?

You may wonder why we need to draw a graph on our own when we already have Analytics.

Unfortunately, the current Analytics does not let you overlay graphs from different datasets. Having the data points on the same graph may help you see the correlation between the datasets. Therefore, there are cases where you want to read the data and draw your own graphs.

Analytics Data Formats

In my previous post, “How To Retrieve Analytics Data from ZFS Storage?”, I used the “requests” package in Python. It provides a json() method that converts the received response into a Python dictionary. If you want to make a graph, get statistical values such as the mean or distribution, or find correlations among the datasets, a table format is much handier because we can load it into R or Pandas quite easily.

Unfortunately, the Analytics data format changes depending on the data. Therefore, you need to translate it into an appropriate table before loading it into, for example, a Pandas data frame.

Analytics Data In Dictionary

The data converted by the json() method is a Python dictionary. Depending on which type of data you are reading, the format differs. This section covers those formats so that we can write the conversion code later.

The top level of the dictionary has the form {“data”: <value 1>}. The content of <value 1> is a list whose elements are also dictionaries.
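A simplified response of this shape might look like the sketch below. The field names are the ones described in this post; every value, including the layout of the timestamp string, is made up for illustration.

```python
# Illustrative result of response.json(); all values are invented.
response = {
    "data": [
        {
            "sample": 1001,
            "samples": 1002,
            "startTime": "20200101T00:00:00",  # assumed timestamp layout
            "data": {},  # no data was recorded for this data point
        },
        {
            "sample": 1002,
            "samples": 1002,
            "startTime": "20200101T00:00:01",
            "data": {},
        },
    ]
}

# Walk the list of data points held under the top-level "data" key.
for point in response["data"]:
    print(point["sample"], point["startTime"], point["data"])
```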

Each key-value pair of the dictionary is as follows.

“sample” is the serial number assigned when the data was collected. “samples” is the last serial number in the dataset covered by this response. “startTime” is the UTC timestamp of this data point. “data” holds the actual data. This field has three variations, depending on the data we are receiving.

The first variation is an empty dictionary, which we get when there is no data. The second variation has “max,” “min,” and “value” fields that contain the maximum, minimum, and average, respectively.

The third variation is the one you would see in “the number of NFS commands per second” Analytics. It has multiple data points in the form of a list of dictionaries.

“key” names what the data is about, and the “max,” “min,” and “value” fields hold the maximum, minimum, and average, respectively.
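The three variations can be sketched as plain Python values, together with a small helper that tells them apart. The helper name `describe_data_field` and all values are hypothetical. The sketch also assumes that the list in the third variation sits under a nested “data” key, which is what the “data.data” column name mentioned later in this post suggests; the real layout may differ.

```python
def describe_data_field(data):
    """Return which of the three variations a "data" field looks like."""
    if not data:
        return "empty"
    # Assumption: the per-key list is nested under another "data" key.
    if isinstance(data.get("data"), list):
        return "breakdown"
    return "summary"


# Variation 1: no data was recorded.
no_data = {}

# Variation 2: a single maximum / minimum / average triple.
summary = {"max": 12, "min": 3, "value": 7}

# Variation 3: one triple per key, for example per NFS command.
breakdown = {
    "data": [
        {"key": "getattr", "max": 9, "min": 1, "value": 4},
        {"key": "read", "max": 30, "min": 10, "value": 20},
    ]
}

for field in (no_data, summary, breakdown):
    print(describe_data_field(field))
```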

How To Parse Data

To plot the graphs in a single chart, we ultimately need to merge the datasets.

I initially tried to merge the data in the following steps.

- Read the data from ZFS Storage

- Convert the response into a dictionary using the json() method.

- Concatenate the new dictionary with the old one.

- Repeat the first three steps until we have all the necessary data

- Convert the dictionary to a Pandas data frame

- Clean up NaN by converting it to 0 so that “matplotlib” can draw a graph later.

However, after some trial and error, it seems to me that converting the responses into Pandas data frames with json_normalize() first, and then cleaning up the data, is more flexible. The drawback is that this produces a column that holds multiple data points. To avoid that, I wrote a function to split them into separate columns of the data frame. My sample code uses this method.

It is not the best code, but there is a link to working sample code at the end of this post.
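The normalize-then-split approach could be sketched roughly as follows. This is not the linked sample code: the function name `expand_breakdown`, the sample response, and the assumption that the per-key list ends up in a column named “data.data” are all illustrative.

```python
import pandas as pd


def expand_breakdown(df, column="data.data"):
    """Spread a column of [{"key", "max", "min", "value"}, ...] lists into
    one column per key and statistic, such as "read_min"."""
    rows = []
    for cell in df[column]:
        row = {}
        # A cell is a list when the data point has a breakdown; anything
        # else (for example NaN for an empty data point) gives an empty row.
        if isinstance(cell, list):
            for item in cell:
                for stat in ("max", "min", "value"):
                    if stat in item:
                        row[f"{item['key']}_{stat}"] = item[stat]
        rows.append(row)
    expanded = pd.DataFrame(rows, index=df.index)
    return pd.concat([df.drop(columns=[column]), expanded], axis=1)


# Made-up response with one populated and one empty data point.
response = {
    "data": [
        {"sample": 1, "samples": 2, "startTime": "20200101T00:00:00",
         "data": {"data": [
             {"key": "getattr", "max": 9, "min": 1, "value": 4},
             {"key": "read", "max": 30, "min": 10, "value": 20},
         ]}},
        {"sample": 2, "samples": 2, "startTime": "20200101T00:00:01",
         "data": {}},
    ]
}

df = pd.json_normalize(response["data"])  # nested keys become "data.data"
df = expand_breakdown(df)
print(df.columns.tolist())
```

Data points without a breakdown end up as NaN in the new columns, which is why the NaN handling discussed later still matters.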

Tips To Process Data Formats

There are a few things you need to do before passing your data frame to matplotlib to draw a graph. The same tips apply when you are working with the data as a dictionary.

(a) Avoid Collision of Dataframe Column Names

A Pandas data frame is two-dimensional. A value is addressed by its column name and its index value. Writing with the same column name and index overwrites the existing value. The same applies to a dictionary: using the same key for different data destroys the original data. A dictionary needs a unique key for each piece of data it stores.

However, as you may have noticed, min, max, and value are very general words. They are often used as column names in different datasets, so you may end up with the same column name coming from different datasets. The sample code looks at the content of “data.data” and tries to make unique column names at conversion. It combines the value of “key” with the name of the statistic (min, max, or value) to create a column name such as “read_min.”

In practice, this can still end in a collision if the datasets are for NFSv3 and NFSv4 command statistics. In my sample code, the user can specify a prefix of choice to put in front of the column name at conversion, using the “prefix” parameter of the “expand_column()” function.

With unique column names, concatenating data frames does not cause collisions, so the code does not load data into the wrong column.
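To illustrate the idea of prefixing, here is a minimal sketch with two made-up data frames that share column names. It uses the Pandas `add_prefix()` method rather than the “prefix” parameter of the sample code, and the prefixes are arbitrary.

```python
import pandas as pd

# Two made-up datasets whose column names collide.
nfs3 = pd.DataFrame({"read_min": [1, 2], "read_max": [5, 6]})
nfs4 = pd.DataFrame({"read_min": [10, 20], "read_max": [50, 60]})

# Prefix each dataset before placing the columns side by side.
merged = pd.concat(
    [nfs3.add_prefix("nfs3_"), nfs4.add_prefix("nfs4_")],
    axis=1,
)
print(merged.columns.tolist())
# ['nfs3_read_min', 'nfs3_read_max', 'nfs4_read_min', 'nfs4_read_max']
```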

(b) startTime Format

The original data type of the “startTime” field is a string. In the sample code, I converted the column to the datetime type using the to_datetime() function in Pandas. If you want to use the column as the index when plotting with matplotlib, use the set_index() method to make it the index of the data frame.
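A minimal sketch of this conversion follows. The timestamp layout in the `format` argument is an assumption made for the example; adjust it to match the strings you actually receive.

```python
import pandas as pd

df = pd.DataFrame({
    "startTime": ["20200101T00:00:00", "20200101T00:00:01"],  # made-up values
    "value": [3, 5],
})

# Parse the strings into datetime values (the format string is an assumption).
df["startTime"] = pd.to_datetime(df["startTime"], format="%Y%m%dT%H:%M:%S")

# Use the timestamps as the index so they become the x-axis when plotting.
df = df.set_index("startTime")
print(df.index)
```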

(c) Processing NaN

Missing a data point and having a 0 (zero) are different. However, Matplotlib breaks a line wherever it meets NaN (Not a Number), so to get a continuous plot you need to fill in some number there. In my sample code, we treat NaNs as zeros.
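Treating NaNs as zeros can be done with `fillna()`, as in this small sketch. The column names and values are made up.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "read_value": [4.0, np.nan, 6.0],
    "hdd3_iops": [np.nan, 120.0, 130.0],
})

# Replace every missing value with 0 before plotting.
filled = df.fillna(0)
print(filled)
```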

(d) Drop Unnecessary Columns

When displaying the graph, columns such as “sample” or “samples” aren’t necessary. Drop these columns before passing the data frame to “matplotlib.pyplot.”
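Dropping the bookkeeping columns might look like the sketch below, again with made-up data. Passing `errors="ignore"` is a choice made here so the call does not fail when a column is already absent.

```python
import pandas as pd

df = pd.DataFrame({
    "sample": [1001, 1002],
    "samples": [1002, 1002],
    "read_value": [4, 6],
})

# Keep only the columns that should appear as lines in the graph.
plot_df = df.drop(columns=["sample", "samples"], errors="ignore")
print(plot_df.columns.tolist())
```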

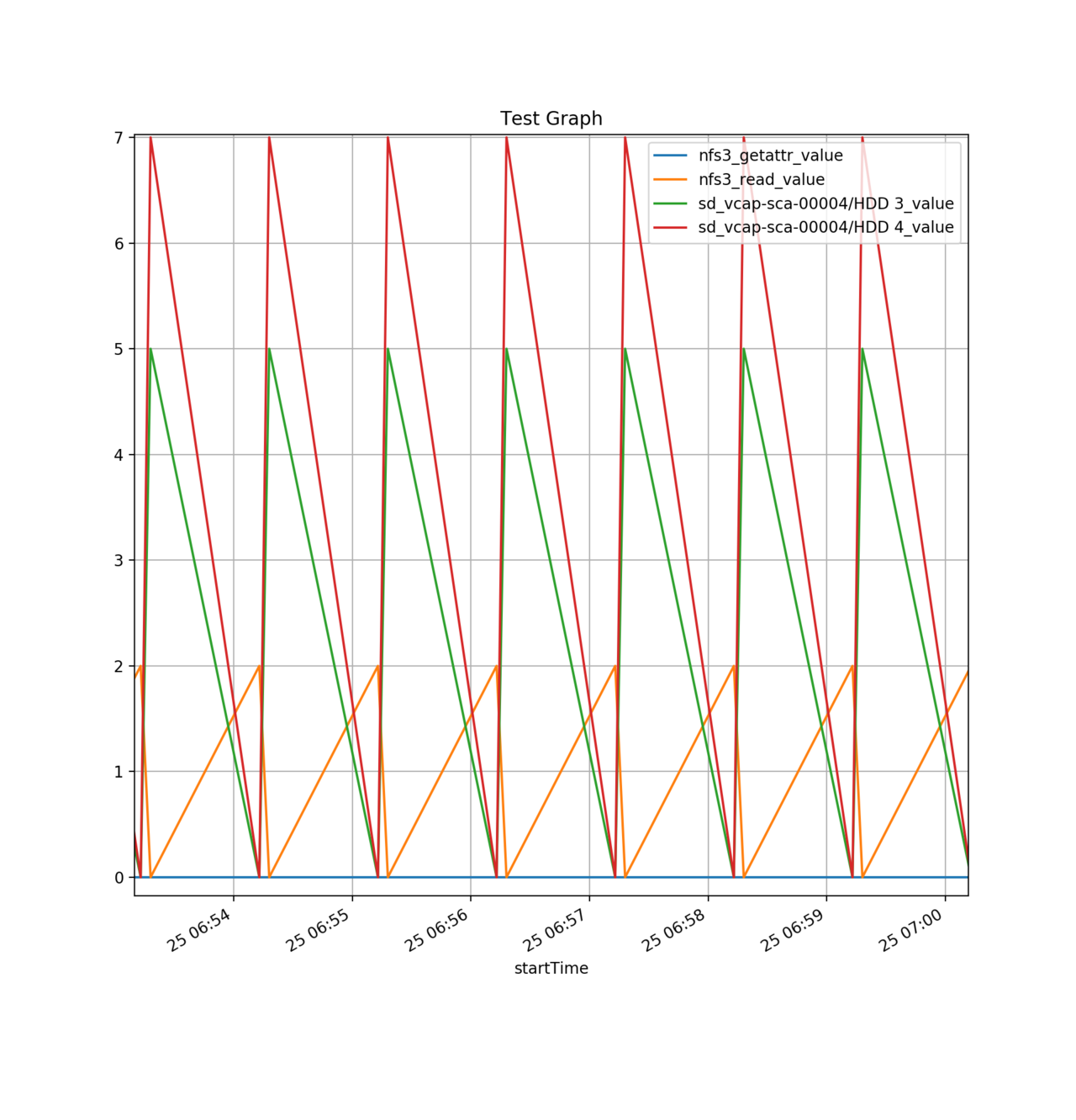

Sample Graph

I downloaded 24 hours’ worth of data for the number of NFSv3 GETATTR and READ commands, as well as disk IOPS for HDD3 and HDD4. Per-second data for 24 hours results in 86,400 data points for each dataset. Plotting all the downloaded data points as they were made my line plot look almost like a bar chart. So the sample graph is a zoomed-in version covering a 6-minute window.
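Zooming in on a short window is straightforward once the timestamps are the index. The sketch below builds a made-up day of per-second data and slices out six minutes; the dates, column name, and window are arbitrary.

```python
import numpy as np
import pandas as pd

# One made-up value per second for 24 hours: 86,400 rows.
index = pd.date_range("2020-01-01", periods=86_400, freq="s")
df = pd.DataFrame({"read_value": np.arange(86_400)}, index=index)

# Label-based slicing on a DatetimeIndex includes both end points.
zoomed = df.loc["2020-01-01 12:00:00":"2020-01-01 12:05:59"]
print(len(df), len(zoomed))
```

Calling `zoomed.plot()` would then draw every column of the window on one set of axes, which is the overlay this post is after.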

It’s a simple I/O graph and not the best graph for a sample, but you get an idea of how we can overlay the graphs.

Sample Code

https://gitlab.com/hisao.tsujimura/public/blob/master/zfssa-rest-analytics/graph_overlay.py