This blog post describes WebLogic Server global transactions running in a Kubernetes environment. First, we’ll review how the WebLogic Server Transaction Manager (TM) processes distributed transactions. Then, we’ll walk through an example transactional application that is deployed to WebLogic Server domains running in a Kubernetes cluster with the WebLogic Kubernetes Operator.

WebLogic Server Transaction Manager

Introduction

The WebLogic Server Transaction Manager (TM) is the transaction processing monitor implementation in WebLogic Server that supports the Java Enterprise Edition (Java EE) Java Transaction API (JTA). A Java EE application uses JTA to manage global transactions to ensure that changes to resource managers, such as databases and messaging systems, either complete as a unit, or are undone.

This section provides a brief introduction to the WebLogic Server TM, specifically around network communication and related configuration, which will be helpful when we examine transactions in a Kubernetes environment. There are many TM features, optimizations, and configuration options that won’t be covered in this article. Refer to the following WebLogic Server documentation for additional details:

· For general information about the WebLogic Server TM, see the WebLogic Server JTA documentation.

· For detailed information regarding the Java Transaction API, see the Java EE JTA Specification.

How Transactions are Processed in WebLogic Server

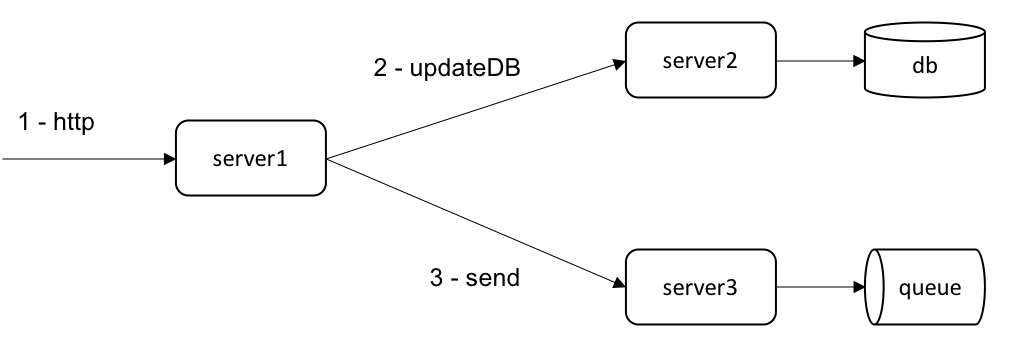

To get a basic understanding of how the WebLogic Server TM processes transactions, we’ll look at a hypothetical application. Consider a web application consisting of a servlet that starts a transaction, inserts a record in a database table, and sends a message to a Java Message Service (JMS) queue destination. After updating the JDBC and JMS resources, the servlet commits the transaction. The following diagram shows the server and resource transaction participants.

Transaction Propagation

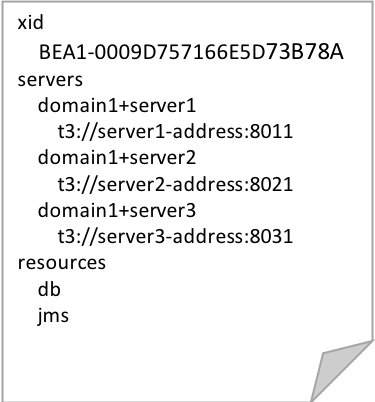

The transaction context builds up state as it propagates between servers and as resources are accessed by the application. For this application, the transaction context at commit time would look something like the following.

Server participants, identified by domain name and server name, have an associated URL that is used for internal TM communication. These URLs are typically derived from the server’s default network channel, or default secure network channel.

The transaction context also contains information about which server participants have javax.transaction.Synchronization callbacks registered. The JTA synchronization API is a callback mechanism in which the TM invokes the Synchronization.beforeCompletion() method before commencing two-phase commit processing for a transaction. After transaction processing is complete, the TM invokes the Synchronization.afterCompletion(int status) method with the final status of the transaction (for example, committed or rolled back).
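The callback ordering can be illustrated with a small, self-contained sketch. It does not use JTA or WebLogic Server: the Sync interface and the ToyTransaction class are hypothetical stand-ins that only mirror the shape of javax.transaction.Synchronization so that the example runs with nothing but the JDK. The status value 3 matches javax.transaction.Status.STATUS_COMMITTED.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for javax.transaction.Synchronization (not the real JTA type).
interface Sync {
    void beforeCompletion();
    void afterCompletion(int status);
}

// Toy transaction that models callback ordering only, not real commit processing.
class ToyTransaction {
    // Same numeric value as javax.transaction.Status.STATUS_COMMITTED.
    static final int STATUS_COMMITTED = 3;

    private final List<Sync> syncs = new ArrayList<>();
    final List<String> events = new ArrayList<>();

    void registerSynchronization(Sync sync) {
        syncs.add(sync);
    }

    void commit() {
        // 1. beforeCompletion callbacks run before two-phase commit starts.
        for (Sync s : syncs) s.beforeCompletion();
        // 2. Placeholder for preparing and committing resources.
        events.add("two-phase commit");
        // 3. afterCompletion callbacks receive the final status.
        for (Sync s : syncs) s.afterCompletion(STATUS_COMMITTED);
    }
}

class SyncOrderDemo {
    static List<String> run() {
        ToyTransaction tx = new ToyTransaction();
        for (String server : new String[] {"server1", "server2"}) {
            tx.registerSynchronization(new Sync() {
                public void beforeCompletion() {
                    tx.events.add(server + " beforeCompletion");
                }
                public void afterCompletion(int status) {
                    tx.events.add(server + " afterCompletion status=" + status);
                }
            });
        }
        tx.commit();
        return tx.events;
    }

    public static void main(String[] args) {
        run().forEach(System.out::println);
    }
}
```

Running SyncOrderDemo prints both beforeCompletion events, then the commit placeholder, then both afterCompletion events. The real TM distributes these calls across servers, which the sketch does not attempt to model.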

Transaction Completion

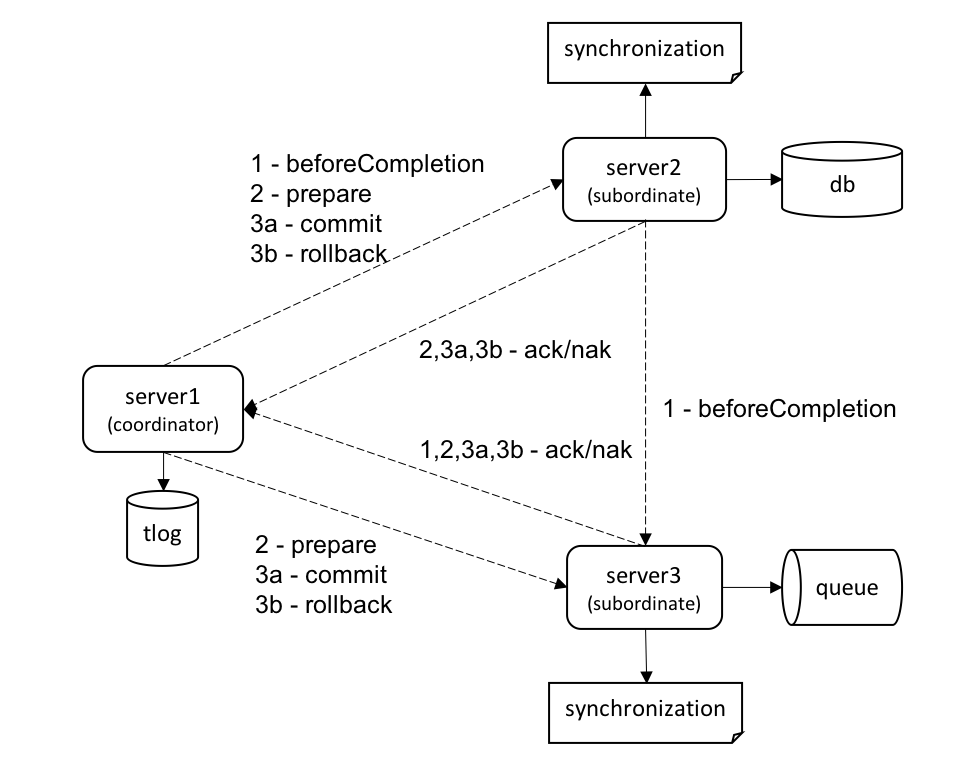

When the TM is instructed to commit the transaction, the TM takes over and coordinates the completion of the transaction. One of the server participants is chosen as the transaction coordinator to drive the two-phase commit protocol. The coordinator instructs the remaining subordinate servers to process registered synchronization callbacks, and to prepare, commit, or roll back resources. The TM communication channels used to coordinate the example transaction are illustrated in the following diagram.

The dashed-line arrows represent asynchronous RMI calls between the coordinator and subordinate servers. Note that the Synchronization.beforeCompletion() communication can take place directly between subordinate servers. It is also important to point out that application communication is conceptually separate from the internal TM communication, as the TM may establish network channels that were not used by the application to propagate the transaction. The TM could use different protocols, addresses, and ports depending on how the server default network channels are configured.
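To make the coordinator's role concrete, here is a minimal in-process sketch of the two-phase commit decision logic. It is an illustration under simplifying assumptions, not the WebLogic Server implementation: the Participant interface, ToyCoordinator, and NamedParticipant are hypothetical names, all calls are synchronous rather than asynchronous RMI, and there is no transaction log or recovery.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical participant interface; the TM's internal interfaces differ.
interface Participant {
    boolean prepare();   // vote: true means ready to commit
    void commit();
    void rollback();
}

class ToyCoordinator {
    // Returns true if the transaction committed, false if it was rolled back.
    static boolean complete(List<? extends Participant> participants) {
        List<Participant> prepared = new ArrayList<>();
        // Phase 1: ask each participant to prepare.
        for (Participant p : participants) {
            if (p.prepare()) {
                prepared.add(p);
            } else {
                // A single "no" vote aborts: roll back everyone prepared so far.
                for (Participant q : prepared) q.rollback();
                return false;
            }
        }
        // Phase 2: every participant voted yes, so commit everywhere.
        for (Participant p : prepared) p.commit();
        return true;
    }
}

class NamedParticipant implements Participant {
    final String name;
    final boolean vote;
    String state = "active";

    NamedParticipant(String name, boolean vote) {
        this.name = name;
        this.vote = vote;
    }

    public boolean prepare() {
        // A participant that votes no is assumed to roll back its own work.
        state = vote ? "prepared" : "rolledback";
        return vote;
    }

    public void commit() { state = "committed"; }

    public void rollback() { state = "rolledback"; }
}

class TwoPhaseDemo {
    public static void main(String[] args) {
        NamedParticipant a = new NamedParticipant("managed-server1", true);
        NamedParticipant b = new NamedParticipant("managed-server2", true);
        boolean committed = ToyCoordinator.complete(Arrays.asList(a, b));
        System.out.println("committed=" + committed + " " + a.state + " " + b.state);
    }
}
```

The sketch stops at the first "no" vote, so participants that were never asked to prepare remain in the "active" state. A production coordinator would also have to handle timeouts and lost replies, which is where the transaction log comes in.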

Configuration Recommendations

There are a few TM configuration recommendations related to server network addresses, persistent storage, and server naming.

Server Network Addresses

As mentioned previously, server participants locate each other using URLs included in the transaction context. It is important that the network channels used for TM URLs be configured with address names that are resolvable after node, pod, or container restarts where IP addresses may change. Also, because the TM requires direct server-to-server communication, cluster or load-balancer addresses that resolve to multiple IP addresses should not be used.
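As a rough illustration of this recommendation, the following sketch flags channel URLs whose host is an IP literal rather than a name. It is only a heuristic written for this article: ChannelAddressCheck is a hypothetical helper, the two URLs are made-up examples, and the check says nothing about whether a name actually resolves in your cluster.

```java
import java.net.URI;

class ChannelAddressCheck {
    // Extracts the host portion of a URL such as t3://host:port.
    static String hostOf(String url) {
        String host = URI.create(url).getHost();
        if (host == null) {
            throw new IllegalArgumentException("no host in " + url);
        }
        return host;
    }

    // Heuristic only: an IPv4 dotted quad, or a ':' in the host (IPv6 literal).
    static boolean looksLikeIpLiteral(String host) {
        return host.matches("\\d{1,3}(\\.\\d{1,3}){3}") || host.contains(":");
    }

    public static void main(String[] args) {
        String[] urls = {"t3://domain1-managed-server1:8001", "t3://10.1.2.3:8001"};
        for (String url : urls) {
            String host = hostOf(url);
            String verdict = looksLikeIpLiteral(host)
                ? "IP literal, may change after a restart"
                : "address name";
            System.out.println(url + " -> " + verdict);
        }
    }
}
```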

Transaction Logs

The coordinating server persists state in the transaction log (TLOG) that is used for transaction recovery processing after failure. Because a server instance may relocate to another node, the TLOG needs to reside in a networked or replicated file system (for example, NFS or SAN) or in a highly available database such as Oracle RAC. For additional information, refer to the High Availability Guide.

Cross-Domain Transactions

Transactions that span WebLogic Server domains are referred to as cross-domain transactions. Cross-domain transactions introduce additional configuration requirements, especially when the domains are connected by a public network.

Server Naming

The TM identifies server participants using a combination of the domain name and server name. Therefore, each domain should be named uniquely to prevent name collisions. Server participant name collisions will cause transactions to be rolled back at runtime.
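The following sketch shows why the combination of domain name and server name has to be unique. It is a simplified model, not the TM's data structure: ParticipantRegistry is a hypothetical class, and the way it reports a collision (returning false) is an assumption made for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ParticipantRegistry {
    private final Map<String, String> urlsById = new LinkedHashMap<>();

    // Participant ID built from the domain name and the server name.
    static String id(String domainName, String serverName) {
        return domainName + "+" + serverName;
    }

    // Returns false when a different URL is already registered under the same ID.
    boolean add(String domainName, String serverName, String url) {
        String existing = urlsById.putIfAbsent(id(domainName, serverName), url);
        return existing == null || existing.equals(url);
    }

    public static void main(String[] args) {
        ParticipantRegistry registry = new ParticipantRegistry();
        // Two different servers in two domains that share the name "mydomain".
        System.out.println(registry.add("mydomain", "managed-server1", "t3://host-a:8001"));
        System.out.println(registry.add("mydomain", "managed-server1", "t3://host-b:8001"));
        // Giving the second domain its own name removes the ambiguity.
        System.out.println(registry.add("mydomain2", "managed-server1", "t3://host-b:8001"));
    }
}
```

The second add call returns false because two distinct servers map to the same ID, which is the situation that unique domain names avoid.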

Security

Server participants that are connected by a public network require the use of secure protocols (for example, t3s) and authorization checks to verify that the TM communication is legitimate. For the purpose of this demonstration, we won’t cover these topics in detail. For the Kubernetes example application, all TM communication will take place on the private Kubernetes network and will use a non-SSL protocol.

For details on configuring security for cross-domain transactions, refer to the Configuring Secure Inter-Domain and Intra-Domain Transaction Communication chapter of the Fusion Middleware Developing JTA Applications for Oracle WebLogic Server documentation.

WebLogic Server on Kubernetes

In an effort to improve WebLogic Server integration with Kubernetes, Oracle has released the open source WebLogic Kubernetes Operator. The WebLogic Kubernetes Operator supports the creation and management of WebLogic Server domains, integration with various load balancers, and additional capabilities. For details refer to the GitHub project page, https://github.com/oracle/weblogic-kubernetes-operator, and the related blogs at https://blogs.oracle.com/weblogicserver/how-to-weblogic-server-on-kubernetes.

Example Transactional Application Walkthrough

To illustrate running distributed transactions on Kubernetes, we’ll step through a simplified transactional application that is deployed to multiple WebLogic Server domains running in a single Kubernetes cluster. The environment that I used for this example is a Mac running Docker Edge v18.05.0-ce that includes Kubernetes v1.9.6.

After installing and starting Docker Edge, open the Preferences page, increase the memory available to Docker under the Advanced tab (~8 GiB) and enable Kubernetes under the Kubernetes tab. After applying the changes, Docker and Kubernetes will be started. If you are behind a firewall, you may also need to add the appropriate settings under the Proxies tab. Once running, you should be able to list the Kubernetes version information.

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.6", GitCommit:"9f8ebd171479bec0ada837d7ee641dec2f8c6dd1", GitTreeState:"clean", BuildDate:"2018-03-21T15:21:50Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.6", GitCommit:"9f8ebd171479bec0ada837d7ee641dec2f8c6dd1", GitTreeState:"clean", BuildDate:"2018-03-21T15:13:31Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}

To keep the example file system path names short, the working directory for input files, operator sources and binaries, persistent volumes, and so on is created under $HOME/k8sop. You can reference the directory using the environment variable $K8SOP.

$ export K8SOP=$HOME/k8sop
$ mkdir $K8SOP

Install the WebLogic Kubernetes Operator

The next step will be to build and install the weblogic-kubernetes-operator image. Refer to the installation procedures at https://oracle.github.io/weblogic-kubernetes-operator/userguide/managing-operators/installation/.

Note that for this example, the weblogic-kubernetes-operator GitHub project will be cloned under the $K8SOP/src directory ($K8SOP/src/weblogic-kubernetes-operator). Also note that when building the Docker image, use the tag “local” in place of “some-tag” that’s specified in the installation docs.

$ mkdir $K8SOP/src
$ cd $K8SOP/src
$ git clone https://github.com/oracle/weblogic-kubernetes-operator.git
$ cd weblogic-kubernetes-operator
$ mvn clean install
$ docker login
$ docker build -t weblogic-kubernetes-operator:local --no-cache=true .

After building the operator image, you should see it in the local registry.

$ docker images weblogic-kubernetes-operator
REPOSITORY                     TAG     IMAGE ID       CREATED          SIZE
weblogic-kubernetes-operator   local   42a5f70c7287   10 seconds ago   317MB

The next step will be to deploy the operator to the Kubernetes cluster. For this example, we will modify the create-weblogic-operator-inputs.yaml file to add an additional target namespace (weblogic) and specify the correct operator image name.

| Attribute | Value |
| --- | --- |
| targetNamespaces | default,weblogic |
| weblogicOperatorImage | weblogic-kubernetes-operator:local |
| javaLoggingLevel | WARNING |

Save the modified input file under $K8SOP/create-weblogic-operator-inputs.yaml.

Then run the create-weblogic-operator.sh script, specifying the path to the modified create-weblogic-operator-inputs.yaml input file and the path of the operator output directory.

$ cd $K8SOP
$ mkdir weblogic-kubernetes-operator
$ $K8SOP/src/weblogic-kubernetes-operator/kubernetes/create-weblogic-operator.sh -i $K8SOP/create-weblogic-operator-inputs.yaml -o $K8SOP/weblogic-kubernetes-operator

When the script completes you will be able to see the operator pod running.

$ kubectl get po -n weblogic-operator
NAME                                 READY   STATUS    RESTARTS   AGE
weblogic-operator-6dbf8bf9c9-prhwd   1/1     Running   0          44s

WebLogic Domain Creation

The procedures for creating a WebLogic Server domain are documented at https://oracle.github.io/weblogic-kubernetes-operator/quickstart/create-domain. To pull the WebLogic Server image from the Oracle Container Registry into the local registry, follow the instructions at https://oracle.github.io/weblogic-kubernetes-operator/userguide/base-images/#obtain-standard-images-from-the-oracle-container-registry. You’ll be able to pull the image after accepting the license agreement on the Docker store.

$ docker login container-registry.oracle.com
$ docker pull container-registry.oracle.com/middleware/weblogic:12.2.1.4

Next, we’ll create a Kubernetes secret to hold the administrative credentials for our domain (weblogic/weblogic1).

$ kubectl -n weblogic create secret generic domain1-weblogic-credentials --from-literal=username=weblogic --from-literal=password=weblogic1

The persistent volume location for the domain will be under $K8SOP/volumes/domain1.

$ mkdir -m 777 -p $K8SOP/volumes/domain1

Then we’ll customize the $K8SOP/src/weblogic-kubernetes-operator/kubernetes/create-weblogic-domain-inputs.yaml example input file, modifying the following attributes:

| Attribute | Value |
| --- | --- |
| weblogicDomainStoragePath | {full path of $HOME}/k8sop/volumes/domain1 |
| domainName | domain1 |
| domainUID | domain1 |
| t3PublicAddress | {your-local-hostname} |
| exposeAdminT3Channel | true |
| exposeAdminNodePort | true |
| namespace | weblogic |

After saving the updated input file to $K8SOP/create-domain1.yaml, invoke the create-weblogic-domain.sh script as follows.

$ $K8SOP/src/weblogic-kubernetes-operator/kubernetes/create-weblogic-domain.sh -i $K8SOP/create-domain1.yaml -o $K8SOP/weblogic-kubernetes-operator

After the create-weblogic-domain.sh script completes, Kubernetes will start up the Administration Server and the clustered Managed Server instances. After a while, you can see the running pods.

$ kubectl get po -n weblogic
NAME                                        READY   STATUS    RESTARTS   AGE
domain1-admin-server                        1/1     Running   0          5m
domain1-cluster-1-traefik-9985d9594-gw2jr   1/1     Running   0          5m
domain1-managed-server1                     1/1     Running   0          3m
domain1-managed-server2                     1/1     Running   0          3m

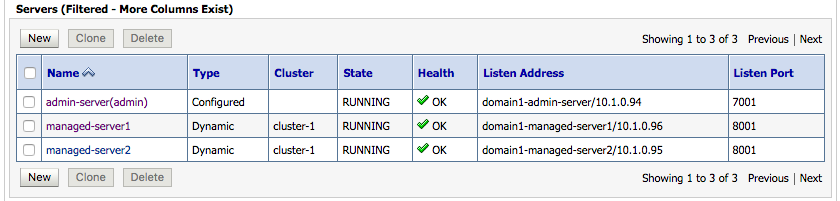

Now we can check the state of the domain by opening the WebLogic Server Administration Console at http://localhost:30701/console and logging in with the credentials weblogic/weblogic1. The following screenshot shows the Servers page.

The Administration Console Servers page shows all of the servers in domain1. Note that each server has a listen address that corresponds to a Kubernetes service name that is defined for the specific server instance. The service name is derived from the domainUID (domain1) and the server name.

These address names are resolvable within the Kubernetes namespace and, along with the listen port, are used to define each server’s default network channel. As mentioned previously, the default network channel URLs are propagated with the transaction context and are used internally by the TM for distributed transaction coordination.
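As a small illustration of the naming scheme, the sketch below rebuilds the service name and default channel URL for a server from the domainUID, the server name, and the listen port. ServiceNames is a hypothetical helper written for this article. It assumes plain concatenation with a hyphen, which matches the names seen in this walkthrough; the operator may apply additional normalization that is not modeled here.

```java
class ServiceNames {
    // Service name as seen in this walkthrough: <domainUID>-<serverName>.
    static String serviceName(String domainUid, String serverName) {
        return domainUid + "-" + serverName;
    }

    // URL for the server's default (non-secure) channel using the t3 protocol.
    static String t3Url(String domainUid, String serverName, int port) {
        return "t3://" + serviceName(domainUid, serverName) + ":" + port;
    }

    public static void main(String[] args) {
        System.out.println(t3Url("domain1", "managed-server1", 8001));
        System.out.println(t3Url("domain1", "managed-server2", 8001));
    }
}
```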

Example Application

Now that we have a WebLogic Server domain running under Kubernetes, we will look at an example application that can be used to verify distributed transaction processing. To make the example as simple as possible, it will be limited in scope to transaction propagation between servers and synchronization callback processing. This will allow us to verify inter-server transaction communication without the need for resource manager configuration and the added complexity of writing JDBC or JMS client code.

The application consists of two main components: a servlet front end and an RMI remote object. The servlet processes a GET request that contains a list of URLs. It starts a global transaction and then invokes the remote object at each of the URLs. The remote object simply registers a synchronization callback that prints a message to stdout in the beforeCompletion and afterCompletion callback methods. Finally, the servlet commits the transaction and sends a response containing information about each of the RMI calls and the outcome of the global transaction.

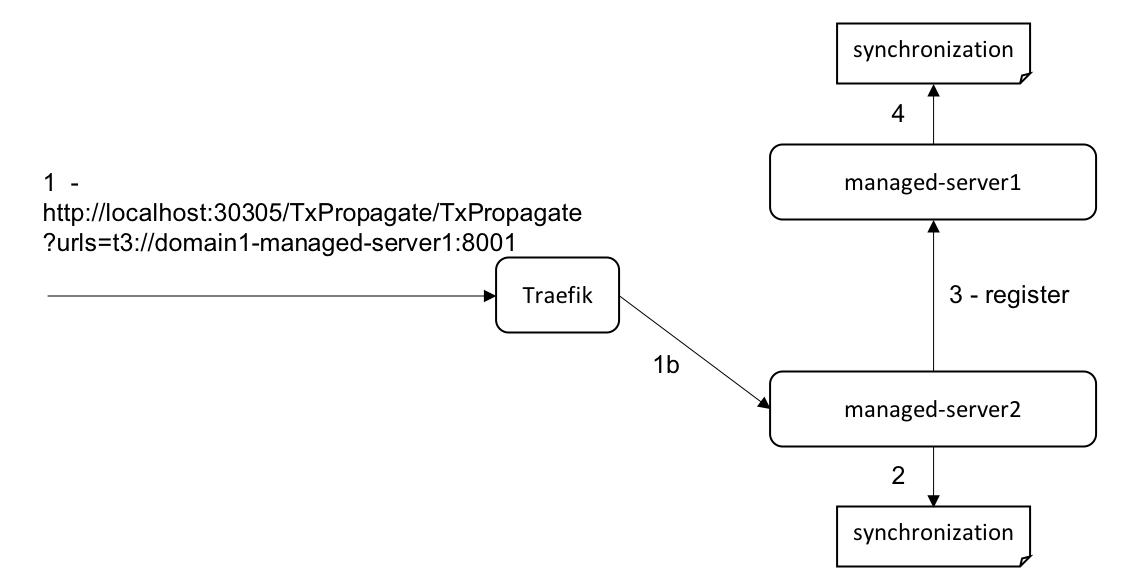

The following diagram illustrates running the example application on the domain1 servers in the Kubernetes cluster. The servlet is invoked using the Administration Server’s external port. The servlet starts the transaction, registers a local synchronization object, and invokes the register operation on the Managed Servers using their Kubernetes internal URLs: t3://domain1-managed-server1:8001 and t3://domain1-managed-server2:8001.

TxPropagate Servlet

As mentioned above, the servlet starts a transaction and then invokes the RemoteSync.register() remote method on each of the server URLs specified. Then the transaction is committed and the results are returned to the caller.

package example;

import java.io.IOException;
import java.io.PrintWriter;

import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NamingException;
import javax.servlet.ServletException;
import javax.servlet.annotation.WebServlet;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import javax.transaction.HeuristicMixedException;
import javax.transaction.HeuristicRollbackException;
import javax.transaction.NotSupportedException;
import javax.transaction.RollbackException;
import javax.transaction.SystemException;

import weblogic.transaction.Transaction;
import weblogic.transaction.TransactionHelper;
import weblogic.transaction.TransactionManager;

@WebServlet("/TxPropagate")
public class TxPropagate extends HttpServlet {
  private static final long serialVersionUID = 7100799641719523029L;

  private TransactionManager tm = (TransactionManager)
      TransactionHelper.getTransactionHelper().getTransactionManager();

  protected void doGet(HttpServletRequest request,
      HttpServletResponse response) throws ServletException, IOException {
    PrintWriter out = response.getWriter();

    // comma-separated list of server URLs to propagate the transaction to
    String urlsParam = request.getParameter("urls");
    if (urlsParam == null) return;
    String[] urls = urlsParam.split(",");

    try {
      // look up the RemoteSync object bound in the local server's JNDI tree
      RemoteSync forward = (RemoteSync)
          new InitialContext().lookup(RemoteSync.JNDINAME);

      tm.begin();
      Transaction tx = (Transaction) tm.getTransaction();
      out.println("<pre>");
      out.println(Utils.getLocalServerID() + " started " +
          tx.getXid().toString());

      // register a synchronization callback on the local server
      out.println(forward.register());

      // register a synchronization callback on each remote server
      for (int i = 0; i < urls.length; i++) {
        out.println(Utils.getLocalServerID() + " " + tx.getXid().toString() +
            " registering Synchronization on " + urls[i]);
        Context ctx = Utils.getContext(urls[i]);
        forward = (RemoteSync) ctx.lookup(RemoteSync.JNDINAME);
        out.println(forward.register());
      }

      tm.commit();
      out.println(Utils.getLocalServerID() + " committed " + tx);
    } catch (NamingException | NotSupportedException | SystemException |
        SecurityException | IllegalStateException | RollbackException |
        HeuristicMixedException | HeuristicRollbackException e) {
      throw new ServletException(e);
    }
  }
}

Remote Object

The RemoteSync remote object contains a single method, register, that registers a javax.transaction.Synchronization callback with the propagated transaction context.

RemoteSync Interface

The following is the example.RemoteSync remote interface definition.

package example;

import java.rmi.Remote;
import java.rmi.RemoteException;

public interface RemoteSync extends Remote {
  public static final String JNDINAME = "propagate.RemoteSync";

  String register() throws RemoteException;
}

RemoteSyncImpl Implementation

The example.RemoteSyncImpl class implements the example.RemoteSync remote interface and contains an inner synchronization implementation class named SynchronizationImpl. The beforeCompletion and afterCompletion methods simply write a message to stdout containing the server ID (domain name and server name) and the Xid string representation of the propagated transaction.

The static main method instantiates a RemoteSyncImpl object and binds it into the server’s local JNDI context. The main method is invoked when the application is deployed using the ApplicationLifecycleListener, as described below.

package example;

import java.rmi.RemoteException;

import javax.naming.Context;
import javax.transaction.RollbackException;
import javax.transaction.Synchronization;
import javax.transaction.SystemException;

import weblogic.jndi.Environment;
import weblogic.transaction.Transaction;
import weblogic.transaction.TransactionHelper;

public class RemoteSyncImpl implements RemoteSync {

  public String register() throws RemoteException {
    Transaction tx = (Transaction)
        TransactionHelper.getTransactionHelper().getTransaction();
    if (tx == null) return Utils.getLocalServerID() +
        " no transaction, Synchronization not registered";
    try {
      Synchronization sync = new SynchronizationImpl(tx);
      tx.registerSynchronization(sync);
      return Utils.getLocalServerID() + " " + tx.getXid().toString() +
          " registered " + sync;
    } catch (IllegalStateException | RollbackException |
        SystemException e) {
      throw new RemoteException(
          "error registering Synchronization callback with " +
          tx.getXid().toString(), e);
    }
  }

  class SynchronizationImpl implements Synchronization {
    Transaction tx;

    SynchronizationImpl(Transaction tx) {
      this.tx = tx;
    }

    public void afterCompletion(int arg0) {
      System.out.println(Utils.getLocalServerID() + " " +
          tx.getXid().toString() + " afterCompletion()");
    }

    public void beforeCompletion() {
      System.out.println(Utils.getLocalServerID() + " " +
          tx.getXid().toString() + " beforeCompletion()");
    }
  }

  // create and bind remote object in local JNDI
  public static void main(String[] args) throws Exception {
    RemoteSyncImpl remoteSync = new RemoteSyncImpl();
    Environment env = new Environment();
    env.setCreateIntermediateContexts(true);
    env.setReplicateBindings(false);
    Context ctx = env.getInitialContext();
    ctx.rebind(JNDINAME, remoteSync);
    System.out.println("bound " + remoteSync);
  }
}

Utility Methods

The Utils class contains a couple of static methods, one to get the local server ID and another to perform an initial context lookup given a URL. The initial context lookup is invoked under the anonymous user. These methods are used by both the servlet and the remote object.

package example;

import java.util.Hashtable;

import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NamingException;

public class Utils {

  // create an initial context for the server at the given URL (anonymous user)
  public static Context getContext(String url) throws NamingException {
    Hashtable<String, String> env = new Hashtable<>();
    env.put(Context.INITIAL_CONTEXT_FACTORY,
        "weblogic.jndi.WLInitialContextFactory");
    env.put(Context.PROVIDER_URL, url);
    return new InitialContext(env);
  }

  // server ID in the form [domainName+serverName]
  public static String getLocalServerID() {
    return "[" + getDomainName() + "+"
        + System.getProperty("weblogic.Name") + "]";
  }

  // fall back to the DOMAIN_NAME environment variable if the property is not set
  private static String getDomainName() {
    String domainName = System.getProperty("weblogic.Domain");
    if (domainName == null) domainName = System.getenv("DOMAIN_NAME");
    return domainName;
  }
}

ApplicationLifecycleListener

When the application is deployed to a WebLogic Server instance, the lifecycle listener preStart method is invoked to initialize and bind the RemoteSync remote object.

package example;

import weblogic.application.ApplicationException;
import weblogic.application.ApplicationLifecycleEvent;
import weblogic.application.ApplicationLifecycleListener;

public class LifecycleListenerImpl extends ApplicationLifecycleListener {

  public void preStart(ApplicationLifecycleEvent evt)
      throws ApplicationException {
    super.preStart(evt);
    try {
      RemoteSyncImpl.main(null);
    } catch (Exception e) {
      throw new ApplicationException(e);
    }
  }
}

Application Deployment Descriptor

The application archive contains the following weblogic-application.xml deployment descriptor to register the ApplicationLifecycleListener object.

<?xml version = '1.0' ?>
<weblogic-application xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://www.bea.com/ns/weblogic/weblogic-application http://www.bea.com/ns/weblogic/weblogic-application/1.0/weblogic-application.xsd"
    xmlns="http://www.bea.com/ns/weblogic/weblogic-application">
  <listener>
    <listener-class>example.LifecycleListenerImpl</listener-class>
    <listener-uri>lib/remotesync.jar</listener-uri>
  </listener>
</weblogic-application>

Deploying the Application

The example application can be deployed using a number of supported deployment mechanisms (refer to https://blogs.oracle.com/weblogicserver/best-practices-for-application-deployment-on-weblogic-server-running-on-kubernetes-v2). For this example, we’ll deploy the application using the WebLogic Server Administration Console.

Assume that the application is packaged in an application archive named txpropagate.ear. First, we’ll copy txpropagate.ear to the applications directory under the domain1 persistent volume location ($K8SOP/volumes/domain1/applications). Then we can deploy the application from the Administration Console’s Deployment page.

Note that the path of the EAR file is /shared/applications/txpropagate.ear within the Administration Server’s container, where /shared is mapped to the persistent volume that we created at $K8SOP/volumes/domain1.

Deploy the EAR as an application and then target it to the Administration Server and the cluster.

On the next page, click Finish to deploy the application. After the application is deployed, you’ll see its entry in the Deployments table.

Running the Application

Now that we have the application deployed to the servers in domain1, we can run a distributed transaction test. The following curl command invokes the servlet through the load balancer port 30305 for the clustered Managed Servers and specifies the URL of managed-server1.

$ curl http://localhost:30305/TxPropagate/TxPropagate?urls=t3://domain1-managed-server1:8001

<pre>

[domain1+managed-server2] started BEA1-0001DE85D4EE

[domain1+managed-server2] BEA1-0001DE85D4EEC47AE630 registered example.RemoteSyncImpl$SynchronizationImpl@562a85bd

[domain1+managed-server2] BEA1-0001DE85D4EEC47AE630 registering Synchronization on t3://domain1-managed-server1:8001

[domain1+managed-server1] BEA1-0001DE85D4EEC47AE630 registered example.RemoteSyncImpl$SynchronizationImpl@585ff41b

[domain1+managed-server2] committed Xid=BEA1-0001DE85D4EEC47AE630(844351585),Status=Committed,numRepliesOwedMe=0,numRepliesOwedOthers=0,seconds since begin=0,seconds left=120,useSecure=false,SCInfo[domain1+managed-server2]=(state=committed),SCInfo[domain1+managed-server1]=(state=committed),properties=({ackCommitSCs={managed-server1+domain1-managed-server1:8001+domain1+t3+=true}, weblogic.transaction.partitionName=DOMAIN}),OwnerTransactionManager=ServerTM[ServerCoordinatorDescriptor=(CoordinatorURL=managed-server2+domain1-managed-server2:8001+domain1+t3+ CoordinatorNonSecureURL=managed-server2+domain1-managed-server2:8001+domain1+t3+ coordinatorSecureURL=null, XAResources={WSATGatewayRM_managed-server2_domain1},NonXAResources={})],CoordinatorURL=managed-server2+domain1-managed-server2:8001+domain1+t3+)

The following diagram shows the application flow.

Looking at the output, we see that the servlet request was dispatched on managed-server2 where it started the transaction BEA1-0001DE85D4EE.

[domain1+managed-server2] started BEA1-0001DE85D4EE

The local RemoteSync.register() method was invoked which registered the callback object SynchronizationImpl@562a85bd.

[domain1+managed-server2] BEA1-0001DE85D4EEC47AE630 registered example.RemoteSyncImpl$SynchronizationImpl@562a85bd

The servlet then invoked the register method on the RemoteSync object on managed-server1, which registered the synchronization object SynchronizationImpl@585ff41b.

[domain1+managed-server2] BEA1-0001DE85D4EEC47AE630 registering Synchronization on t3://domain1-managed-server1:8001

[domain1+managed-server1] BEA1-0001DE85D4EEC47AE630 registered example.RemoteSyncImpl$SynchronizationImpl@585ff41b

Finally, the servlet committed the transaction and returned the transaction’s string representation (typically used for TM debug logging).

[domain1+managed-server2] committed Xid=BEA1-0001DE85D4EEC47AE630(844351585),Status=Committed,numRepliesOwedMe=0,numRepliesOwedOthers=0,seconds since begin=0,seconds left=120,useSecure=false,SCInfo[domain1+managed-server2]=(state=committed),SCInfo[domain1+managed-server1]=(state=committed),properties=({ackCommitSCs={managed-server1+domain1-managed-server1:8001+domain1+t3+=true}, weblogic.transaction.partitionName=DOMAIN}),OwnerTransactionManager=ServerTM[ServerCoordinatorDescriptor=(CoordinatorURL=managed-server2+domain1-managed-server2:8001+domain1+t3+ CoordinatorNonSecureURL=managed-server2+domain1-managed-server2:8001+domain1+t3+ coordinatorSecureURL=null, XAResources={WSATGatewayRM_managed-server2_domain1},NonXAResources={})],CoordinatorURL=managed-server2+domain1-managed-server2:8001+domain1+t3+)

The output shows that the transaction was committed, that it has two server participants (managed-server1 and managed-server2) and that the coordinating server (managed-server2) is accessible using t3://domain1-managed-server2:8001.
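If you want to pull the participants and the coordinator address out of that string programmatically, a sketch along the following lines could work. TxStringParser is a hypothetical helper, and the patterns are inferred only from the sample output in this article. The string is debug output rather than a documented format, so it may differ between releases.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TxStringParser {
    // Matches entries such as SCInfo[domain1+managed-server2]=(state=committed)
    private static final Pattern SC_INFO =
        Pattern.compile("SCInfo\\[([^+\\]]+)\\+([^\\]]+)\\]=\\(state=([^)]+)\\)");

    // Returns entries such as "domain1+managed-server2=committed".
    static List<String> participants(String txString) {
        List<String> result = new ArrayList<>();
        Matcher m = SC_INFO.matcher(txString);
        while (m.find()) {
            result.add(m.group(1) + "+" + m.group(2) + "=" + m.group(3));
        }
        return result;
    }

    // Converts "server+host:port+domain+protocol+" into "protocol://host:port".
    static String toUrl(String descriptor) {
        String[] parts = descriptor.split("\\+");
        if (parts.length < 4) {
            throw new IllegalArgumentException("unexpected descriptor: " + descriptor);
        }
        return parts[3] + "://" + parts[1];
    }

    public static void main(String[] args) {
        // Excerpt of the transaction string shown above.
        String excerpt = "SCInfo[domain1+managed-server2]=(state=committed),"
            + "SCInfo[domain1+managed-server1]=(state=committed)";
        participants(excerpt).forEach(System.out::println);
        System.out.println(
            toUrl("managed-server2+domain1-managed-server2:8001+domain1+t3+"));
    }
}
```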

We can also verify that the registered synchronization callbacks were invoked by looking at the output of managed-server1 and managed-server2. The .out files for the servers can be found under the persistent volume of the domain.

$ cd $K8SOP/volumes/domain1/domain/domain1/servers

$ find . -name '*.out' -exec grep -H BEA1-0001DE85D4EE {} ';'

./managed-server1/logs/managed-server1.out:[domain1+managed-server1] BEA1-0001DE85D4EEC47AE630 beforeCompletion()

./managed-server1/logs/managed-server1.out:[domain1+managed-server1] BEA1-0001DE85D4EEC47AE630 afterCompletion()

./managed-server2/logs/managed-server2.out:[domain1+managed-server2] BEA1-0001DE85D4EEC47AE630 beforeCompletion()

./managed-server2/logs/managed-server2.out:[domain1+managed-server2] BEA1-0001DE85D4EEC47AE630 afterCompletion()

To summarize, we were able to process distributed transactions within a WebLogic Server domain running in a Kubernetes cluster without making any additional configuration changes. The WebLogic Kubernetes Operator domain creation process provided all of the Kubernetes networking and WebLogic Server configuration necessary to make it possible.

The following command lists the Kubernetes services defined in the weblogic namespace.

$ kubectl get svc -n weblogic
NAME                                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)           AGE
domain1-admin-server                        NodePort    10.102.156.32    <none>        7001:30701/TCP    11m
domain1-admin-server-extchannel-t3channel   NodePort    10.99.21.154     <none>        30012:30012/TCP   9m
domain1-cluster-1-traefik                   NodePort    10.100.211.213   <none>        80:30305/TCP      11m
domain1-cluster-1-traefik-dashboard         NodePort    10.108.229.66    <none>        8080:30315/TCP    11m
domain1-cluster-cluster-1                   ClusterIP   10.106.58.103    <none>        8001/TCP          9m
domain1-managed-server1                     ClusterIP   10.108.85.130    <none>        8001/TCP          9m
domain1-managed-server2                     ClusterIP   10.108.130.92    <none>        8001/TCP

We were able to access the servlet through the Traefik NodePort service using port 30305 on localhost. From inside the Kubernetes cluster, the servlet is able to access other WebLogic Server instances using their service names and ports. Because each server’s listen address is set to its corresponding Kubernetes service name, the addresses are resolvable from within the Kubernetes namespace even if a server’s pod is restarted and assigned a different IP address.

Cross-Domain Transactions

Now we’ll look at extending the example to run across two WebLogic Server domains. As mentioned in the TM overview section, cross-domain transactions can require additional configuration to properly secure TM communication. However, for our example, we will keep the configuration as simple as possible. We’ll continue to use a non-secure protocol (t3), and the anonymous user, for both application and internal TM communication.

First, we’ll need to create a new domain (domain2) in the same Kubernetes namespace as domain1 (weblogic). Before generating domain2 we need to create a secret for the domain2 credentials (domain2-weblogic-credentials) in the weblogic namespace and a directory for the persistent volume ($K8SOP/volumes/domain2).

Next, modify the create-domain1.yaml file, changing the following attribute values, and save the changes to a new file named create-domain2.yaml.

| Attribute | Value |
| --- | --- |
| domainName | domain2 |
| domainUID | domain2 |
| weblogicDomainStoragePath | {full path of $HOME}/k8sop/volumes/domain2 |
| weblogicCredentialsSecretName | domain2-weblogic-credentials |
| t3ChannelPort | 32012 |
| adminNodePort | 32701 |
| loadBalancerWebPort | 32305 |
| loadBalancerDashboardPort | 32315 |

Now we’re ready to invoke the create-weblogic-domain.sh script with the create-domain2.yaml input file.

$ $K8SOP/src/weblogic-kubernetes-operator/kubernetes/create-weblogic-domain.sh -i $K8SOP/create-domain2.yaml -o $K8SOP/weblogic-kubernetes-operator

After the create script completes successfully, the servers in domain2 will start and, using the readiness probe, report that they have reached the RUNNING state.

$ kubectl get po -n weblogic
NAME                                         READY   STATUS    RESTARTS   AGE
domain1-admin-server                         1/1     Running   0          27m
domain1-cluster-1-traefik-9985d9594-gw2jr    1/1     Running   0          27m
domain1-managed-server1                      1/1     Running   0          25m
domain1-managed-server2                      1/1     Running   0          25m
domain2-admin-server                         1/1     Running   0          5m
domain2-cluster-1-traefik-5c49f54689-9fzzr   1/1     Running   0          5m
domain2-managed-server1                      1/1     Running   0          3m
domain2-managed-server2                      1/1     Running   0          3m

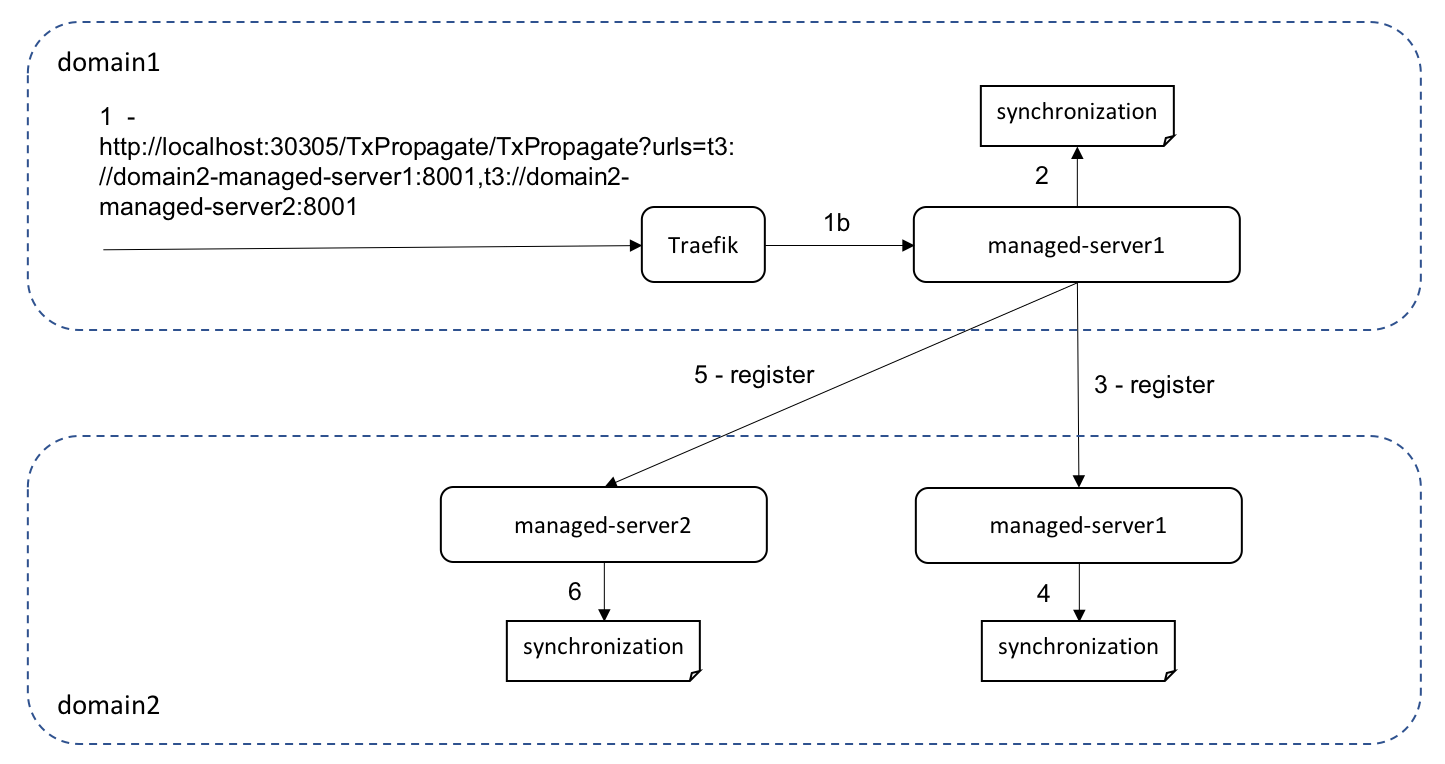

After deploying the application to the servers in domain2, we can invoke the application and include the URLs for the domain2 Managed Servers.

$ curl http://localhost:30305/TxPropagate/TxPropagate?urls=t3://domain2-managed-server1:8001,t3://domain2-managed-server2:8001

<pre>

[domain1+managed-server1] started BEA1-0001144553CC

[domain1+managed-server1] BEA1-0001144553CC5D73B78A registered example.RemoteSyncImpl$SynchronizationImpl@2e13aa23

[domain1+managed-server1] BEA1-0001144553CC5D73B78A registering Synchronization on t3://domain2-managed-server1:8001

[domain2+managed-server1] BEA1-0001144553CC5D73B78A registered example.RemoteSyncImpl$SynchronizationImpl@68d4c2d6

[domain1+managed-server1] BEA1-0001144553CC5D73B78A registering Synchronization on t3://domain2-managed-server2:8001

[domain2+managed-server2] BEA1-0001144553CC5D73B78A registered example.RemoteSyncImpl$SynchronizationImpl@1ae87d94

[domain1+managed-server1] committed Xid=BEA1-0001144553CC5D73B78A(1749245151),Status=Committed,numRepliesOwedMe=0,numRepliesOwedOthers=0,seconds since begin=0,seconds left=120,useSecure=false,SCInfo[domain1+managed-server1]=(state=committed),SCInfo[domain2+managed-server1]=(state=committed),SCInfo[domain2+managed-server2]=(state=committed),properties=({ackCommitSCs={managed-server2+domain2-managed-server2:8001+domain2+t3+=true, managed-server1+domain2-managed-server1:8001+domain2+t3+=true}, weblogic.transaction.partitionName=DOMAIN}),OwnerTransactionManager=ServerTM[ServerCoordinatorDescriptor=(CoordinatorURL=managed-server1+domain1-managed-server1:8001+domain1+t3+ CoordinatorNonSecureURL=managed-server1+domain1-managed-server1:8001+domain1+t3+ coordinatorSecureURL=null, XAResources={WSATGatewayRM_managed-server1_domain1},NonXAResources={})],CoordinatorURL=managed-server1+domain1-managed-server1:8001+domain1+t3+)

The application flow is shown in the following diagram.

In this example, the transaction includes server participants from both domain1 and domain2, and we can verify that the synchronization callbacks were processed on all participating servers.

$ cd $K8SOP/volumes

$ find . -name '*.out' -exec grep -H BEA1-0001144553CC {} ';'

./domain1/domain/domain1/servers/managed-server1/logs/managed-server1.out:[domain1+managed-server1] BEA1-0001144553CC5D73B78A beforeCompletion()

./domain1/domain/domain1/servers/managed-server1/logs/managed-server1.out:[domain1+managed-server1] BEA1-0001144553CC5D73B78A afterCompletion()

./domain2/domain/domain2/servers/managed-server1/logs/managed-server1.out:[domain2+managed-server1] BEA1-0001144553CC5D73B78A beforeCompletion()

./domain2/domain/domain2/servers/managed-server1/logs/managed-server1.out:[domain2+managed-server1] BEA1-0001144553CC5D73B78A afterCompletion()

./domain2/domain/domain2/servers/managed-server2/logs/managed-server2.out:[domain2+managed-server2] BEA1-0001144553CC5D73B78A beforeCompletion()

./domain2/domain/domain2/servers/managed-server2/logs/managed-server2.out:[domain2+managed-server2] BEA1-0001144553CC5D73B78A afterCompletion()

Summary

In this article we reviewed, at a high level, how the WebLogic Server Transaction Manager processes global transactions and discussed some of the basic configuration requirements. We then looked at an example application to illustrate how transactions, including cross-domain transactions, are processed in a Kubernetes cluster. In future articles we’ll look at more complex transactional use cases such as multi-node transactions, transactions that span Kubernetes clusters, and failover.