Overview

This blog is a step-by-step guide to configuring and running a sample WebLogic JMS application in a Kubernetes cluster. First we explain how to create a WebLogic domain that has an Administration Server and a WebLogic cluster. Next we add WebLogic JMS resources and a data source, deploy an application, and finally run the application.

This application is based on a sample application named ‘Classic API – Using Distributed Destination’ that is included in the WebLogic Server sample applications. This application implements a scenario in which employees submit their names when they arrive, and a supervisor monitors employee arrival time. Employees choose whether to send their check-in messages to a distributed queue or a distributed topic. These destinations are configured on a cluster with two active Managed Servers. Two message-driven beans (MDBs), corresponding to these two destinations, are deployed to handle the check-in messages and store them in a database. A supervisor can then scan all of the check-in messages by querying the database.

The two main approaches for automating WebLogic configuration changes are WLST and the REST API. Whichever you use, there are two options for running the scripts against a WebLogic domain on Kubernetes:

- Running the scripts inside Kubernetes cluster pods: with this option, address the servers using ‘localhost’, a NodePort service name, a StatefulSet’s headless service name, a pod IP, or a cluster IP, together with the internal ports. The instructions in this blog use ‘localhost’.

- Running the scripts outside the Kubernetes cluster: with this option, use the hostname or IP address of a cluster node together with the NodePort.
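The two options differ only in the host and port that a script targets. A minimal Python sketch, assuming the Administration Server’s internal port 8001 (used by the curl commands later in this blog) and the NodePort 30007 (used for the Administration Console); the helper name `rest_base_url` is hypothetical, so check both ports against your own service definitions:

```python
def rest_base_url(inside_cluster: bool, node_host: str = "localhost") -> str:
    """Return the base URL of the WebLogic REST management API.

    Assumed ports: 8001 is the Administration Server's internal port,
    30007 is the NodePort that exposes it outside the cluster.
    """
    if inside_cluster:
        # Inside a pod: localhost (as in this blog) plus the internal port.
        host, port = "localhost", 8001
    else:
        # Outside the cluster: a node's hostname or IP plus the NodePort.
        host, port = node_host, 30007
    return f"http://{host}:{port}/management/weblogic/latest"


# rest_base_url(True)               -> "http://localhost:8001/management/weblogic/latest"
# rest_base_url(False, "192.0.2.10") -> "http://192.0.2.10:30007/management/weblogic/latest"
```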

In this blog we use the REST API and run the scripts within the Administration Server pod to deploy all the resources. All the resources are targeted to the whole cluster, which is the recommended approach for WebLogic Server on Kubernetes because the targeting keeps working when the cluster scales up or down.

Creating the WebLogic Base Domain

We use the sample WebLogic domain on GitHub to create the base domain. In this WebLogic sample you will find a Dockerfile, scripts, and YAML files to build and run the WebLogic Server instances and cluster in the WebLogic domain on Kubernetes. The sample domain contains an Administration Server named AdminServer and a WebLogic cluster with four Managed Servers named managed-server-0 through managed-server-3. We configure four Managed Servers but start only the first two: managed-server-0 and managed-server-1.

One feature that distinguishes a JMS service from others is that it is highly stateful: most of its data, such as persistent messages and durable subscriptions, must be kept in a persistent store. A persistent store can be either a database store or a file store; in this sample we demonstrate how to keep file store data on external volumes.

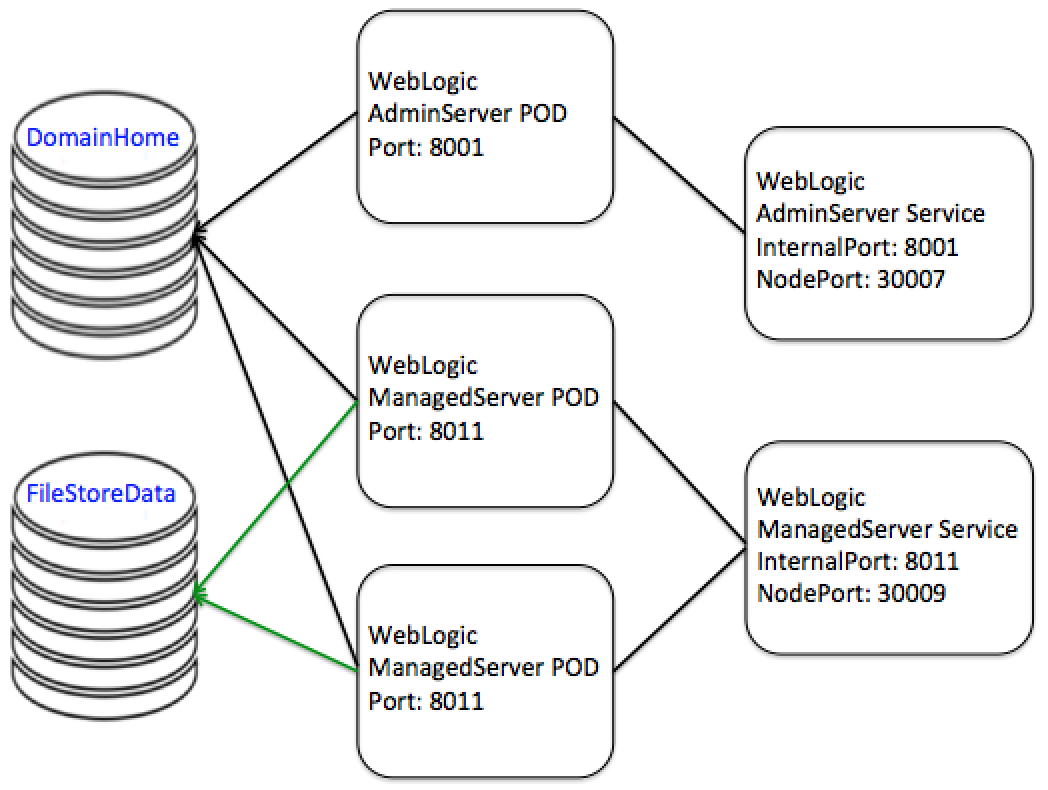

In this WebLogic domain we configure three persistent volumes for the following:

- The domain home folder – This volume is shared by all the WebLogic Server instances in the domain; that is, the Administration Server and all Managed Server instances in the WebLogic cluster.

- The file stores – This volume is shared by the Managed Server instances in the WebLogic cluster.

- A MySQL database – The use of this volume is explained later in this blog.

Note that by default a domain home folder contains configuration files, log files, diagnostic files, application binaries, and the default file store files for each WebLogic Server instance in the domain. Custom file store files are also placed in the domain home folder by default, but in this sample we customize the configuration to place these files in a separate, dedicated persistent volume. The two persistent volumes, one for the domain home and one for the custom file stores, are shared by multiple WebLogic Server instances. Consequently, if the Kubernetes cluster runs on more than one machine, these volumes must reside on shared storage that every node can access.
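The rule in the last sentence can be stated as a small check. In this hypothetical Python sketch, the volume names and the simplified pod names are illustrative labels for the three volumes described above, not names used by the sample:

```python
# Hypothetical labels: which pods mount each of the three volumes.
VOLUME_USERS = {
    "domain-home": {"admin-server", "managed-server-0", "managed-server-1"},
    "file-stores": {"managed-server-0", "managed-server-1"},
    "mysql-data": {"mysql-server"},
}


def volumes_needing_shared_storage(volume_users, nodes_in_cluster):
    """Return the volumes that must live on shared storage.

    A volume mounted by more than one pod must be reachable from every node
    those pods may run on, so it needs shared storage as soon as the
    Kubernetes cluster spans more than one machine.
    """
    if nodes_in_cluster <= 1:
        return []
    return sorted(name for name, pods in volume_users.items() if len(pods) > 1)
```

On a multi-node cluster this flags the domain home and file store volumes, matching the text above.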

Complete the steps in the README.md file to create and run the base domain. Wait until all WebLogic Server instances are running; that is, the Administration Server and two Managed Servers. This may take a short while because Managed Servers are started in sequence after the Administration Server is running and the provisioning of the initial domain is complete.

$ kubectl get pod
NAME                            READY     STATUS    RESTARTS   AGE
admin-server-1238998015-kmbt9   1/1       Running   0          5m
managed-server-0                1/1       Running   0          3m
managed-server-1                1/1       Running   0          3m
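To avoid checking the pod list by eye, you can parse it. A minimal Python sketch, assuming the default column layout shown above (NAME, READY, STATUS, RESTARTS, AGE); the function names are hypothetical:

```python
SAMPLE = """\
NAME                            READY     STATUS    RESTARTS   AGE
admin-server-1238998015-kmbt9   1/1       Running   0          5m
managed-server-0                1/1       Running   0          3m
managed-server-1                1/1       Running   0          3m
"""


def parse_pods(text):
    """Parse `kubectl get pod` output into {name: (ready, status, restarts)}."""
    pods = {}
    for line in text.strip().splitlines()[1:]:  # skip the header row
        name, ready, status, restarts = line.split()[:4]
        pods[name] = (ready, status, int(restarts))
    return pods


def all_ready(pods):
    """True when every pod is Running and all of its containers are ready."""
    for ready, status, _ in pods.values():
        up, total = ready.split("/")
        if status != "Running" or up != total:
            return False
    return True
```

Recent kubectl versions can also block until the pods are ready with `kubectl wait --for=condition=Ready pod --all`.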

Note that in the commands used in this blog you need to replace $adminPod and $mysqlPod with the actual pod names.

Deploying the JMS Resources with a File Store

When the domain is up and running, we can deploy the JMS resources.

First, prepare a JSON data file that contains definitions for one file store, one JMS server, and one JMS module. The file will be processed by a Python script to create the resources, one-by-one, using the WebLogic Server REST API.

file jms1.json:

{"resources": {
    "filestore1": {
        "url": "fileStores",
        "data": {
            "name": "filestore1",
            "directory": "/u01/filestores/filestore1",
            "targets": [{
                "identity": ["clusters", "myCluster"]
            }]
        }
    },
    "jms1": {
        "url": "JMSServers",
        "data": {
            "messagesThresholdHigh": -1,
            "targets": [{
                "identity": ["clusters", "myCluster"]
            }],
            "persistentStore": [
                "fileStores",
                "filestore1"
            ],
            "name": "jmsserver1"
        }
    },
    "module": {
        "url": "JMSSystemResources",
        "data": {
            "name": "module1",
            "targets": [{
                "identity": ["clusters", "myCluster"]
            }]
        }
    },
    "sub1": {
        "url": "JMSSystemResources/module1/subDeployments",
        "data": {
            "name": "sub1",
            "targets": [{
                "identity": ["JMSServers", "jmsserver1"]
            }]
        }
    }
}}

Second, prepare the JMS module file, which contains a connection factory, a distributed queue, and a distributed topic.

file module1-jms.xml:

<?xml version='1.0' encoding='UTF-8'?>
<weblogic-jms xmlns="http://xmlns.oracle.com/weblogic/weblogic-jms" xmlns:sec="http://xmlns.oracle.com/weblogic/security" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:wls="http://xmlns.oracle.com/weblogic/security/wls" xsi:schemaLocation="http://xmlns.oracle.com/weblogic/weblogic-jms http://xmlns.oracle.com/weblogic/weblogic-jms/1.1/weblogic-jms.xsd">
  <connection-factory name="cf1">
    <default-targeting-enabled>true</default-targeting-enabled>
    <jndi-name>cf1</jndi-name>
    <transaction-params>
      <xa-connection-factory-enabled>true</xa-connection-factory-enabled>
    </transaction-params>
    <load-balancing-params>
      <load-balancing-enabled>true</load-balancing-enabled>
      <server-affinity-enabled>false</server-affinity-enabled>
    </load-balancing-params>
  </connection-factory>
  <uniform-distributed-queue name="dq1">
    <sub-deployment-name>sub1</sub-deployment-name>
    <jndi-name>dq1</jndi-name>
  </uniform-distributed-queue>
  <uniform-distributed-topic name="dt1">
    <sub-deployment-name>sub1</sub-deployment-name>
    <jndi-name>dt1</jndi-name>
    <forwarding-policy>Partitioned</forwarding-policy>
  </uniform-distributed-topic>
</weblogic-jms>
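Both destinations must name a subdeployment that exists in the module, here sub1, which jms1.json targets to jmsserver1. A hypothetical Python sketch that lists each distributed destination with its JNDI name and subdeployment, so the names can be cross-checked against jms1.json before the files are copied:

```python
import xml.etree.ElementTree as ET

# Default namespace of the weblogic-jms descriptor.
NS = {"jms": "http://xmlns.oracle.com/weblogic/weblogic-jms"}


def list_destinations(xml_text):
    """Return [(kind, name, jndi_name, sub_deployment)] for each distributed destination."""
    root = ET.fromstring(xml_text)
    result = []
    for kind in ("uniform-distributed-queue", "uniform-distributed-topic"):
        for dest in root.findall(f"jms:{kind}", NS):
            result.append((
                kind,
                dest.get("name"),
                dest.findtext("jms:jndi-name", namespaces=NS),
                dest.findtext("jms:sub-deployment-name", namespaces=NS),
            ))
    return result
```

For module1-jms.xml this should report dq1 and dt1, both bound to sub1.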

Third, copy these two files to the Administration Server pod, then run the Python script to create the JMS resources within the Administration Server pod:

$ kubectl exec $adminPod -- mkdir /u01/wlsdomain/config/jms/
$ kubectl cp ./module1-jms.xml $adminPod:/u01/wlsdomain/config/jms/
$ kubectl cp ./jms1.json $adminPod:/u01/oracle/
$ kubectl exec $adminPod -- python /u01/oracle/run.py createRes /u01/oracle/jms1.json
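run.py ships with the sample and is not reproduced in this blog. As a rough illustration only, this hypothetical Python sketch shows what a `createRes` step over jms1.json might do: POST each entry’s `data` to the REST edit tree at `.../edit/<url>`, in file order. The function names, the base URL (internal port 8001, as in this blog’s curl commands), and the use of urllib are assumptions, not the sample’s actual code:

```python
import base64
import json
import urllib.request

# Assumed base URL of the REST edit tree, reached from inside the admin pod.
BASE = "http://localhost:8001/management/weblogic/latest/edit"


def build_requests(doc, base=BASE):
    """Turn {"resources": {...}} into (url, body) pairs, in file order.

    Order matters: jmsserver1 refers to filestore1, and sub1 refers to both
    module1 and jmsserver1. json.load keeps key order, so iterating the
    dict preserves the order in which the file lists the resources.
    """
    return [(f"{base}/{res['url']}", res["data"]) for res in doc["resources"].values()]


def create_resources(path, user, password):
    """POST each resource to the REST edit tree, stopping on the first HTTP error."""
    with open(path) as f:
        doc = json.load(f)
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    for url, body in build_requests(doc):
        req = urllib.request.Request(
            url,
            data=json.dumps(body).encode("utf-8"),
            method="POST",
            headers={
                "X-Requested-By": "MyClient",
                "Accept": "application/json",
                "Content-Type": "application/json",
                "Authorization": f"Basic {token}",
            },
        )
        with urllib.request.urlopen(req) as resp:  # raises HTTPError on 4xx/5xx
            print(url, resp.status)
```

For example, `create_resources("/u01/oracle/jms1.json", "weblogic", "weblogic1")` would issue four POSTs: file store, JMS server, module, subdeployment.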

Launch the WebLogic Server Administration Console by entering the URL http://<hostIP>:30007/console in your browser’s address bar, and make sure that all the JMS resources are running successfully.

Deploying the Data Source

Setting Up and Running MySQL Server in Kubernetes

This sample stores the check-in messages in a database. So let’s set up MySQL Server and get it running in Kubernetes.

First, let’s prepare the mysql.yml file, which defines a secret that stores the database username and password credentials (base64-encoded, not encrypted), a persistent volume claim (PVC) to store database data in an external directory, and a MySQL Server deployment and service. In the base domain, one persistent volume is reserved and available so that it can be used by the PVC that is defined in mysql.yml.

file mysql.yml:

apiVersion: v1
kind: Secret
metadata:
  name: dbsecret
type: Opaque
data:
  username: bXlzcWw=
  password: bXlzcWw=
  rootpwd: MTIzNHF3ZXI=
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    app: mysql-server
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-server
  template:
    metadata:
      labels:
        app: mysql-server
    spec:
      containers:
        - name: mysql-server
          image: mysql:5.7
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dbsecret
                  key: rootpwd
            - name: MYSQL_USER
              valueFrom:
                secretKeyRef:
                  name: dbsecret
                  key: username
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dbsecret
                  key: password
            - name: MYSQL_DATABASE
              value: "wlsdb"
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: db-volume
      volumes:
        - name: db-volume
          persistentVolumeClaim:
            claimName: mysql-pv-claim
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-server
  labels:
    app: mysql-server
spec:
  ports:
    - name: client
      port: 3306
      protocol: TCP
      targetPort: 3306
  clusterIP: None
  selector:
    app: mysql-server
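The values under `data` in a Kubernetes Secret are base64-encoded rather than encrypted, so anyone who can read the Secret can recover them. Decoding the values above shows that the database user and password are both `mysql` (matching the credentials used by the `mysql` command and ds1-jdbc.xml later on) and that the root password is `1234qwer`. A minimal Python sketch for producing or checking such values; the helper names are hypothetical:

```python
import base64


def encode_secret_value(plain: str) -> str:
    """Base64-encode a value for the `data` section of a Kubernetes Secret."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Reverse the encoding; no key is needed, which is why this is not encryption."""
    return base64.b64decode(encoded).decode("utf-8")


# encode_secret_value("mysql")         -> "bXlzcWw="
# decode_secret_value("MTIzNHF3ZXI=")  -> "1234qwer"
```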

Next, deploy MySQL Server to the Kubernetes cluster:

$ kubectl create -f mysql.yml

Creating the Sample Application Table

First, prepare the DDL file for the sample application table:

file sampleTable.ddl:

create table jms_signin (
    name varchar(255) not null,
    time varchar(255) not null,
    webServer varchar(255) not null,
    mdbServer varchar(255) not null);

Next, create the table in MySQL Server:

$ kubectl exec -i $mysqlPod -- mysql -h localhost -u mysql -pmysql wlsdb < sampleTable.ddl

Creating a Data Source for the WebLogic Server Domain

We need to configure a data source so that the sample application can communicate with MySQL Server. First, prepare the ds1-jdbc.xml module file.

file ds1-jdbc.xml:

<?xml version='1.0' encoding='UTF-8'?>
<jdbc-data-source xmlns="http://xmlns.oracle.com/weblogic/jdbc-data-source" xmlns:sec="http://xmlns.oracle.com/weblogic/security" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:wls="http://xmlns.oracle.com/weblogic/security/wls" xsi:schemaLocation="http://xmlns.oracle.com/weblogic/jdbc-data-source http://xmlns.oracle.com/weblogic/jdbc-data-source/1.0/jdbc-data-source.xsd">
  <name>ds1</name>
  <datasource-type>GENERIC</datasource-type>
  <jdbc-driver-params>
    <url>jdbc:mysql://mysql-server:3306/wlsdb</url>
    <driver-name>com.mysql.jdbc.Driver</driver-name>
    <properties>
      <property>
        <name>user</name>
        <value>mysql</value>
      </property>
    </properties>
    <password-encrypted>mysql</password-encrypted>
    <use-xa-data-source-interface>true</use-xa-data-source-interface>
  </jdbc-driver-params>
  <jdbc-connection-pool-params>
    <capacity-increment>10</capacity-increment>
    <test-table-name>ACTIVE</test-table-name>
  </jdbc-connection-pool-params>
  <jdbc-data-source-params>
    <jndi-name>jndi/ds1</jndi-name>
    <algorithm-type>Load-Balancing</algorithm-type>
    <global-transactions-protocol>EmulateTwoPhaseCommit</global-transactions-protocol>
  </jdbc-data-source-params>
  <jdbc-xa-params>
    <xa-transaction-timeout>50</xa-transaction-timeout>
  </jdbc-xa-params>
</jdbc-data-source>
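The data source URL points at `mysql-server`, the name of the headless Service defined in mysql.yml; assuming the WebLogic pods and MySQL run in the same namespace, cluster DNS resolves that name to the MySQL pod. The database name `wlsdb` matches `MYSQL_DATABASE`. A small hypothetical Python helper that splits such a URL so these values can be checked against mysql.yml (falling back to port 3306, MySQL’s default, is an assumption for URLs that omit the port):

```python
from urllib.parse import urlparse


def parse_mysql_jdbc_url(jdbc_url):
    """Split a jdbc:mysql://host:port/db URL into (host, port, database)."""
    prefix = "jdbc:"
    if not jdbc_url.startswith(prefix):
        raise ValueError("not a JDBC URL: " + jdbc_url)
    # Strip "jdbc:" so the rest parses as an ordinary mysql:// URL.
    parts = urlparse(jdbc_url[len(prefix):])
    return parts.hostname, parts.port or 3306, parts.path.lstrip("/")


# parse_mysql_jdbc_url("jdbc:mysql://mysql-server:3306/wlsdb") -> ("mysql-server", 3306, "wlsdb")
```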

Then deploy the data source module to the WebLogic Server domain:

$ kubectl cp ./ds1-jdbc.xml $adminPod:/u01/wlsdomain/config/jdbc/
$ kubectl exec $adminPod -- curl -v \
    --user weblogic:weblogic1 \
    -H X-Requested-By:MyClient \
    -H Accept:application/json \
    -H Content-Type:application/json \
    -d '{
      "name": "ds1",
      "descriptorFileName": "jdbc/ds1-jdbc.xml",
      "targets": [{
        "identity": ["clusters", "myCluster"]
      }]
    }' -X POST http://localhost:8001/management/weblogic/latest/edit/JDBCSystemResources
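The same request can be issued from any HTTP client. A minimal Python sketch using only the standard library, intended to match the curl command above; the function name is hypothetical, and the credentials and base URL are the ones this blog uses inside the Administration Server pod:

```python
import base64
import json
import urllib.request


def jdbc_resource_request(base_url, user, password):
    """Build the POST that registers jdbc/ds1-jdbc.xml as JDBC system resource ds1."""
    body = {
        "name": "ds1",
        "descriptorFileName": "jdbc/ds1-jdbc.xml",  # relative to the domain's config directory
        "targets": [{"identity": ["clusters", "myCluster"]}],
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/edit/JDBCSystemResources",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "X-Requested-By": "MyClient",  # sent on every modifying request in this blog's curl examples
            "Accept": "application/json",
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",  # same as curl --user
        },
    )


# Inside the Administration Server pod:
# req = jdbc_resource_request("http://localhost:8001/management/weblogic/latest", "weblogic", "weblogic1")
# urllib.request.urlopen(req)
```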

Deploying the Servlet and MDB Applications

First, download the two application archives: signin.war and signinmdb.jar.

Enter the commands below to deploy these two applications using REST APIs within the pod running the WebLogic Administration Server.

# copy the two app files to the admin pod
$ kubectl cp signin.war $adminPod:/u01/wlsdomain/signin.war
$ kubectl cp signinmdb.jar $adminPod:/u01/wlsdomain/signinmdb.jar

# deploy the two apps via the REST API
$ kubectl exec $adminPod -- curl -v \
    --user weblogic:weblogic1 \
    -H X-Requested-By:MyClient \
    -H Content-Type:application/json \
    -d "{
      name: 'webapp',
      sourcePath: '/u01/wlsdomain/signin.war',
      targets: [ { identity: [ 'clusters', 'myCluster' ] } ]
    }" \
    -X POST http://localhost:8001/management/weblogic/latest/edit/appDeployments

$ kubectl exec $adminPod -- curl -v \
    --user weblogic:weblogic1 \
    -H X-Requested-By:MyClient \
    -H Content-Type:application/json \
    -d "{
      name: 'mdb',
      sourcePath: '/u01/wlsdomain/signinmdb.jar',
      targets: [ { identity: [ 'clusters', 'myCluster' ] } ]
    }" \
    -X POST http://localhost:8001/management/weblogic/latest/edit/appDeployments
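The request bodies above use a relaxed, JavaScript-style notation (unquoted keys, single-quoted strings), which the REST endpoint evidently accepts since the blog relies on it. If you script these deployments from a general-purpose client, emitting strict JSON avoids depending on that leniency. A minimal Python sketch with the same fields; the helper name is hypothetical:

```python
import json


def app_deployment_body(name, source_path, cluster="myCluster"):
    """Strict-JSON body for POST .../edit/appDeployments (same fields as the curl commands)."""
    return json.dumps({
        "name": name,
        "sourcePath": source_path,
        "targets": [{"identity": ["clusters", cluster]}],
    })


# The two archives copied into the admin pod above.
APPS = [("webapp", "/u01/wlsdomain/signin.war"), ("mdb", "/u01/wlsdomain/signinmdb.jar")]
bodies = [app_deployment_body(n, p) for n, p in APPS]
```

Each string in `bodies` can be passed to curl’s `-d` in place of the relaxed form.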

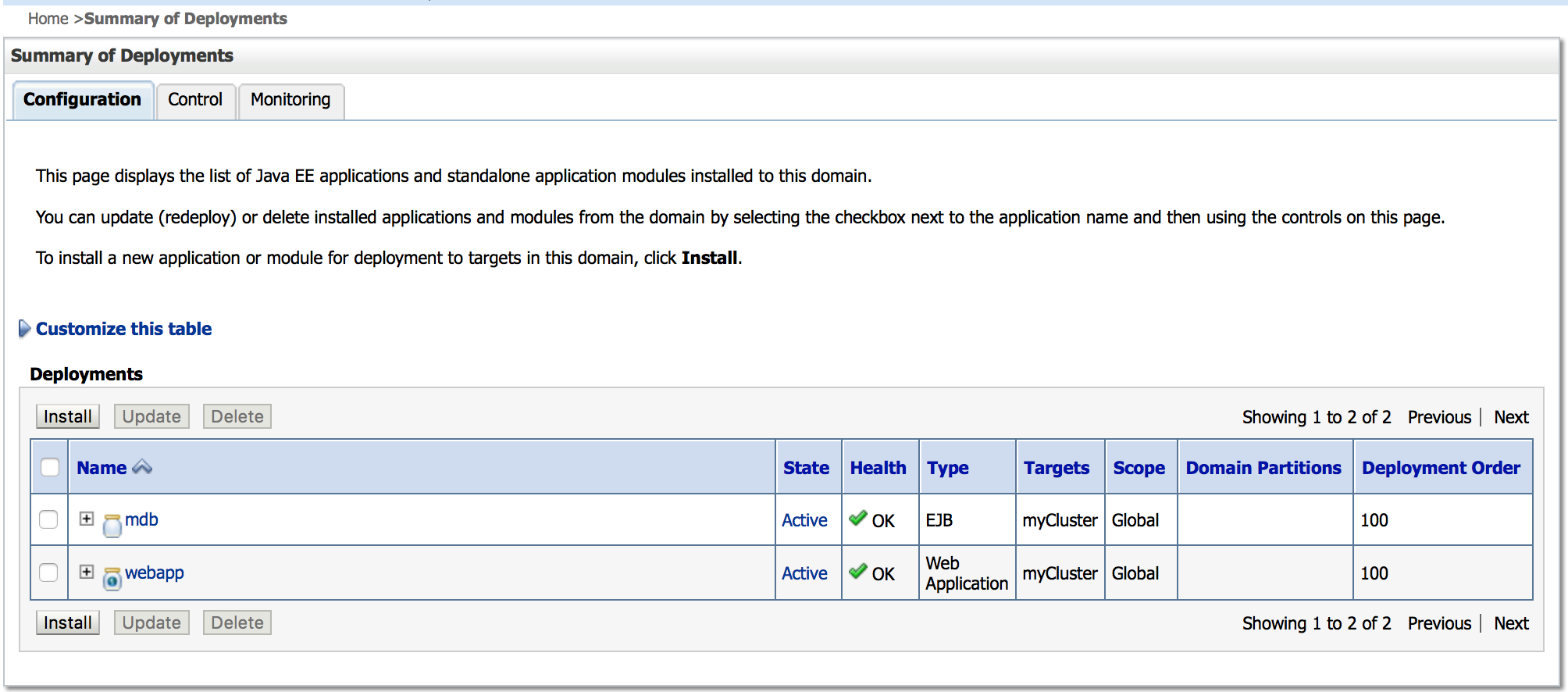

Next, go to the WebLogic Server Administration Console (http://<hostIP>:30007/console) to verify that the applications have been deployed successfully and are running.

Running the Sample

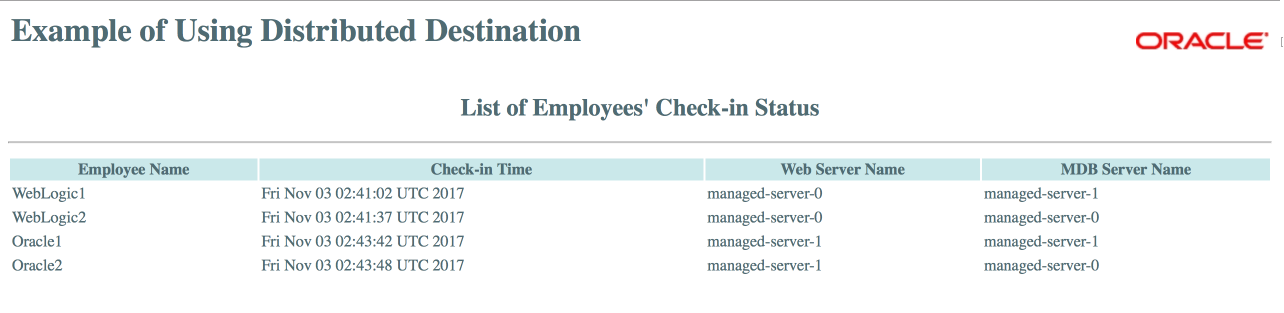

Invoke the application on the Managed Server by going to a browser and entering the URL http://<hostIP>:30009/signIn/. Using a number of different browsers and machines to simulate multiple web clients, submit several unique employee names. Then check the result by entering the URL http://<hostIP>:30009/signIn/response.jsp. You can see that there are two different levels of load balancing taking place:

- HTTP requests are load balanced among Managed Servers within the cluster. Notice the entries beneath the column labeled Web Server Name. For each employee check-in, this column identifies the name of the WebLogic Server instance that contains the servlet instance that is processing the corresponding HTTP request.

- JMS messages that are sent to a distributed destination are load balanced among the MDB instances within the cluster. Notice the entries beneath the column labeled MDB Server Name. This column identifies the name of the WebLogic Server instance that contains the MDB instance that is processing the message.
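The response page lists individual rows; counting rows per server makes the spread easier to see. This hypothetical Python sketch uses an in-memory SQLite table with the same columns as sampleTable.ddl as a stand-in for MySQL, and the rows are made up for illustration:

```python
import sqlite3

# Stand-in for the MySQL table: same columns as sampleTable.ddl.
conn = sqlite3.connect(":memory:")
conn.execute("""create table jms_signin (
    name varchar(255) not null,
    time varchar(255) not null,
    webServer varchar(255) not null,
    mdbServer varchar(255) not null)""")

# Made-up check-ins, for illustration only.
rows = [
    ("alice", "09:01", "managed-server-0", "managed-server-1"),
    ("bob", "09:02", "managed-server-1", "managed-server-0"),
    ("carol", "09:03", "managed-server-0", "managed-server-0"),
]
conn.executemany("insert into jms_signin values (?, ?, ?, ?)", rows)


def distribution(conn, column):
    """Count check-ins per server for column 'webServer' or 'mdbServer'."""
    if column not in ("webServer", "mdbServer"):  # whitelist, since the name is interpolated
        raise ValueError(column)
    query = f"select {column}, count(*) from jms_signin group by {column} order by {column}"
    return dict(conn.execute(query).fetchall())
```

Against the real table, the same GROUP BY query can be run with the MySQL client, for example `kubectl exec -i $mysqlPod -- mysql -u mysql -pmysql wlsdb -e "select mdbServer, count(*) from jms_signin group by mdbServer"`.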

Restarting All Pods

Restart the MySQL pod, WebLogic Administration Server pod and WebLogic Managed Server pods. This will demonstrate that the data in your external volumes is indeed preserved independently of your pod life cycles.

First, gracefully shut down the MySQL Server pod:

$ kubectl exec -it $mysqlPod -- /etc/init.d/mysql stop

After the MySQL Server process stops, Kubernetes automatically restarts the pod’s container.

Next, follow the section “Restart Pods” in the README.md to restart all WebLogic Server pods.

$ kubectl get pod
NAME                            READY     STATUS    RESTARTS   AGE
admin-server-1238998015-kmbt9   1/1       Running   1          7d
managed-server-0                1/1       Running   1          7d
managed-server-1                1/1       Running   1          7d
mysql-server-3736789149-n2s2l   1/1       Running   1          3h

You will see that the restart count for each pod has increased from 0 to 1.

After all pods are running again, access the WebLogic Server Administration Console to verify that the servers are in the RUNNING state. When the servers restart, all messages are recovered. You’ll get the same results as you did prior to the restart because all data is persisted in the external data volumes and can therefore be recovered after the pods are restarted.
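Instead of the console, server state can also be checked over REST. A minimal Python sketch, assuming the domainRuntime `serverRuntimes` collection with the `links=none&fields=name,state` query parameters and a response of the form `{"items": [{"name": ..., "state": ...}]}`. Because only started servers have a runtime entry, the check treats a missing server the same as one that is not RUNNING. The function names are hypothetical:

```python
import base64
import json
import urllib.request

# Assumed query; server names are the ones started in this sample.
QUERY = "/domainRuntime/serverRuntimes?links=none&fields=name,state"
EXPECTED_SERVERS = {"AdminServer", "managed-server-0", "managed-server-1"}


def fetch_server_states(base_url, user, password):
    """GET the server runtimes as a JSON string (assumed endpoint)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        base_url + QUERY,
        headers={"Accept": "application/json", "Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


def not_running(response_json, expected=EXPECTED_SERVERS):
    """Return expected servers that are absent or not in the RUNNING state."""
    items = json.loads(response_json).get("items", [])
    running = {item["name"] for item in items if item.get("state") == "RUNNING"}
    return sorted(set(expected) - running)
```

For example, `not_running(fetch_server_states("http://localhost:8001/management/weblogic/latest", "weblogic", "weblogic1"))` returns an empty list once all three servers are up.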

Cleanup

Enter the following command to clean up the resources used by the MySQL Server instance:

$ kubectl delete -f mysql.yml

Next, follow the steps in the “Cleanup” section of the README.md to remove the base domain and delete all other resources used by this example.

Summary and Futures

This blog demonstrated using Kubernetes as a flexible and scalable environment for hosting WebLogic Server JMS cluster deployments. We leveraged basic Kubernetes facilities to manage WebLogic Server life cycles, used file-based message persistence, and demonstrated intra-cluster JMS communication between Java EE applications. We also showed that file-based JMS persistence works well when the files are externalized to a shared data volume outside the Kubernetes pods, because the data then persists beyond the life cycle of the pods.

In future blogs we’ll explore hosting a WebLogic JMS cluster in Oracle’s upcoming, fully certified, operator-based WebLogic Server Kubernetes environment. We’ll also explore using external JMS clients to communicate with WebLogic JMS services running inside a Kubernetes cluster, using database persistence instead of file persistence, and using WebLogic JMS automatic service migration to move JMS instances from shut-down pods to running pods.