Oracle has been working with the WebLogic community to find ways to make it as easy as possible for organizations using WebLogic Server to run important workloads and to move those workloads into the cloud. One aspect of that effort is the delivery of the Oracle WebLogic Server Kubernetes Operator. In this article, we demonstrate a key feature that assists with the management of WebLogic domains in a Kubernetes environment: the ability to publish and analyze logs from the operator using products from the Elastic Stack.

What Is the Elastic Stack?

The Elastic Stack (ELK) consists of several open source products, including Elasticsearch, Logstash, and Kibana. Using the Elastic Stack with your log data, you can gain insight into your application's performance in near real time.

Elasticsearch is a scalable, distributed, RESTful search and analytics engine based on Apache Lucene. It provides flexible control over indexing and fast search across many kinds of data.

Logstash is a server-side data processing pipeline that can consume data from several sources simultaneously, transform it, and route it to a destination of your choice.

Kibana is a browser-based analytics and visualization front end for Elasticsearch that you use to explore the data that has been collected. It includes numerous capabilities for navigating, selecting, and arranging data in dashboards.

A customer who uses the operator to run a WebLogic Server cluster in a Kubernetes environment needs to monitor both the operator and the servers. Elasticsearch and Kibana provide a convenient way to do this. The following steps explain how to set it up.

Processing Logs Using ELK

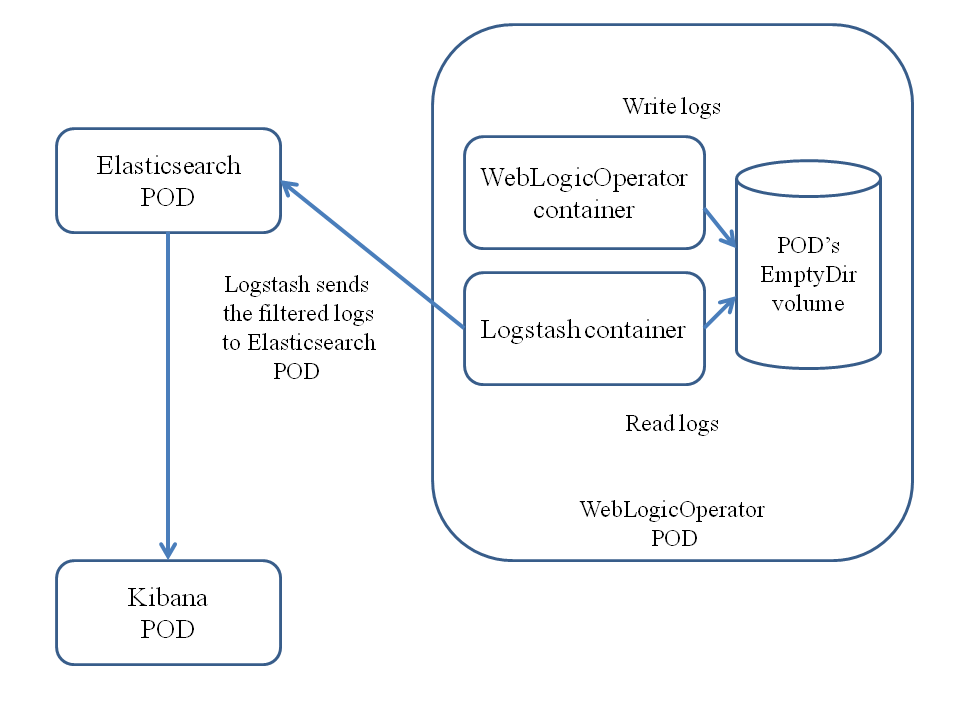

In this example, the operator and the Logstash agent are deployed in one pod, and Elasticsearch and Kibana are deployed as two independent pods in the default namespace. The logs are stored in a memory-backed volume that is shared between the operator and Logstash containers. The operator writes its logs to the shared volume, mounted at /logs. Logstash collects the logs from that volume and sends the filtered records to Elasticsearch. Finally, we use Kibana and its browser-based UI to analyze and visualize the logs.
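To make the shared-volume layout concrete, here is a minimal Python sketch of a Pod object with the same shape. It is not the manifest that the operator's scripts generate: the pod, container, volume, and image names are hypothetical placeholders. It only shows the two ingredients that matter: an emptyDir volume whose medium is Memory, and that same volume mounted at /logs in both containers.

```python
# Sketch of a pod in which the operator and Logstash share a memory-backed
# /logs volume. All names and images are hypothetical placeholders; the real
# operator deployment is generated by the operator's installation scripts.
import json

def operator_pod_spec(operator_image: str, logstash_image: str) -> dict:
    """Return a Pod object (as a dict) with two containers sharing /logs."""
    log_mount = {"name": "log-dir", "mountPath": "/logs"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "operator-with-logstash", "namespace": "weblogic-operator"},
        "spec": {
            # emptyDir with medium "Memory" is backed by RAM (tmpfs), so the
            # log files disappear when the pod is deleted.
            "volumes": [{"name": "log-dir", "emptyDir": {"medium": "Memory"}}],
            "containers": [
                # The operator writes its log (for example /logs/operator.log) here.
                {"name": "weblogic-operator", "image": operator_image,
                 "volumeMounts": [dict(log_mount)]},
                # Logstash reads the same files and ships them to Elasticsearch.
                {"name": "logstash", "image": logstash_image,
                 "volumeMounts": [dict(log_mount)]},
            ],
        },
    }

if __name__ == "__main__":
    # Print the object as JSON; kubectl apply -f also accepts JSON manifests.
    print(json.dumps(operator_pod_spec("OPERATOR_IMAGE", "LOGSTASH_IMAGE"), indent=2))
```

Because both containers mount the same named volume, anything the operator writes under /logs is immediately visible to Logstash without any network transfer between the two.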

Operator and ELK Integration

To enable ELK integration with the operator, first set the elkIntegrationEnabled parameter in the create-operator-inputs.yaml file to true. This causes Elasticsearch, Logstash, and Kibana to be installed, and Logstash to be configured to export the operator's logs to Elasticsearch. Then follow the installation instructions to install and start the operator.
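If you prepare the inputs file from a script, the change can be made with a small helper like the one below. This is a hypothetical sketch, not part of the operator; it assumes the setting appears as a single top-level line of the form `elkIntegrationEnabled: <value>`, so check your copy of create-operator-inputs.yaml before relying on it.

```python
# Hypothetical helper: set elkIntegrationEnabled to true in the operator
# inputs file. Assumes the setting is a single top-level line of the form
# "elkIntegrationEnabled: <value>"; uses only the standard library.
import re
from pathlib import Path

_ELK_SETTING = re.compile(r"^(elkIntegrationEnabled:[ \t]*)\S+", re.MULTILINE)

def enable_elk(inputs_text: str) -> str:
    """Return inputs_text with the elkIntegrationEnabled value replaced by true."""
    updated, count = _ELK_SETTING.subn(r"\g<1>true", inputs_text)
    if count != 1:
        raise ValueError(f"expected one elkIntegrationEnabled line, found {count}")
    return updated

def enable_elk_in_file(path: str = "create-operator-inputs.yaml") -> None:
    """Rewrite the inputs file in place (keep a backup if you need one)."""
    file = Path(path)
    file.write_text(enable_elk(file.read_text()))
```

A plain regular expression is used instead of a YAML parser so that comments and the rest of the file are left exactly as they were.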

To verify that ELK integration is activated, check the output produced by the following command:

$ . ./create-weblogic-operator.sh -i create-operator-inputs.yaml

This command should print the following information for ELK:

Deploy ELK...

deployment "elasticsearch" configured

service "elasticsearch" configured

deployment "kibana" configured

service "kibana" configured
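If the installation runs in an automated pipeline, you may want to check for these lines programmatically. The following Python sketch is a hypothetical helper, not part of the operator. It assumes the quoted `deployment "name" status` format shown above; other kubectl versions may format these lines differently.

```python
# Hypothetical helper: report which ELK resources are missing from the
# output of create-weblogic-operator.sh. Assumes lines of the form
#   deployment "elasticsearch" configured
# as in the sample output; other kubectl versions may format them differently.
import re

EXPECTED_ELK = {
    ("deployment", "elasticsearch"),
    ("service", "elasticsearch"),
    ("deployment", "kibana"),
    ("service", "kibana"),
}

# kind, quoted name, then any single status word ("configured", "created", ...)
_RESOURCE_LINE = re.compile(r'^(deployment|service) "([^"]+)" \w+$')

def missing_elk_resources(script_output: str) -> set:
    """Return the (kind, name) pairs from EXPECTED_ELK not seen in the output."""
    seen = set()
    for line in script_output.splitlines():
        match = _RESOURCE_LINE.match(line.strip())
        if match:
            seen.add((match.group(1), match.group(2)))
    return EXPECTED_ELK - seen
```

An empty result means all four ELK lines were found in the captured output.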

To ensure that all three deployments are up and running, perform these steps:

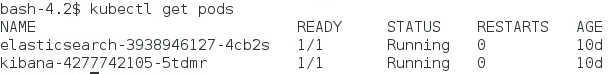

- Check that the Elasticsearch and Kibana pods are deployed and started (note that they run in the default Kubernetes namespace):

$ kubectl get pods

The following output is expected:

- Verify that the operator pod is deployed and running. Note that it runs in the weblogic-operator namespace:

$ kubectl -n weblogic-operator get pods

The following output is expected:


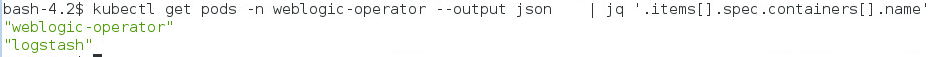

- Check that the operator and Logstash containers are running inside the operator’s pod:

$ kubectl get pods -n weblogic-operator --output json | jq '.items[].spec.containers[].name'

The following output is expected:

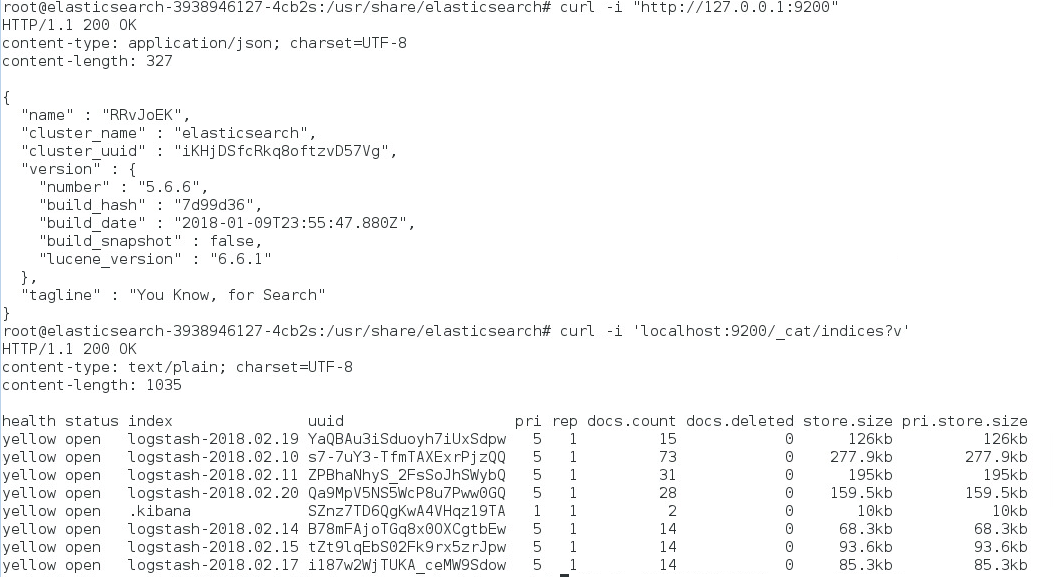

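- Verify that Elasticsearch has started. Open a shell in the Elasticsearch pod (the pod name in your cluster will differ) and query its REST API:

$ kubectl exec -it elasticsearch-3938946127-4cb2s -- /bin/bash

$ curl "http://localhost:9200"

$ curl "http://localhost:9200/_cat/indices?v"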

If Elasticsearch and Logstash started successfully, the index list includes a logstash-* index:

If no logstash-* index is listed, check the Logstash container's log output:

$ kubectl logs weblogic-operator-501749275-nhjs0 -c logstash -n weblogic-operator

If the Logstash log shows no errors, the Elasticsearch pod may have started after the Logstash container. In that case, restart Logstash to fix the problem.
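The checks in the steps above can also be scripted. The following Python sketch contains two hypothetical helpers (they are not part of the operator or of Elasticsearch) that parse output you have already captured. container_names reproduces the jq filter used earlier. logstash_indices picks the logstash-* rows out of the _cat/indices?v listing, locating the index column from the header row because the exact column set can vary between Elasticsearch versions.

```python
# Hypothetical helpers for the verification steps above; they parse output
# you have already captured, so no cluster access is needed to run them.
import json
import sys

def container_names(pod_list: dict) -> list:
    """Python equivalent of jq '.items[].spec.containers[].name'."""
    return [container["name"]
            for pod in pod_list.get("items", [])
            for container in pod["spec"]["containers"]]

def logstash_indices(cat_indices_output: str) -> list:
    """Index names starting with 'logstash-' in `_cat/indices?v` output.

    The ?v flag adds a header row, so the "index" column is located by name.
    Assumes whitespace-separated columns with no empty cells.
    """
    rows = [line.split() for line in cat_indices_output.splitlines() if line.strip()]
    if not rows:
        return []
    column = rows[0].index("index")
    return [row[column] for row in rows[1:]
            if len(row) > column and row[column].startswith("logstash-")]

if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (file and script names are illustrative):
    #   kubectl get pods -n weblogic-operator --output json > pods.json
    #   kubectl exec <elasticsearch-pod> -- curl -s "http://localhost:9200/_cat/indices?v" > indices.txt
    #   python3 check_elk.py pods.json indices.txt
    with open(sys.argv[1]) as f:
        names = container_names(json.load(f))
    with open(sys.argv[2]) as f:
        indices = logstash_indices(f.read())
    print("logstash container present:", "logstash" in names)
    print("logstash indices:", indices)
```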

Using Kibana

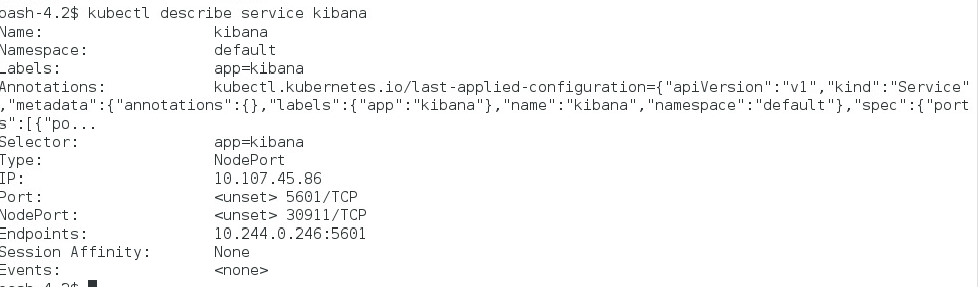

Kibana provides a web application for viewing logs. Its Kubernetes service configuration includes a NodePort so that the application can be accessed from outside the Kubernetes cluster. To find the port number, run the following command:

$ kubectl describe service kibana

This should print the service NodePort information, similar to this:

From the description of the service in our example, the NodePort value is 30911. Kibana’s web application can be accessed at the address http://[NODE_IP_ADDRESS]:30911.
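Instead of reading the NodePort from the describe output by eye, you can take it from the Service object that `kubectl get service kibana --output json` prints. The helper below is a hypothetical sketch; node_ip stands for the address of any node in the cluster, like [NODE_IP_ADDRESS] above.

```python
# Hypothetical helper: build the external Kibana URL from the Service object
# printed by "kubectl get service kibana --output json".
# node_ip is the address of any cluster node.
def kibana_url(service: dict, node_ip: str, path: str = "") -> str:
    """Return http://<node_ip>:<nodePort><path> for the first port with a nodePort."""
    for port in service["spec"]["ports"]:
        if "nodePort" in port:
            return f"http://{node_ip}:{port['nodePort']}{path}"
    raise ValueError("service exposes no NodePort")
```

For example, passing the parsed Service JSON with path "/status" yields the address of the status page used in the next step.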

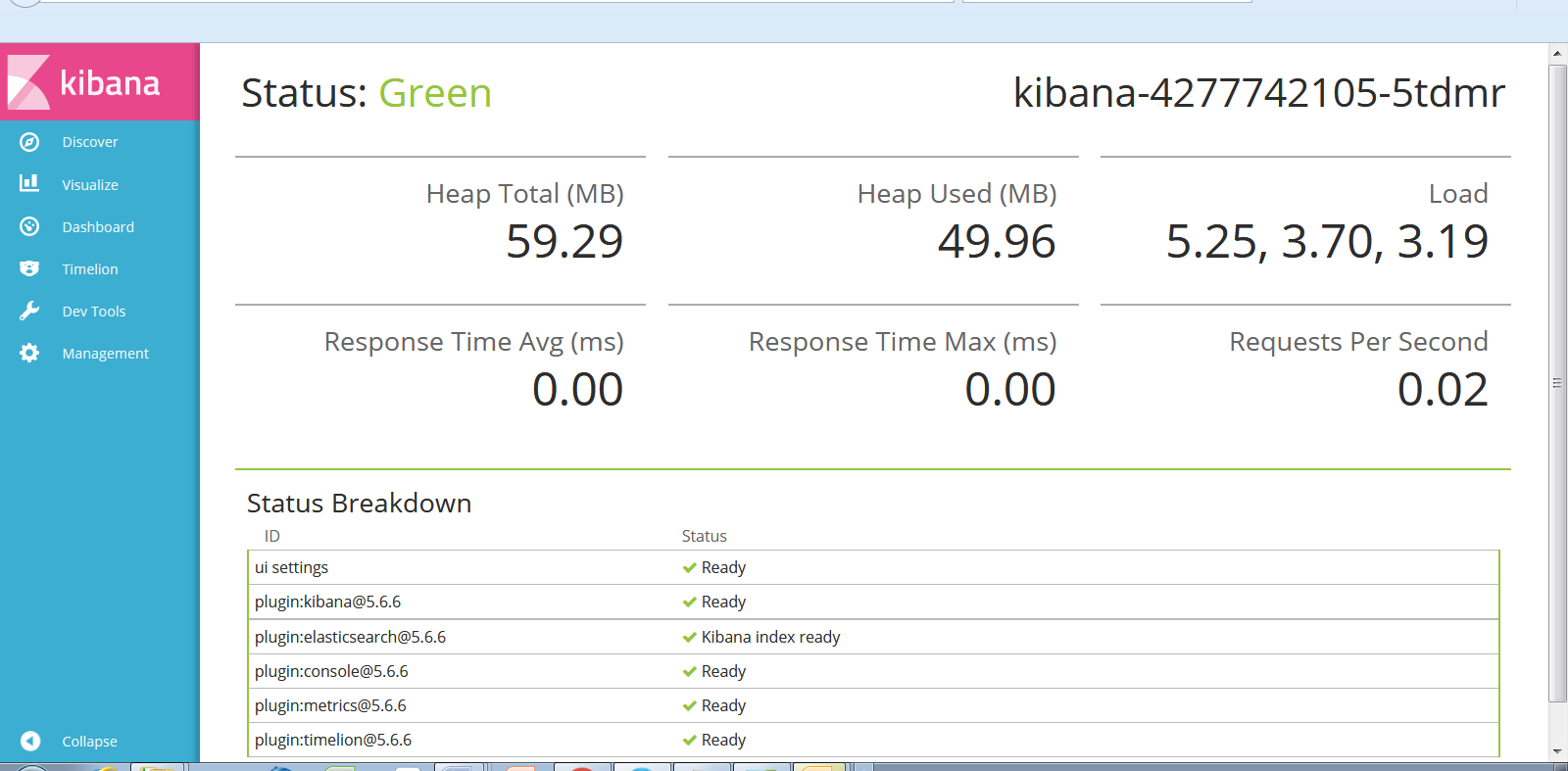

To verify that Kibana is installed correctly and to check its status, connect to the web page at http://[NODE_IP_ADDRESS]:30911/status. The status should be Green.

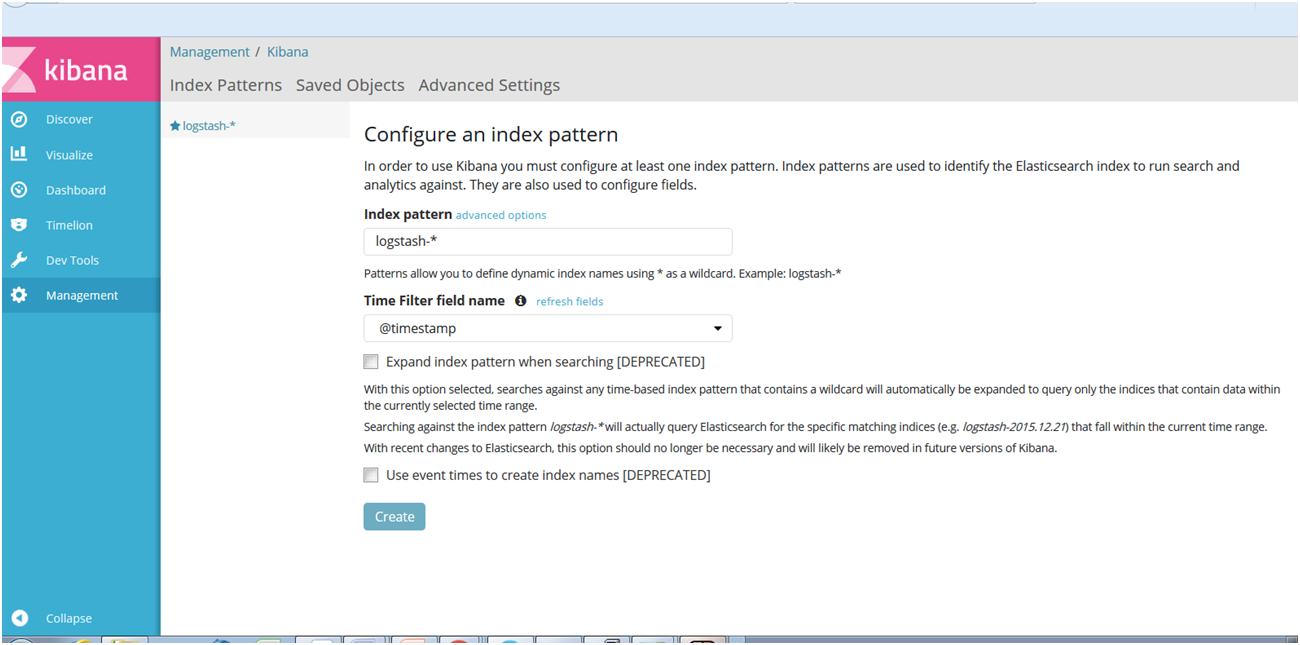

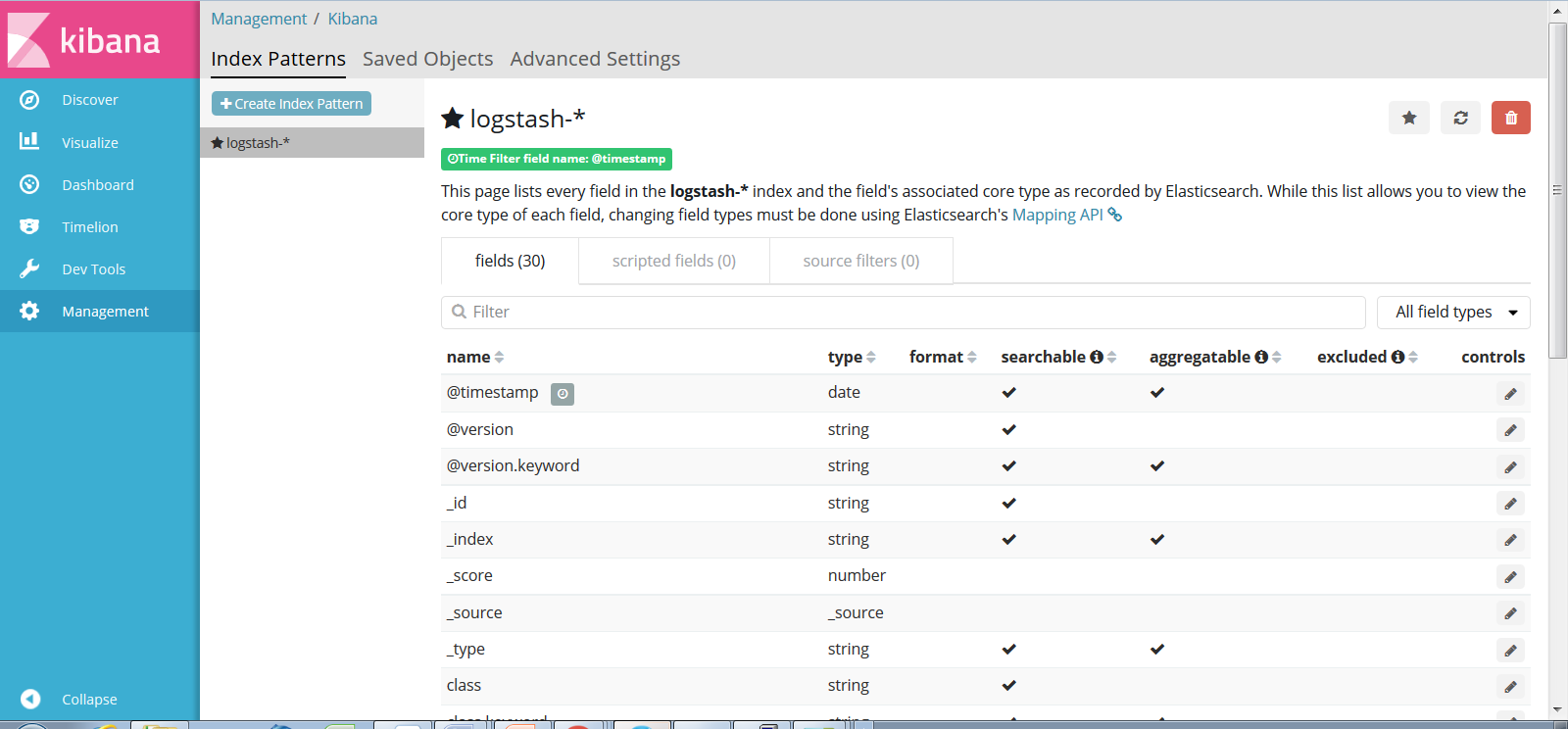

The next step is to define a Kibana index pattern. To do this, click Discover in the left panel. Notice that the default index pattern is logstash-*, and that the default time filter field name is @timestamp. Click Create.

The Management page displays the fields for the logstash-* index pattern:

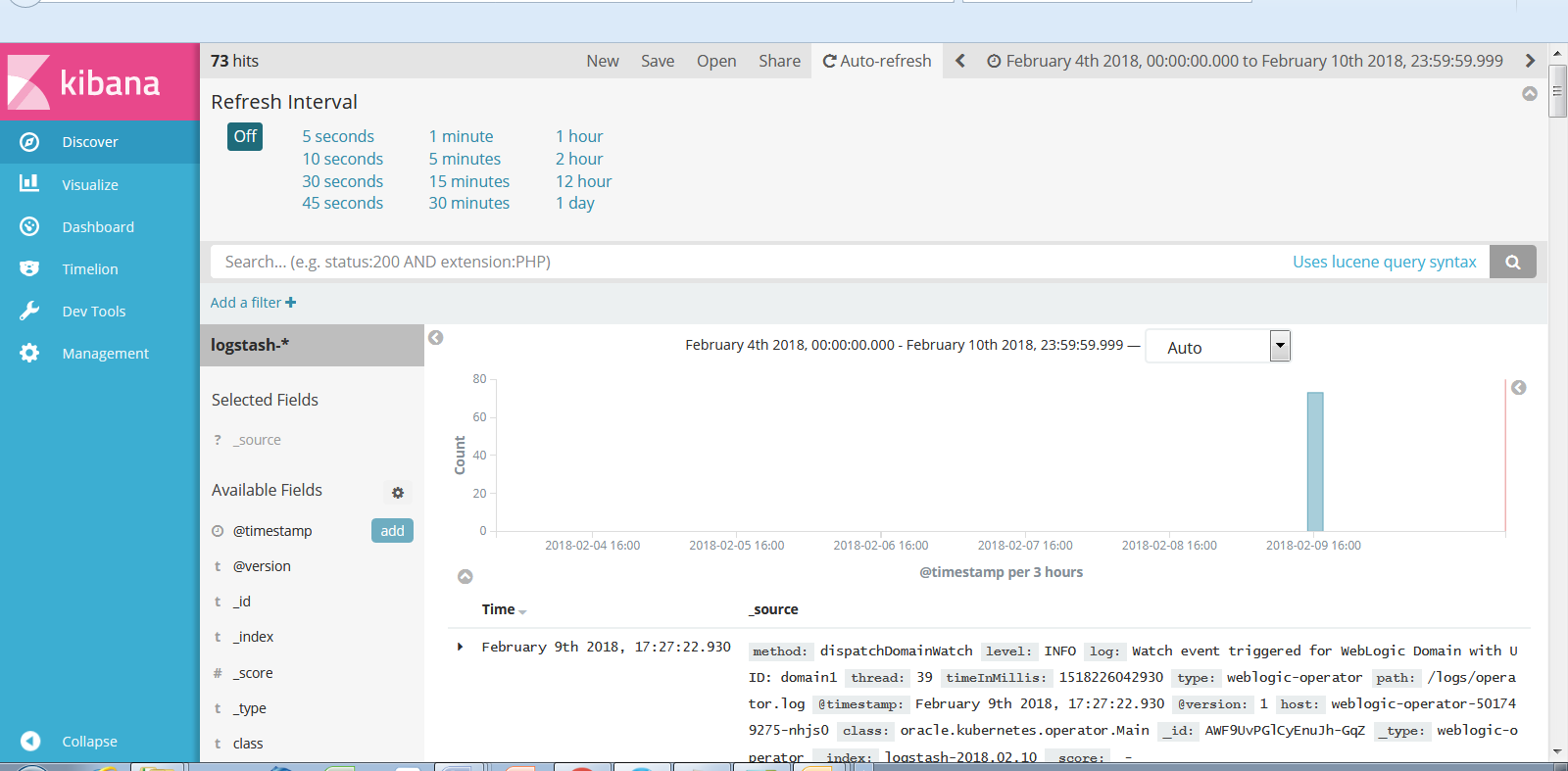
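The logstash-* pattern works because Logstash writes one index per day (such as logstash-2018.02.11 in the sample record shown later), and the trailing * lets a single pattern cover all of them. The sketch below uses Python's fnmatch as a stand-in for Kibana's wildcard matching; it illustrates the idea and is not Kibana's implementation.

```python
# Illustration only: fnmatch-style wildcards as a stand-in for how the
# logstash-* index pattern selects indices (not Kibana's implementation).
from fnmatch import fnmatchcase

def matching_indices(pattern: str, index_names: list) -> list:
    """Return the index names matched by a wildcard pattern such as logstash-*."""
    return [name for name in index_names if fnmatchcase(name, pattern)]
```

Given the names logstash-2018.02.11, logstash-2018.02.12, and .kibana, the pattern logstash-* selects only the two daily Logstash indices.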

The next step is to customize how the operator logs are presented. To configure the time interval and auto-refresh settings, click the upper-right corner of the Discover page, double-click the Auto-refresh tab, and select the desired interval, such as 10 seconds.

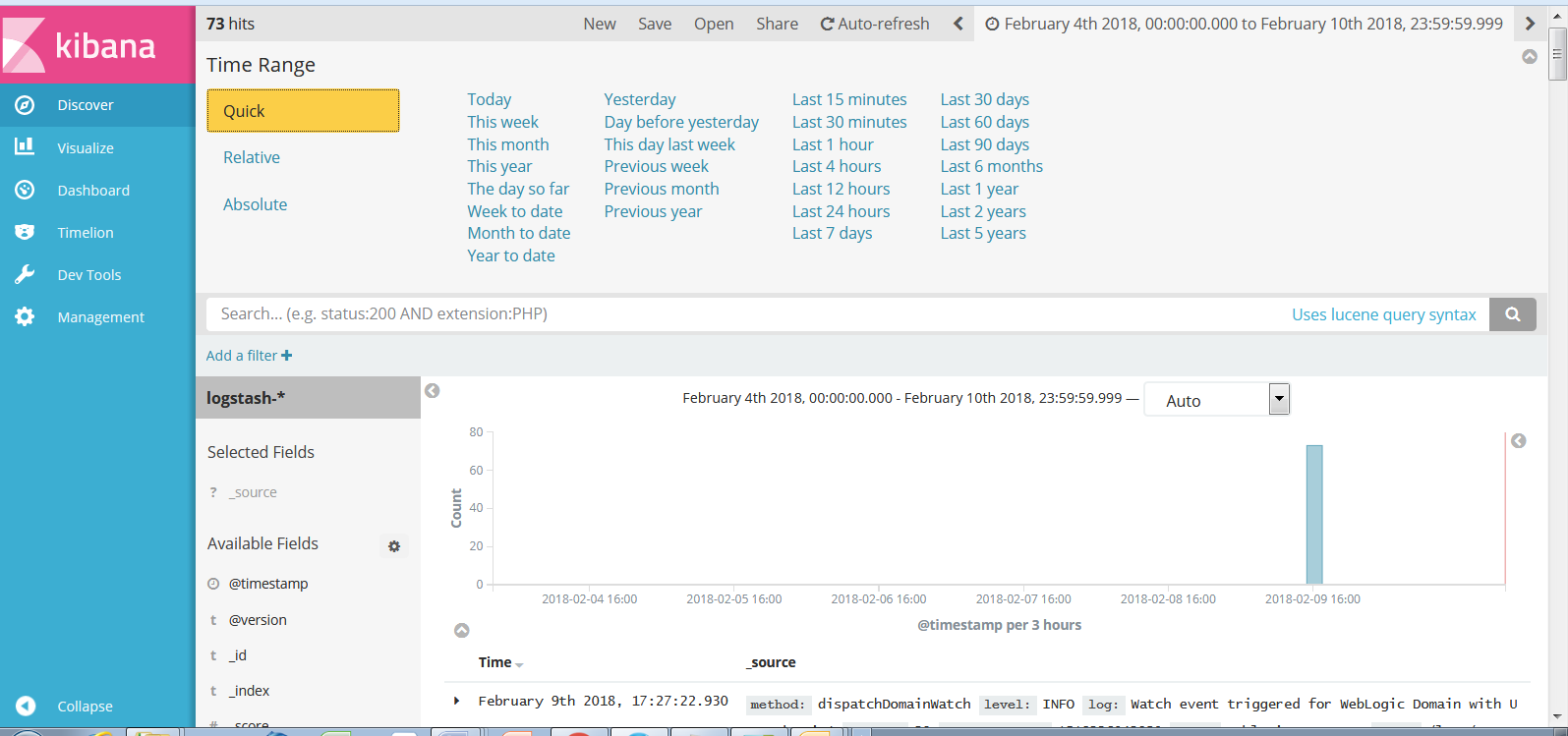

You can also set the time range to limit the log messages to those generated during a particular interval:

Logstash is configured to split the operator log records into separate fields. For example:

- method: dispatchDomainWatch
- level: INFO
- log: Watch event triggered for WebLogic Domain with UID: domain1
- thread: 39
- timeInMillis: 1518372147324
- type: weblogic-operator
- path: /logs/operator.log
- @timestamp: February 11th 2018, 10:02:27.324
- @version: 1
- host: weblogic-operator-501749275-nhjs0
- class: oracle.kubernetes.operator.Main
- _id: AWGGCFGulCyEnuJh-Gq8
- _type: weblogic-operator
- _index: logstash-2018.02.11
- _score:
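In the sample record, @timestamp and timeInMillis describe the same instant, and the _index name follows from its date. The worked check below makes that relationship explicit. It assumes Logstash's default daily index name, logstash-%{+YYYY.MM.dd}, which is formatted in UTC, and a browser time zone of UTC-8, because Kibana renders @timestamp in the browser's time zone by default.

```python
# Worked check of how timeInMillis relates to @timestamp and _index in the
# sample record. Assumptions: Logstash's default daily index name
# "logstash-%{+YYYY.MM.dd}" (formatted in UTC), and a browser in UTC-8,
# which is what makes Kibana show 10:02:27.324 for this record.
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def describe_timestamp(time_in_millis: int, display_offset_hours: int = -8) -> dict:
    # Integer millisecond arithmetic avoids floating-point rounding.
    utc = EPOCH + timedelta(milliseconds=time_in_millis)
    display_tz = timezone(timedelta(hours=display_offset_hours))
    return {
        "utc": utc.isoformat(timespec="milliseconds"),
        "displayed": utc.astimezone(display_tz).isoformat(timespec="milliseconds"),
        "index": "logstash-" + utc.strftime("%Y.%m.%d"),
    }

if __name__ == "__main__":
    for key, value in describe_timestamp(1518372147324).items():
        print(f"{key}: {value}")
```

For timeInMillis 1518372147324 this yields 2018-02-11T18:02:27.324+00:00 in UTC, 2018-02-11T10:02:27.324-08:00 as displayed, and logstash-2018.02.11, matching the @timestamp and _index values in the sample record.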

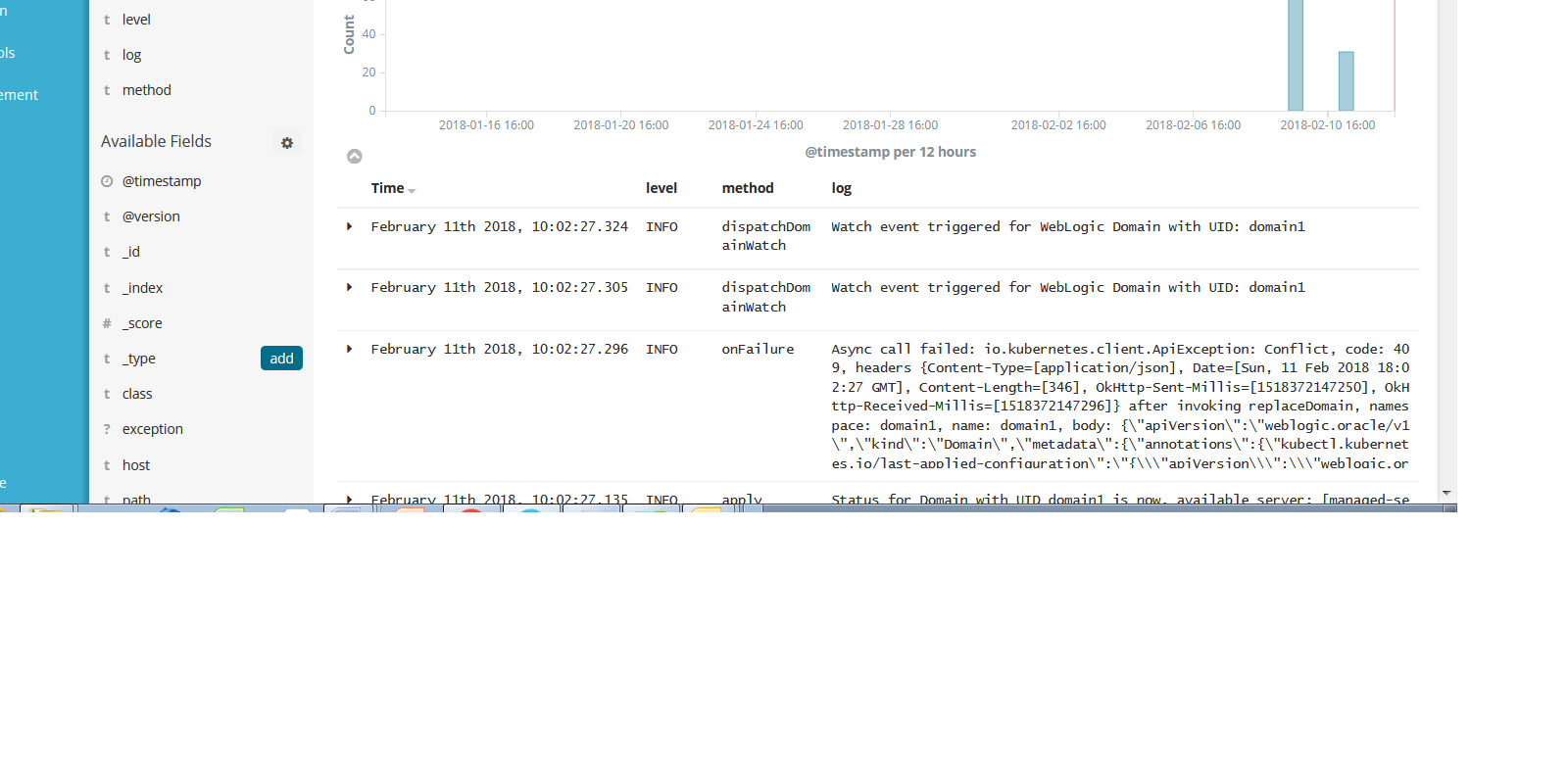

You can limit the fields that are displayed. For example, click add next to each of the level, method, and log fields. Discover then shows only those fields.

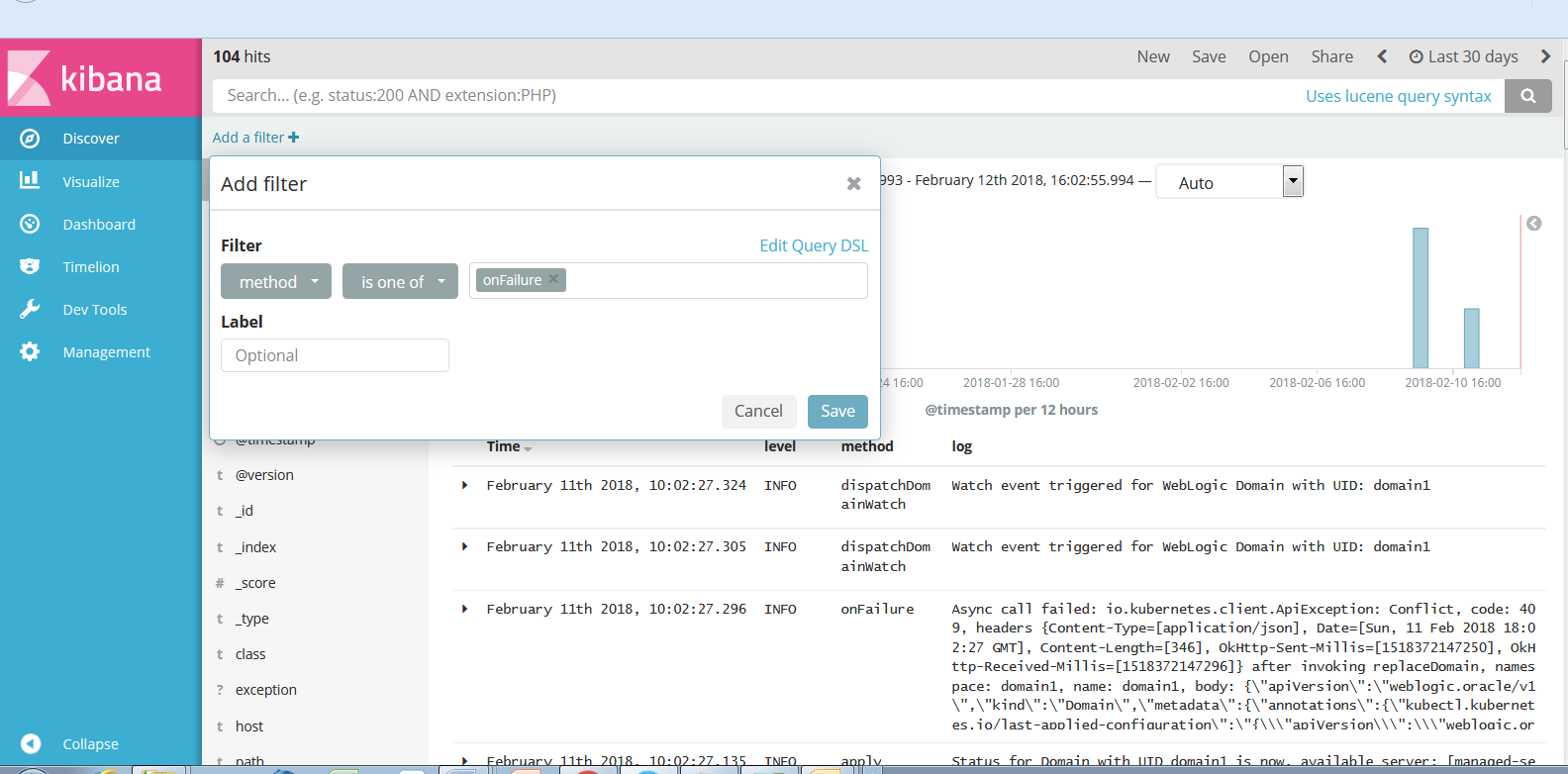

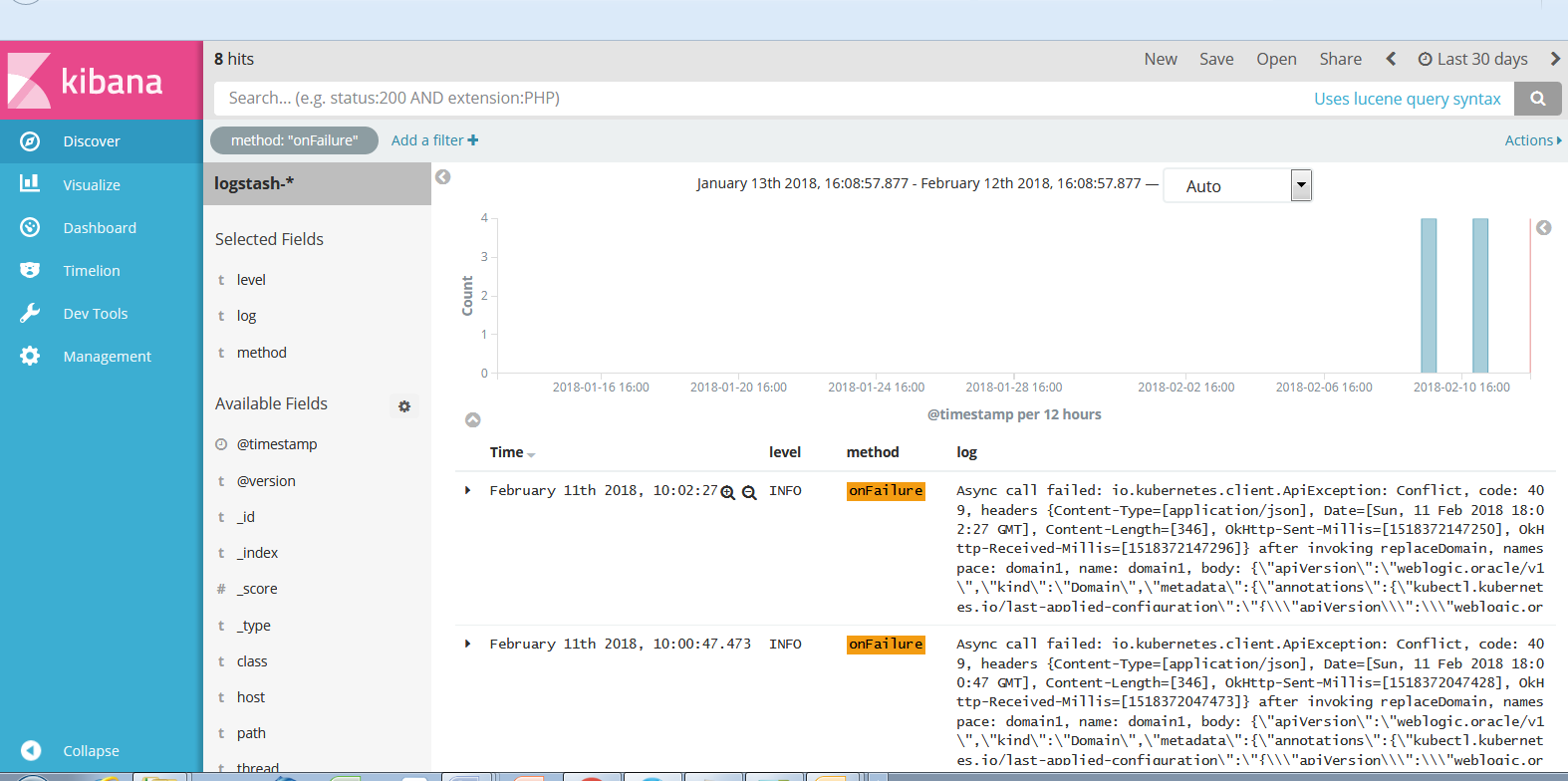

You can also use filters to display only the log messages whose fields match an expression. Click Add a filter at the top of the Discover page to create a filter expression. For example, select the method field, the is one of operator, and the value onFailure.

Kibana then displays all log messages from onFailure methods.
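Taken together, the column selection and the is one of filter behave roughly like the local function below. This is an analogy to clarify the semantics, not how Kibana or Elasticsearch evaluate the filter; discover_view is a hypothetical name, and the records are plain dictionaries shaped like the sample record above.

```python
# Rough local analogue (not Kibana's implementation) of two Discover actions:
# an "is one of" filter on one field, then showing only selected columns.
from typing import Iterable

def discover_view(records: Iterable[dict], field: str,
                  allowed: Iterable[str], columns: list) -> list:
    """Keep records whose `field` is in `allowed`, projected onto `columns`."""
    allowed_values = set(allowed)
    return [{column: record.get(column) for column in columns}
            for record in records
            if record.get(field) in allowed_values]
```

Calling discover_view(records, "method", ["onFailure"], ["level", "method", "log"]) mirrors the filter and the column choices described above. Note that the filter narrows which records are shown, while the column selection only changes how each record is displayed.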

Kibana is now configured to display the operator logs that Logstash collects and Elasticsearch stores. You can use its browser-based viewer to view and analyze the data in those logs.

Summary

In this article, you learned about the Elastic Stack and the architecture of its integration with the Oracle WebLogic Server Kubernetes Operator, followed by a detailed explanation of how to set up and configure Kibana for working with the operator logs. The Elastic Stack's capabilities, flexibility, and rich feature set make it a valuable asset for monitoring WebLogic domains in a Kubernetes environment.