This blog covers TimesTen memory management – what to look out for and how to tune it.

The concepts and actions apply to TimesTen 11.2.2.8 and 18.1 (both TimesTen Classic and TimesTen Scaleout).

This blogs covers running out of memory in a correctly configured database. When you run out of memory in a TimesTen database, the error looks like either:

- 802: Database permanent space exhausted

6220: Permanent data region free space insufficient to allocate XXX bytes of memory - 802: Database temporary space exhausted

6221: Temporary data region free space insufficient to allocate YYY bytes of memory

Overview

There are four main things to consider for TimesTen memory management:

- How much memory do you need

- The sustained SQL Insert and Delete rates for user tables

- The sustained SQL Insert and Delete rate for tables with automatic aging

- Memory fragmentation and how to combat it

Tuning memory for TimesTen performance is a separate subject that will be covered another day.

Background

TimesTen is a relational database with objects like tables, indexes, sequences, views, materialized views and PLSQL packages and procedures. As TimesTen is an In-Memory Database, these objects are always in memory all of the time to enable extreme performance. These objects are also stored in the file system for persistence.

As these objects are always in memory all of the time, care must be taken to not run out of memory. Although modern computers can have Terabytes of memory, you still need to carefully manage TimesTen Database memory. Most of the time, the TimesTen memory management ‘will just work’. When there is a memory management issue, this blog should cover the issues and how to address them.

How Much Memory do you need

You should have an estimate of how much data that you expect to store in your TimesTen Database. You will need more memory than is needed to just store the data. TimesTen uses memory for the following to create a shared memory segment for a database:

- Permanent memory for database persistent database objects like tables, indexes, sequences and materialized views

- Temporary memory for transient objects for things like SQL sorting and grouping

- The Log Buffer for fast transaction log file reads and writes

- Database connections need memory for context

TimesTen also uses a small amount of memory for the PLSQL runtime.

Sustained SQL Insert and Delete rates for user tables

The memory used by the TimesTen database can be thought of as a swimming pool.

You need to put water into it to be able to swim, but you do not want to overfill the pool.

If you keep adding water to a swimming pool it will eventually become full. For databases, if you keep adding data (eg SQL Inserts) and never remove and data [eg SQL Deletes] then your database will eventually become full. This is true whether the database is an In-Memory Database [like TimesTen] or a disk based database [like Oracle, MySQL or SQL Server etc].

If you keep adding water to a swimming pool at the same rate that you remove water, then the pool will tend to stay at the same water level. For databases, if you have a sustained SQL insert rate which is the same as the sustained SQL delete rate, then the database will tend to have a steady number of rows.

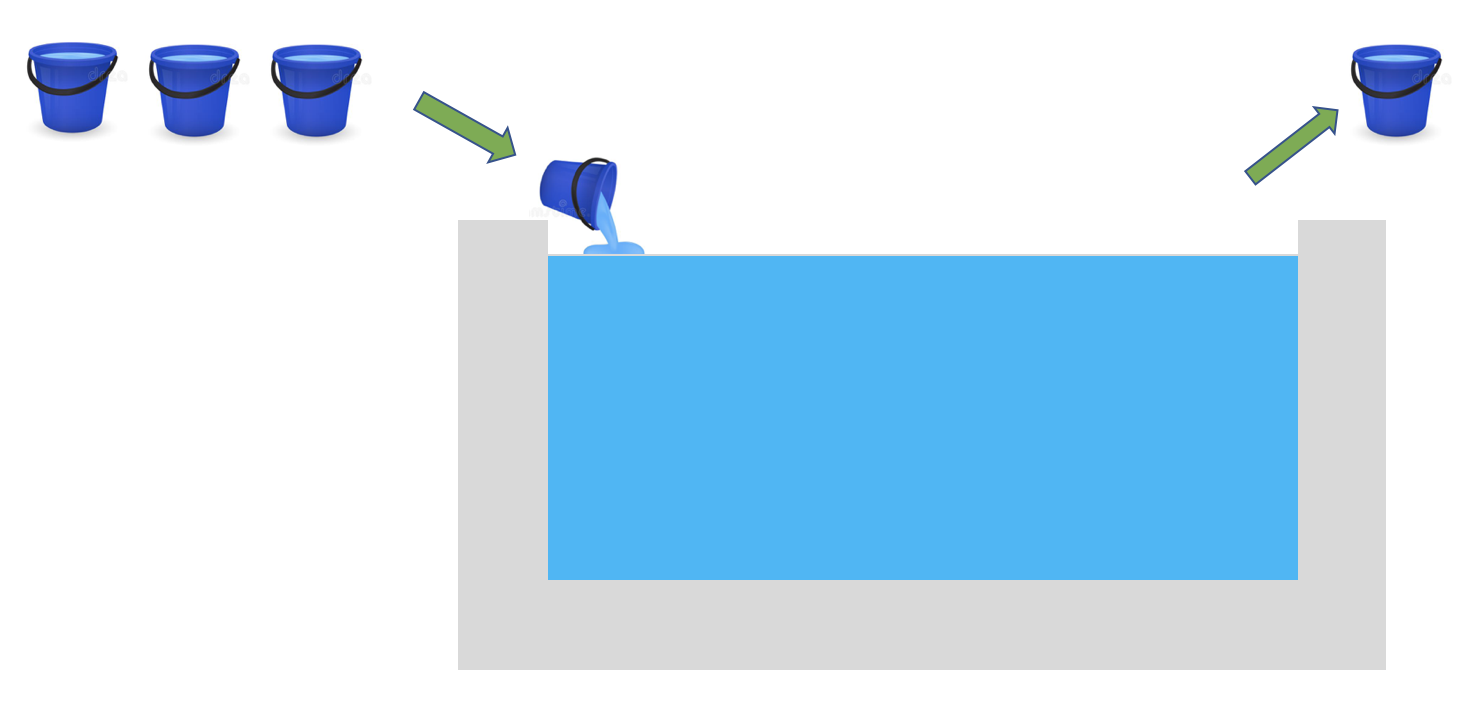

If the sustained rate at which you add water to a swimming pool is greater than the sustained rate at which you remove water from a swimming pool, then eventually the pool will fill up with water. For databases, if the sustained SQL insert rate is greater than the sustained SQL delete rate, then eventually the database will become full. Likewise if the sustained SQL insert rate is less than the sustained SQL delete rate then those tables will eventually become empty.

How to observe the SQL Insert/Delete Rates

You can get insight into the sustained SQL insert and delete rates via the ttStats command line utility. eg

$ttStats yourDSN

Running ttStats in monitor mode will look at a bunch of performance metrics and show the trends in operations per second over time.

How to observe how full a Database is

You can determine how full (as a percentage) a database is with the following SQL query from ttIsql:

Select round(((PERM_IN_USE_SIZE * 1.0) / (PERM_ALLOCATED_SIZE * 1.0)) * 100, 1) as percentFull

from sys.monitor;

For any database, you need to manage the sustained SQL insert and delete rates so that you do not end up with too much to too little data. If you have a large TimesTen database [eg a PermSize of many TB] and a low insert rate, then it will take a long time for the database to become full. However if your TimesTen database is small [eg a few Gigabytes] and you are inserting millions of rows per second then you could quickly run out space in your database.

Sustained SQL Insert and Delete Rates for tables with automatic aging

TimesTen allows you to optionally define tables to have automatic ‘aging’. This table aging is an automatic way of deleting rows from a table. The table aging algorithm can either be LRU (Least Recently Used) or time based. In addition to ‘local’ tables, TimesTen cache groups can optionally have LRU or time based aging defined for cache tables. Dynamic cache groups must use LRU aging.

LRU Aging

For LRU based aging, a single dedicated ‘aging’ sub-daemon thread will automatically delete batches of rows based on the LRU configuration via the ttAgingLRUConfig built-in. This aging sub-daemon thread is called ‘aging’ in the ttStatus output.

The input parameters to ttAgingLRUConfig are:

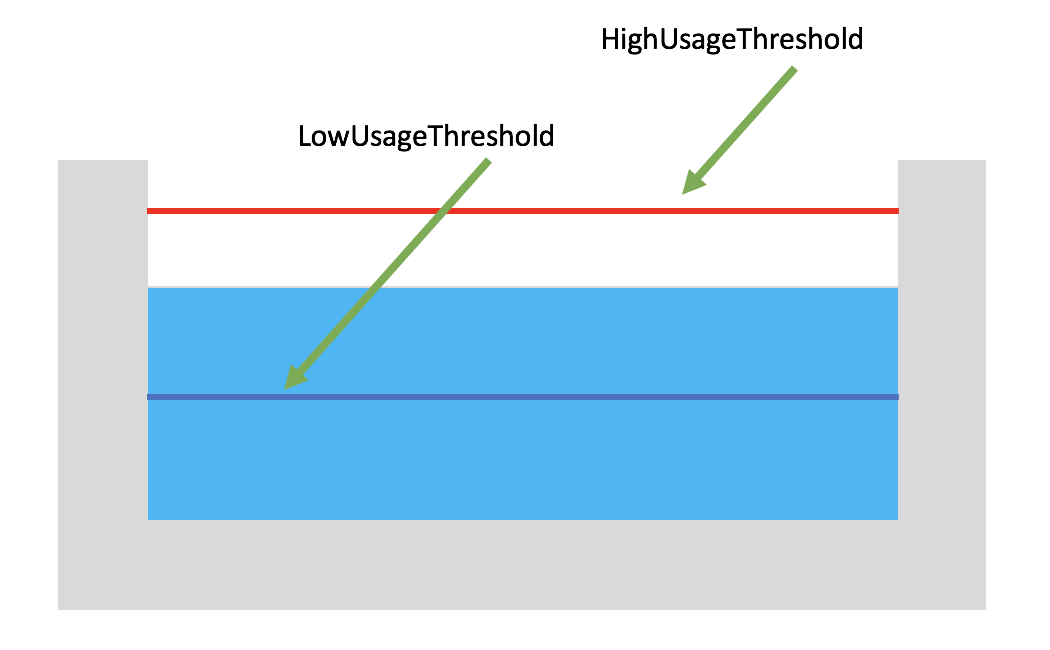

- lowUsageThreshold. Use LRU aging to delete rows until the database is only this percent full.

- highUsageThreshold. Do not start LRU aging if the database is less than this percent full.

- agingCycle. If you make this parmeter 0, it will apply the LRU aging algorithm every second

The default values are lowUsageThreshold 80%, highUsageThreshold 90% and agingCycle 0 (ie every second).

Using the default LRU aging configuration, if the database is 50% full then no LRU aging will occur as 50% < 90%.

If you have a database with a large sustained SQL insert rate [eg many connections each inserting in batches of rows in parallel], then it is possible that the single LRU aging thread with the default configuration will not be able to keep up. You can increase the sustained SQL delete rate by either:

- using a more aggressive LRU configuration. For example, with lowUsageThreshold 50%, highUsageThreshold 70% and an agingCycle of 0 there is more head room [eg 30% rather than 10%] and the LRU aging thread can delete up to 50% of the oldest data which means that it will take longer for the database to grow to the highUsageThreshold %

- and/or using one or more custom SQL delete programs to increase the effective sustained SQL delete rate

You decide on a table by table basis whether to use LRU table aging. The LRU aging configuration is defined at the database level. Usually the default LRU aging configuration is OK for most tables.

Time Based Aging

You can also optionally use time based aging for tables. When you use time based aging, you define the data lifetime for rows and how often the aging process checks for old data to delete.

Time based aging also uses the ‘aging’ sub-deamon thread.

If you have a database with a large sustained SQL insert rate then using time based aging where the data is only checked one per day would not be good idea. Instead for this workload, using an AgingCycle defined in seconds and a lifetime of seconds or minutes would be more appropriate.

Memory fragmentation and how to combat it

The TimesTen In-Memory Database has multiple different memory objects and algorithms. TimesTen can be thought of as using pools [or regions] for some memory management algorithms. User visible memory objects include the permanent memory region, the temporary memory region, the log buffer, the PLSQL SGA, perm blocks and heaps.

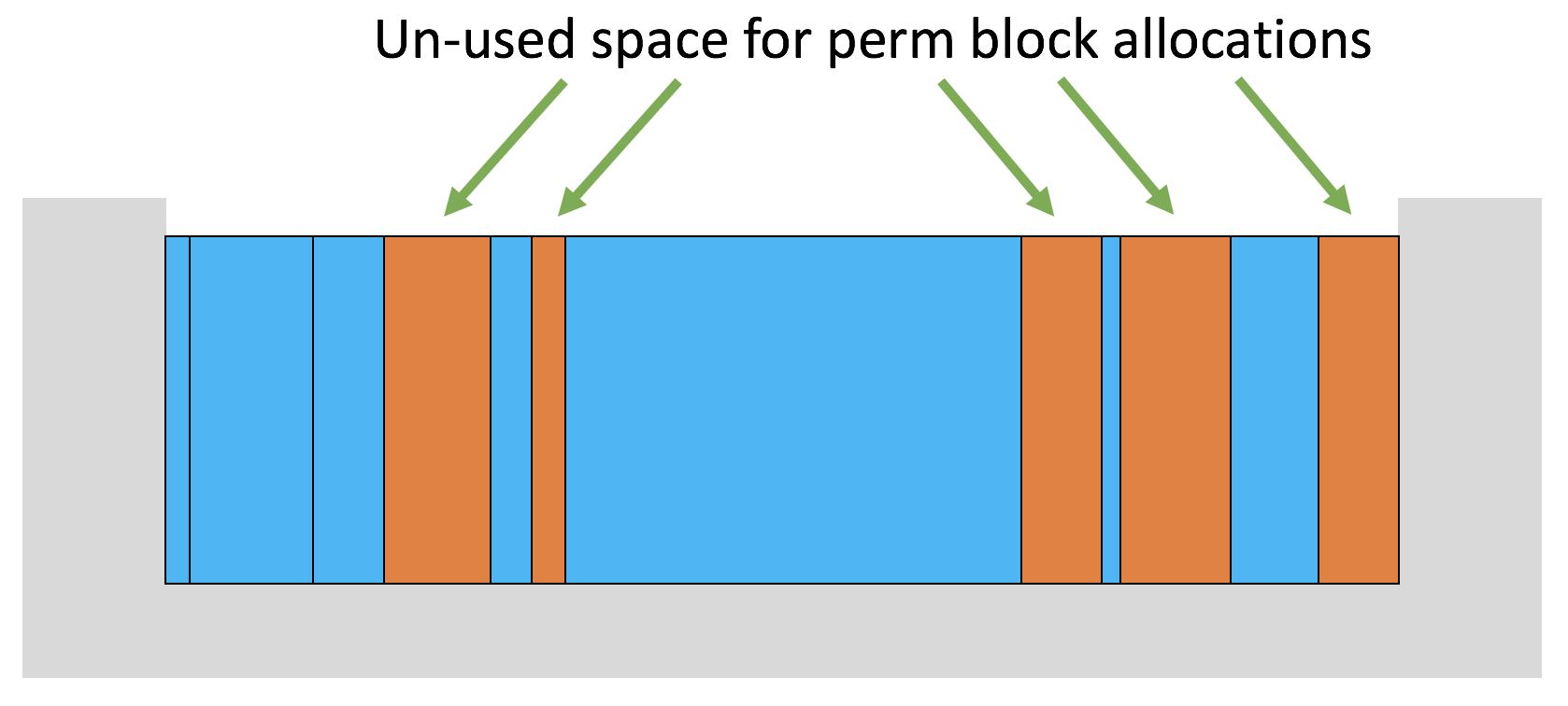

SQL inserts tend to result in [perm] blocks being allocated and SQL deletes tend to result in [perm] blocks being de-allocated. To enable fast block allocations and de-allocations, TimesTen uses free lists. Perm blocks can vary in size. An issue that all memory managers need to deal with is memory fragmentation.

Memory fragmentation can seem strange if you are not aware that it is happening. For example, if your 100 GB database is 99% full but you cannot insert a row that uses 100KB due to ‘insufficient’ permanent memory then you will likely be confused and annoyed. Although your database in theory has 1GB of free space, in practice due to excessive memory fragmentation (in blocks and/or heaps), it may be that an allocation of 100KB it not possible at that time [even though smaller allocations are possible].

Using ttBlockInfo to check for block fragmentation

The ttBlockInfo built-in provides visibility for block fragmentation.

The output of ttBlockInfo is:

- TotalBlocks

- FreeBlocks

- FreeBytes

- LargestFree

A symptom of excessive block fragmentation is that ‘large’ block allocations are not possible even though there are sufficient FreeBytes because the LargestFree block is not big enough. Although block fragmentation in TimesTen is possible, but not being able to allocate a large block due to fragmentation is rare.

Using ttHeapInfo to check for heap fragmentation

TimesTen memory heaps dynamically grow/shrink and use freelists for fast heap allocations/de-allocations. Each heap’s deferred freelists can also suffer from memory fragmentation. Freelist compaction is used to minimize memory fragmentation.

The ttHeapInfo built-in provides visibility into the various TimesTen heaps [eg tables, indexes and compiled SQL statements]. For each heap, ttHeapInfo output includes:

- The heap name [name]

- The heap allocated size [size]

- The current heap size [inuse]

- The maximum amount of memory used in the heap [highWater]

- The number of deferred freed memory buffers [freeDeferred]

Freelist compaction can either be done within the scope of a transaction [the default] or in the background. The default memory management configurations and algorithms are sufficient for most workloads.

How to combat memory fragmentation

There are several ways to address block fragmentation and heap freelist management.

A. Choose your foreground memory compaction algorithm

TimesTen 11.2.2.8 and 18.1 support two algorithms for compaction of heaps and blocks.

- The default ‘old’ algorithm for TimesTen 11.2.2 does minimal compaction in the current transaction

- An alternative ‘new’ algorithm does more compaction [in constant time] in the current transaction

- The memory compaction algorithm can be changed at runtime using the ttDBConfig built-in:

- ttDBConfig(‘newHeapBehavior’, 0)

- This means that the ‘old’ heap algorithm is used

- This is the default for TimesTen 11.2.2

- ttDBConfig(‘newHeapBehavior’, 1)

- This means that the ‘new’ heap algorithm is used

- This is the default for TimesTen 18.1

- ttDBConfig(‘newHeapBehavior’, 0)

If you are experiencing memory fragmentation, it is advisable to test your workloads with both heap management algorithms. Make sure that you measure the latency and throughput for the expected concurrency for both algorithms so that you can make an informed decision on which heap management algorithm to use.

B. Optionally use a background thread for memory compaction

If needed, a dedicated memory compaction thread can enabled via the ttDBCompactConfig built-in:

- This thread does block coalescing and heap freelist management in the background

- By default, this compaction thread is not enabled as it is not always needed and it has an overhead

- You can choose how often and how much work that this compaction thread does:

- The quantum parameter determines how many blocks are coalesced per execution

- The compactsPerSecond parameter determines how many times compaction occurs per second

- The threshold parameter determines whether there is sufficient fragmentation to require compaction for that iteration

- In TimesTen 11.2.2.8, the memory compaction sub-daemon thread is called AsyncMV in the ttStatus output

- In TimesTen 18.1, the memory compaction sub-daemon thread is called Garbage Collector in the ttStatus output

- The newHeapBehavior algorithms and the dedicated compaction thread are independent mechanisms which can be combined if the workload has excessive memory fragmentation

C. Optionally use more, smaller blocks for row allocations

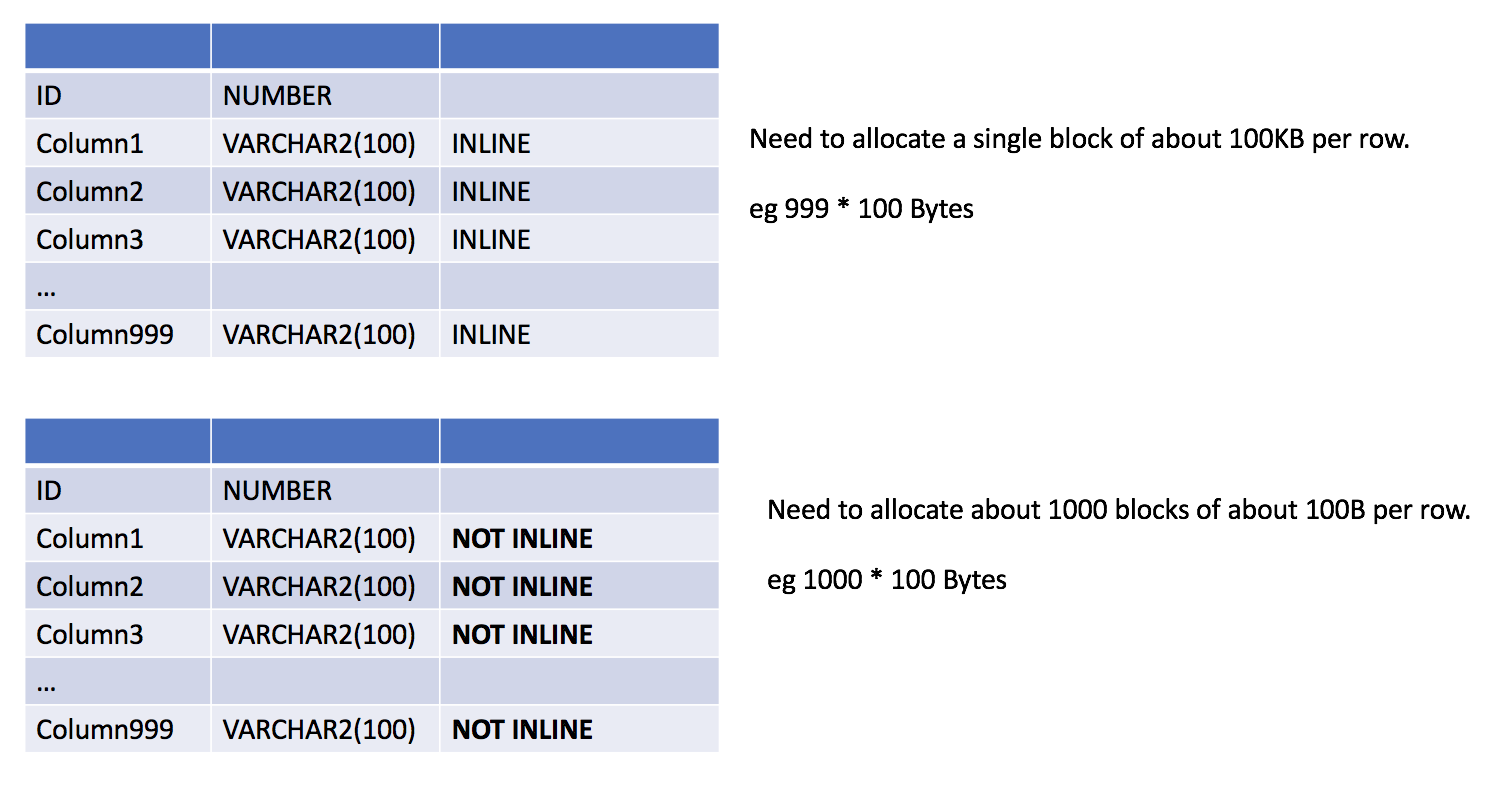

‘Large’ block allocation failures due to memory fragmentation may be able to be reduced by using out of line storage for variable length table columns

- Variable length columns in TimesTen tables [eg varchar2 or nvarchar2] which use less than 128 bytes are by default stored INLINE. If these columns are greater then 128 bytes then by default they are stored NOT INLINE

- If a column is stored out of line [ie NOT INLINE] then the memory for that column can be allocated from a separate database block.

- If a table has a large number of variable length columns which are <= 128 bytes in length, then by declaring that those columns are NOT INLINE, more smaller blocks are used rather than one large block

Note, the actual memory allocated per row is far more complex than this in both cases.

In this example, about the same total amount of memory is allocated in both cases, but in the second case, many smaller allocations are needed as compared with fewer larger allocations, and the smaller allocations are more likely to succeed in the presence of severe memory fragmentation.

Summary

Most of the time, memory management is not of concern for the TimesTen In-Memory Database as everything just works. When your workload is experiencing memory management issues, you should look at the following things in this order:

- Whether there is sufficient memory for the expected workloads

- The sustained SQL insert and delete rates for tables which are running out of space

- The sustained SQL insert and delete rates for tables with automatic aging which are running out of space

- Which heap management algorithm is being used

- Whether the dedicated memory compaction sub-daemon thread should be used and if so how it is configured

- Whether declaring variable length columns as NOT INLINE will help to reduce memory allocation failures

- The key builtins and utilities used in this blog are:

Disclaimer: These are my personal thoughts and do not represent Oracle’s official viewpoint in any way, shape, or form.