Overview

Since Oracle Solaris 11.4, the state of zones on the system is kept in a shared database in /var/share/zones/, meaning a single database is accessed from all boot environments (BEs). Up to and including Oracle Solaris 11.3, however, each BE kept its own local copy in /etc/zones/index, and the individual copies were never synced across BEs.

This article provides some history, explains why we moved to the shared zone state database, and describes what it means for administrators when updating from 11.3 to 11.4.

Keeping Zone State in 11.3

In Oracle Solaris 11.3, zone state is kept separately for every global zone BE in a local text database, /etc/zones/index. The zoneadm and zonecfg commands then operate on the copy that belongs to the booted BE.

In a world where systems are migrated between hosts, having a local zone state database in every BE is a problem if we, for example, update to a new BE and then migrate a zone before booting into the newly updated BE. When we eventually boot the new BE, the zone ends up in the unavailable state (the system recognizes that the shared storage is already in use and puts the zone into that state), suggesting that it should possibly be attached. However, as the zone was already migrated, an admin expects the zone to be in the configured state instead.

The 11.3 implementation may also lead to a situation where all BEs on a system represent the same Solaris instance (see below for the definition of a Solaris instance), and yet each BE is linked to a different non-global zone BE (ZBE) for a zone of the same name, with each ZBE containing an unrelated Solaris instance. This happens on 11.3 if we reinstall a given non-global zone in each BE.

Solaris Instance

A Solaris instance represents a group of related IPS images. Such a group is created when a system is installed. One installs a system from the media or an install server, via "zoneadm install" or "zoneadm clone", or from a clone archive. Subsequent system updates add new IPS images to the same image group, which represents the same Solaris instance.

Uninstalling a Zone in 11.3

In 11.3, uninstalling a non-global zone (i.e., a native solaris(5) branded zone) means deleting the ZBEs linked to the currently booted BE and updating the state of the zone in /etc/zones/index. Often there is only one ZBE to delete. ZBEs linked to other BEs are not affected, and destroying a BE destroys only the ZBE(s) linked to that BE. In 11.3, the only supported way to completely uninstall a non-global zone from a system is to boot each BE and uninstall the zone from there.

For Kernel Zones (KZs) installed on ZVOLs, each BE that has the zone in its index file is linked to the ZVOL via a <dataset>.gzbe:<gzbe_uuid> attribute. Uninstalling a Kernel Zone on a ZVOL removes that BE-specific attribute; the ZVOL itself is deleted during the KZ uninstall or BE destroy only if no other BE is linked to it. In 11.3, the only supported way to completely uninstall a KZ from a system was to boot every BE and uninstall the KZ from there.
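The ZVOL rule above works like reference counting. The following Python sketch is a toy model for illustration only, not Solaris code: the class ZVol, the function unlink_gzbe(), and the BE UUID strings are hypothetical, and the set of linked BEs merely stands in for the <dataset>.gzbe:<gzbe_uuid> attributes.

```python
# Toy model (hypothetical, not Solaris code) of the 11.3 Kernel Zone ZVOL
# rule: every BE that has the KZ in its index is linked to the ZVOL, and the
# ZVOL is deleted only once the last link is gone.
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ZVol:
    name: str
    # Stands in for the <dataset>.gzbe:<gzbe_uuid> attributes.
    linked_gzbes: Set[str] = field(default_factory=set)
    deleted: bool = False


def unlink_gzbe(zvol: ZVol, gzbe_uuid: str) -> None:
    """Model a KZ uninstall in one BE, or the destruction of that BE."""
    # Only this BE's link is removed; other BEs keep theirs.
    zvol.linked_gzbes.discard(gzbe_uuid)
    # The ZVOL itself goes away only when no BE references it any more.
    if not zvol.linked_gzbes:
        zvol.deleted = True


if __name__ == "__main__":
    vol = ZVol("kz1-disk0", {"gzbe-uuid-1", "gzbe-uuid-2"})
    unlink_gzbe(vol, "gzbe-uuid-1")
    print(vol.deleted)  # False: gzbe-uuid-2 still links the ZVOL
    unlink_gzbe(vol, "gzbe-uuid-2")
    print(vol.deleted)  # True
```

In this model, as long as any BE keeps its link, the ZVOL survives, which is why every BE had to be booted to remove the zone completely.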

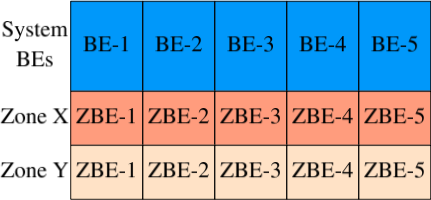

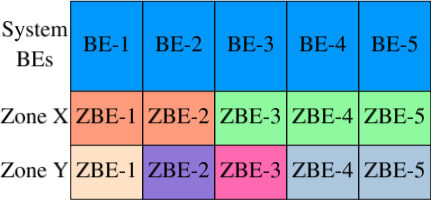

The pictures above show what can happen when zones are reinstalled during the normal system life cycle. Each color represents a unique Solaris instance.

The first picture shows a typical situation with solaris(5) branded zones. After the system is installed, two zones are installed into BE-1. After that, the zones are updated on every system update as part of the normal pkg update process.

The second picture, though, shows what happens if zones are reinstalled during the normal life cycle of the system. Zone X was reinstalled in BE-3, while Zone Y was reinstalled in BE-2, BE-3, and BE-4 (but not in BE-5). This leads to a situation where there are two different instances of Zone X on the system and four different instances of Zone Y. Which zone instance is used depends on which BE the system is booted into. Note that the system itself and any zone always represent different Solaris instances.

Undesirable Impact of the 11.3 Behavior

The described behavior could lead to undesired situations in 11.3:

- With multiple ZBEs present, if a non-global zone is reinstalled, we end up with ZBEs under the zone's rpool/VARSHARE dataset that represent unrelated Solaris instances and yet share the same zone name. That can lead to the migration problems described in the first section.

- If a Kernel Zone is used in multiple BEs and the KZ is uninstalled and then reinstalled, the installation fails with an error message stating that the ZVOL is in use in other BEs.

- The only supported way to uninstall a zone is to boot into every BE on a system and uninstall the zone from there. With multiple BEs, that is definitely a suboptimal solution.

Sharing a Zone State across BEs in 11.4

As already stated in the Overview section, in Oracle Solaris 11.4 the system shares zone state across all BEs. The shared zone state resolves the issues described in the previous sections.

In most situations, nothing changes for users and administrators, as the existing interfaces were not changed (though some were extended). The major implementation change was to move the local, line-oriented textual database /etc/zones/index to the shared directory /var/share/zones/ and to store it in JSON format. However, as before, the location and format of the database are just an implementation detail and are not part of any supported interface.

To be precise, one interface was removed: as part of the Shared Zone State project, we EOF'd (end-of-featured) zoneadm -R <root> mark. That interface was already of very little use, and then only in some rare maintenance situations.

Also let us be clear that all 11.3 systems use and will continue to use the local zone state index /etc/zones/index. We have no plans to update 11.3 to use the shared zone state database.

Changes between 11.3 and 11.4 with regard to keeping the Zone State

With the introduction of the shared zone state, you can run into situations that were previously either impossible or could only have been created through unsupported actions.

Creating a new zone on top of an existing configuration

When a zone is deleted, its record is removed from the shared index database and its configuration is deleted from the current BE. Mounting every other inactive BE and removing the configuration files from each would be time-consuming, so those files are left behind.

That means that if one later boots into one of those previously inactive BEs and tries to create the zone there (the zone no longer exists, as it was removed from the shared database), zonecfg may hit an already existing configuration file. We extended the zonecfg interface so that you can choose to either overwrite the configuration or reuse it:

    root# zonecfg -z zone1 create
    Zone zone1 does not exist but its configuration file does. To reuse it, use -r; create anyway to overwrite it (y/[n])? n
    root# zonecfg -z zone1 create -r
    Zone zone1 does not exist but its configuration file does; do you want to reuse it (y/[n])? y
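The choice zonecfg offers here can be summarized as a small decision function. This is a hypothetical Python model of the prompts in the transcript above, not the zonecfg implementation; the names zonecfg_create, shared_index, and local_configs, the placeholder configuration string, and the returned strings are all illustrative assumptions.

```python
# Hypothetical model of "zonecfg -z <zone> create [-r]" in an 11.4 BE that
# still holds a configuration file left behind by an earlier delete.
from typing import Dict


def zonecfg_create(zone: str, shared_index: Dict[str, str],
                   local_configs: Dict[str, str], reuse: bool,
                   answer_yes: bool) -> str:
    if zone in shared_index:
        raise ValueError(f"zone {zone!r} already exists")
    if zone in local_configs:
        # A stale file was found: without -r the user is asked whether to
        # overwrite it, with -r whether to reuse it. Answering "n" aborts.
        if not answer_yes:
            return "aborted"
        if not reuse:
            local_configs[zone] = "fresh configuration"  # placeholder
        result = "reused" if reuse else "overwritten"
    else:
        local_configs[zone] = "fresh configuration"  # placeholder
        result = "created"
    # In every non-aborted case the zone record goes into the shared index.
    shared_index[zone] = "configured"
    return result


if __name__ == "__main__":
    index: Dict[str, str] = {}
    configs = {"zone1": "left-over configuration"}
    print(zonecfg_create("zone1", index, configs, reuse=False, answer_yes=False))
    print(zonecfg_create("zone1", index, configs, reuse=True, answer_yes=True))
```

The two calls mirror the transcript: the first answers "n" and returns "aborted", and the second reuses the left-over file and returns "reused".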

Existing Zone without a configuration

If an admin creates a zone, the zone record is put into the shared index database. If the system is later booted into a BE that existed before the zone was created, that BE will not have the zone configuration file (unless one was left behind earlier), but the zone will still be known, because the zone state database is shared across all 11.4 BEs. In that case, the zone state in such a BE is reported as incomplete (note that not even the zone brand is known, as it is also part of the zone configuration).

    root# zoneadm list -cv
      ID NAME             STATUS      PATH                         BRAND      IP
       0 global           running     /                            solaris    shared
       - zone1            incomplete  -                            -          -

When listing the auxiliary state, you will see that the zone has no configuration:

    root# zoneadm list -sc
    NAME              STATUS      AUXILIARY STATE
    global            running
    zone1             incomplete  no-config

If you want to remove that zone, the -F option is needed. Because removing the zone makes it invisible from all BEs, including any that may still hold a usable zone configuration, we introduced this new option so that an administrator does not remove such a zone by accident.

    root# zonecfg -z newzone delete
    Use -F to delete an existing zone without a configuration file.
    root# zonecfg -z newzone delete -F
    root# zoneadm -z newzone list
    zoneadm: zone 'newzone': No such zone exists
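The listing and deletion rules above can be modeled together. The following Python sketch is hypothetical: zone_status(), zonecfg_delete(), and the dictionaries standing in for the shared index and the BE-local configuration files are illustrative names, not real Solaris interfaces. Only the status strings and the -F message are taken from the transcripts above.

```python
# Hypothetical model of how an 11.4 BE reports a zone that exists in the
# shared index but has no local configuration file, and why delete then
# requires -F.
from typing import Dict, Optional, Tuple


def zone_status(zone: str, shared_index: Dict[str, str],
                local_configs: Dict[str, str]) -> Tuple[str, Optional[str]]:
    """Return (STATUS, AUXILIARY STATE) as zoneadm list -sc would show them."""
    if zone not in shared_index:
        raise LookupError(f"zone '{zone}': No such zone exists")
    if zone not in local_configs:
        # Known to all BEs, but this BE lacks the configuration (and brand).
        return "incomplete", "no-config"
    return shared_index[zone], None


def zonecfg_delete(zone: str, shared_index: Dict[str, str],
                   local_configs: Dict[str, str], force: bool = False) -> None:
    if zone not in shared_index:
        raise LookupError(f"zone '{zone}': No such zone exists")
    if zone not in local_configs and not force:
        # Deleting removes the zone from *all* BEs, some of which may still
        # hold a usable configuration, so an explicit -F is required.
        raise PermissionError(
            "Use -F to delete an existing zone without a configuration file.")
    # The record leaves the shared index; only this BE's file is removed.
    del shared_index[zone]
    local_configs.pop(zone, None)


if __name__ == "__main__":
    index = {"newzone": "configured"}
    configs: Dict[str, str] = {}
    print(zone_status("newzone", index, configs))  # ('incomplete', 'no-config')
    zonecfg_delete("newzone", index, configs, force=True)
    print(index)  # {}
```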

Updating to 11.4 while a shared zone state database already exists

When the system is updated from 11.3 to 11.4 for the first time, the shared zone database is created from the local /etc/zones/index on the first boot into the 11.4 BE. A new svc:/system/zones-upgrade:default service instance takes care of that. If the system is then brought back to 11.3 and updated to 11.4 again, the system (i.e., the service instance mentioned above) finds an existing shared index when first booting into the new 11.4 BE, and if the two indexes differ, there is a conflict that must be resolved.

In order not to add more complexity to the update process, the rule is that on every update from 11.3 to 11.4, any existing shared zone index database /var/share/zones/index.json is overwritten with data converted from the /etc/zones/index file of the 11.3 BE the system was updated from, if the data differs.

If there were no changes to zones between the 11.4 updates, neither in any older 11.4 BE nor in the 11.3 BE from which we are updating to 11.4 again, the shared zone index database is unchanged and the existing shared database does not need to be overwritten.

However, if there were changes to zones, e.g., a zone was created, installed, uninstalled, or detached, the old shared index database is saved on boot, the new index is installed, and the service instance svc:/system/zones-upgrade:default is put into the degraded state. Because in 11.4 the output of svcs -xv also reports services in the degraded state, this serves as a hint to the system administrator to check the service log:

    root# beadm list
    BE          Flags Mountpoint Space  Policy Created
    --          ----- ---------- -----  ------ -------
    s11_4_01    -     -          2.93G  static 2018-02-28 08:59
    s11u3_sru24 -     -          12.24M static 2017-10-06 13:37
    s11u3_sru31 NR    /          23.65G static 2018-04-24 02:45
    root# zonecfg -z newzone create
    root# pkg update entire@latest
    root# reboot -f
    root#
    root# beadm list
    BE Name     Flags Mountpoint Space  Policy Created
    ----------- ----- ---------- ------ ------ ----------------
    s11_4_01    -     -          2.25G  static 2018-02-28 08:59
    s11u3_sru24 -     -          12.24M static 2017-10-06 13:37
    s11u3_sru31 -     -          2.36G  static 2018-04-24 02:45
    s11_4_03    NR    /          12.93G static 2018-04-24 04:24
    root# svcs -xv
    svc:/system/zones-upgrade:default (Zone config upgrade after first boot)
     State: degraded since April 24, 2018 at 04:32:58 AM PDT
    Reason: Degraded by service method: "Unexpected situation during zone index conversion to JSON."
       See: http://support.oracle.com/msg/SMF-8000-VE
       See: /var/svc/log/system-zones-upgrade:default.log
    Impact: Some functionality provided by the service may be unavailable.
    root# tail /var/svc/log/system-zones-upgrade:default.log
    [ 2018 Apr 24 04:32:50 Enabled. ]
    [ 2018 Apr 24 04:32:56 Executing start method ("/lib/svc/method/svc-zones-upgrade"). ]
    Converting /etc/zones/index to /var/share/zones/index.json.
    Newly generated /var/share/zones/index.json differs from the previously existing
    one. Forcing the degraded state. Please compare current
    /var/share/zones/index.json with the original one saved as
    /var/share/zones/index.json.backup.2018-04-24--04-32-57, then clear the service.
    Moving /etc/zones/index to /etc/zones/index.old-format.
    Creating old format skeleton /etc/zones/index.
    [ 2018 Apr 24 04:32:58 Method "start" exited with status 103. ]
    [ 2018 Apr 24 04:32:58 "start" method requested degraded state: "Unexpected
    situation during zone index conversion to JSON" ]
    root# svcadm clear svc:/system/zones-upgrade:default
    root# svcs svc:/system/zones-upgrade:default
    STATE          STIME    FMRI
    online          4:45:58 svc:/system/zones-upgrade:default
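The overwrite rule described in this section (replace the shared index only if it differs, keep a timestamped backup, and signal the degraded state) can be sketched in a few lines of Python. This is a hypothetical illustration, not the actual /lib/svc/method/svc-zones-upgrade method: the function install_shared_index() and the plain-dict index layout are assumptions, since the real index.json format is not a supported interface. Only the backup file name pattern is taken from the log above.

```python
# Hypothetical sketch of the 11.3 -> 11.4 update rule for the shared index.
import json
import tempfile
from datetime import datetime
from pathlib import Path


def install_shared_index(converted: dict, shared: Path, now: datetime) -> bool:
    """Install `converted` as the shared index; return True to request degraded."""
    degrade = False
    if shared.exists():
        previous = json.loads(shared.read_text())
        if previous == converted:
            # No zone changes since the last 11.4 update: keep the file as is.
            return False
        # Keep the old index, using the backup naming seen in the log above.
        stamp = now.strftime("%Y-%m-%d--%H-%M-%S")
        shared.rename(shared.with_name(f"{shared.name}.backup.{stamp}"))
        degrade = True
    shared.write_text(json.dumps(converted, indent=2, sort_keys=True))
    return degrade


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        index = Path(tmp) / "index.json"
        t = datetime(2018, 4, 24, 4, 32, 57)
        install_shared_index({"zone1": "installed"}, index, t)  # first update
        degraded = install_shared_index(
            {"zone1": "installed", "newzone": "configured"}, index, t)
        print(degraded)  # True: the old index was backed up and replaced
        print(sorted(p.name for p in Path(tmp).iterdir()))
```

Comparing the parsed data rather than raw bytes is a design choice of this sketch, so that formatting differences alone would not trigger the degraded state.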

If you compared the backed-up JSON index with the current one using diff(1), you would see that the zone newzone was added. The new index could also be missing some zones that were created earlier. Based on the diff output, the administrator can create or remove zones on the system as necessary, using the standard zonecfg(8) command and possibly reusing existing configurations as shown above. Also note that the degraded state here did not mean any degradation of functionality; its sole purpose was to notify the admin about the situation.
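When many zones are involved, a per-zone summary can be easier to act on than raw diff(1) output. The following Python helper is a hypothetical sketch, not an Oracle tool: it assumes that each index has already been loaded into a dict mapping zone names to zone records, which may not match the real, unsupported index.json layout.

```python
# Hypothetical helper, not an Oracle tool. Assumes each index has already
# been loaded into a dict mapping zone name -> zone record.
from typing import Dict, List, Tuple


def diff_zone_indexes(backup: Dict[str, object], current: Dict[str, object]
                      ) -> Tuple[List[str], List[str], List[str]]:
    """Return (added, removed, changed) zone names, each sorted."""
    # Zones only in the new index, e.g. created in the 11.3 BE.
    added = sorted(current.keys() - backup.keys())
    # Zones lost by the overwrite, e.g. created earlier in an 11.4 BE.
    removed = sorted(backup.keys() - current.keys())
    # Zones present in both indexes whose recorded data differs.
    changed = sorted(z for z in current.keys() & backup.keys()
                     if current[z] != backup[z])
    return added, removed, changed


if __name__ == "__main__":
    backup = {"zone1": {"state": "installed"}}
    current = {"zone1": {"state": "installed"},
               "newzone": {"state": "configured"}}
    print(diff_zone_indexes(backup, current))  # (['newzone'], [], [])
```

Zones reported as removed are candidates for re-creating with zonecfg, for example with create -r if a configuration file is still present, while unwanted added zones can be deleted.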

Do not use BEs as a Backup Technology for Zones

The previous implementation in 11.3 allowed using BEs with linked ZBEs as a backup solution for zones. That means that if a zone was uninstalled in the current BE, one could usually boot into an older BE or, for non-global zones, try to attach and update another existing ZBE from the current BE using the attach -z <ZBE> -u/-U subcommand.

With the current implementation, uninstalling a zone means uninstalling it from the whole system, including all of its ZBEs (or its ZVOL in the case of Kernel Zones). If you uninstall a zone in 11.4, it is gone.

If you also used the previous implementation as a convenient backup solution, we recommend using archiveadm instead, which also supports backing up zones.

Future Enhancements

An obvious future enhancement would be sharing zone configurations across BEs. However, it is not in our short-term plans at this point, nor can we guarantee that this functionality will ever be implemented. One thing is clear: it would be a more challenging task than the shared zone state.