This blog is part two of the series on the multi-region table blog. In the first blog, we saw the need for a geographically distributed database and the advantages of that in terms of providing low latency local read and writes. We also looked at the potential use-cases that can benefit from this feature. In this blog, we’ll look at more details about multi-region tables, as well as different components that make up this feature and finally how to correctly set-up a multi-region table in the Oracle NoSQL Database for an active-active architecture.

In the last blog, we looked at an active-active architecture, and from a database point of view, here are some of the critical requirements that a database should satisfy to provide a correct active-active set-up:

- Geographic distribution – Deploying a system across a broad set of regions separated by a significant speed of light distance (> 10 ms). The system should be automatically able to replicate the data to all the connected regions without requiring manual work from the end-users.

- Performance (low read/write latency) – The ability to minimize the read/write latency based on user location.

- Write anywhere – The ability to write to any record, in any region, at any time with the apparent notion that these writes may conflict when the record with the same key is updated in multiple regions.

Multi-region table and multi-region architecture

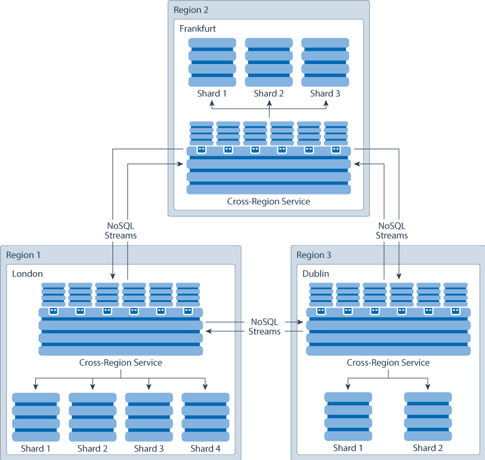

At a high level, a multi-region architecture is two or more independent, geographically distributed Oracle NoSQL Database clusters bridged by bi-directional replication. For example, a user can deploy three Oracle NoSQL Database instances (also referred to as a store) in Frankfurt, London, and Dublin. Now, suppose you want to collect and maintain similar data across these multiple regions. You need a mechanism to create tables that can span across multiple regions and keep themselves updated with the inputs from all the participating regions. You can achieve this using multi-region tables. A multi-region table is a read-anywhere and write-anywhere table that lives in multiple regions.

Figure 1: Multi-region Architecture

Please note: the term “region” has different meanings in different contexts. Unlike Oracle NoSQL Cloud service (NDCS), where “region” means one of the OCI regions, e.g., us-east (Ashburn, VA), us-west (Phoenix, AZ), or EU-central (Frankfurt), here the region means an independent Oracle NoSQL Database installation. Each Oracle NoSQL Database installation may be geographically distributed.

In this architecture, all the distributed stores form a fully-connected graph. All multi-region tables on those three regions would be synchronized. Within each region, a new component called the Cross-Region (XRegion) Service is deployed and is responsible for subscribing to committed changes on multi-region tables. Each region’s committed changes are streamed directly out of the store’s transaction log and delivered to all remote subscribers. Please refer to our documentation to learn more details about the architecture and various components, including the XRegion Service.

With the above as a background, let’s set up a multi-region table and discuss its life cycle with an example. Consider an Oracle NoSQL Database with two regions, Frankfurt and London. We create a table called Users to store the user details in each of the regions. Let’s summarize the steps that you must perform to create and manage the table:

- Prepare the JSON config file and make a writeable directory for each region. The JSON config file describes how regions will connect (e.g., the hosts and ports) as well as the security file used.

- Deploy stores in each region

- Start XRegion Service in each region

- Define the local region’s name, and define the remote regions

- Create a multi-region table in each region

We then perform data operations like INSERT, UPDATE, and DELETE on the table in one region, and will see those changes propagated to the other region.

Preparation

The first step is to create a home directory for XRegion Service and copy the example JSON config file into the directory. The JSON config file should specify this directory as the “path” (see example below).

bash-4.1$ /home/aachanda/xrshome

bash-4.1$ ls -lrt

total 1

-rw-r--r--+ 1 aachanda g900 303 April 1 16:25 json.config

Prepare JSON config file

These are two JSON config files used to create a 2-region multi-region table across region London (LND) and Frankfurt (FRA). The attributes in these JSON config files are explained below:

Region LND

{

"path" : "/home/aachanda/work/xrshome",

"agentGroupSize" : 1,

"agentId" : 0,

"region" : "LND”,

"store" : "mystore",

"helpers" : [ "localhost:5000" ],

"regions" : [ {

"name" : "FRA”,

"store" : "mystore",

"helpers" : [ "lnd-nosql-1.oracle.com:5000" ]

} ]

}

Region FRA

{

"path" : "/home/aachanda/work/xrshome",

"agentGroupSize" : 1,

"agentId" : 0,

"region" : "FRA”,

"store" : "mystore",

"helpers" : [ "localhost:5000" ],

"regions" : [ {

"name" : "LND”,

"store" : "mystore",

"helpers" : [ "fra-nosql-1.oracle.com:5000" ]

} ]

}

Path: This is the root directory of the XRegion Service. The service will use the directory to dump logs, statistics, and other auxiliary files. The directory shall be readable and writable to the service.

AgentGroupSize and AgentId: These two parameters specify the number of service agents and the agent id (0-based numbering) in the group. The service uses them to form a group of agents to serve the local region to achieve horizontal scalability. In the current release, we only support a single service for each local region, therefore in its JSON config file

- “agentGroupSize” is set to 1;

- “agentId” is set to 0.

Region: This is the local region name. Users can name the local region using a name different from its store name. This name will be used in DDL to create a multi-region table in a remote region. For example, if the local region name is “LND,” the name “LND” shall be used when the user creates a multi-region table in the remote region “FRA.”

Store and Helpers: These are the store name and helpers of the local NoSQL Database store. These helper hosts are the same ones that are used to connect to the store from a KV client.

Deploy the NoSQL Database Stores

Each region needs to deploy its store as usual. Please see Oracle NoSQL Database document for instructions on deploying a store. After a store is deployed, you can use the PING command to check the health of the store.

bash-4.1$ java -jar $KVHOME/dist/lib/kvstore.jar ping -port 5000 -host localhost

Pinging components of store mystore based upon topology sequence #12

8 partitions and 1 storage nodes

Time: 2020-04-06 05:41:38 UTC Version: 20.1.12

Shard Status: healthy:1 writable-degraded:0 read-only:0 offline:0 total:1

Admin Status: healthy

Zone [name=FRA id=zn1 type=PRIMARY allowArbiters=false masterAffinity=false] RN Status: online:1 read-only:0 offline:0

Storage Node [sn1] on fra-nosql-1:5000

Zone: [name=HPN id=zn1 type=PRIMARY allowArbiters=false masterAffinity=false] Status: RUNNING Ver: 20.1.12 2020-03-27 04:12:18 UTC Build id: 7fce2c227666 Edition: Enterprise

Admin [admin1] Status: RUNNING,MASTER

Rep Node [rg1-rn1] Status: RUNNING,MASTER sequenceNumber:131 haPort:5011 available storage size:27 GB

Start XRegion Service

You need to start XRegion Service in each region using the XRSTART command with the path to the JSON config file. Here is an example to start the service in region LND, and we need to start the service at FRA as well. If the local region is not up, the XRegion Service will start and poll the local region until it is up and running.

bash-4.1$ nohup java -Xms256m -Xmx2048m -jar $KVHOME/dist/lib/kvstore.jar xrstart -config /home/aachanda/ xrshome/json.config > /home/aachanda/ /xrshome/nohup.out &

[1] 5618

bash-4.1$ jps | grep xrstart

3:5618 /home/aachanda/KV/kvhome/dist/lib/kvstore.jar xrstart -config /home/aachanda/xrshome/json.config

bash-4.1$ cat /home/aachanda/work/xrshome/nohup.out

Cross-region agent (region=FRA, store=mystore, helpers=[localhost:5000]) starts up from config file=/home/aachanda/xrshome/json.config

Define Region

Before creating the first multi-region table in each participating region, you must set a local region name and define each remote region in the local region. After that, we can verify that the region is set up by execute ‘show regions’.

In FRA, we shall name Frankfurt as local and define London as remote

bash-4.1$ java -jar $KVHOME/dist/lib/kvstore.jar runadmin -host fra-nosql-1 -port 5000

kv-> connect store -name mystore

Connected to mystore at fra-nosql-1:5000.

kv-> execute 'SET LOCAL REGION FRA’

Statement completed successfully

kv-> execute 'CREATE REGION LND’

Statement completed successfully

kv-> execute 'show regions'

regions

FRA (local, active)

LND (remote, active)

kv-> exit

In LND, we shall name London as local and define Frankfurt as remote

bash-4.1$ java -jar $KVHOME/dist/lib/kvstore.jar runadmin -host lnd-nosql-1 -port 5000

kv-> connect store -name mystore

Connected to mystore at lnd-nosql-1:5000.

kv-> execute 'SET LOCAL REGION LND’

Statement completed successfully

kv-> execute 'CREATE REGION FRA’

Statement completed successfully

kv-> execute 'show regions'

regions

LND (local, active)

FRA (remote, active)

kv-> exit

Create a multi-region table in LND and FRA

You must create a multi-region table on each store in the connected graph, and specify the list of regions that the table should span. Now we create the Users table in LND with FRA as a remote region.

kv-> execute ‘CREATE TABLE Users(uid INTEGER, person JSON,PRIMARY KEY(uid))IN REGIONS FRA’

Statement completed successfully(uid))IN REGIONS FRA’

Next, we create the Users table in FRA with LND as the remote region.

kv-> execute ‘CREATE TABLE Users(uid INTEGER, person JSON,PRIMARY KEY(uid))IN REGIONS LND’

Statement completed successfully(uid))IN REGIONS FRA’

Verify the Table Is Ready

Now we have the table Users created in two regions, FRA and LND. After the table is created, we can check the status of the table. The remote regions will show up in the “regions” section at the end of the output. For example, if we do “show tables” in FRA, the remote region of table “Users” is LND

kv-> show table -name Users

{

"json_version" : 1,

"type" : "table",

"name" : "Users",

"shardKey" : [ "uid" ],

"primaryKey" : [ "uid" ],

"fields" : [ {

"name" : "uid",

"type" : "INTEGER",

"nullable" : false,

"default" : null

}, {

"name" : "person",

"type" : "JSON",

"nullable" : true,

"default" : null

} ],

"regions" : {

"1" : "FRA"

"2" : "LND"

}

}

Perform the Data Operations

Now we are ready to try some DML operations. Suppose we are in FRA.

INSERT/UPDATE

We first insert a few rows in FRA and update one of them.

bash-4.1$ java -jar $KVHOME/dist/lib/sql.jar -helper-hosts fra-1-nosql:5000 -store mystore

sql-> insert into users values(1,{"firstName":"jack","lastName":"ma","location":"FRA"});

{"NumRowsInserted":1}

1 row returned

sql-> insert into users values(2, {"firstName":"foo","lastName":"bar","location":null});

{"NumRowsInserted":1}

1 row returned

sql-> update users u set u.person.location = "FRA" where uid = 2;

{"NumRowsUpdated":1}

1 row returned

.. and now we query the table at LND.

bash-4.1$ java -jar $KVHOME/dist/lib/sql.jar -helper-hosts lnd-1-nosql:5000 -store mystore

sql-> select * from users;

{"uid":1,"person":{"firstName":"jack","lastName":"ma","location": "FRA"}}

{"uid":2,"person":{"firstName":"foo","lastName":"bar","location": "FRA"}}

2 rows returned

Now we update a row(uid=1), for example, to indicate that this user has traveled to LND by changing the description location from “FRA” to “LND”.

sql-> update users u set u.person.location= "LND" where uid =1;

{"NumRowsUpdated":1}

1 row returned

We go back to FRA and query the table, note the row (uid =1) the user’s location has been changed from “FRA” to “LND”.

bash-4.1$ java -jar $KVHOME/dist/lib/sql.jar -helper-hosts fra-1-nosql:5000 -store mystore

sql-> select * from users;

{"uid":1,"person":{"firstName":"jack","lastName":"ma","location": "LND"}}

{"uid":2,"person":{"firstName":"foo","lastName":"bar","location": "FRA"}}

2 rows returned

DELETE

Finally, let us delete a row in FRA. While we are still in FRA, we can go ahead and delete the row where uid=1.

bash-4.1$ java -jar $KVHOME/dist/lib/sql.jar -helper-hosts fra-1-nosql:5000 -store mystore

sql-> delete from users where uid=1;

{"numRowsDeleted":1}

1 row returned

bash-4.1$ java -jar $KVHOME/dist/lib/sql.jar -helper-hosts lnd-1-nosql:5000 -store mystore

sql-> select * from users;

{"uid":2,"person":{"firstName":"foo","lastName":"bar","location": "FRA"}}

1 row returned

Developers can also read and write the multi-region tables using the existing NoSQL Database APIs like any non-multi-region table. For details API details, please see Oracle NoSQL Database Table Documentation.

At any given point in time, users can add or remove the regions as shown below:

Remove Region

For example, if the user would like to remove Frankfurt from the example above, then:

- In the London store, remove Frankfurt from its remote region list

- Command: kv-> execute ‘alter table Users drop regions FRA’

- In the Frankfurt store, remove London from its remote region list

- Command: kv-> execute ‘alter table Users drop regions LND’

Depending on the application requirement, the table at Frankfurt may have to be dropped first. If not, the table still exists at Frankfurt but would be out-of-sync with the London region.

Add Region

You can expand a multi-region table to new regions. Another way to look at this is you are adding a new region to an existing multi-region table. In the above example, if you would like to add another region, for example, Paris to the multi-region table “Users,” then you would need to create that table in the Paris region. When this is done, the existing regions must be specified when creating the multi-region table in Paris. Second, the new region (Paris) needs to be added to Frankfurt and London with an Alter Table DDL command.

- Command: kv-> execute ‘alter table Users add regions PAR’

The Paris region will first initialize the table by copying the rows from LND and FRA. The table at Paris is fully active and able to read and write during initialization, but some rows at other regions might not show up before the table copy is done.

A couple of points to note about the current behavior of multi-region table:

- Asynchronous Propagation: In the above INSERT, UPDATE, DELETE operations, the changes are synchronized across multiple regions asynchronously. It means, when you write a row in the FRA region, the write operation is executed entirely in the FRA (Local) region without waiting for the subscribing regions to update.

- Heterogenous Topologies: Stores can have different topologies. For example, a NoSQL store in Frankfurt may have three shards while a NoSQL store in London may only have a single shard. Each region can also independently support elasticity operations enabling the addition or removal of shards separately in each region.

- Regional Security. You need to authenticate with each region and gain proper access privileges to create, read, and write a multi-region table in that region. Modifying security constraints for the given table in one region will not affect other regions.

- Automatic-failover: When a region fails, multi-region tables in other live regions will continue to work as usual and will be not be affected by the failed region. When the failed region comes back, its multi-region table will be re-synced with the others.

This concludes the two-part series on the Multi-region table feature.

Happy Exploring!

Acknowledgment: A special thanks to Junyi Xie, the lead developer behind this feature, and Dave Rubin, our Engineering head for proofreading this blog and suggesting changes.