Contributors: Kumar Varun and C P Mishra

Note: Oracle Cloud Infrastructure services have now migrated to Identity and Access Management (IAM) domains. You can use the IAM domain audit logs to analyze your cloud application usage activities.

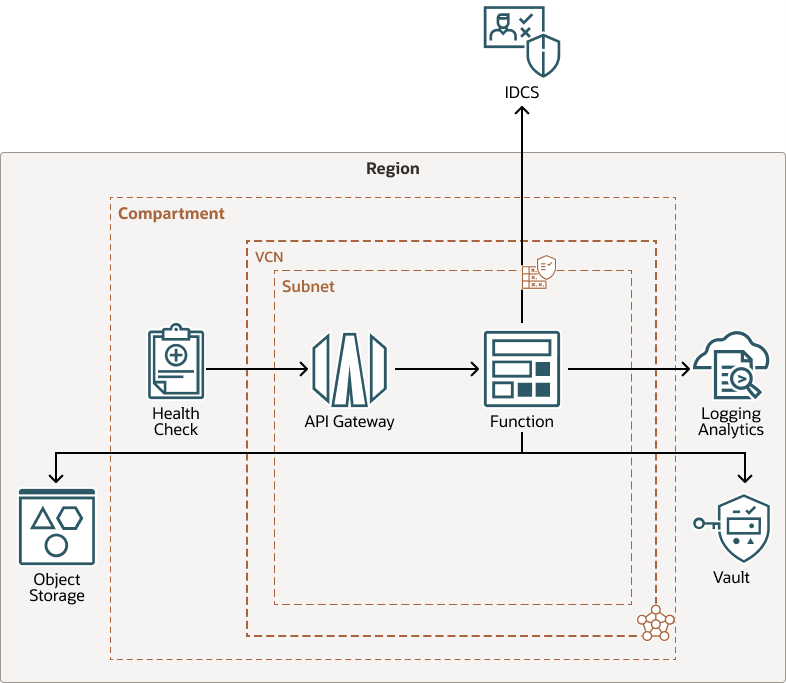

Logging Analytics users now have a new reference architecture for collecting audit logs from an Identity Cloud Service (IDCS) instance to gain security and governance insights. The architecture combines several other OCI services into an integrated solution that simplifies continuous log collection, enriches logs during ingestion, and processes and analyzes them.

Monitoring IDCS audit logs provides deep insight into how cloud applications are accessed and used, which helps you troubleshoot issues and evaluate compliance.

As shown in the architecture diagram above, the solution integrates the following OCI services and components:

- A serverless function, built with the OCI Functions service, that automates collecting audit logs from IDCS

Functions is a fully managed, multi-tenant, highly scalable, on-demand, Functions-as-a-Service platform. It is built on the enterprise-grade Oracle Cloud Infrastructure and powered by the Fn Project open-source engine.

- IDCS REST API endpoints to collect audit logs from an Identity Cloud Service instance

- The Logging Analytics REST API to ingest the collected logs into Logging Analytics

- API Gateway and Health Check REST API to schedule the function to run every 5 minutes

The API Gateway service publishes APIs with private endpoints that are accessible from within your network, and that you can expose with public IP addresses if you want them to accept internet traffic.

The Oracle Cloud Infrastructure Health Check service provides users with high-frequency external monitoring to determine the availability and performance of any publicly facing service, including hosted websites, API endpoints, or externally facing load balancers.

- OCI Vault service to manage the access keys

Oracle Cloud Infrastructure Vault is a key management service that stores and manages master encryption keys and secrets for secure access to resources. Vault supports keys based on the Advanced Encryption Standard (AES), the Rivest-Shamir-Adleman (RSA) algorithm, and the Elliptic Curve Digital Signature Algorithm (ECDSA).

- An Object Storage bucket that stores the current operation status of the function
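
The collection step these components implement can be sketched in Python. This is a minimal sketch under stated assumptions, not the function Oracle ships: it assumes the saved operation status is a small JSON object with a hypothetical `last_timestamp` field, and that audit events are queried from the IDCS `/admin/v1/AuditEvents` endpoint with a SCIM `filter` (verify both against the IDCS REST API reference). The HTTP calls, the Vault secret lookup, the Object Storage read and write, and the Logging Analytics upload are omitted; only the time-window and query construction are shown.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Optional
from urllib.parse import urlencode

# The function runs every 5 minutes, as described above.
INTERVAL = timedelta(minutes=5)

# Assumed timestamp format for the SCIM filter (UTC, second precision).
SCIM_TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def window_start(state_json: Optional[str], now: datetime) -> datetime:
    """Return where the next collection window starts.

    state_json stands in for the status object kept in the Object Storage
    bucket; the "last_timestamp" field name is hypothetical. With no saved
    state (first run), only the most recent interval is collected.
    """
    if not state_json:
        return now - INTERVAL
    return datetime.fromisoformat(json.loads(state_json)["last_timestamp"])


def audit_events_url(idcs_host: str, start: datetime, end: datetime, count: int = 1000) -> str:
    """Build a query URL for audit events with start <= timestamp < end.

    The /admin/v1/AuditEvents path and the filter/sortBy/count parameters
    are assumptions; confirm them in the IDCS REST API reference.
    """
    start_s = start.astimezone(timezone.utc).strftime(SCIM_TIME_FORMAT)
    end_s = end.astimezone(timezone.utc).strftime(SCIM_TIME_FORMAT)
    scim_filter = f'timestamp ge "{start_s}" and timestamp lt "{end_s}"'
    query = urlencode({"filter": scim_filter, "sortBy": "timestamp", "count": count})
    return f"https://{idcs_host}/admin/v1/AuditEvents?{query}"


def new_state(end: datetime) -> str:
    """Serialize the checkpoint to write back after the upload succeeds."""
    return json.dumps({"last_timestamp": end.isoformat()})
```

For example, on a first run at 00:05 UTC, `window_start(None, now)` returns 00:00 UTC, and `audit_events_url("idcs-example.identity.oraclecloud.com", start, now)` (a placeholder host) queries that 5-minute window. Writing the checkpoint only after a successful upload means a failed run is retried over the same window on the next invocation, at the cost of possible duplicate events.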

OCI Resource Manager Terraform Stack solution automates OCI provisioning

To simplify integration, Oracle provides a ready-to-use configuration. Follow the instructions in OCI Resource Manager to create and deploy the Terraform stack.

OCI Resource Manager automates the process of provisioning Oracle Cloud Infrastructure resources. Using Terraform, Resource Manager helps install, configure, and manage resources.

After the Terraform resources are created, deploy the function using the Terraform CLI. The function loads the logs within 5 minutes, after which they are visible in the Logging Analytics Log Explorer.

Terraform stack deployment creates Oracle-Defined Dashboards automatically

When the Terraform stack is deployed, the following Oracle-defined dashboards are created automatically in Logging Analytics, so you can view the analysis in a single pane. A single-pane view helps you closely monitor cloud application activity, detect anomalous events, and govern admin actions.

IDCS Audit Logs Dashboard

For the selected time range, the IDCS Audit Logs dashboard summarizes the following information in a single pane, using widgets with charts and visualizations. This gives a broad view of application and user activity over the selected period.

- A total of (for application): The number of application events. In the dashboard snapshot, there are 6,344 application events in the Last 7 Days period.

- Application events: A chart of application events over time

- A total of (for user): The number of user events. In the dashboard snapshot, there are 135 user events in the Last 7 Days period.

- User events: A chart of user events over time

- Events by application by type: A treemap visualization of events grouped by event type for each application

- Events by user by type: A treemap visualization of events grouped by event type for each user

- Repartition by application: A pie chart of the event count grouped by application. For example, in the dashboard snapshot, the 6,344 application events are divided according to the application that generated each event.

- Repartition by event type (for application): A pie chart of the count of application events grouped by event type. For example, in the dashboard snapshot, the 6,344 application events are divided by event type.

- Repartition by user: A pie chart of the event count grouped by user. For example, in the dashboard snapshot, the 135 user events are divided according to the user who generated each event.

- Repartition by event type (for user): A pie chart of the count of user events grouped by event type. For example, in the dashboard snapshot, the 135 user events are divided by event type.
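
In Logging Analytics these widgets are driven by the service's own queries, so no custom code is needed. The Python sketch below only illustrates the grouping behind the totals, treemaps, and repartition pie charts. The `AuditEvent` record, its field names, and the event type strings are hypothetical simplifications of an IDCS audit event.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AuditEvent:
    # Hypothetical, simplified record; real IDCS audit events carry many more fields.
    category: str    # "application" or "user"
    actor: str       # name of the application or user that generated the event
    event_type: str  # illustrative event type label


def summarize(events: List[AuditEvent], category: str) -> Dict[str, object]:
    """Compute the dashboard figures for one category of events."""
    selected = [e for e in events if e.category == category]
    return {
        # "A total of" tile
        "total": len(selected),
        # "Repartition by application" / "Repartition by user" pie chart
        "by_actor": Counter(e.actor for e in selected),
        # "Repartition by event type" pie chart
        "by_type": Counter(e.event_type for e in selected),
        # "Events by ... by type" treemap: one rectangle per (actor, type) pair
        "by_actor_and_type": Counter((e.actor, e.event_type) for e in selected),
    }
```

Calling `summarize(events, "application")` and `summarize(events, "user")` yields the two halves of the dashboard; because every figure comes from the same filtered list, the pie-chart slices always add up to the corresponding total.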

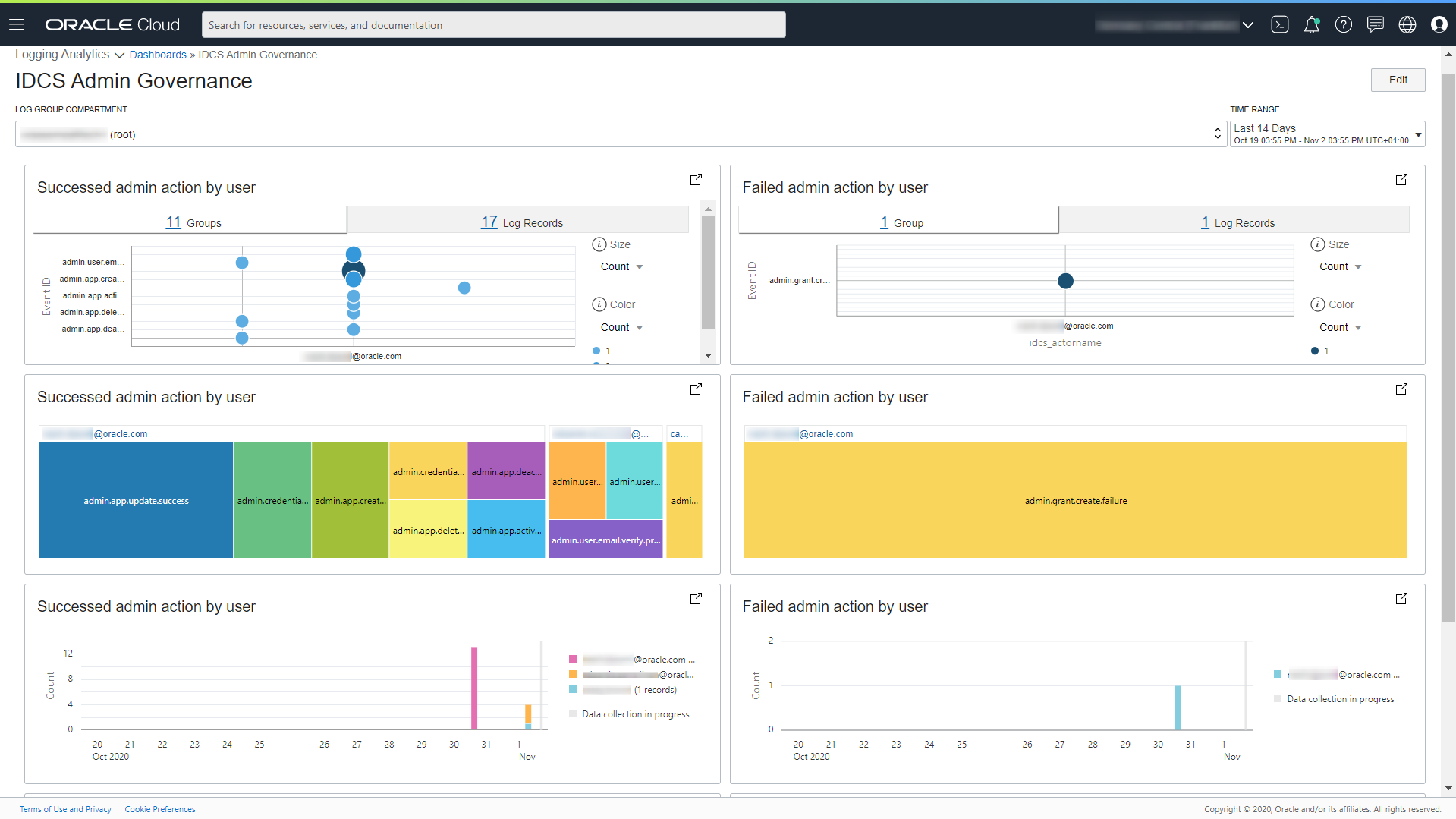

IDCS Admin Governance Dashboard

For the selected time range, the IDCS Admin Governance dashboard shows successful and failed admin actions by user side by side. It provides an overview of all admin activity along with insight into which actions succeeded or failed. To drill down, open a visualization in the Log Explorer and click the specific events to find the root cause.

- A link visualization of events involving successful or failed admin actions, grouped by event type and user. Each bubble in the chart represents a group of events of a specific type by a particular user. This visualization helps identify anomalous activities.

- A treemap visualization of events involving successful or failed admin actions, grouped by event type and user. For each user, a set of rectangles represents that user's successful admin action events. This visualization helps you review each user's admin activities.

- A stacked histogram of the count of matching events over the selected time period. Each color within a bar represents a different user's events.

- A tabular analysis (not visible in the above image) of events where roles were granted to specific users for specific applications.
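
The Admin Governance views likewise reduce to grouping and counting. The sketch below is illustrative only: the `AdminEvent` record and its fields (`user`, `event_type`, `succeeded`, `timestamp`) are hypothetical, and in practice the dashboard computes these views with Logging Analytics queries. It shows the per-outcome counts behind the link and treemap views, and the per-user time buckets behind the stacked histogram.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass(frozen=True)
class AdminEvent:
    # Hypothetical, simplified admin-action record.
    user: str
    event_type: str
    succeeded: bool
    timestamp: datetime


def group_by_outcome(events: List[AdminEvent]) -> Dict[bool, Counter]:
    """Count events per (user, event_type), separately for successful and failed actions.

    Each (user, event_type) key corresponds to one bubble in the link view
    or one rectangle in the treemap.
    """
    groups: Dict[bool, Counter] = {True: Counter(), False: Counter()}
    for e in events:
        groups[e.succeeded][(e.user, e.event_type)] += 1
    return groups


def stacked_histogram(events: List[AdminEvent], start: datetime, bucket: timedelta) -> Dict[int, Counter]:
    """Assign events (at or after start) to fixed-width time buckets.

    Each bucket is one bar; its per-user counts are the colored segments.
    """
    bars: Dict[int, Counter] = defaultdict(Counter)
    for e in events:
        index = (e.timestamp - start) // bucket  # timedelta // timedelta is an int
        bars[index][e.user] += 1
    return dict(bars)
```

The bucket width plays the role of the histogram interval that the dashboard derives from the selected time range; a wider bucket produces fewer, taller bars.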

For detailed implementation instructions, see Solution Playbook: Securing and Monitoring Oracle Identity Cloud Service.

Resources:

- Oracle IDCS: Security and Monitoring Marketplace App

- About Dashboards

- Visualize Data Using Charts and Controls

- Some analysis examples