Oracle Linux kernel developer Sudhakar Dindukurti contributed this post on the work he’s doing to bring the Resilient RDMA IP feature from RDS into upstream. This code is currently maintained in Oracle’s open source UEK kernel, and we are working on integrating it into the upstream Linux source code.

1.0 Introduction to Resilient RDMA IP

The Resilient RDMAIP module helps ULPs (RDMA Upper Level Protocols) perform failover, failback, and load balancing for InfiniBand and RoCE adapters.

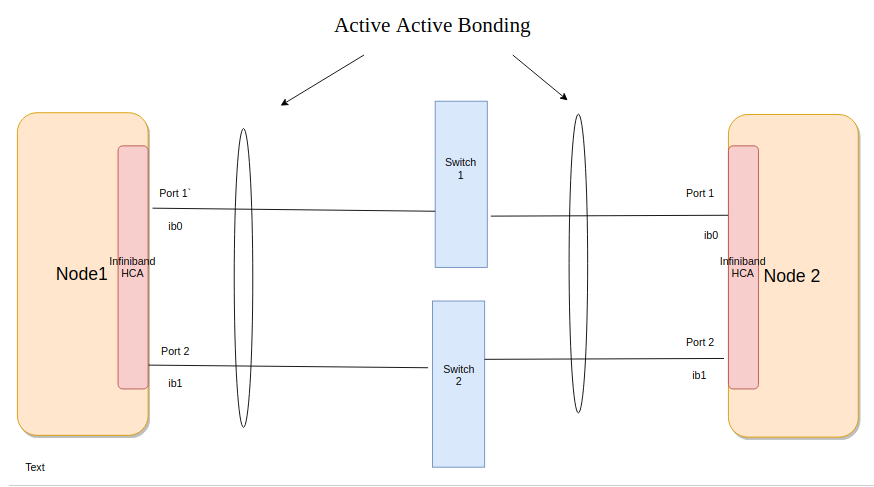

RDMAIP is a feature for RDMA connections in Oracle Linux. When this feature, also known as active-active bonding, is enabled, the Resilient RDMAIP module creates an active bonding group among the ports of an adapter. Then, if any port is lost, the IPs on that port are moved to the other port automatically, providing HA for the application while allowing the full available bandwidth to be used in the non-failure scenario.

Reliable Datagram Sockets (RDS) are high-performance, low-latency, reliable connectionless sockets for delivering datagrams. RDS provides reliable, ordered datagram delivery by using a single reliable transport between two nodes. For more information on the RDS protocol, please see the RDS documentation. RDS RDMA uses the Resilient RDMAIP module to provide HA support. The RDS RDMA module listens for RDMA CM address change events delivered by the Resilient RDMAIP module. When it receives an address change event, RDS drops all the RC connections associated with the failing port and establishes new RC connections before it next sends data.

Transparent high availability is a harder problem for RDMA-capable adapters than for standard NICs (Network Interface Cards). With standard NICs, the IP layer can decide which path or which netdev interface to use for sending a packet. This is not possible for RDMA-capable adapters because, for security and performance reasons, the hardware is tied to a specific port and path. Sending a data packet to the remote node using RDMA involves several steps:

1) The client application registers the memory with the RDMA adapter, and the RDMA adapter returns an R_Key for the registered memory region to the client. Note that the registration information is saved on the RDMA adapter.

2) The client sends this R_Key to the remote server.

3) The server includes this R_Key when requesting an RDMA_READ/RDMA_WRITE to the client.

4) The RDMA adapter on the client side uses the R_Key to find the memory region and proceed with the transaction.

Since the R_Key is bound to a particular RDMA adapter, the same R_Key cannot be used to send the data over another RDMA adapter. Also, since RDMA applications can talk directly to the hardware, bypassing the kernel, traditional bonding (which lives in the kernel) cannot provide HA.
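The four steps above can be illustrated with a small toy model. This is only a sketch: the `RdmaAdapter` class, its method names, and the adapter names `hca0` and `hca1` are hypothetical and do not correspond to any real verbs API. It is meant to show why a key issued by one adapter is meaningless on another.

```python
import itertools


class RdmaAdapter:
    """Toy stand-in for an RDMA adapter that keeps its own registration table."""

    def __init__(self, name):
        self.name = name
        self._regions = {}                      # R_Key -> registered buffer
        self._next_key = itertools.count(0x1000)

    def register_memory(self, buffer):
        """Step 1: register a buffer and hand back the R_Key for it."""
        r_key = next(self._next_key)
        self._regions[r_key] = buffer
        return r_key

    def rdma_read(self, r_key, offset, length):
        """Step 4: look the R_Key up locally; unknown keys are rejected."""
        if r_key not in self._regions:
            raise PermissionError(
                f"{self.name}: R_Key {r_key:#x} is not registered on this adapter")
        return bytes(self._regions[r_key][offset:offset + length])


# Two hypothetical adapters on the client node.
client_hca0 = RdmaAdapter("hca0")
client_hca1 = RdmaAdapter("hca1")

r_key = client_hca0.register_memory(bytearray(b"hello rdma"))   # step 1
# Steps 2 and 3: the client sends r_key to the server, which quotes it in its request.
data = client_hca0.rdma_read(r_key, 0, 5)                       # step 4, same adapter

try:
    client_hca1.rdma_read(r_key, 0, 5)                          # same key, other adapter
    reused_on_other_adapter = True
except PermissionError:
    reused_on_other_adapter = False
```

In this model the registration table lives inside each adapter object, which mirrors the point that registration information is saved on the adapter itself.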

Resilient RDMAIP does not provide transparent failover for kernel ULPs or for OS-bypass applications. It does, however, enable ULPs to fail over, fail back, and load balance over RDMA-capable adapters. The RDS (Reliable Datagram Sockets) protocol is the first client to use the Resilient RDMAIP module to provide HA. The following sections describe the role of Resilient RDMAIP in each of these features.

1.1 Load balancing

All the interfaces in the active-active bonding group have individual IPs. RDMA consumers can use one or more interfaces to send data simultaneously and are responsible for spreading the load across all the active interfaces.
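Because spreading the load is left to the consumer, the policy is up to the ULP. As one possible illustration, the sketch below uses a simple round-robin rotation over the IPs of the active interfaces. The function name `spread_sends` is hypothetical, and round-robin is an assumption chosen for brevity, not the policy RDS or any other ULP is known to use.

```python
import itertools


def spread_sends(messages, active_ips):
    """Pair each message with a source IP, rotating over the active interfaces."""
    if not active_ips:
        raise ValueError("no active interfaces in the bonding group")
    rotation = itertools.cycle(active_ips)
    return [(next(rotation), message) for message in messages]


# The two IPs are the ib0 and ib1 addresses used later in this post.
plan = spread_sends(["m0", "m1", "m2", "m3"], ["10.10.10.92", "10.10.10.102"])
```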

1.2 Failover

If any interface in the active-active bonding group goes down, the Resilient RDMAIP module moves the IP address(es) of that interface to another interface in the same group and sends an RDMA CM (Communication Manager) address change event to the RDMA kernel ULPs. HA-capable RDMA kernel ULPs then stop using the interface that went down and start using the other active interfaces. For example, if any Reliable Connections (RC) were established on the downed interface, the ULP can close all of those connections and re-establish them on the failover interface.

1.3 Failback

If the interface that went down earlier comes back up, the Resilient RDMAIP module moves the IP address back to the original interface and again sends an RDMA CM address change event to the kernel consumers. RDMA kernel consumers take action when they receive the address change event. For example, they can move back the connections that were moved as part of failover.
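The failover and failback behaviour described in sections 1.2 and 1.3 can be modelled in a few lines. The sketch below is a user-space toy, not the kernel module: the `BondingGroup` class, its methods, and the callback signature are all hypothetical, and the callback merely stands in for the RDMA CM address change event.

```python
class BondingGroup:
    """Toy model of an active-active bonding group (not the kernel module)."""

    def __init__(self, home_ips, on_addr_change):
        # home_ips: interface name -> IPs originally configured on it
        self.home = {name: list(ips) for name, ips in home_ips.items()}
        self.current = {name: list(ips) for name, ips in home_ips.items()}
        self.up = {name: True for name in home_ips}
        self.on_addr_change = on_addr_change    # stands in for the RDMA CM event

    def link_down(self, ifname):
        """Failover: move every IP on the failed interface to an active peer."""
        self.up[ifname] = False
        peers = [n for n in self.current if n != ifname and self.up[n]]
        if not peers:
            return                              # nothing left to fail over to
        target = peers[0]
        for ip in self.current[ifname]:
            self.current[target].append(ip)
            self.on_addr_change(ip, ifname, target)
        self.current[ifname] = []

    def link_up(self, ifname):
        """Failback: return the interface's original IPs to it."""
        self.up[ifname] = True
        for ip in self.home[ifname]:
            for holder, ips in self.current.items():
                if holder != ifname and ip in ips:
                    ips.remove(ip)
                    self.current[ifname].append(ip)
                    self.on_addr_change(ip, holder, ifname)
                    break


events = []
group = BondingGroup(
    {"ib0": ["10.10.10.92"], "ib1": ["10.10.10.102"]},
    lambda ip, old, new: events.append((ip, old, new)),
)

group.link_down("ib1")                          # failover
after_failover = {name: list(ips) for name, ips in group.current.items()}

group.link_up("ib1")                            # failback
after_failback = {name: list(ips) for name, ips in group.current.items()}
```

Each move produces exactly one notification, so a consumer that reacts to the callback sees one event on failover and one on failback.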

2.0 Resilient RDMAIP module provides the below module parameters

- rdmaip_active_bonding_enabled

  - Set to 1 to enable the active-active bonding feature.

  - Set to 0 to disable the active-active bonding feature.

  - By default, the active-active bonding feature is disabled. If active bonding is enabled, the Resilient RDMAIP module creates an active bonding group among the ports of the same RDMA adapter. For example, consider a system with two RDMA adapters, each with two ports: one InfiniBand (ib0 and ib1) and one RoCE (eth4 and eth5). On this setup, two active bonding groups are created: Bond 1 with ib0 and ib1, and Bond 2 with eth4 and eth5.

- rdmaip_ipv4_exclude_ips_list

  - For IPs listed in this parameter, the active bonding feature is disabled.

  - By default, link-local addresses are excluded by Resilient RDMAIP.
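The effect of the two parameters can be sketched as follows. This is a simplified model under stated assumptions: the function names, the adapter labels `hca0` and `roce0`, and the use of a Python set for the exclude list are all hypothetical, and the real format of `rdmaip_ipv4_exclude_ips_list` is not shown here.

```python
import ipaddress
from collections import defaultdict


def build_bonding_groups(ports, active_bonding_enabled):
    """Group interfaces by adapter; ports is an iterable of (adapter, ifname)."""
    if not active_bonding_enabled:
        return {}                               # feature disabled: no groups
    groups = defaultdict(list)
    for adapter, ifname in ports:
        groups[adapter].append(ifname)
    return dict(groups)


def is_failover_eligible(ip, exclude_ips=()):
    """An IP is skipped if it is listed explicitly or is link-local."""
    if ip in exclude_ips:
        return False
    return not ipaddress.ip_address(ip).is_link_local


# Hypothetical adapter labels for the two-adapter example above.
ports = [("hca0", "ib0"), ("hca0", "ib1"), ("roce0", "eth4"), ("roce0", "eth5")]

groups_enabled = build_bonding_groups(ports, active_bonding_enabled=1)
groups_disabled = build_bonding_groups(ports, active_bonding_enabled=0)
```

With the feature enabled, the model yields one group per adapter, matching the Bond 1 and Bond 2 example.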

3.0 How it works

In Figure 1, there are two nodes, each with one 2-port InfiniBand HCA, and each port of the HCA is connected to a different switch as shown. Two IPoIB interfaces (ib0 and ib1) are created, one for each port, as shown in the diagram. When active-active bonding is enabled, the Resilient RDMAIP module automatically creates a bond between the two ports of the InfiniBand HCA.

1) All the IB interfaces are up and configured

#ip a

---

ib0:

mtu 2044 qdisc pfifo_fast state UP qlen 256

link/infiniband 80:00:02:08:fe:80:00:00:00:00:00:00:00:10:e0:00:01:29:65:01 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

inet 10.10.10.92/24 brd 10.10.10.255 scope global ib0

valid_lft forever preferred_lft forever

ib1:

mtu 2044 qdisc pfifo_fast state UP qlen 256

link/infiniband 80:00:02:09:fe:80:00:00:00:00:00:00:00:10:e0:00:01:29:65:02 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

inet 10.10.10.102/24 brd 10.10.10.255 scope global secondary ib0:P06

valid_lft forever preferred_lft forever

2) When Port 2 on Node 1 goes down, ib1 IP ‘10.10.10.102’ will be moved to Port 1 (ib0) – Failover

#ip a

--------------

ib0:

mtu 2044 qdisc pfifo_fast state UP qlen 256

link/infiniband 80:00:02:08:fe:80:00:00:00:00:00:00:00:10:e0:00:01:29:65:01 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

inet 10.10.10.92/24 brd 10.10.10.255 scope global ib0

valid_lft forever preferred_lft forever

inet 10.10.10.102/24 brd 10.10.10.255 scope global secondary ib0:P06

valid_lft forever preferred_lft forever

inet6 fe80::210:e000:129:6501/64 scope link

valid_lft forever preferred_lft forever

ib1:

mtu 2044 qdisc pfifo_fast state DOWN qlen 256

link/infiniband 80:00:02:09:fe:80:00:00:00:00:00:00:00:10:e0:00:01:29:65:02 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

----------------

3) When Port 2 on Node 1 comes back up, IP ‘10.10.10.102’ will be moved back to Port 2 (ib1) – Failback

#ip a

---

ib0:

mtu 2044 qdisc pfifo_fast state UP qlen 256

link/infiniband 80:00:02:08:fe:80:00:00:00:00:00:00:00:10:e0:00:01:29:65:01 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

inet 10.10.10.92/24 brd 10.10.10.255 scope global ib0

valid_lft forever preferred_lft forever

ib1:

mtu 2044 qdisc pfifo_fast state UP qlen 256

link/infiniband 80:00:02:09:fe:80:00:00:00:00:00:00:00:10:e0:00:01:29:65:02 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

inet 10.10.10.102/24 brd 10.10.10.255 scope global secondary ib0:P06

valid_lft forever preferred_lft forever

Example: RDS Implementation

Here is the sequence of steps that occurs during failover and failback. Consider an RDS application establishing an RDS socket between IP1 on Node 1 Port 1 and IP3 on Node 2. In this case, at the RDS kernel level, there will be one RC connection between IP1 and IP3.

Case 1: Port 1 on Node 1 goes down

- Resilient RDMAIP module moves the IP address IP1 from Port 1 to Port 2

- Port 2 will have two IPs (IP1 and IP2)

- Resilient RDMAIP module sends an RDMA CM address change event to RDS

- RDS RDMA driver drops the IB connection between IP1 (Port 1) and IP3 as part of handling the address change event.

- RDS RDMA driver creates a new RC connection between IP1 (Port 2) and IP3 when it receives a new send request from IP1 to IP3

- After failover, when RDS resolves IP1, it will get path records for Port 2 as IP1 is now bound to Port 2.

Case 2: Port 1 on Node 1 comes back UP

- Resilient RDMAIP module moves the IP address IP1 from Port 2 to Port 1

- Resilient RDMAIP module sends an RDMA CM address change event to RDS

- RDS RDMA driver drops the IB connection between IP1 (Port 2) and IP3 as part of handling the address change event.

- RDS RDMA driver creates a new RC connection between IP1 (Port 1) and IP3 when it receives a new send request from IP1 to IP3

- After failback, when RDS resolves IP1, it will get path records for Port 1 as IP1 is now bound to Port 1.
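The two cases above share one pattern: drop the connection when the address change event arrives, then reconnect lazily on the next send. The sketch below models that pattern only. The `RdsLikeUlp` class and its methods are hypothetical and are not taken from the RDS source, and the `ip_to_port` dictionary stands in for address resolution.

```python
class RdsLikeUlp:
    """Toy ULP that mirrors the drop-then-reconnect-on-send behaviour."""

    def __init__(self, ip_to_port):
        self.ip_to_port = ip_to_port            # local IP -> port it is bound to now
        self.connections = {}                   # (local_ip, remote_ip) -> port in use
        self.log = []

    def on_address_change(self, local_ip):
        """Drop every connection that uses the local IP that just moved."""
        for key in [k for k in self.connections if k[0] == local_ip]:
            port = self.connections.pop(key)
            self.log.append(("drop", key, port))

    def send(self, local_ip, remote_ip, payload):
        """Connect on demand; the payload itself is ignored in this sketch."""
        key = (local_ip, remote_ip)
        if key not in self.connections:
            # Resolving the IP yields whichever port it is bound to right now.
            self.connections[key] = self.ip_to_port[local_ip]
            self.log.append(("connect", key, self.connections[key]))
        return self.connections[key]


ip_to_port = {"IP1": "Port 1", "IP2": "Port 2"}
rds = RdsLikeUlp(ip_to_port)

first = rds.send("IP1", "IP3", b"a")            # initial connection

ip_to_port["IP1"] = "Port 2"                    # Case 1: Port 1 goes down
rds.on_address_change("IP1")
second = rds.send("IP1", "IP3", b"b")           # reconnects on next send

ip_to_port["IP1"] = "Port 1"                    # Case 2: Port 1 comes back up
rds.on_address_change("IP1")
third = rds.send("IP1", "IP3", b"c")
```

Deferring the reconnect to the next send means no connection is rebuilt for a peer that the application never talks to again.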

4.0 Future work

The current implementation of the Resilient RDMAIP module is not tightly coupled with the network stack implementation. For example, RDMA kernel consumers have no way to create active bonding groups, and there are no APIs that tell RDMA consumers which active bonding groups exist and which interfaces are configured in each group. As a result, the current design and implementation are not suitable for upstream. We are working on a version of this module that we can submit to upstream Linux; until then, the code for RDMAIP can be found on oss.oracle.com and our GitHub pages.