Introduction

Myth, legend and marketing are all based on some element of truth. The same is true for page table sharing in Linux.

There are efforts underway today to create a generalized method for page table sharing via a mechanism known as mshare(). This is a work in progress, and it is unknown when such efforts will come to fruition. That effort is not discussed here. Rather, this article discusses a little-known method of page table sharing that exists in the Linux kernel today.

It all started long ago in the Linux v2.6 time frame. At that time there were several efforts to introduce page table sharing in Linux. Ultimately, all of these proposals were rejected and never made their way into the kernel. However, one method of page table sharing did make it into the v2.6.20 kernel with a patch titled “shared page table for hugetlb page”. This change enabled the sharing of PMD pages of hugetlb mappings on the x86 architecture. This functionality was never documented, and sharing did not require any application changes, so few people actually know about it. Within the Linux kernel source code, this functionality is known as Huge PMD Sharing.

Huge PMD Sharing only applies to hugetlb mappings. hugetlb mappings are created by one of the following methods:

- mmap’ing a file in a hugetlbfs filesystem: `mmap()`

- Creating a System V shared memory segment with the `SHM_HUGETLB` flag: `shmget(SHM_HUGETLB)`

- Creating an anonymous mapping with the `MAP_HUGETLB` flag: `mmap(MAP_HUGETLB)`

- mmap’ing an anonymous file created via `memfd_create` with the `MFD_HUGETLB` flag: `memfd_create(MFD_HUGETLB)`

In addition to only working with hugetlb mappings, Huge PMD Sharing is only available on certain system architectures. Those are: arm64, riscv and x86. Huge PMD Sharing is available in kernels built with the CONFIG_HUGETLB_PAGE and CONFIG_ARCH_WANT_HUGE_PMD_SHARE config options.

What exactly is Huge PMD Sharing?

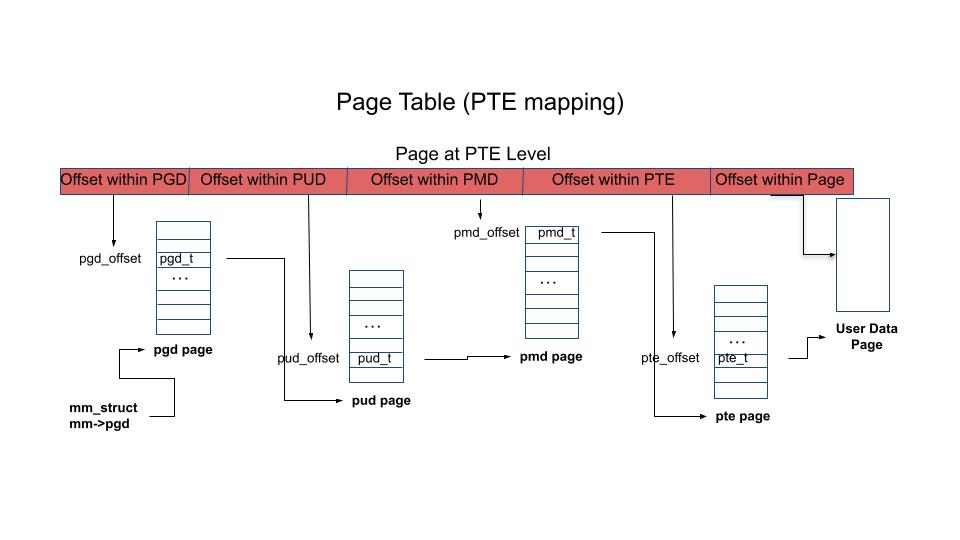

It is the sharing of a PMD page in the page tables of multiple processes. As a refresher, here is a diagram of a simple 4 level page table with a PTE mapping.

Note the 4 levels within the table that contain pages of page table entries: PGD, PUD, PMD and PTE. These entries are pointers to a page in the next level of the table. It is only the entries at the PTE level that point to pages containing user data.

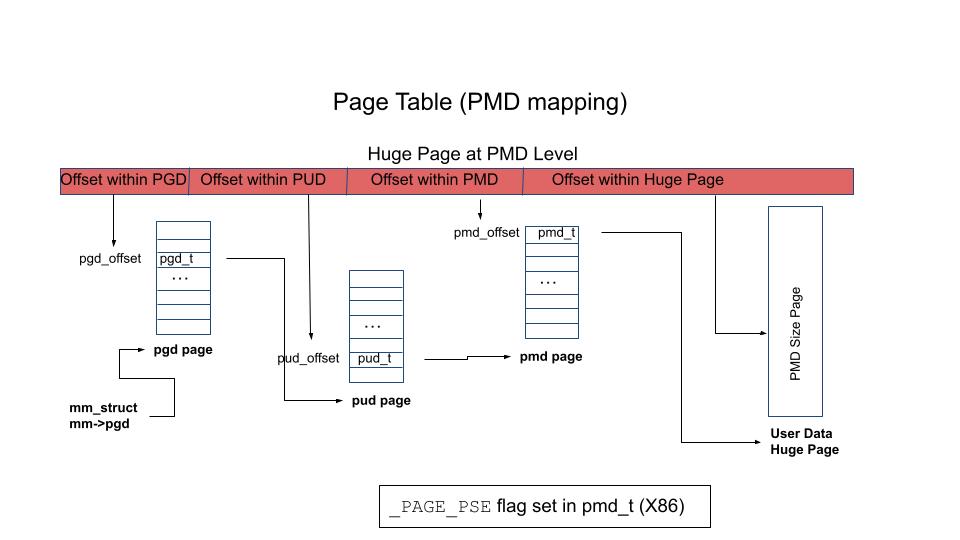

Now consider a page table describing user data mapped at the PMD level. One common example is a hugetlb mapping on x86 with 2MB size hugetlb pages.

Note that in this diagram, 2MB user data pages are pointed to by entries in the PMD page. The amount of memory that can be represented by a single PMD page is the same whether using a PMD mapping or a PTE mapping. Here is the math:

- Entries for each page table page are 1 word (8 bytes) in size. This is true for PGD, PUD, PMD and PTE pages.

- 512 words are in one 4KB page.

- A PTE page can point to 512 4KB pages.

- A PTE page can then represent (512 x 4KB) 2MB of memory.

- A PMD page can point to 512 PTE pages each representing 2MB of memory for a total of 1GB of memory.

- A PMD page can also point to 512 2MB hugetlb pages also representing a total of 1GB of memory.

One immediate observation here is that hugetlb mappings require fewer page table entries than normal (base page) mappings. In the case of PMD mappings, the PTE level is not needed to represent the memory associated with the mapping.
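
The arithmetic above can be checked with a few lines of Python. This is only a sketch: it assumes x86-64 with 4KB page table pages and 8-byte entries, and the constant names are invented for illustration.

```python
# Assumed x86-64 values: 4KB page table pages, 8-byte entries.
PAGE_SIZE = 4 * 1024
ENTRY_SIZE = 8
ENTRIES_PER_PAGE = PAGE_SIZE // ENTRY_SIZE      # 512 entries per page

HUGE_PAGE_SIZE = 2 * 1024 * 1024                # 2MB hugetlb page

# Memory represented by one PTE page (512 base pages).
pte_page_coverage = ENTRIES_PER_PAGE * PAGE_SIZE

# Memory represented by one PMD page, reached through PTE pages
# or directly through 2MB hugetlb pages.
pmd_coverage_via_pte = ENTRIES_PER_PAGE * pte_page_coverage
pmd_coverage_via_huge = ENTRIES_PER_PAGE * HUGE_PAGE_SIZE

# Page table pages below the PUD level needed to map 1GB.
base_page_tables = 1 + ENTRIES_PER_PAGE         # one PMD page plus 512 PTE pages
huge_page_tables = 1                            # one PMD page, no PTE level

print(pte_page_coverage, pmd_coverage_via_pte, pmd_coverage_via_huge)
```

Both PMD coverage figures come out to 1GB, while the base page case needs 513 page table pages below the PUD level where the hugetlb case needs one.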

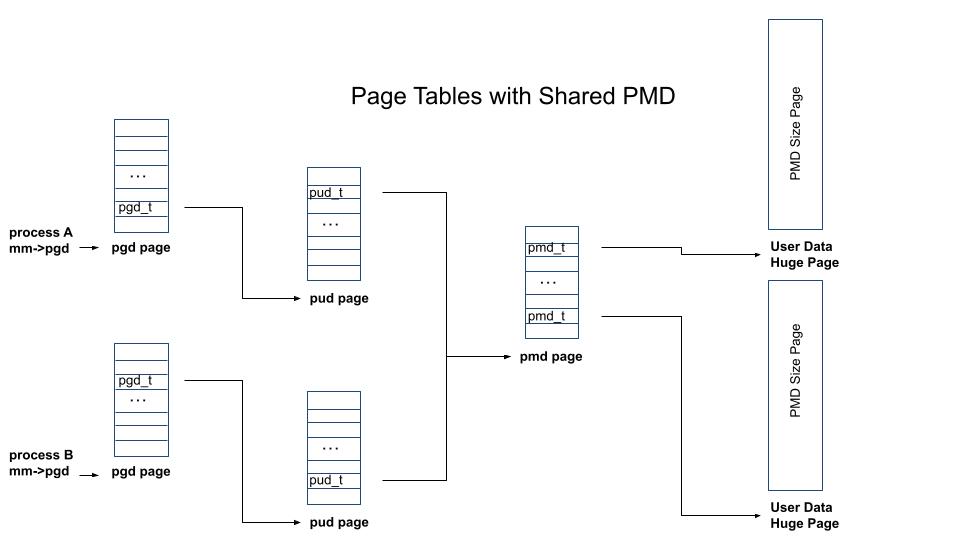

Now, let’s take a look at the page tables of two processes sharing a PMD page.

Here we see two page tables with pud_t entries pointing to the same PMD page. This is the basic concept of Huge PMD Sharing. Instead of having separate PMD pages, multiple processes can share a common page. One obvious benefit of such a feature is the memory savings. In the diagram above, one PMD page (4KB) of memory is saved. In addition, the shared PMD page can be populated by either sharing process. So, if process A faults in all hugetlb pages referenced by the PMD page, process B can access those pages without incurring any page faults. Those may not seem like huge benefits in the simple diagram above. However, consider an environment where shared PMD pages are used in a terabyte-sized mapping, and there are tens of thousands of processes sharing those PMD pages. In such an environment, the memory savings and performance benefits of Huge PMD Sharing can be significant.
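
To put rough numbers on that scenario, the sketch below estimates the memory consumed by PMD pages with and without sharing. The 1TB mapping size and the 10,000 process count are hypothetical, the helper name is invented, and the shared case assumes the best outcome in which every PMD page of the mapping is shared by all processes.

```python
GIB = 1024 ** 3
PMD_PAGE_SIZE = 4 * 1024      # one page table page (4KB)
PMD_COVERAGE = 1 * GIB        # memory mapped by one PMD page of 2MB entries


def pmd_page_bytes(mapping_size, processes, shared):
    """Bytes of PMD pages needed to map mapping_size in every process."""
    pmd_pages = mapping_size // PMD_COVERAGE
    copies = 1 if shared else processes   # shared pages exist only once
    return pmd_pages * PMD_PAGE_SIZE * copies


mapping = 1024 * GIB          # hypothetical 1TB hugetlb mapping
procs = 10_000                # hypothetical number of sharing processes

unshared = pmd_page_bytes(mapping, procs, shared=False)
shared = pmd_page_bytes(mapping, procs, shared=True)
print(f"unshared: {unshared / GIB:.1f} GiB, shared: {shared / 2**20:.0f} MiB")
```

Under these assumptions, the PMD pages alone take about 39 GiB without sharing and 4 MiB with it.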

What mappings are eligible for Huge PMD Sharing?

As mentioned above, Huge PMD Sharing is only available on hugetlb mappings, and only certain architectures support it. In addition, the following conditions must be met for Huge PMD Sharing.

- The mappings must be sharable. This should be fairly obvious. System V shared memory segments are sharable by default. Mappings created via `mmap()` must include the `MAP_SHARED` flag to be sharable.

- The mapping must cover an entire PMD page. This means it must be at least PUD_SIZE (1GB on x86) in size and suitably aligned to potentially use all entries within a PMD page.

- The mapping must not include a `userfaultfd` range operating in `UFFD_FEATURE_MINOR_HUGETLBFS`, `UFFD_FEATURE_WP_HUGETLBFS_SHMEM` or `UFFD_FEATURE_WP_UNPOPULATED` mode.

Mappings which meet these conditions are said to be eligible for Huge PMD Sharing.
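
The size and alignment condition is easiest to see with concrete addresses. The following sketch lists the PUD_SIZE-aligned ranges of a mapping that could use a shared PMD page. `shareable_ranges` and its parameters are hypothetical names for illustration, not a kernel interface, and PUD_SIZE is assumed to be 1GB as on x86.

```python
PUD_SIZE = 1 << 30   # 1GB on x86; assumed value for this sketch


def shareable_ranges(start, end, map_shared, uffd_restricted=False):
    """Return the PUD_SIZE-aligned [lo, hi) ranges inside [start, end)
    that could use a shared PMD page (hypothetical helper)."""
    if not map_shared or uffd_restricted:
        return []
    first = (start + PUD_SIZE - 1) & ~(PUD_SIZE - 1)   # round start up
    last = end & ~(PUD_SIZE - 1)                       # round end down
    return [(lo, lo + PUD_SIZE) for lo in range(first, last, PUD_SIZE)]


# A 3GB shared mapping that starts 512MB past a 1GB boundary.
start = 0x7F0000000000 + (512 << 20)
end = start + 3 * PUD_SIZE
for lo, hi in shareable_ranges(start, end, map_shared=True):
    print(hex(lo), hex(hi))
```

In this example only the two fully covered 1GB ranges in the middle of the mapping qualify. The partially covered ranges at either end do not, and a mapping smaller than 1GB yields no eligible range at all.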

When is Huge PMD Sharing established?

Huge PMD Sharing is established at page fault time. If the page fault handling code needs to allocate a new PMD page in the page table, it first checks if the address and mapping are eligible for Huge PMD Sharing. If so, it then checks whether an existing PMD page can be used. This check is performed by searching all existing mappings of the underlying object (file or shared memory segment) that cover the same PUD_SIZE range. If it finds such a mapping, the page tables of the process owning the found mapping are examined for an existing PMD page. If the PMD page is found, it is inserted into the page table of the faulting process and is shared with other processes using the same PMD page. It should be noted that access attributes such as read, write, etc. must be the same for all mappings sharing a PMD page. The code which searches for other process mappings checks this condition.
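
The search described above can be modeled in a few lines. This is a toy model written for this article, not the kernel's implementation: the `Mapping` class, the `fault_pmd_page` function, and the use of a dictionary as a "PMD page" are all assumptions made for illustration, and eligibility checks are omitted.

```python
from dataclasses import dataclass, field

PUD_SIZE = 1 << 30   # assumed x86 value


@dataclass
class Mapping:
    """One process's mapping of a shared hugetlb object (toy model)."""
    obj: str      # identity of the underlying file or shm segment
    vaddr: int    # start address in this process; may differ per process
    prot: str     # access attributes, e.g. "rw"
    pmd_pages: dict = field(default_factory=dict)  # object offset -> PMD page


def fault_pmd_page(faulting, offset, all_mappings):
    """Return the PMD page covering `offset`, sharing one if possible."""
    region = offset - offset % PUD_SIZE   # PUD_SIZE range within the object
    if region in faulting.pmd_pages:
        return faulting.pmd_pages[region]
    for other in all_mappings:
        if (other is not faulting and other.obj == faulting.obj
                and other.prot == faulting.prot
                and region in other.pmd_pages):
            # Reuse the existing PMD page instead of allocating a new one.
            faulting.pmd_pages[region] = other.pmd_pages[region]
            return faulting.pmd_pages[region]
    faulting.pmd_pages[region] = {}       # allocate a new, empty PMD page
    return faulting.pmd_pages[region]


a = Mapping("segment", 0x7F0040000000, "rw")
b = Mapping("segment", 0x7F4580000000, "rw")
c = Mapping("segment", 0x7F0000000000, "r")
maps = [a, b, c]

page_a = fault_pmd_page(a, 0, maps)         # first fault allocates
page_a[5] = "huge page 5"                   # process A populates an entry
page_b = fault_pmd_page(b, 2 << 20, maps)   # process B reuses A's PMD page
page_c = fault_pmd_page(c, 0, maps)         # different access: no sharing
print(page_b is page_a, page_c is page_a)
```

In the model, process B sees the entry populated by process A because both hold the same PMD page object, while the read-only mapping in process C gets a page of its own.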

It may not be obvious, but memory referenced by the shared PMD page does not need to be at the same virtual address in each sharing process. This is because the virtual address corresponds to the position of the PMD page within the page table. In the figure above showing Page Tables with Shared PMD, note the different position of the pud_t pointer to the shared PMD page in the sharing processes. Hence, the memory referenced by the PMD page is at different virtual addresses in each process.
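
A short sketch makes the point concrete by splitting two virtual addresses into their page table indices. It assumes the x86-64 4-level layout (9 index bits per level above a 12-bit page offset), and the two addresses are made up for illustration.

```python
# Assumed x86-64 4-level layout: 9 index bits per level, 12-bit page offset.
PMD_SHIFT, PUD_SHIFT, PGD_SHIFT = 21, 30, 39
INDEX_MASK = 0x1FF


def table_indices(vaddr):
    """Split a virtual address into its PGD, PUD and PMD indices."""
    return {
        "pgd": (vaddr >> PGD_SHIFT) & INDEX_MASK,
        "pud": (vaddr >> PUD_SHIFT) & INDEX_MASK,
        "pmd": (vaddr >> PMD_SHIFT) & INDEX_MASK,
    }


# Hypothetical addresses of the same 2MB huge page in two processes.
addr_a = 0x7F0040000000 + 5 * (2 << 20)
addr_b = 0x7F4580000000 + 5 * (2 << 20)

a = table_indices(addr_a)
b = table_indices(addr_b)
print(a, b)
```

The PUD indices differ between the two processes, which is the different position of the pud_t pointer, while the PMD index is the same. Both addresses therefore select the same entry of the shared PMD page.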

What can cause Huge PMD Unsharing?

A PMD page can only be shared if the sharing mappings have the same access attributes. This is checked when Huge PMD Sharing is first established. However, it is possible that a process may perform an operation after sharing is established that will change these attributes and require unsharing of the PMD page. Some examples of these operations are:

- Changing access permissions via `mprotect()`.

- Changing the size of the mapping via `munmap()` or `mremap()`.

- Registering part of the mapping for `userfaultfd` in `UFFD_FEATURE_MINOR_HUGETLBFS`, `UFFD_FEATURE_WP_HUGETLBFS_SHMEM` or `UFFD_FEATURE_WP_UNPOPULATED` mode.

When an operation causes unsharing of a PMD page, the PMD page is removed from the page table of the process initiating the operation. Unsharing effectively leaves a PUD_SIZE (1GB on x86) hole in the process page table. Any subsequent access to this area will result in page faults to recreate the page table entries.
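
The size of that hole is worth illustrating, because even a small operation affects a full PUD_SIZE range. The sketch below computes which PUD_SIZE-aligned regions overlap the range of an operation, on the assumption that any shared PMD page overlapping that range is unshared. The function name is hypothetical, and PUD_SIZE is assumed to be 1GB as on x86.

```python
PUD_SIZE = 1 << 30   # 1GB on x86; assumed value for this sketch


def affected_pud_regions(op_start, op_end):
    """PUD_SIZE-aligned regions overlapped by an operation on
    [op_start, op_end) (hypothetical helper)."""
    first = op_start & ~(PUD_SIZE - 1)                    # round start down
    last = (op_end + PUD_SIZE - 1) & ~(PUD_SIZE - 1)      # round end up
    return [(lo, lo + PUD_SIZE) for lo in range(first, last, PUD_SIZE)]


# Hypothetical mprotect() of a single 2MB huge page inside a large mapping.
base = 0x7F0000000000
op_start = base + PUD_SIZE + (4 << 20)
op_end = op_start + (2 << 20)

regions = affected_pud_regions(op_start, op_end)
print([(hex(lo), hex(hi)) for lo, hi in regions])
```

Here a 2MB operation touches one 1GB region, so under the stated assumption the process would have to fault the whole 1GB range back in.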