NVMe over Fabrics (NVMe-oF) support was added to the mainline Linux kernel in v4.8 with the RDMA transport, and Fibre Channel transport support followed in v4.10. Oracle Linux UEK5 introduced NVMe over Fabrics support with the Fibre Channel transport, and UEK6 introduced NVMe over Fabrics with the TCP transport. Boot from SAN has been supported by fabric protocols, notably iSCSI and Fibre Channel, for decades. This blog explores the ongoing work to bring the same boot-from-SAN capability to NVMe over Fabrics.

What is Boot From SAN?

Boot from SAN is generally used to boot a host operating system from a storage LUN on a storage area network (SAN) instead of from an internal hard drive. The host system may or may not have local hard drives installed. Booting from remote storage is advantageous in very large data centers, where reliability and backup features are crucial.

We will now explore the boot environment of early storage area networks and the options currently available for NVMe SAN boot.

Boot Environment

The boot environment typically consists of a BIOS or system firmware (e.g. UEFI) that enumerates hardware devices on the PCIe bus. The system boot device list is configured as an ordered list of devices from which an OS image can be loaded into system memory. The boot firmware tries each device in that order and attempts to read an OS image into memory. A typical default order in a system BIOS (or UEFI environment) gives the CD/DVD-ROM drive first priority, followed by USB devices and then the hard disk drive (HDD). If no device yields a valid OS boot image, the firmware reports a boot device failure (which may reboot the system) or halts at a prompt.
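The ordered fallback described above can be modeled in a few lines. This is a toy Python sketch, not firmware code: the `boot` function and device names are hypothetical, and each "loader" simply returns image bytes, or `None` when the device has no valid boot image.

```python
from typing import Callable, Optional, Sequence, Tuple

# A loader stands in for "read the OS image from this device": it returns
# the image bytes, or None when the device holds no valid boot image.
Loader = Callable[[], Optional[bytes]]


def boot(devices: Sequence[Tuple[str, Loader]]) -> str:
    """Try each device in boot order and return the first one that loads."""
    for name, load in devices:
        image = load()
        if image:  # a valid OS image was read into memory
            return name
    # Every device failed: report a boot device failure to the caller.
    raise RuntimeError("boot device failure: no valid OS image found")


# Example: no disc and no bootable USB stick, so the firmware falls through to the HDD.
order = [
    ("CD/DVD-ROM", lambda: None),
    ("USB", lambda: None),
    ("HDD", lambda: b"OS image"),
]
print(boot(order))  # HDD
```

Boot from SAN fits the same model: the SAN LUN is simply one more entry in the ordered list, reached through an HBA instead of a local disk controller.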

A SCSI storage host bus adapter (HBA) is hardware that uses parallel SCSI, SAS, Fibre Channel, or Ethernet as a transport to discover SCSI block devices. Bus topology broadened what it means for a device to be connected to the host bus adapter: a single SCSI target can expose many SCSI LUNs, each LUN being a block storage device. A transport driver for any of these protocols can discover block devices to be used as a boot device. Network topologies built from switches and routers then allowed storage to become remote and enabled dynamic configuration, supporting the addition and removal of devices and changes in detection order on a SCSI bus.

Boot from SAN Using Fibre Channel Storage

In a typical Fibre Channel boot-from-SAN environment, a SCSI-based block storage device (a SCSI target) is connected to a fabric switch, and a host system with a Fibre Channel host bus adapter (HBA) is connected to the same switch. To configure the FC adapter to search for SAN devices, follow the vendor-specific (QLogic or Emulex) steps described in the HBA user manual [10, 11]. Follow those instructions to add the WWPN (World Wide Port Name) to the target device. Upon connection, the FC host adapter performs LUN discovery and presents the discovered LUNs to the operating system.
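A WWPN is a 64-bit identifier, usually written as 16 hex digits with or without colon separators, and HBA utilities, switches, and storage arrays do not always display it the same way. A minimal sketch for comparing WWPNs across tools is to normalize them first. The function is hypothetical, the sample WWPN is made up, and the `0x` prefix handling assumes the form Linux shows in `/sys/class/fc_host/host*/port_name`.

```python
import re


def normalize_wwpn(value: str) -> str:
    """Return a WWPN as eight lowercase, colon-separated hex octets."""
    digits = value.strip().lower()
    if digits.startswith("0x"):  # e.g. the form used by Linux fc_host sysfs
        digits = digits[2:]
    digits = digits.replace(":", "").replace("-", "")
    if not re.fullmatch(r"[0-9a-f]{16}", digits):
        raise ValueError(f"not a 64-bit WWPN: {value!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))


print(normalize_wwpn("0x21000024FF4C1234"))  # 21:00:00:24:ff:4c:12:34
```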

The Fibre Channel protocol is designed for self-discovering storage: as soon as a Fibre Channel target is connected to a fabric, any host with an FC HBA can discover the SCSI LUNs exported by the target device. This self-discovery makes it easy for a system administrator to configure a host to boot from a remote SCSI LUN, a configuration described as boot from SAN for the FC adapter.

Boot from SAN Using iSCSI Storage

Booting from iSCSI storage is another way of using SCSI-based block storage as a boot device. This configuration uses an Ethernet transport to discover SCSI LUNs exported by an iSCSI target, and the iSCSI target device is discovered through its IP address.

An iSCSI storage device is not a self-discovering target: when a host adapter is connected to a network switch, SCSI LUNs are not discovered until the system administrator starts the discovery process. A Converged Network Adapter (CNA) can provide the iSCSI transport in two ways: firmware running on the CNA performs device discovery based on information provided by the user, or an OS-provided software initiator driver performs discovery of the block devices.

This discovery process requires the system administrator to know the IP address and port number of the iSCSI target device in order to establish the initial TCP connection. To ease connectivity, network services such as DHCP and discovery services such as iSNS or SLP may be used to establish iSCSI boot device sessions.
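Because an iSCSI target must be addressed explicitly, the initiator needs the target portal's IP address and TCP port. The sketch below splits a portal string into those two parts, falling back to 3260, the IANA-registered iSCSI port, when no port is given. The function name and the accepted `address[:port]` / `[ipv6]:port` notation are illustrative assumptions, not any particular tool's parser.

```python
import ipaddress

ISCSI_DEFAULT_PORT = 3260  # IANA-registered iSCSI target port


def parse_portal(portal: str) -> tuple[str, int]:
    """Split an iSCSI portal "address[:port]" into (address, port)."""
    if portal.startswith("["):        # IPv6 literal in brackets: [addr]:port
        host, _, rest = portal[1:].partition("]")
        port = rest[1:] if rest.startswith(":") else ""
    elif portal.count(":") == 1:      # IPv4 address with an explicit port
        host, port = portal.split(":")
    else:                             # bare IPv4 or IPv6 address
        host, port = portal, ""
    ipaddress.ip_address(host)        # raises ValueError for a malformed address
    return host, (int(port) if port else ISCSI_DEFAULT_PORT)


print(parse_portal("192.0.2.10"))        # ('192.0.2.10', 3260)
print(parse_portal("[2001:db8::5]:3261"))  # ('2001:db8::5', 3261)
```

Validating the address up front catches typos before the initiator attempts the initial TCP connection.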

To address the lack of self-discovery, the iSCSI Boot Firmware Table (iBFT) was created. The user populates it before OS installation: information entered at the HBA firmware prompt is stored in the iBFT, and the OS reads the table through ACPI during system boot. The iBFT contains the iSCSI session information the OS needs to connect to the iSCSI target automatically, so that the OS environment can boot from an iSCSI LUN. On Linux, the open-iscsi package provides the iscsistart utility, which uses the information stored in the iBFT to log in to the target and establish the boot session.
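After firmware has published the iBFT, the Linux iscsi_ibft driver exposes its contents under /sys/firmware/ibft/. The sketch below gathers the fields an initiator needs to re-establish the boot session. The attribute names (initiator-name, target-name, ip-addr, port, lun) reflect our understanding of that driver's sysfs layout and should be verified on the target system. Only the first target (target0) is read, and the `read_ibft` function itself is hypothetical.

```python
from pathlib import Path


def read_ibft(root: str = "/sys/firmware/ibft") -> dict[str, str]:
    """Collect boot-session fields from the iBFT as exposed in sysfs (assumed layout)."""
    base = Path(root)
    fields = {
        "initiator_name": base / "initiator" / "initiator-name",
        "target_name": base / "target0" / "target-name",
        "target_ip": base / "target0" / "ip-addr",
        "target_port": base / "target0" / "port",
        "target_lun": base / "target0" / "lun",
    }
    info = {}
    for key, path in fields.items():
        if path.is_file():  # skip attributes that are absent or not populated
            info[key] = path.read_text().strip()
    return info


if __name__ == "__main__":
    print(read_ibft())  # empty dict on hosts without an iBFT
```

Taking the sysfs root as a parameter makes it easy to test the function against a copied directory tree instead of live firmware data.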

Boot from SAN with NVMe-oF

NVMe-oF already supports connectivity to remote NVMe namespaces, and booting an operating system from a remote SCSI LUN is well established with SCSI-based protocols. The NVMe Boot Specification [1] outlines how to boot from a remote namespace over an NVMe-oF transport.

TCP emerged as the clear choice of transport because it is widely deployed in data centers. Refer to this blog about NVMe over TCP [2], which describes the setup and how to use TCP to deploy NVMe storage.

Advantages of using NVMe-oF for remote boot:

- NVMe-oF provides a fabric-independent way to connect to a remote namespace.

- NVMe is a native command set (originally designed for PCIe-attached storage), so NVMe-oF eliminates SCSI command translation. Ethernet is widely accepted in modern data centers, so TCP emerged as the transport of choice for NVMe-oF.

- NVMe-oF has increased scalability and flexibility via a well-defined discovery mechanism.

Booting from NVMe-oF requires an administrator to configure the UEFI pre-OS driver. The administrator needs to gather the following information:

- Target Subsystem NQN

- Target IP Address

- Target Port Address

- Target Namespace

- Host NQN

- Security-related information
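The list above maps naturally onto a small record that can be sanity-checked before it is entered into the pre-OS driver. This is a minimal sketch under stated assumptions: `BootAttempt` and `is_valid_nqn` are hypothetical names, the NQN check is a simplified version of the two NQN forms and the 223-byte limit in the NVMe Base Specification, 4420 is assumed as the NVMe/TCP port when none is given, and the security field is only a placeholder.

```python
import ipaddress
import re
import uuid
from dataclasses import dataclass
from typing import Optional

NQN_MAX_BYTES = 223  # NQN length limit from the NVMe Base Specification
UUID_NQN_PREFIX = "nqn.2014-08.org.nvmexpress:uuid:"
# Simplified check for the "nqn.yyyy-mm.<reverse domain>:<name>" form.
NQN_PATTERN = re.compile(r"nqn\.\d{4}-\d{2}\.[A-Za-z0-9.-]+(:.+)?")


def is_valid_nqn(nqn: str) -> bool:
    """Loosely validate an NQN in either the domain-based or UUID-based form."""
    if len(nqn.encode("utf-8")) > NQN_MAX_BYTES:
        return False
    if nqn.startswith(UUID_NQN_PREFIX):
        try:
            uuid.UUID(nqn[len(UUID_NQN_PREFIX):])
        except ValueError:
            return False
        return True
    return NQN_PATTERN.fullmatch(nqn) is not None


@dataclass(frozen=True)
class BootAttempt:
    """Hypothetical container for one NVMe/TCP boot attempt."""
    subsystem_nqn: str
    host_nqn: str
    target_ip: str
    target_port: int = 4420           # port commonly used for NVMe/TCP I/O
    namespace_id: int = 1
    security: Optional[str] = None    # placeholder for security-related settings

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the fields look usable."""
        problems = []
        for label, nqn in (("subsystem NQN", self.subsystem_nqn),
                           ("host NQN", self.host_nqn)):
            if not is_valid_nqn(nqn):
                problems.append(f"invalid {label}: {nqn!r}")
        try:
            ipaddress.ip_address(self.target_ip)
        except ValueError:
            problems.append(f"invalid target IP: {self.target_ip!r}")
        if not 0 < self.target_port < 65536:
            problems.append(f"invalid target port: {self.target_port}")
        if not 1 <= self.namespace_id <= 0xFFFFFFFE:  # valid NSID range
            problems.append(f"invalid namespace ID: {self.namespace_id}")
        return problems


attempt = BootAttempt(
    subsystem_nqn="nqn.2014-08.com.example:boot-subsys",
    host_nqn="nqn.2014-08.org.nvmexpress:uuid:0f8fad5b-d9cb-469f-a165-70867728950e",
    target_ip="192.0.2.20",
)
print(attempt.validate())  # []
```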

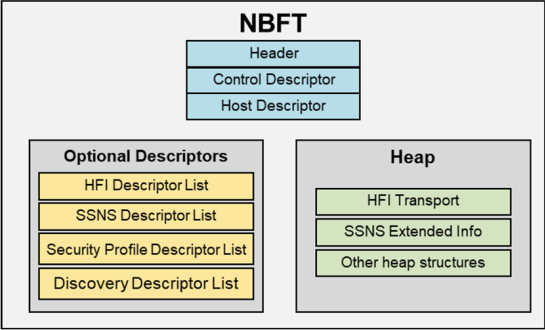

During boot, this information is presented to the OS through an ACPI table called the NVMe Boot Firmware Table (NBFT). Refer to the NVMe Boot Specification for details on the pre-OS boot process in the UEFI environment.
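Because the NBFT is an ACPI table, it begins with the standard 36-byte ACPI system description table header, and all of its bytes must sum to zero modulo 256. On Linux, ACPI tables are typically readable (as root) under /sys/firmware/acpi/tables/. The sketch below locates NBFT tables there and sanity-checks the header before any NBFT-specific decoding. The glob pattern (allowing a numeric suffix when several tables are present) and the helper names are assumptions, and the sketch deliberately stops short of parsing the NBFT body, whose layout is defined by the NVMe Boot Specification.

```python
import struct
from dataclasses import dataclass
from pathlib import Path

# Standard ACPI header: Signature, Length, Revision, Checksum, OEM ID,
# OEM Table ID, OEM Revision, Creator ID, Creator Revision (36 bytes, little-endian).
ACPI_HEADER = struct.Struct("<4sIBB6s8sI4sI")


@dataclass
class AcpiHeader:
    signature: str
    length: int
    revision: int
    oem_id: str
    oem_table_id: str


def parse_acpi_table(data: bytes, expected_sig: str = "NBFT") -> AcpiHeader:
    """Parse and sanity-check the standard header of an ACPI table."""
    if len(data) < ACPI_HEADER.size:
        raise ValueError("data is shorter than an ACPI table header")
    (sig, length, revision, _checksum, oem_id, oem_table_id,
     _oem_rev, _creator_id, _creator_rev) = ACPI_HEADER.unpack_from(data)
    signature = sig.decode("ascii", "replace")
    if signature != expected_sig:
        raise ValueError(f"unexpected signature {signature!r}")
    if length < ACPI_HEADER.size or length > len(data):
        raise ValueError(f"bad table length {length}")
    # All bytes of a valid ACPI table, including the checksum byte, sum to 0 mod 256.
    if sum(data[:length]) % 256 != 0:
        raise ValueError("checksum mismatch")
    return AcpiHeader(signature, length, revision,
                      oem_id.decode("ascii", "replace").rstrip("\x00 "),
                      oem_table_id.decode("ascii", "replace").rstrip("\x00 "))


def find_nbft_tables(root: str = "/sys/firmware/acpi/tables") -> list[Path]:
    """List candidate NBFT tables (assumed naming: NBFT, optionally with a suffix)."""
    return sorted(Path(root).glob("NBFT*"))
```

On a host booted through NVMe-oF, a caller would run `parse_acpi_table(path.read_bytes())` for each path returned by `find_nbft_tables()` and hand only validated tables to the NBFT-specific decoder.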

NVMe/TCP was chosen as the first fabric transport for developing a proof-of-concept (PoC) prototype of NVMe-oF boot from SAN.

As shown in Figure 1, an NBFT consists of the NBFT memory space; a heap containing the HFI (Host Fabric Interface) and SSNS (Subsystem and Namespace Descriptor) data structures; and optional descriptors that are useful for discovery and connectivity over the various transport protocols.
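The relationship between these descriptors can be pictured with a deliberately simplified in-memory model: each HFI describes a host-side interface, and each SSNS describes a boot target that is reached through one of those interfaces. This sketch does not reproduce the binary layout in the NVMe Boot Specification; the class and field names are hypothetical, and linking each SSNS to a single `hfi_index` is a simplification.

```python
from dataclasses import dataclass, field


@dataclass
class Hfi:
    """Host Fabric Interface: one host-side network interface (simplified)."""
    index: int
    transport: str  # "tcp" for the NVMe/TCP proof of concept
    host_ip: str


@dataclass
class Ssns:
    """Subsystem and Namespace descriptor: one boot target (simplified)."""
    index: int
    subsystem_nqn: str
    traddr: str
    trsvcid: str
    nsid: int
    hfi_index: int  # which HFI is used to reach this subsystem


@dataclass
class NbftModel:
    """Hypothetical in-memory view of the descriptors held in the NBFT heap."""
    host_nqn: str
    hfis: list[Hfi] = field(default_factory=list)
    subsystems: list[Ssns] = field(default_factory=list)

    def interface_for(self, ssns: Ssns) -> Hfi:
        """Resolve the HFI that an SSNS descriptor points to."""
        for hfi in self.hfis:
            if hfi.index == ssns.hfi_index:
                return hfi
        raise LookupError(f"SSNS {ssns.index} references unknown HFI {ssns.hfi_index}")


table = NbftModel(
    host_nqn="nqn.2014-08.com.example:host1",
    hfis=[Hfi(index=1, transport="tcp", host_ip="192.0.2.10")],
    subsystems=[Ssns(index=1, subsystem_nqn="nqn.2014-08.com.example:boot-subsys",
                     traddr="192.0.2.20", trsvcid="4420", nsid=1, hfi_index=1)],
)
print(table.interface_for(table.subsystems[0]).host_ip)  # 192.0.2.10
```

Keeping interfaces and targets in separate lists mirrors the idea that several boot targets can share one host interface.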

Information presented in NBFT may be used in multiple ways:

- To connect to the NVMe subsystem on which the OS is installed.

- To provide parameters for connecting to, or discovering, other NVMe subsystems.

- To configure the operating system itself (using the host NQN and host ID parameters from the NBFT).
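As a sketch of the last two uses, the values carried in the NBFT can be turned into an nvme-cli command line and into the host identity files the OS reads. The `nvme connect` options used here (`--transport`, `--traddr`, `--trsvcid`, `--nqn`, `--hostnqn`, `--hostid`) and the default files /etc/nvme/hostnqn and /etc/nvme/hostid belong to nvme-cli. The helper functions and example values are hypothetical, and the code only builds the command and file contents; it does not run anything or write any files.

```python
import shlex


def nvme_connect_argv(traddr: str, subsys_nqn: str, host_nqn: str,
                      host_id: str, trsvcid: str = "4420") -> list[str]:
    """Build (but do not run) an nvme-cli command that connects over NVMe/TCP."""
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={traddr}",
        f"--trsvcid={trsvcid}",
        f"--nqn={subsys_nqn}",
        f"--hostnqn={host_nqn}",
        f"--hostid={host_id}",
    ]


def host_identity_files(host_nqn: str, host_id: str) -> dict[str, str]:
    """Map nvme-cli's default host identity files to values taken from the NBFT."""
    return {
        "/etc/nvme/hostnqn": host_nqn + "\n",
        "/etc/nvme/hostid": host_id + "\n",
    }


host_id = "0f8fad5b-d9cb-469f-a165-70867728950e"
argv = nvme_connect_argv("192.0.2.20", "nqn.2014-08.com.example:boot-subsys",
                         f"nqn.2014-08.org.nvmexpress:uuid:{host_id}", host_id)
print(shlex.join(argv))
```

Reusing the host NQN and host ID from the NBFT keeps the identity the OS presents to the target consistent with the one the firmware used during pre-OS boot.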

In addition to developing the NVMe-oF boot specification, the NVMe community has also developed a discovery mechanism through a Technical Proposal.

The NVMe-oF/TCP boot specification was developed with the following goals in mind:

- Remove pain points of iSCSI discovery and configurability.

- Simplify discovering and booting an OS from a remote namespace using the TCP transport.

- Provide the same level of auto-discovery as a Fibre Channel fabric.

- Make it as secure and reliable as the Fibre Channel protocol.

Proof of Concept Implementation

The NVMe-oF boot implementation consists of the following components, which are still at the PoC stage as of this writing [3]:

- EDK II driver: TianoCore [4] is an open-source community for UEFI platform development. This community provides EDK II, a firmware development environment based on the UEFI specifications, which is used to develop new features for UEFI firmware [5].

- Security Profile via Redfish: UEFI firmware uses a Redfish security profile to provide a secure boot option for NVMe-oF storage boot [6]. This is intended to provide security equivalent to the Fibre Channel transport in a SAN environment.

- Libnvme: The NVMe library (libnvme) needs to decode the ACPI NBFT table content from user space [7].

- NVMe-cli: The nvme-cli utility is modified to interact with the NBFT table at the command line; a new command, ‘nbft show’, was added [8].

- SPDK: The Storage Performance Development Kit (SPDK) was modified to handle the UEFI translation of the NBFT table [9].

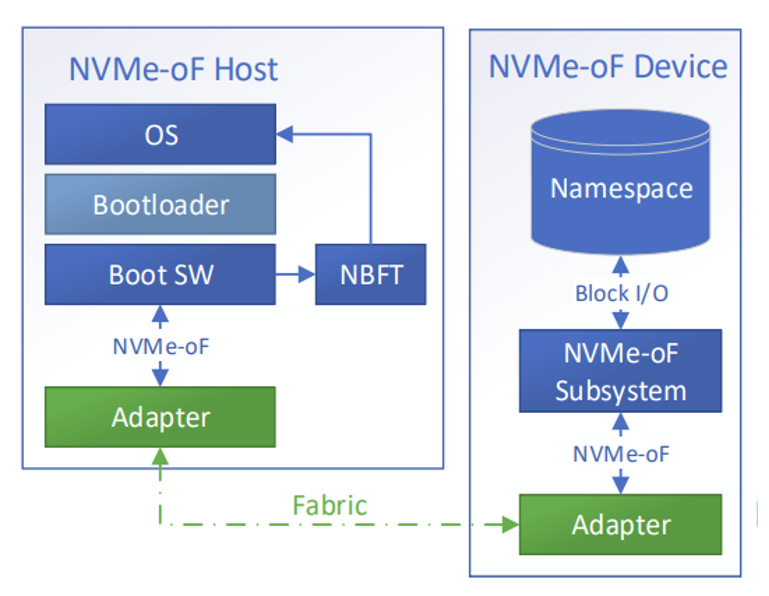

Figure 2 shows an overview of how NVMe-oF will be used to discover and boot from a remote namespace.

Conclusion

In this blog, we touched upon the history of storage area networks and described the existing SAN technologies and implementations available to users. We also described which technology has the fewest pain points when configuring remote storage for device discovery, briefly explained how device discovery is performed for each protocol, and described how the storage device is configured for OS installation. Because it self-discovers storage block devices in a SAN environment, Fibre Channel is widely used for its ease of discovery and configuration. For future storage deployments, NVMe-oF is emerging as the SAN storage protocol of choice, which makes boot from SAN a natural capability to develop for robust NVMe-oF SAN solutions.

References

- [1] NVMe Boot Specification

- [2] NVMe over TCP blog

- [3] NVMe-oF boot proof-of-concept repositories hosted at Timberland-SIG

- [4] TianoCore open-source repositories for UEFI/EDK II development

- [5] EDK II implementation of the NBFT

- [6] DMTF Redfish specification

- [7] libnvme changes for NBFT translation from user space

- [8] nvme-cli implementation of the ‘nbft show’ command

- [9] Storage Performance Development Kit (SPDK) changes for NVMe-oF

- [10] Marvell (QLogic) user guide describing boot from FC SAN configuration

- [11] Broadcom (Emulex) FC SAN configuration page

Further Reading

- RFC 3720, “Internet Small Computer Systems Interface (iSCSI)”.

- NVM Express® Base Specification, Revision 2.0c. Available from https://www.nvmexpress.org

- NVM Express® Command Set Specification, Revision 1.0b. Available from https://www.nvmexpress.org

- NVM Express® TCP Transport Specification, Revision 1.0b. Available from https://www.nvmexpress.org

- SNIA Presentation for NVMe-oF/TCP Boot

- Open Fabric Presentation for NVMe-oF Boot

- Unified Extensible Firmware Interface (UEFI) Platform Interface Forum