QEMU can emulate a wide range of block devices. This article by Dongli Zhang from the Oracle Linux kernel team shows you how to get started.

Block devices are significant components of the Linux kernel. In this article, we introduce the use of QEMU to emulate some of these block devices, including SCSI, NVMe, Virtio and NVDIMM. This makes it easier for Linux administrators and developers to study, debug and develop the Linux kernel, because the configuration and topology of block devices are much easier to customize with QEMU. In addition, rebooting a QEMU/KVM virtual machine is considerably faster than rebooting a bare-metal server.

For all examples in this article, the KVM virtual machine runs Oracle Linux 7 with guest kernel version 5.5.0, and the QEMU version is 4.2.0.

In all examples the boot disk (ol7.qcow2) is attached as the default IDE disk, while the other disks (disk.qcow2, disk1.qcow2 and disk2.qcow2) back the emulated devices being demonstrated.

The article focuses on using QEMU with block devices; it does not cover background knowledge of how block devices work.

megasas

The example below emulates a megasas HBA and attaches two SCSI LUNs to it.

qemu-system-x86_64 -machine accel=kvm -vnc :0 -smp 4 -m 4096M \
-net nic -net user,hostfwd=tcp::5022-:22 \
-hda ol7.qcow2 -serial stdio \
-device megasas,id=scsi0 \
-device scsi-hd,drive=drive0,bus=scsi0.0,channel=0,scsi-id=0,lun=0 \
-drive file=disk1.qcow2,if=none,id=drive0 \
-device scsi-hd,drive=drive1,bus=scsi0.0,channel=0,scsi-id=1,lun=0 \
-drive file=disk2.qcow2,if=none,id=drive1

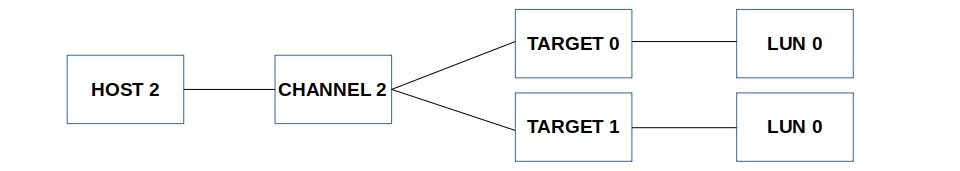

The figure above depicts the SCSI bus topology for this example. There are two SCSI targets (each with one LUN) connected to the HBA via the same SCSI channel.

Syslog output extracted from the virtual machine verifies that the SCSI bus topology scanned by the kernel matches QEMU’s configuration. The only exception is that QEMU specifies a channel id of 0, while the kernel reports a channel id of 2. The disks disk1.qcow2 and disk2.qcow2 map to ‘2:2:0:0’ and ‘2:2:1:0’ respectively.

[ 2.439170] scsi host2: Avago SAS based MegaRAID driver
[ 2.445926] scsi 2:2:0:0: Direct-Access QEMU QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 2.446098] scsi 2:2:1:0: Direct-Access QEMU QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 2.463466] sd 2:2:0:0: Attached scsi generic sg1 type 0
[ 2.467891] sd 2:2:1:0: Attached scsi generic sg2 type 0
[ 2.478002] sd 2:2:0:0: [sdb] Attached SCSI disk
[ 2.485895] sd 2:2:1:0: [sdc] Attached SCSI disk
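Inside the guest, each SCSI device also appears under /sys/class/scsi_device/ as a directory named after its H:C:T:L (host:channel:target:lun) address, which is handy for checking the mapping without searching syslog. The following is a minimal sketch for a POSIX shell; the helper name `decode_hctl` is hypothetical, and it simply splits each address read from standard input into its four labelled fields.

```shell
#!/bin/sh
# decode_hctl (hypothetical helper): read H:C:T:L addresses, one per line,
# from standard input and print each field with a label.
decode_hctl() {
    while IFS=: read -r host channel target lun; do
        # Skip blank lines.
        [ -n "$host" ] || continue
        printf 'host=%s channel=%s target=%s lun=%s\n' \
            "$host" "$channel" "$target" "$lun"
    done
}
```

For example, `ls /sys/class/scsi_device | decode_hctl` in the megasas guest should list the two disks at host 2, channel 2, targets 0 and 1, alongside entries for other disks such as the IDE boot disk.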

lsi53c895a

This section demonstrates how to emulate lsi53c895a by adding two SCSI LUNs to the HBA.

qemu-system-x86_64 -machine accel=kvm -vnc :0 -smp 4 -m 4096M \
-net nic -net user,hostfwd=tcp::5023-:22 \
-hda ol7.qcow2 -serial stdio \
-device lsi53c895a,id=scsi0 \
-device scsi-hd,drive=drive0,bus=scsi0.0,channel=0,scsi-id=0,lun=0 \
-drive file=disk1.qcow2,if=none,id=drive0 \
-device scsi-hd,drive=drive1,bus=scsi0.0,channel=0,scsi-id=1,lun=0 \
-drive file=disk2.qcow2,if=none,id=drive1

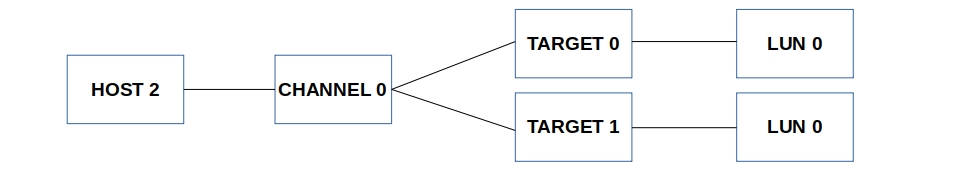

The figure above depicts the SCSI bus topology for this example. There are two SCSI targets (each with one LUN) connected to the HBA via the same SCSI channel.

As with megasas, syslog output from the virtual machine shows the SCSI bus topology scanned by the kernel matches what is configured with QEMU. Disks disk1.qcow2 and disk2.qcow2 are mapped to ‘2:0:0:0’ and ‘2:0:1:0’ respectively.

[ 2.443221] scsi host2: sym-2.2.3
[ 5.534188] scsi 2:0:0:0: Direct-Access QEMU QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 5.544931] scsi 2:0:1:0: Direct-Access QEMU QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 5.558896] sd 2:0:0:0: Attached scsi generic sg1 type 0
[ 5.559889] sd 2:0:1:0: Attached scsi generic sg2 type 0
[ 5.574487] sd 2:0:0:0: [sdb] Attached SCSI disk
[ 5.579512] sd 2:0:1:0: [sdc] Attached SCSI disk

virtio-scsi

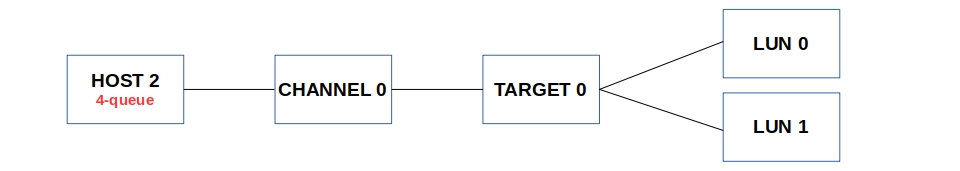

This section demonstrates the paravirtualized virtio-scsi device. virtio-scsi supports multiple request queues, so it can be used to study or debug the ‘multiqueue’ features of the SCSI and block layers. The example below creates a virtio-scsi HBA with 4 request queues and two LUNs, both belonging to the same SCSI target.

qemu-system-x86_64 -machine accel=kvm -vnc :0 -smp 4 -m 4096M \
-net nic -net user,hostfwd=tcp::5023-:22 \
-hda ol7.qcow2 -serial stdio \
-device virtio-scsi-pci,id=scsi0,num_queues=4 \
-device scsi-hd,drive=drive0,bus=scsi0.0,channel=0,scsi-id=0,lun=0 \
-drive file=disk1.qcow2,if=none,id=drive0 \
-device scsi-hd,drive=drive1,bus=scsi0.0,channel=0,scsi-id=0,lun=1 \
-drive file=disk2.qcow2,if=none,id=drive1

The figure above illustrates the SCSI bus topology for this example: a single SCSI target with two LUNs.

As in the previous examples, syslog output from the virtual machine verifies that the SCSI bus topology scanned by the kernel matches QEMU’s configuration. In this scenario disk1.qcow2 and disk2.qcow2 map to ‘2:0:0:0’ and ‘2:0:0:1’ respectively.

[ 1.212182] scsi host2: Virtio SCSI HBA
[ 1.213616] scsi 2:0:0:0: Direct-Access QEMU QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 1.213851] scsi 2:0:0:1: Direct-Access QEMU QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 1.371305] sd 2:0:0:0: [sdb] Attached SCSI disk
[ 1.372284] sd 2:0:0:1: [sdc] Attached SCSI disk
[ 2.400542] sd 2:0:0:0: Attached scsi generic sg0 type 0
[ 2.403724] sd 2:0:0:1: Attached scsi generic sg1 type 0
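The SCSI examples differ only in the HBA device and in how the disks are addressed: megasas and lsi53c895a give each disk its own target (scsi-id=N, lun=0), while the virtio-scsi example puts both disks on target 0 as LUN 0 and LUN 1. When experimenting with more disks, the repetitive -device/-drive pairs can be generated. This is a sketch only; `scsi_disk_args` and its `target`/`lun` mode names are hypothetical, not part of QEMU.

```shell
#!/bin/sh
# scsi_disk_args (hypothetical helper): print the -device/-drive option pairs
# that attach each image to the SCSI HBA whose id is $1, one word per line.
#   $2 = target : one SCSI target per image (scsi-id=N, lun=0),
#                 the addressing used in the megasas and lsi53c895a examples
#   $2 = lun    : one target with one LUN per image (scsi-id=0, lun=N),
#                 the addressing used in the virtio-scsi example
#   remaining arguments: backing image files, in order
scsi_disk_args() {
    hba=$1
    mode=$2
    shift 2
    n=0
    for img in "$@"; do
        case $mode in
            target) id=$n lun=0 ;;
            lun)    id=0 lun=$n ;;
            *)      echo "unknown mode: $mode" >&2; return 1 ;;
        esac
        printf '%s\n' -device "scsi-hd,drive=drive$n,bus=$hba.0,channel=0,scsi-id=$id,lun=$lun"
        printf '%s\n' -drive "file=$img,if=none,id=drive$n"
        n=$((n + 1))
    done
}
```

Appending `$(scsi_disk_args scsi0 lun disk1.qcow2 disk2.qcow2)` after `-device virtio-scsi-pci,id=scsi0,num_queues=4` reproduces the four disk options of the virtio-scsi command. The unquoted substitution relies on word splitting, so this assumes image paths without spaces.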

The information below, taken from /sys/block and /proc/interrupts in the virtual machine, confirms that the virtio-scsi HBA has 4 I/O queues. Each I/O queue has one virtio0-request interrupt.

# ls /sys/block/sdb/mq/
0 1 2 3
# ls /sys/block/sdc/mq/
0 1 2 3

24: 0 0 0 0 PCI-MSI 65536-edge virtio0-config
25: 0 0 0 0 PCI-MSI 65537-edge virtio0-control
26: 0 0 0 0 PCI-MSI 65538-edge virtio0-event
27: 30 0 0 0 PCI-MSI 65539-edge virtio0-request
28: 0 140 0 0 PCI-MSI 65540-edge virtio0-request
29: 0 0 34 0 PCI-MSI 65541-edge virtio0-request
30: 0 0 0 276 PCI-MSI 65542-edge virtio0-request
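The per-CPU columns in this output also show which vCPU serviced each request queue: every virtio0-request line has its non-zero count in a different CPU column. A minimal awk-based sketch that prints this mapping follows; the helper name `irq_cpu_map` is hypothetical, and it assumes the /proc/interrupts layout shown above, where the interrupt name is the last field.

```shell
#!/bin/sh
# irq_cpu_map (hypothetical helper): read /proc/interrupts-style lines on
# standard input and, for each interrupt whose name (last field) matches the
# extended regular expression $1, print the IRQ number, the name, and the
# CPUs with a non-zero count. Assumes the layout shown above: "NN:", then one
# counter per CPU, then the chip/type columns, with the name last.
irq_cpu_map() {
    awk -v pat="$1" '
        $NF ~ pat {
            irq = $1
            sub(/:$/, "", irq)
            cpus = ""
            # Counter columns run from field 2 until the first non-numeric field.
            for (i = 2; i <= NF && $i ~ /^[0-9]+$/; i++)
                if ($i + 0 > 0)
                    cpus = cpus " CPU" (i - 2)
            if (cpus == "")
                cpus = " none"
            print irq " " $NF ":" cpus
        }'
}
```

Running `irq_cpu_map 'virtio0-request' < /proc/interrupts` against the output above would print one line per request IRQ, 27 through 30, naming CPU0 through CPU3 respectively.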

virtio-blk

This section demonstrates the paravirtualized virtio-blk device. The example configures virtio-blk with 4 I/O queues, backed by disk.qcow2.

qemu-system-x86_64 -machine accel=kvm -vnc :0 -smp 4 -m 4096M \
-net nic -net user,hostfwd=tcp::5023-:22 \
-hda ol7.qcow2 -serial stdio \
-device virtio-blk-pci,drive=drive0,id=virtblk0,num-queues=4 \
-drive file=disk.qcow2,if=none,id=drive0

The information below, extracted from the virtual machine, confirms that the virtio-blk device has 4 I/O queues. Each I/O queue has one virtio0-req.X interrupt.

# ls /sys/block/vda/mq/
0 1 2 3

24: 0 0 0 0 PCI-MSI 65536-edge virtio0-config
25: 3 0 0 0 PCI-MSI 65537-edge virtio0-req.0
26: 0 31 0 0 PCI-MSI 65538-edge virtio0-req.1
27: 0 0 33 0 PCI-MSI 65539-edge virtio0-req.2
28: 0 0 0 0 PCI-MSI 65540-edge virtio0-req.3
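Listing /sys/block/vda/mq only shows how many hardware queues exist. In the blk-mq sysfs tree, each queue directory also holds a cpu_list file naming the CPUs mapped to that queue. The sketch below prints that mapping; `mq_cpu_lists` is a hypothetical helper name, and the optional second argument exists only so it can be pointed at a copy of the sysfs tree. With one queue per vCPU, as here, each queue would typically list a single CPU, but the exact mapping is decided by the guest kernel.

```shell
#!/bin/sh
# mq_cpu_lists (hypothetical helper): print each blk-mq hardware queue of a
# block device together with the CPUs mapped to it, read from the
# mq/<N>/cpu_list files in sysfs.
#   $1 = device name (e.g. vda)
#   $2 = block directory, defaults to /sys/block
# Queues are listed in glob order (0, 1, 10, 11, 2, ... beyond 10 queues).
mq_cpu_lists() {
    dev=$1
    root=${2:-/sys/block}
    for q in "$root/$dev"/mq/*; do
        [ -r "$q/cpu_list" ] || continue
        printf 'queue %s: cpus %s\n' "${q##*/}" "$(cat "$q/cpu_list")"
    done
}
```

Inside the virtio-blk guest this would be run as `mq_cpu_lists vda`.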

nvme

This section demonstrates how to emulate NVMe. The example creates an NVMe device with num_queues=8. Because the virtual machine has only 4 vCPUs, the guest uses only 4 I/O queues. The backend of the NVMe device is disk.qcow2.

qemu-system-x86_64 -machine accel=kvm -vnc :0 -smp 4 -m 4096M \
-net nic -net user,hostfwd=tcp::5023-:22 \
-hda ol7.qcow2 -serial stdio \
-device nvme,drive=nvme0,serial=deadbeaf1,num_queues=8 \
-drive file=disk.qcow2,if=none,id=nvme0

Syslog output from the virtual machine confirms that the Linux kernel detects the NVMe device.

[ 1.181405] nvme nvme0: pci function 0000:00:04.0
[ 1.212434] nvme nvme0: 4/0/0 default/read/poll queues
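The second message reports how many default, read and poll queues the driver set up. To pick it out of a longer kernel log, for example when comparing runs with different num_queues or -smp values, a minimal sed-based sketch follows. The helper name `nvme_queue_counts` is hypothetical, and it assumes the message format shown above, which may differ in other kernel versions.

```shell
#!/bin/sh
# nvme_queue_counts (hypothetical helper): read kernel log text on standard
# input and print the controller name and queue counts from every
# "nvmeN: D/R/P default/read/poll queues" message.
nvme_queue_counts() {
    sed -n 's#.*\(nvme[0-9]*\): \([0-9]*\)/\([0-9]*\)/\([0-9]*\) default/read/poll queues.*#\1 default=\2 read=\3 poll=\4#p'
}
```

For the log above, `dmesg | nvme_queue_counts` prints `nvme0 default=4 read=0 poll=0`.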

The information below, taken from the virtual machine, confirms that the NVMe device has 4 I/O queues in addition to the admin queue. Each queue has one nvme0qX interrupt, with nvme0q0 belonging to the admin queue.

24: 0 11 0 0 PCI-MSI 65536-edge nvme0q0
25: 40 0 0 0 PCI-MSI 65537-edge nvme0q1
26: 0 41 0 0 PCI-MSI 65538-edge nvme0q2
27: 0 0 0 0 PCI-MSI 65539-edge nvme0q3
28: 0 0 0 4 PCI-MSI 65540-edge nvme0q4

NVMe in QEMU also supports the ‘cmb_size_mb’ property, which configures the amount of memory (in MB) available as the Controller Memory Buffer (CMB). In addition, upstream developers are continually adding features to QEMU’s NVMe emulation, such as multiple namespaces.
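Because cmb_size_mb is just another property of the nvme device, enabling a CMB only changes the -device option used earlier. The sketch below builds that option; `nvme_device_opt` is a hypothetical helper name, and the 64 MB in the usage line is an arbitrary value chosen for illustration, not a recommendation.

```shell
#!/bin/sh
# nvme_device_opt (hypothetical helper): print a "-device nvme,..." option
# for QEMU, one word per line so it can be spliced into a command line.
#   $1 = drive id, $2 = serial, $3 = num_queues,
#   $4 = optional Controller Memory Buffer size in MB (cmb_size_mb)
nvme_device_opt() {
    opt="nvme,drive=$1,serial=$2,num_queues=$3"
    if [ -n "$4" ]; then
        opt="$opt,cmb_size_mb=$4"
    fi
    printf '%s\n' -device "$opt"
}
```

For example, `$(nvme_device_opt nvme0 deadbeaf1 8 64)` can replace the `-device nvme,...` option in the NVMe command, keeping `-drive file=disk.qcow2,if=none,id=nvme0` as is.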

nvdimm

This section briefly demonstrates how to emulate NVDIMM by adding one 6GB NVDIMM to the virtual machine.

qemu-system-x86_64 -machine pc,nvdimm,accel=kvm -vnc :0 -smp 4 \
-net nic -net user,hostfwd=tcp::5023-:22 \
-hda ol7.qcow2 -serial stdio \
-m 2G,maxmem=10G,slots=4 \
-object memory-backend-file,share,id=md1,mem-path=nvdimm.img,size=6G \
-device nvdimm,memdev=md1,id=nvdimm1
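The -m 2G,maxmem=10G,slots=4 option has to leave room for the NVDIMM: 2G of RAM under a 10G maxmem leaves 8G for memory devices, enough for the 6G NVDIMM. The arithmetic can be checked before launching QEMU. This is a sketch under the assumption that, as with hotpluggable DIMMs, each NVDIMM takes one slot and its size counts against maxmem; `nvdimm_fits` is a hypothetical helper name and works in whole GiB only.

```shell
#!/bin/sh
# nvdimm_fits (hypothetical helper): check, in GiB, that initial RAM plus the
# total NVDIMM size fits under maxmem and that there are enough slots.
# Assumption: each NVDIMM uses one slot and counts against maxmem, like a
# hotpluggable DIMM.
#   $1 = RAM (-m size), $2 = maxmem, $3 = slots, remaining args = NVDIMM sizes
nvdimm_fits() {
    ram=$1 maxmem=$2 slots=$3
    shift 3
    total=0
    for size in "$@"; do
        total=$((total + size))
    done
    if [ $# -gt "$slots" ]; then
        echo "too many NVDIMMs: $# > slots=$slots" >&2
        return 1
    fi
    if [ $((ram + total)) -gt "$maxmem" ]; then
        echo "RAM ${ram}G + NVDIMM ${total}G exceeds maxmem=${maxmem}G" >&2
        return 1
    fi
    echo "ok: $((maxmem - ram - total))G unused below maxmem"
}
```

For this example, `nvdimm_fits 2 10 4 6` prints `ok: 2G unused below maxmem`.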

The following information extracted from the virtual machine demonstrates that the NVDIMM device is exported as block device /dev/pmem0.

# dmesg | grep NVDIMM
[ 0.020285] ACPI: SSDT 0x000000007FFDFD85 0002CD (v01 BOCHS NVDIMM 00000001 BXPC 00000001)

# ndctl list
[
  {
    "dev":"namespace0.0",
    "mode":"raw",
    "size":6442450944,
    "sector_size":512,
    "blockdev":"pmem0"
  }
]

# lsblk | grep pmem
pmem0 259:0 0 6G 0 disk

NVDIMM features and configuration are quite complex, and support for them is continually being added to QEMU. For more on using NVDIMMs with QEMU, please refer to the documentation at https://docs.pmem.io.

Power Management

This section introduces how QEMU can be used to emulate power management, e.g. freeze/resume. While this is not limited to block devices, we demonstrate it using an NVMe device. This helps to understand how block device drivers work with power management.

The first step is to boot a virtual machine with an NVMe device. The only difference with the prior NVMe example is to use “-monitor stdio” instead of “-serial stdio” to facilitate interaction with QEMU via a shell.

qemu-system-x86_64 -machine accel=kvm -vnc :0 -smp 4 -m 4096M \
-net nic -net user,hostfwd=tcp::5023-:22 \
-hda ol7.qcow2 -monitor stdio \
-device nvme,drive=nvme0,serial=deadbeaf1,num_queues=8 \
-drive file=disk.qcow2,if=none,id=nvme0

(qemu)

To suspend the guest operating system, run the following inside the virtual machine:

# echo freeze > /sys/power/state
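The sleep states the guest kernel accepts are listed in /sys/power/state itself (for example "freeze mem disk"), so a script that drives repeated freeze/resume cycles can check for freeze before writing it. A minimal sketch follows; `supports_sleep_state` is a hypothetical helper name, and its optional second argument exists only so the check can be tried against a file other than the real sysfs entry.

```shell
#!/bin/sh
# supports_sleep_state (hypothetical helper): succeed if the sleep state $1
# (e.g. freeze) is listed in the kernel's sleep-state file, which is
# /sys/power/state unless another path is passed as $2.
supports_sleep_state() {
    state_file=${2:-/sys/power/state}
    [ -r "$state_file" ] || return 1
    # The file holds a space-separated list such as "freeze mem disk".
    tr ' ' '\n' < "$state_file" | grep -qx -- "$1"
}
```

Inside the guest, `supports_sleep_state freeze && echo freeze > /sys/power/state` only attempts the freeze when the kernel advertises it.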

This freezes the virtual machine. To resume it, run the following from the QEMU monitor:

(qemu) system_powerdown

The following extract from the virtual machine syslog demonstrates the behaviour of the Linux kernel during the freeze/resume cycle.

[ 26.945439] PM: suspend entry (s2idle)
[ 26.951256] Filesystems sync: 0.005 seconds
[ 26.951922] Freezing user space processes ... (elapsed 0.000 seconds) done.
[ 26.953489] OOM killer disabled.
[ 26.953942] Freezing remaining freezable tasks ... (elapsed 0.000 seconds) done.
[ 26.955631] printk: Suspending console(s) (use no_console_suspend to debug)
[ 26.962704] sd 0:0:0:0: [sda] Synchronizing SCSI cache
[ 26.962972] sd 0:0:0:0: [sda] Stopping disk
[ 54.674206] sd 0:0:0:0: [sda] Starting disk
[ 54.678859] nvme nvme0: 4/0/0 default/read/poll queues
[ 54.707283] OOM killer enabled.
[ 54.707710] Restarting tasks ... done.
[ 54.708596] PM: suspend exit
[ 54.834191] ata2.01: NODEV after polling detection
[ 54.834379] ata1.01: NODEV after polling detection
[ 56.770115] e1000: ens3 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
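The timestamps in this log also give the interval between suspend entry (26.945439 s) and suspend exit (54.708596 s), roughly 27.8 seconds. When reproducing power management bugs over many cycles, that figure can be extracted automatically. A short awk sketch follows; `pm_suspend_duration` is a hypothetical helper name, and it assumes the dmesg timestamp format and the "PM: suspend entry/exit" messages shown above.

```shell
#!/bin/sh
# pm_suspend_duration (hypothetical helper): read kernel log lines on standard
# input and, for each "PM: suspend entry" message followed by a
# "PM: suspend exit" message, print the seconds between their timestamps.
# Assumes dmesg-style "[ seconds.micros]" timestamps at the start of each line.
pm_suspend_duration() {
    awk '
        # Return the number between the first "[" and the first "]".
        function ts(line,    lb, rb) {
            lb = index(line, "[")
            rb = index(line, "]")
            return substr(line, lb + 1, rb - lb - 1) + 0
        }
        /PM: suspend entry/ { start = ts($0); pending = 1 }
        /PM: suspend exit/ && pending {
            printf "%.6f\n", ts($0) - start
            pending = 0
        }'
}
```

For the log above, `dmesg | pm_suspend_duration` prints `27.763157`.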

This method can be used to reproduce and analyze bugs related to NVMe and power management, such as the one reported at http://lists.infradead.org/pipermail/linux-nvme/2019-April/023237.html

This highlights that QEMU is not just an emulator: it can also be used to study, debug and develop the Linux kernel.

Summary

In this article we discussed how to emulate numerous different block devices with QEMU, which makes it easy to customize their configuration, such as the topology of the SCSI bus or the number of NVMe queues.

Block devices support many features, and the best way to fully understand how to configure them in QEMU is to study the QEMU source code. Doing so also helps in understanding the block device specifications themselves. This article is best used primarily as a cheat sheet.